PhD Oral Presentation

An Investigation on the use of Analytical Tools

Victor Sanches Portella

July 30, 2024

Privacy, Experts, and Martingales

Analytical Methods in CS

(and Back!)

Martingales

Privacy

Experts

Stochastic Calc.

& Online Learning

Vector Calculus and Probability Theory

Stochastic Calculus

Privacy

Differential Privacy

Output 1

Output 2

Not too far apart

What does it mean for an algorithm \(\mathcal{M}\) to be private?

Differential Privacy: \(\mathcal{M}\) does not rely heavily on any individual

\((\varepsilon, \delta)\)-Diff. Privacy

\(\varepsilon \equiv \) "Privacy leakage", small constant

\(\delta \equiv \) "Chance of failure", usually \(O(1/\text{\#samples})\)
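For reference, the standard definition behind these two parameters can be written as follows. The notation is assumed here rather than taken from the slides: \(X\) and \(X'\) are any two datasets that differ in one individual's entry, and \(S\) is any set of outputs.

\[
\Pr[\mathcal{M}(X) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(X') \in S] + \delta .
\]

With \(\delta = 0\) the output distributions on neighboring datasets differ by a factor of at most \(e^{\varepsilon}\) on every event, which is one way to read "not too far apart".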

Covariance Estimation

Unknown Covariance Matrix

\((\varepsilon, \delta)\)-differentially private \(\mathcal{M}\) to estimate \(\Sigma\)

on \(\mathbb{R}^d\)

Goal:

Required even without privacy

Required even for \(d = 1\)

Is this tight?

Exists \((\varepsilon, \delta)\)-DP \(\mathcal{M}\) such that

samples

Known algorithmic results

with

Our Results - New Lower Bounds

Theorem

For any \((\varepsilon, \delta)\)-DP algorithm \(\mathcal{M}\) such that

and

we have

Our results generalize both of them

Nearly highest reasonable value

[Kamath et al. 22]

Previous lower bounds required

[Narayanan 23]

OR

Lower Bounds via Fingerprinting

A measure of the correlation between \(z\) and \(\mathcal{M}(X)\)

Correlation statistic

If \(z \sim \mathcal{N}(0, \Sigma)\) indep. of \(X\)

small

If \(\mathcal{M}\) is accurate

large

Fingerprinting Lemma

Approx. equal by privacy

Approx. equal by privacy

Score Statistic

Score function

Score Attack Statistic

To get a fingerprinting lemma, we need to randomize \(\Sigma\) so that

is large

Previous work

\(\Sigma\) with "small radius" \(\implies\) Weak FP Lemma

\(\Sigma\) with "large radius" \(\implies\) hard to bound \(\mathbb{E}[|\mathcal{A}(z, \mathcal{M}(X))|]\)
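As a sketch of what the score looks like in this setting, assume \(\mathcal{N}(0, \Sigma)\) is parametrized by its covariance matrix, with entries treated as free variables. The symbols \(p\) and \(S_\Sigma\) are introduced here for illustration. The score function is the gradient of the log-density with respect to \(\Sigma\):

\[
S_\Sigma(z) = \nabla_\Sigma \log p(z \mid \Sigma) = \tfrac{1}{2}\left(\Sigma^{-1} z z^{\top} \Sigma^{-1} - \Sigma^{-1}\right),
\qquad
\mathbb{E}_{z \sim \mathcal{N}(0, \Sigma)}\left[S_\Sigma(z)\right] = 0 .
\]

A score attack statistic then pairs the estimation error with the score, for instance \(\mathcal{A}(z, \mathcal{M}(X)) = \langle \mathcal{M}(X) - \Sigma, S_\Sigma(z) \rangle\). The exact normalization used in the talk may differ. Because the score has mean zero, this statistic has mean zero whenever \(z\) is independent of \(X\).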

New Fingerprinting Lemma

Fingerprinting Lemma

Need to Lower Bound

\(\Sigma \sim\) Wishart leads to elegant analysis

Stein-Haff Identity

"Move the derivative" from \(g\) to \(p\) with integration by parts

Stokes' Theorem

FP Lemma

Upper Bound
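The "move the derivative" step can be illustrated with the one-dimensional Stein identity, \(\mathbb{E}[z\, g(z)] = \sigma^2\, \mathbb{E}[g'(z)]\) for \(z \sim \mathcal{N}(0, \sigma^2)\). This is a simpler relative of the Stein-Haff identity for Wishart matrices, not the identity used in the proof. The sketch below is a Monte Carlo check under the assumption \(g = \sin\). The function name and parameters are hypothetical.

```python
import math
import random


def stein_identity_check(sigma: float = 1.0, n_samples: int = 200_000, seed: int = 0):
    """Monte Carlo estimates of both sides of the 1-D Stein identity
    E[z g(z)] = sigma^2 E[g'(z)] for z ~ N(0, sigma^2), with g = sin."""
    rng = random.Random(seed)
    lhs_sum = 0.0
    rhs_sum = 0.0
    for _ in range(n_samples):
        z = rng.gauss(0.0, sigma)
        lhs_sum += z * math.sin(z)           # z * g(z)
        rhs_sum += sigma ** 2 * math.cos(z)  # sigma^2 * g'(z)
    return lhs_sum / n_samples, rhs_sum / n_samples


lhs, rhs = stein_identity_check()
exact = math.exp(-0.5)  # closed form of E[cos z] for z ~ N(0, 1)
print(f"E[z g(z)] ~ {lhs:.4f}, sigma^2 E[g'(z)] ~ {rhs:.4f}, exact {exact:.4f}")
```

Both estimates should agree up to sampling error, since integration by parts against the Gaussian density transfers the derivative from \(g\) to \(p\).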

Experts

Player

Adversary

\(n\) Experts

Probabilities: 0.5, 0.1, 0.3, 0.1

Gains: 1, -1, 0.5, -0.3

Player's gain:

Prediction with Experts' Advice

Gain of Best Expert

Player's Gain

Quantile Regret

Best Expert

Best Experts

\(\varepsilon\)-fraction

Multiplicative Weights Update:

Needs knowledge of \(\varepsilon\)

We design an algorithm with \(\sqrt{T \ln(1/\varepsilon)}\) quantile regret for all \(\varepsilon\) and the best known leading constant

Gain of top \(\varepsilon n\) expert

\(\varepsilon\)-Quantile Regret
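A minimal sketch of Multiplicative Weights Update with gains, together with the two regret notions above. It assumes gains in \([-1, 1]\) and the textbook fixed-time step size \(\eta = \sqrt{2 \ln(n) / T}\). The function names are hypothetical, and this is the classical algorithm, not the new algorithm from the talk.

```python
import math
import random


def hedge(gains, eta):
    """Run Multiplicative Weights Update on a list of gain vectors.

    gains[t][i] is the gain of expert i at round t, assumed in [-1, 1].
    Returns (player's total gain, list of each expert's total gain).
    """
    n = len(gains[0])
    totals = [0.0] * n  # cumulative gain of each expert so far
    player = 0.0
    for g in gains:
        top = max(totals)  # subtract the max for numerical stability
        weights = [math.exp(eta * (G - top)) for G in totals]
        norm = sum(weights)
        probs = [w / norm for w in weights]
        player += sum(p * gi for p, gi in zip(probs, g))
        totals = [G + gi for G, gi in zip(totals, g)]
    return player, totals


def quantile_regret(player, totals, eps):
    """Gain of the ceil(eps * n)-th best expert minus the player's gain."""
    k = max(1, math.ceil(eps * len(totals)))
    return sorted(totals, reverse=True)[k - 1] - player


rng = random.Random(0)
T, n = 1000, 8
gains = [[rng.uniform(-1.0, 1.0) for _ in range(n)] for _ in range(T)]
eta = math.sqrt(2.0 * math.log(n) / T)  # fixed-time tuning, uses T and n
player, totals = hedge(gains, eta)
regret = quantile_regret(player, totals, 1.0 / n)  # best single expert
q_regret = quantile_regret(player, totals, 0.25)   # top 25% of experts
print(regret, q_regret)
```

With this step size the regret against the best expert is at most \(\sqrt{2 T \ln n}\) for any gain sequence in \([-1, 1]\). Targeting the quantile bound with plain MWU would mean tuning \(\eta\) with \(\ln(1/\varepsilon)\) in place of \(\ln n\), which is why it needs to know \(\varepsilon\) in advance.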

Moving to Continuous Time

Analysis often becomes clean

Sandbox for design of optimization algorithms

Key Question: How to model non-smooth (online) optimization in continuous time?

Why go to continuous time?

Discrete Time

Useful perspective: \(G(i)\) is a realization of a random walk

Continuous Time

\(G_t(i)\) is a realization of a Brownian Motion

Worst-case = Probability 1
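The passage from a random walk to Brownian motion can be sketched numerically. The code below assumes steps of size \(\pm\sqrt{dt}\), so that the walk at time \(T\) has variance \(T\), matching a Brownian motion. The function name and parameter values are hypothetical.

```python
import math
import random


def scaled_walk_endpoints(T=1.0, steps=400, paths=2000, seed=0):
    """Endpoints at time T of random walks with +-sqrt(dt) increments."""
    rng = random.Random(seed)
    dt = T / steps
    step = math.sqrt(dt)
    ends = []
    for _ in range(paths):
        pos = 0.0
        for _ in range(steps):
            pos += step if rng.random() < 0.5 else -step
        ends.append(pos)
    return ends


ends = scaled_walk_endpoints()
mean = sum(ends) / len(ends)
var = sum((e - mean) ** 2 for e in ends) / len(ends)
print(f"mean ~ {mean:.3f}, variance ~ {var:.3f} (Brownian motion at T=1: 0 and 1)")
```

As the number of steps grows, the endpoint distribution approaches \(\mathcal{N}(0, T)\), which is the sense in which cumulative gains \(G(i)\) are replaced by \(G_t(i)\) in continuous time.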

Improved Quantile Regret

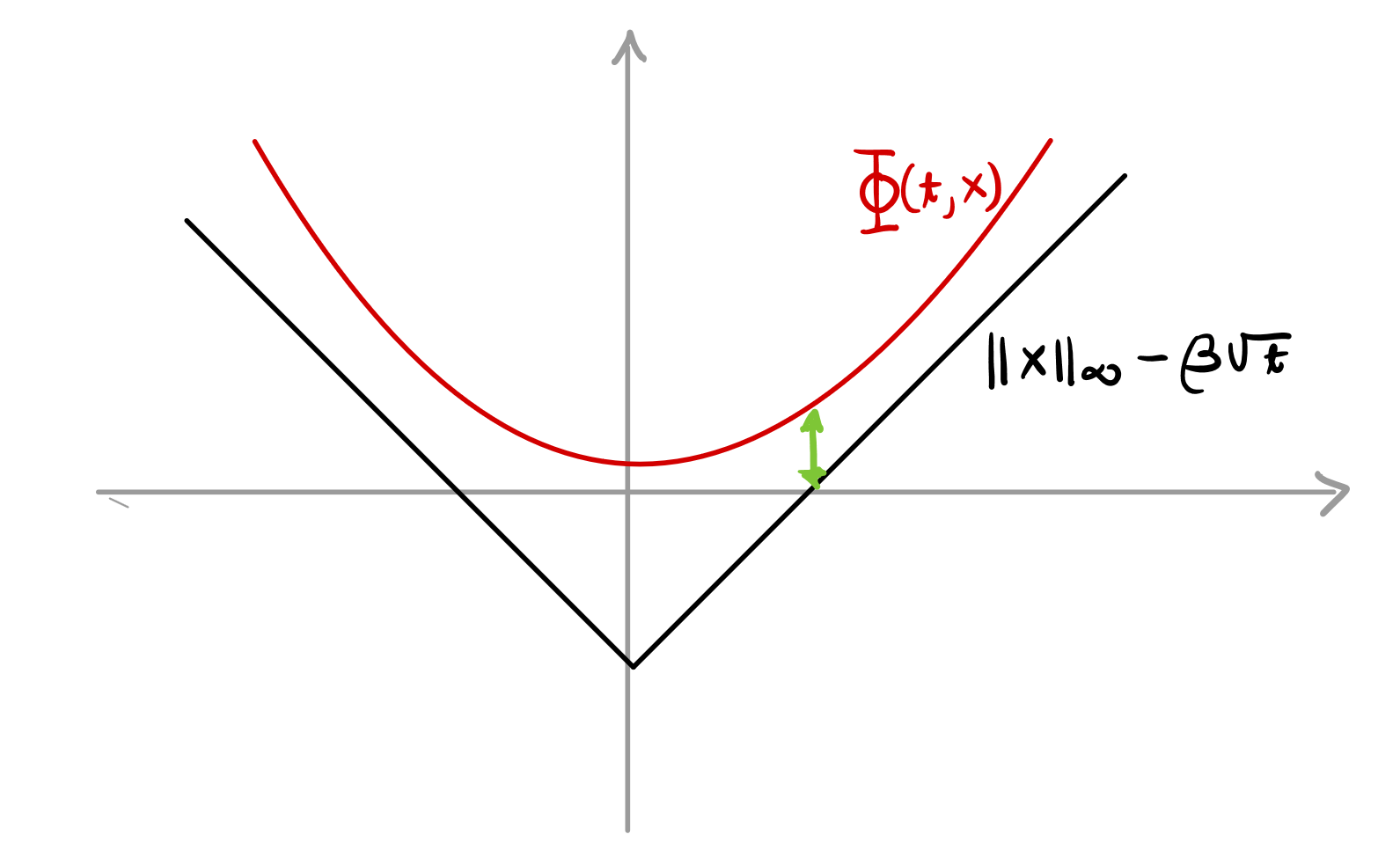

Potential based players

Stochastic Calculus suggests \(\Phi\) that satisfy the Backwards Heat Equation

For all \(\varepsilon\)

Using this potential*, we get

Best leading constant

Discrete time analysis is IDENTICAL to continuous time analysis

*(with a slightly bigger constant in the BHE)
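To make the Backwards Heat Equation concrete, assume the normalization \(\partial_t \Phi + \tfrac{1}{2} \partial_{xx} \Phi = 0\) in one dimension. The sketch below checks two classical solutions by finite differences. Both potentials are chosen for illustration and are not claimed to be the potential from the talk. All function names are hypothetical.

```python
import math


def exp_potential(t, x, eta=0.5):
    """Exponential potential exp(eta * x - eta^2 * t / 2)."""
    return math.exp(eta * x - 0.5 * eta ** 2 * t)


def normal_potential(t, x):
    """Potential exp(x^2 / (2t)) / sqrt(t), defined for t > 0."""
    return math.exp(x * x / (2.0 * t)) / math.sqrt(t)


def bhe_residual(phi, t, x, h=1e-3):
    """Finite-difference estimate of d/dt phi + (1/2) d^2/dx^2 phi at (t, x)."""
    dt = (phi(t + h, x) - phi(t - h, x)) / (2.0 * h)
    dxx = (phi(t, x + h) - 2.0 * phi(t, x) + phi(t, x - h)) / (h * h)
    return dt + 0.5 * dxx


for phi in (exp_potential, normal_potential):
    print(phi.__name__, bhe_residual(phi, t=1.0, x=0.3))
```

Both residuals should be zero up to discretization error. A function such as \(\Phi(t, x) = x^2\) gives residual 1, so it does not satisfy the equation.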

Anytime Experts

Question:

Are the minimax regrets with and without knowledge of \(T\) different?

fixed-time

anytime

anytime

fixed-time

Theorem: In Continuous Time, both are equal if Brownian Motions are independent.

MinmaxRegret

Can we get better lower bounds?

=

?

Martingales

Motivation: Expected Regret

Player's Total Gain

Vector of the Experts' Gains

High expected regret \(\implies\) anytime lower bound

Max expected anytime regret without independent experts?

Anytime Regret \(\equiv\) \(\tau\) is a stopping time

How big can

be?

Norm of High-Dimensional Martingales

For a martingale \((G_t)_{t \geq 0}\), find upper and lower bounds to

sup

is a stopping time

No assumptions on the dependency between coordinates

Theorem

If \(G_t(i)\) is a Brownian motion for all \(i = 1, \dotsc, n\), then

Evidence that anytime lower bounds for continuous experts need new techniques

Other Results

For a martingale \((G_t)_{t \geq 0}\), find upper and lower bounds to

sup

is a stopping time

Similar upper bounds when \(G_t(i)\) has smooth quadratic variation

If \(G_t(i)\) is a discrete martingale with increments in \([-1,1]\), we have

Beyond Brownian Motion

Discrete Martingales

Discrete Ito's Lemma

Main Ideas of the Analysis

Goal:

non-smooth \(\implies\) hard to show it is a supermartingale

Idea:

Design a smooth function \(\Phi\) such that

\((\Phi(t, G_t))_{t \geq 0}\)

is a supermartingale

and

Backwards Heat Eq.

Tune Constants

Summary

Martingales

Tight bounds on expected norm for a large family of martingales. Nearly tight bounds and implications for the experts problem.

Experts

Continuous-time model for the experts' problem and new algorithms. Sandbox for online learning algorithms.

Privacy

New lower bounds for private covariance estimation. Techniques suggest a proof strategy for lower bounds in DP.

Fingerprinting and Stein's Identity

Score function

If \(z \sim \mathcal{N}(0, \Sigma)\) indep. of \(X\)

For \(x_1, \dotsc, x_n\) from \(X\)

\(= \Theta(d^2)\) if \(\mathbb{E}[\mathcal{M}(X)] = \Sigma\)

Modeling Adversarial Costs in Continuous Time

Total gains of expert \(i\):

Useful perspective: \(G(i)\) is a realization of a random walk

realization of a Brownian Motion

Probability 1 = Worst-case

Discrete Time

Continuous Time

Score Statistic

Main Property

Score function

Score Attack Statistic

Previous work:

Want random \(\Sigma\) such that this is large in expectation

Covariance Estimation

Known bounds on sample complexity

There is an \((\varepsilon, \delta)\)-DP mechanism \(\mathcal{M}\) such that

Unknown Covariance Matrix

on \(\mathbb{R}^d\)

for some

Required even without privacy

Required even for \(d = 1\)

Is this tight?

Lower Bounds via Fingerprinting

Correlation statistic

If \(z \sim \mathcal{N}(0, \Sigma)\) indep. of \(X\)

small

If \(\mathcal{M}\) is accurate

large

Approx. equal by privacy

Approx. equal by privacy

Fingerprinting Lemma

for covariance estimation

\(\mathcal{A}(z, \mathcal{M}(X))\)

leads to limited lower bounds

Online Learning and Experts

Prediction with Experts' Advice

Player

Adversary

\(n\) Experts

Probabilities: 0.5, 0.1, 0.3, 0.1

Costs: 1, -1, 0.5, -0.3

Player's loss:

Adversary knows the strategy of the player

Performance Measure - Regret

Loss of Best Expert

Player's Loss

Optimal!

For random \(\pm 1\) costs

Multiplicative Weights Update:

(Hedge)

Why Learning with Experts?

Boosting in ML

Understanding sequential prediction

Universal Optimization

Solving SDPs, TCS, Learning theory...

Motivating Problem - Fixed Time vs Anytime

MWU regret

when \(T\) is known

when \(T\) is not known

anytime

fixed-time

Does knowing \(T\) give the player an advantage?

[Harvey, Liaw, Perkins, Randhawa '23]

Random costs are (probably) too easy to show a separation

[VSP, Liaw, Harvey '22]

Anytime > Fixed time for 2 experts + optimal algorithm

+ new algorithms for quantile regret!

With stochastic calculus:

Continuous Experts' Problem

Modeling Adversarial Costs in Continuous Time

Analysis often becomes clean

Sandbox for design of optimization algorithms

Gradient flow is useful for smooth optimization

How to model non-smooth (adversarial) optimization in continuous time?

Why go to continuous time?

Modeling Adversarial Costs in Continuous Time

Total loss of expert \(i\):

Useful perspective: \(L(i)\) is a realization of a random walk

realization of a Brownian Motion

Probability 1 = Worst-case

Discrete Time

Continuous Time

The Continuous Time Model

Discrete time

Continuous time

Cumulative loss

Player's cumulative loss

Player's loss per round

[Freund '09]

MWU in Continuous Time

Potential based players

Regret bounds

when \(T\) is known

when \(T\) is not known

anytime

fixed-time

MWU!

Same as discrete time!

Idea: Use stochastic calculus to guide the algorithm design

with prob. 1

A Peek Into the Analysis

Ito's Lemma

(Fundamental Theorem of Stochastic Calculus)

\(B_t\) is very non-smooth \(\implies\) second-order terms matter

\(n = 1\)

Ito's Lemma

Potential does not change too much

Would be great
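For reference, a standard statement of Ito's Lemma in the one-expert case (\(n = 1\)), assuming \(\Phi(t, x)\) is continuously differentiable in \(t\) and twice continuously differentiable in \(x\):

\[
d\Phi(t, B_t) = \partial_x \Phi(t, B_t)\, dB_t + \Big(\partial_t \Phi(t, B_t) + \tfrac{1}{2}\, \partial_{xx} \Phi(t, B_t)\Big)\, dt .
\]

The \(\tfrac{1}{2} \partial_{xx} \Phi\) term is the second-order contribution that survives because \(B_t\) is non-smooth. When \(\Phi\) satisfies the Backwards Heat Equation \(\partial_t \Phi + \tfrac{1}{2} \partial_{xx} \Phi = 0\), the \(dt\) term vanishes.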

A Peek Into the Analysis

Potential based players

Matches fixed-time!

Stochastic calculus suggests potentials that satisfy the Backwards Heat Equation

This new anytime algorithm has good regret!

Does not translate easily to discrete time

need to add correlation between experts

Takeaway: independent experts cannot give better lower bounds (in continuous time)

Beyond i.i.d. Experts

Discrete time analysis is IDENTICAL to continuous time analysis

Improved anytime algorithms with quantile regret bounds

Discrete Ito's Lemma

Online Linear Optimization

Based on work by Zhang, Yang, Cutkosky, Paschalidis

Online Linear Optimization

Player

Adversary

Unconstrained

Linear functions

Player's loss:

Loss of Fixed \(u\)

Player's Loss
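In symbols, with notation assumed here for illustration (\(x_t\) for the player's point and \(g_t\) for the adversary's linear function at round \(t\)), the regret against a fixed comparator \(u\) is the player's loss minus the loss of \(u\):

\[
\mathrm{Regret}_T(u) = \sum_{t=1}^{T} \langle g_t, x_t \rangle - \sum_{t=1}^{T} \langle g_t, u \rangle .
\]

Since the domain is unconstrained, no bound can hold uniformly over all \(u\), so guarantees are stated as functions of \(\lVert u \rVert\).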

Parameter-Free Online Linear Optimization

Goal:

Parameter-Free = No knowledge of \(\lVert u \rVert\)

Even better:

"Adaptive" = Adapts to gradient norm

A One Dimensional Continuous Time Model

Discrete Regret

Continuous Regret

Theorem:

If \(\Phi\) satisfies the BHE and

Going to higher dim:

Continuous time analogue

of

Learn direction and scale separately

Use refined discretization

Discretizing:

Conclusion and Open Questions

Continuous Time Model for Experts and OLO

Thanks!

Main References:

[VSP, Liaw, Harvey '22] Continuous prediction with experts' advice.

[Zhang, Yang, Cutkosky, Paschalidis '24] Improving adaptive online learning using refined discretization.

[Freund '09] A method for hedging in continuous time.

[Harvey, Liaw, Perkins, Randhawa '23] Optimal anytime regret with two experts.

How to discretize the algorithm for indep. experts?

?

Improve LB for anytime experts?

?

High-dim continuous time OLO?

?
