explaining for the best intervention

yuan meng

april 25, 2022

exit talk 🐻


how do we learn about the world?

learning by observing

"a painting of 20 athletes playing a brand new sport"

dall·e 2 (openai, 2022)

palm (google ai, 2022)

trained on broad data at scale + adaptable to a wide range of downstream tasks

how else do people learn?

[figure: two candidate causal structures over anti-depressants, mood, and thoughts]

🤔

learning by experimenting

intervene on mood (e.g., exercise) without anti-depressants 👉 are thoughts affected?

- no 👉 mood doesn't affect thoughts

- yes 👉 mood affects thoughts
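A toy simulation makes the logic of this slide concrete. It is a minimal sketch under loudly stated assumptions: all variables are binary, every causal link copies its cause with probability 0.9, and the two hypothetical structures are a chain (anti-depressants 👉 mood 👉 thoughts) and a common cause (anti-depressants 👉 mood, anti-depressants 👉 thoughts). Passively observing mood and thoughts shows a correlation under both structures; setting mood directly with no anti-depressants changes thoughts only under the chain.

```python
import random

# Toy binary model; the structures and the 0.5 / 0.9 probabilities are hypothetical.
STRUCTURES = ("chain", "common_cause")


def sample(structure, rng, do_mood=None):
    """Draw one (mood, thoughts) pair, either observed or under an intervention on mood."""
    if do_mood is None:
        drug = rng.random() < 0.5                        # observe: drug taken at random
        mood = drug if rng.random() < 0.9 else not drug  # drug -> mood link
    else:
        drug, mood = False, do_mood                      # intervene on mood, no anti-depressants
    parent = mood if structure == "chain" else drug      # what actually drives thoughts
    thoughts = parent if rng.random() < 0.9 else not parent
    return mood, thoughts


def effect_of_mood(structure, intervene, n=20_000, seed=0):
    """P(thoughts | mood=True) - P(thoughts | mood=False), observed or under do(mood)."""
    rng = random.Random(seed)
    rate = {}
    for mood_value in (True, False):
        if intervene:
            draws = [sample(structure, rng, do_mood=mood_value) for _ in range(n)]
        else:
            # keep only the observed draws where mood happened to take this value
            draws = [d for d in (sample(structure, rng) for _ in range(2 * n))
                     if d[0] == mood_value]
        rate[mood_value] = sum(thoughts for _, thoughts in draws) / len(draws)
    return rate[True] - rate[False]


for s in STRUCTURES:
    print(s, round(effect_of_mood(s, intervene=False), 2),
          round(effect_of_mood(s, intervene=True), 2))
```

Roughly, the observed difference is large under both structures, while the interventional difference stays near zero for the common cause, so only the intervention tells them apart.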

data isn't always sufficient or clear...

learning by thinking

image credit: unsplash @maxsaeling

two well-known facts

explanation

the night sky is not filled with starlight; there's darkness in between

if light has travelled infinitely long, it will have filled the night sky

why?🤨

light has only travelled for a finite amount of time 👉 the universe may have a beginning

don't always need new data!

designing good experiments is challenging...

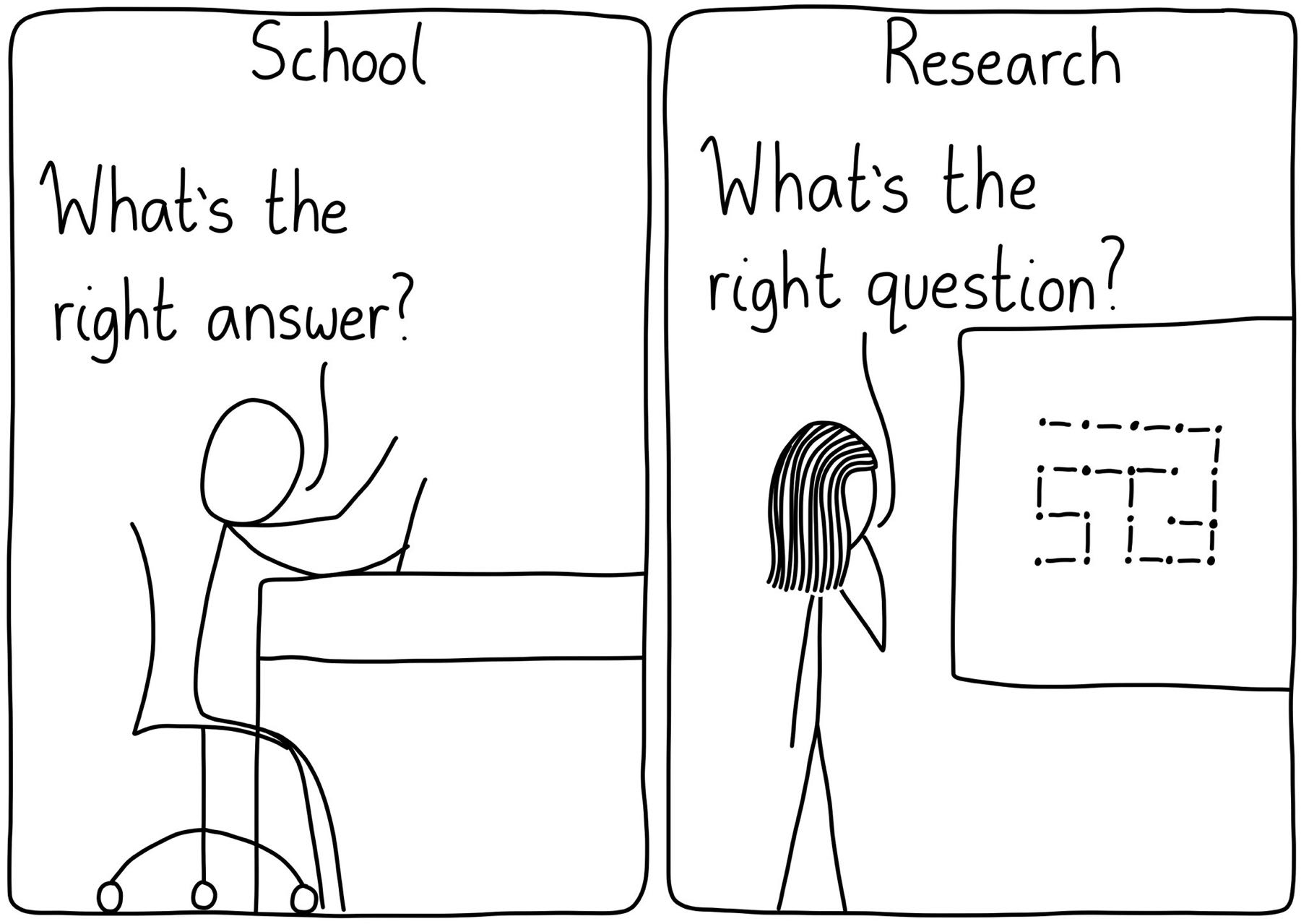

can thinking lead to better experimentation?

intervention

explaining (cause: why, mechanism: how...)

can explaining lead to better intervention?

where the quest began...

evidence for the self-explanation effect

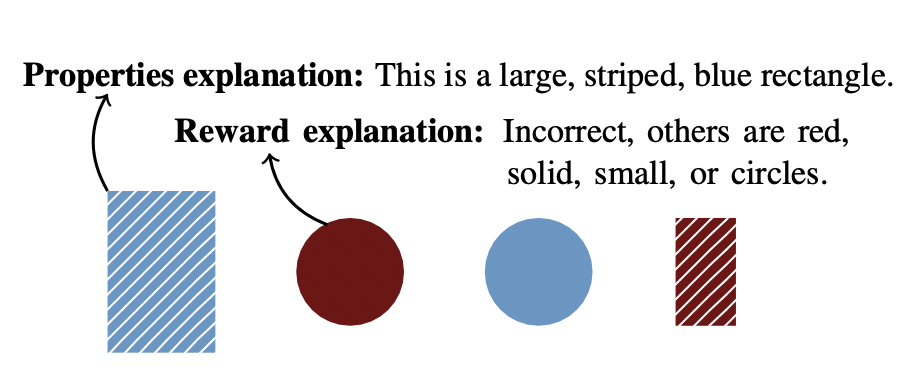

explaining for the best inference

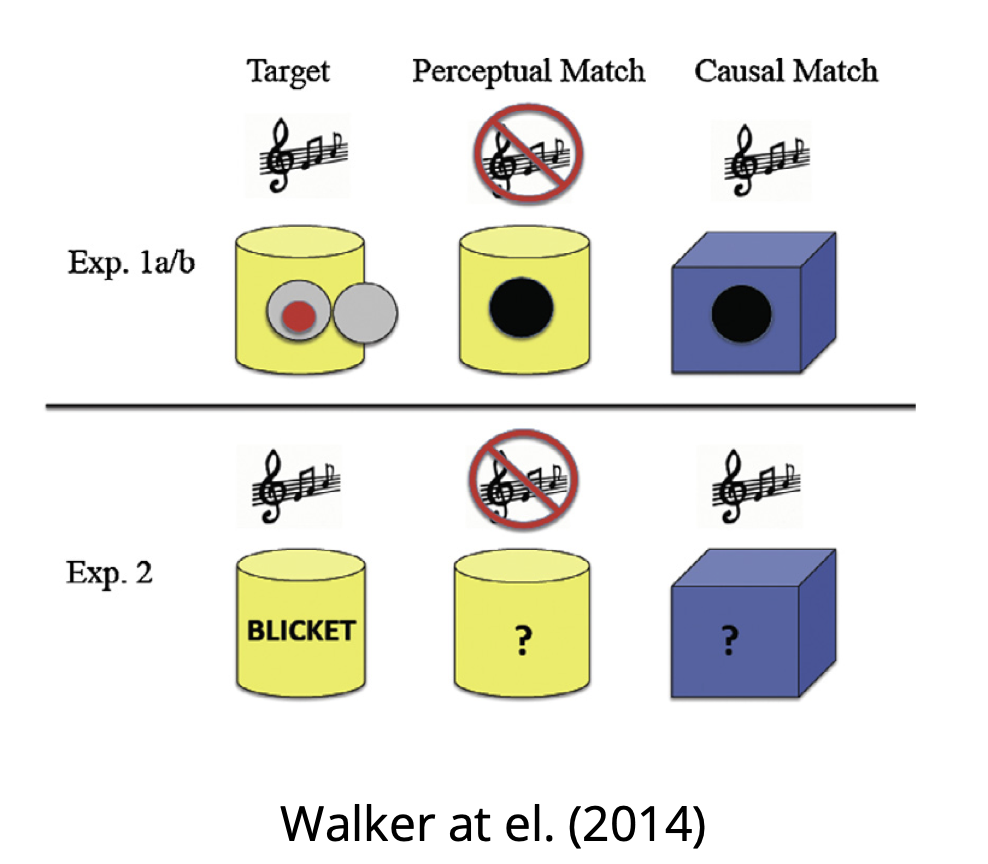

walker et al. (2014)

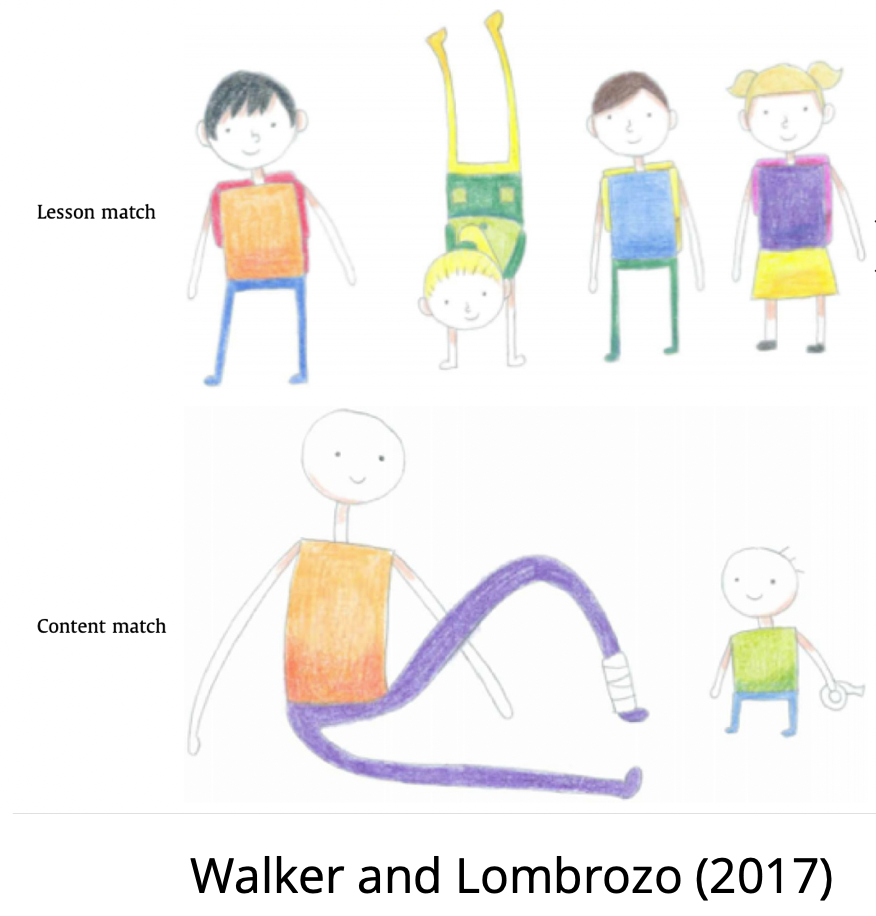

walker and lombrozo (2017)

williams and lombrozo (2010)

explaining helps children and adults acquire generalizable abstractions useful for future scenarios (lombrozo, 2016)

inductively rich properties

the moral of the story

subtle yet broad patterns

explaining may help learners pinpoint their own uncertainty or grasp the difference-making principle behind effective intervention

"trouble-shooting"

intervention design

legare (2012)

why is it suddenly working?

why does it stop working?

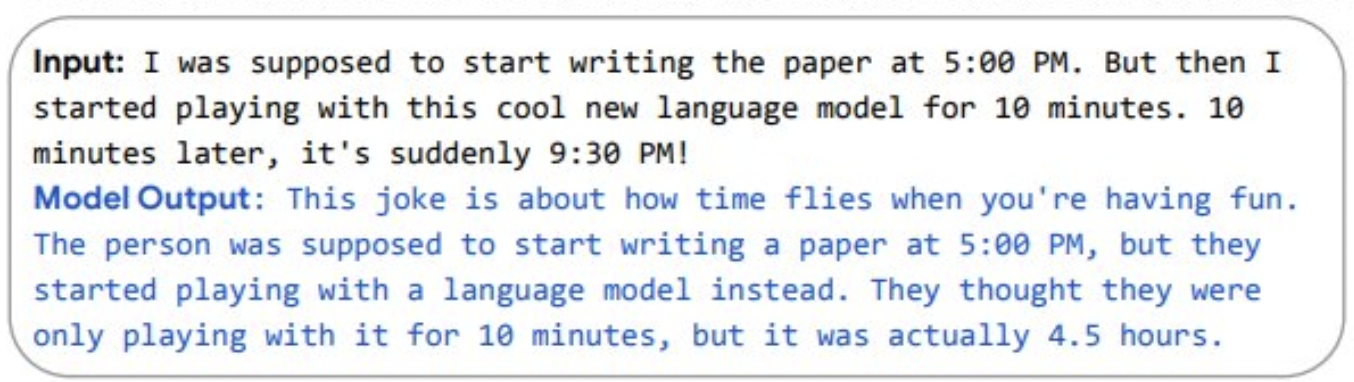

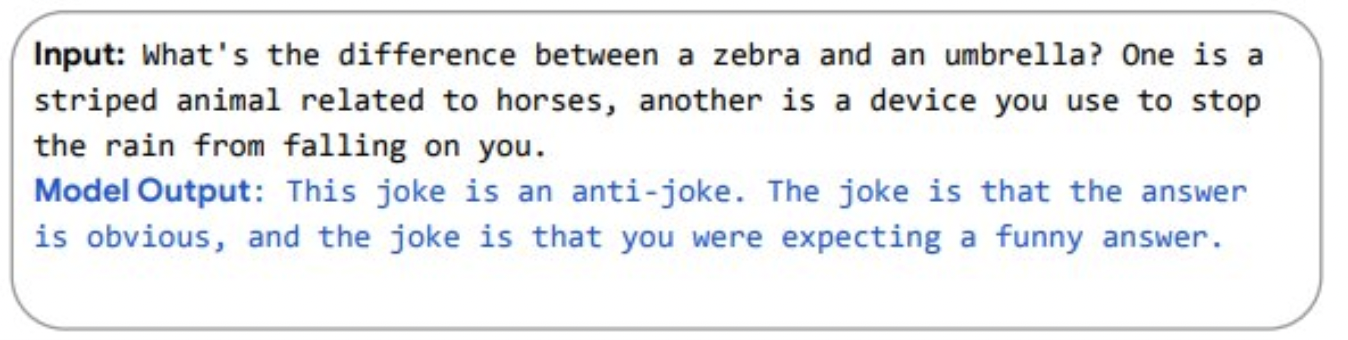

explanations generated by a reinforcement learning agent

lampinen et al. (2021)

other domains: question-asking (ruggeri et al., 2019), choosing to observe more in multi-armed bandit problems (liquin & lombrozo, 2017)...

explaining for the best intervention?

outline of talk

- study 1: natural intervention strategies of children (5- to 7-year-olds) + adults

- study 2: ask to explain interventions (5- to 7-year-olds + adults)

- study 3: train to explain interventions (7- to 11-year-olds)

can explaining lead to better intervention?

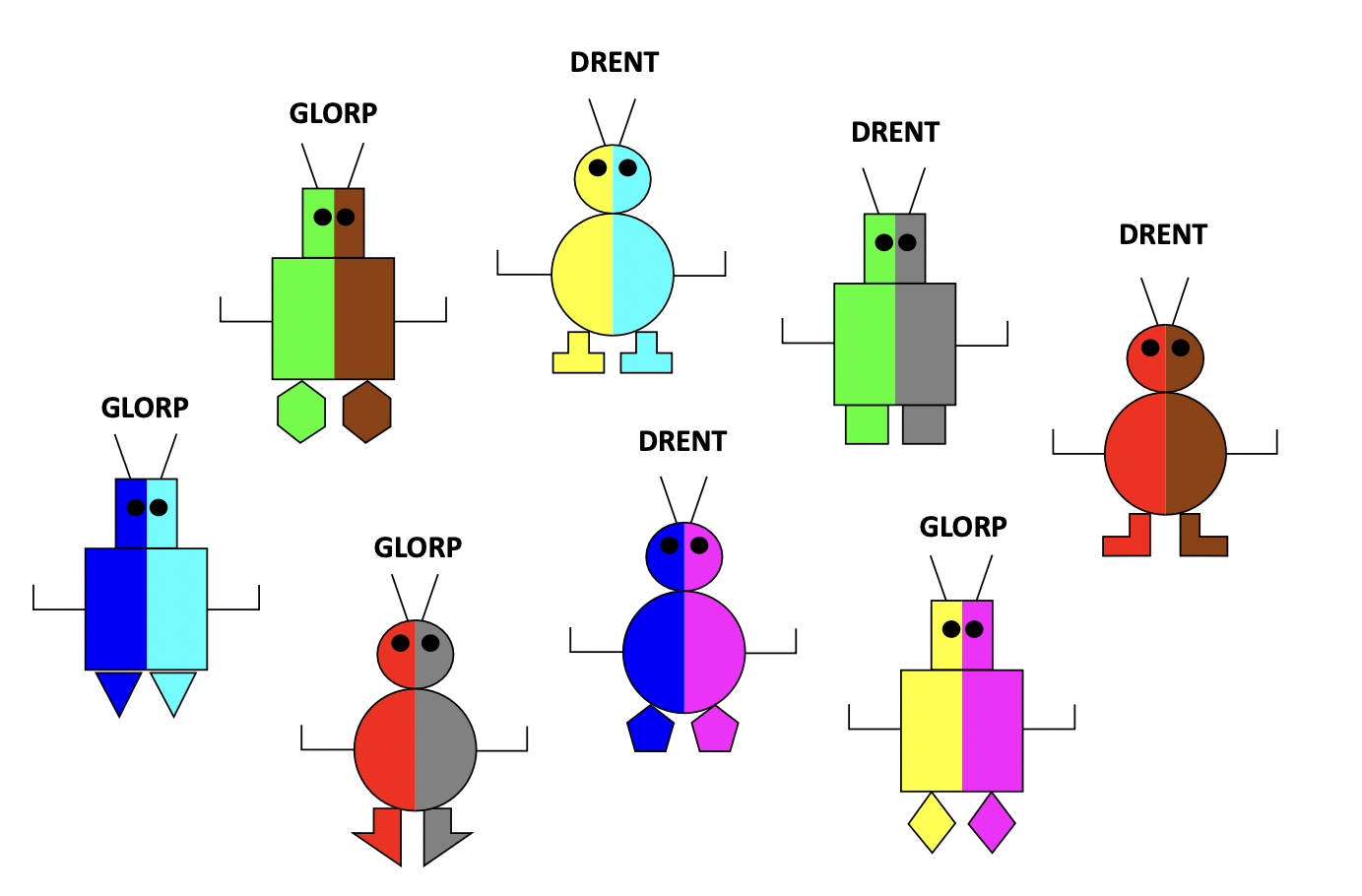

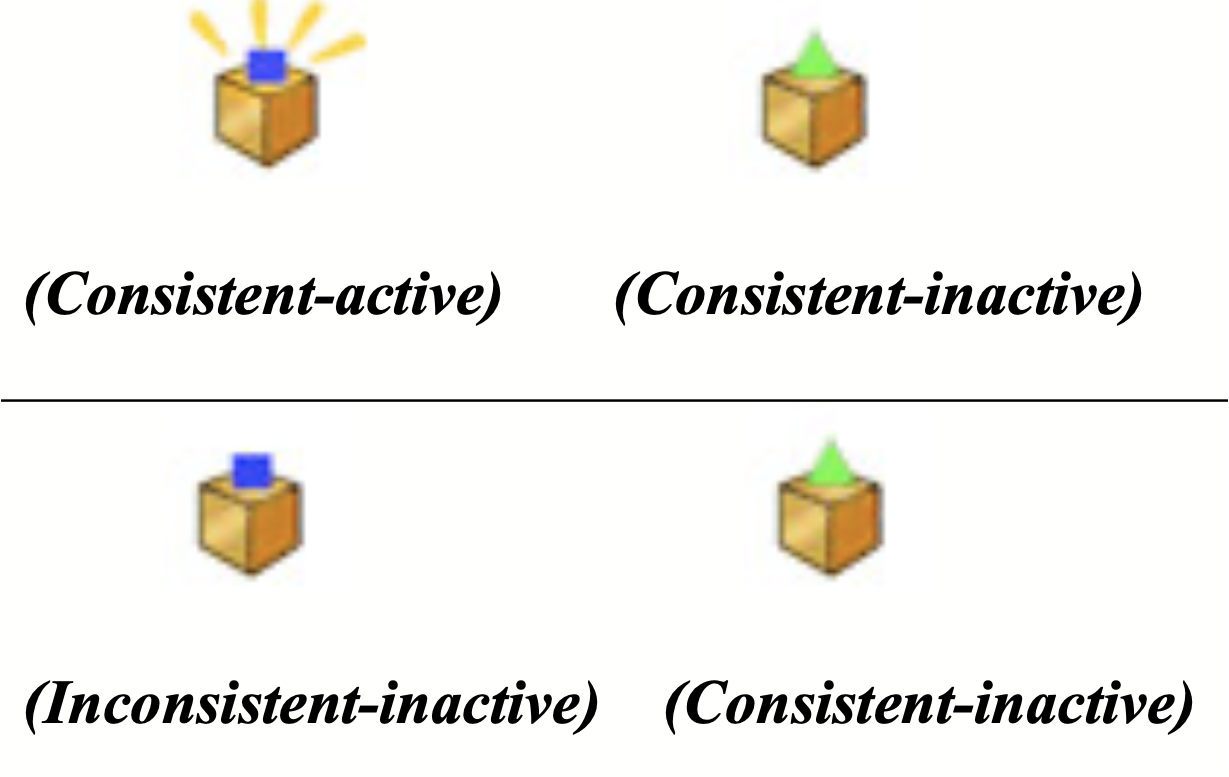

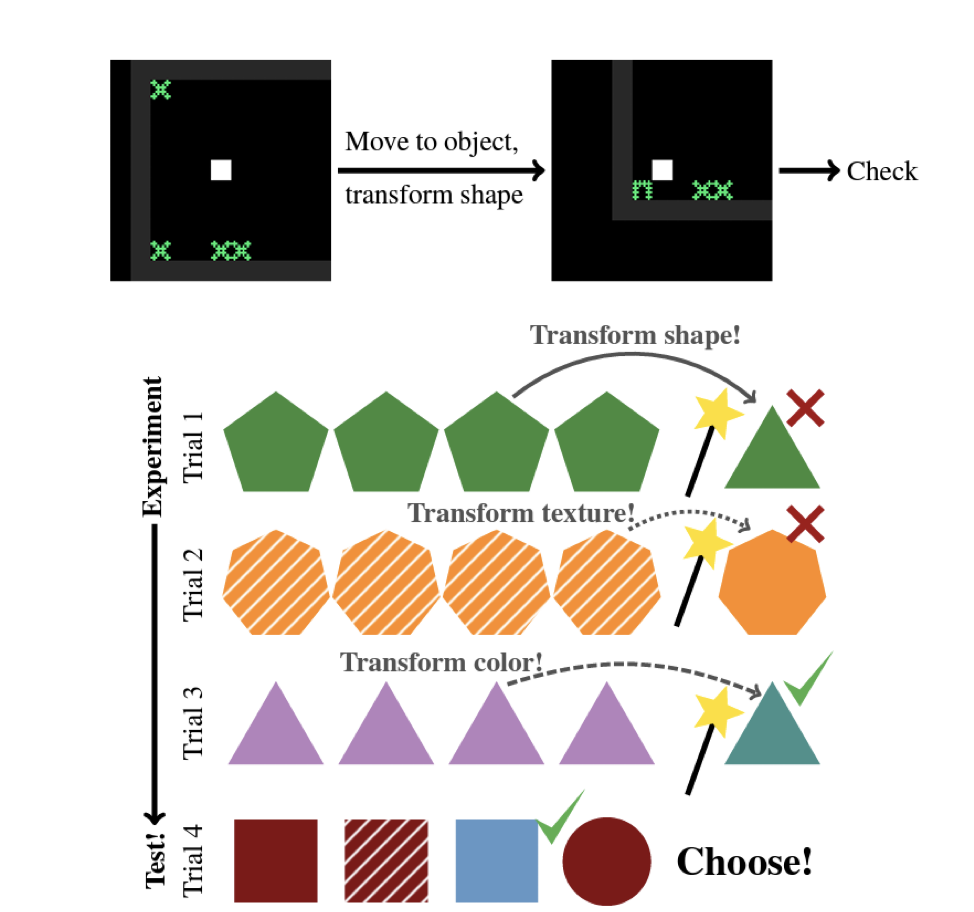

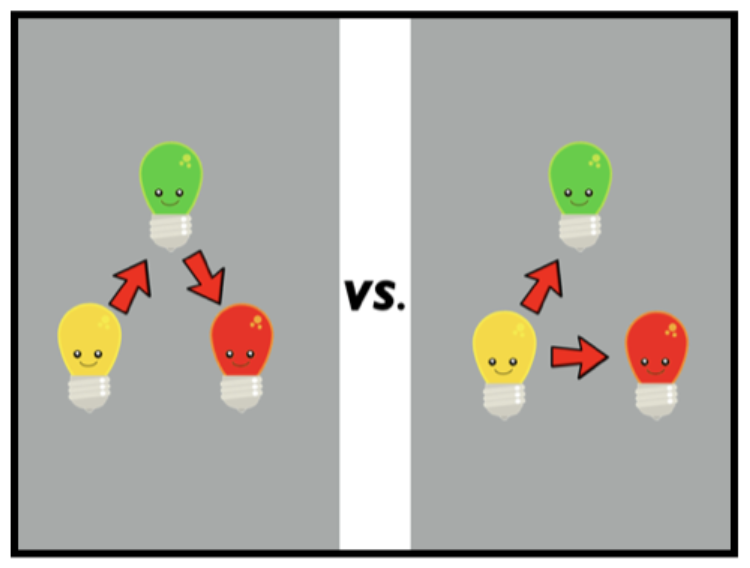

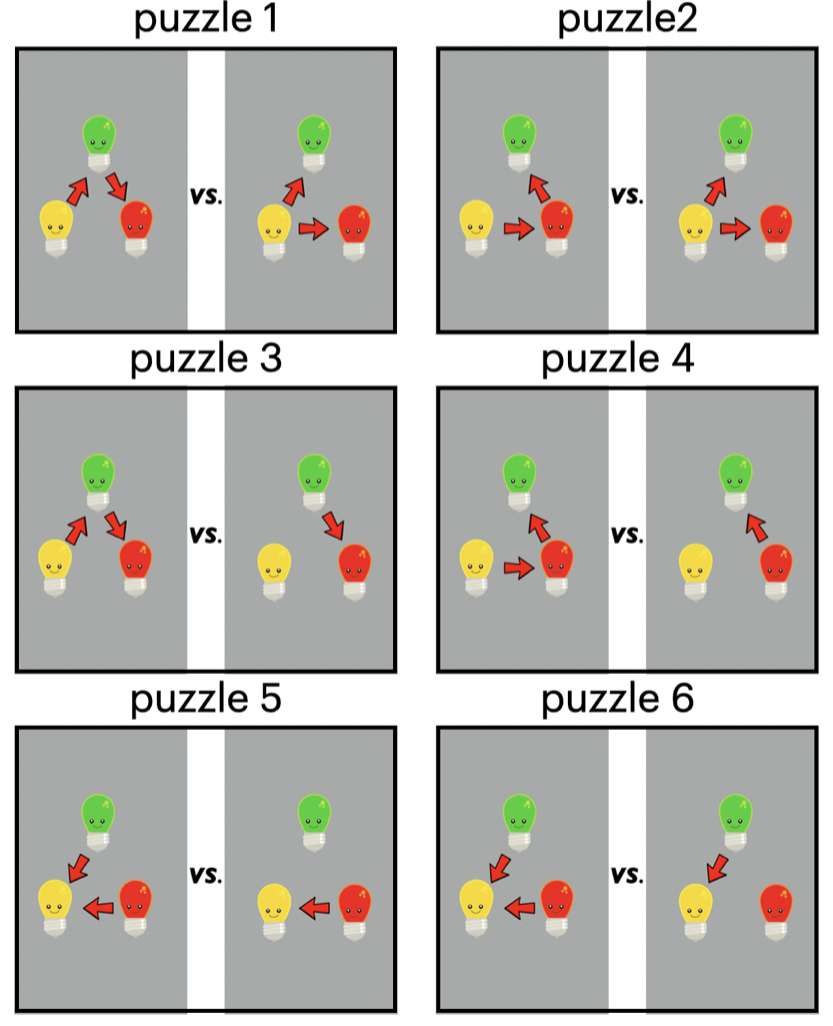

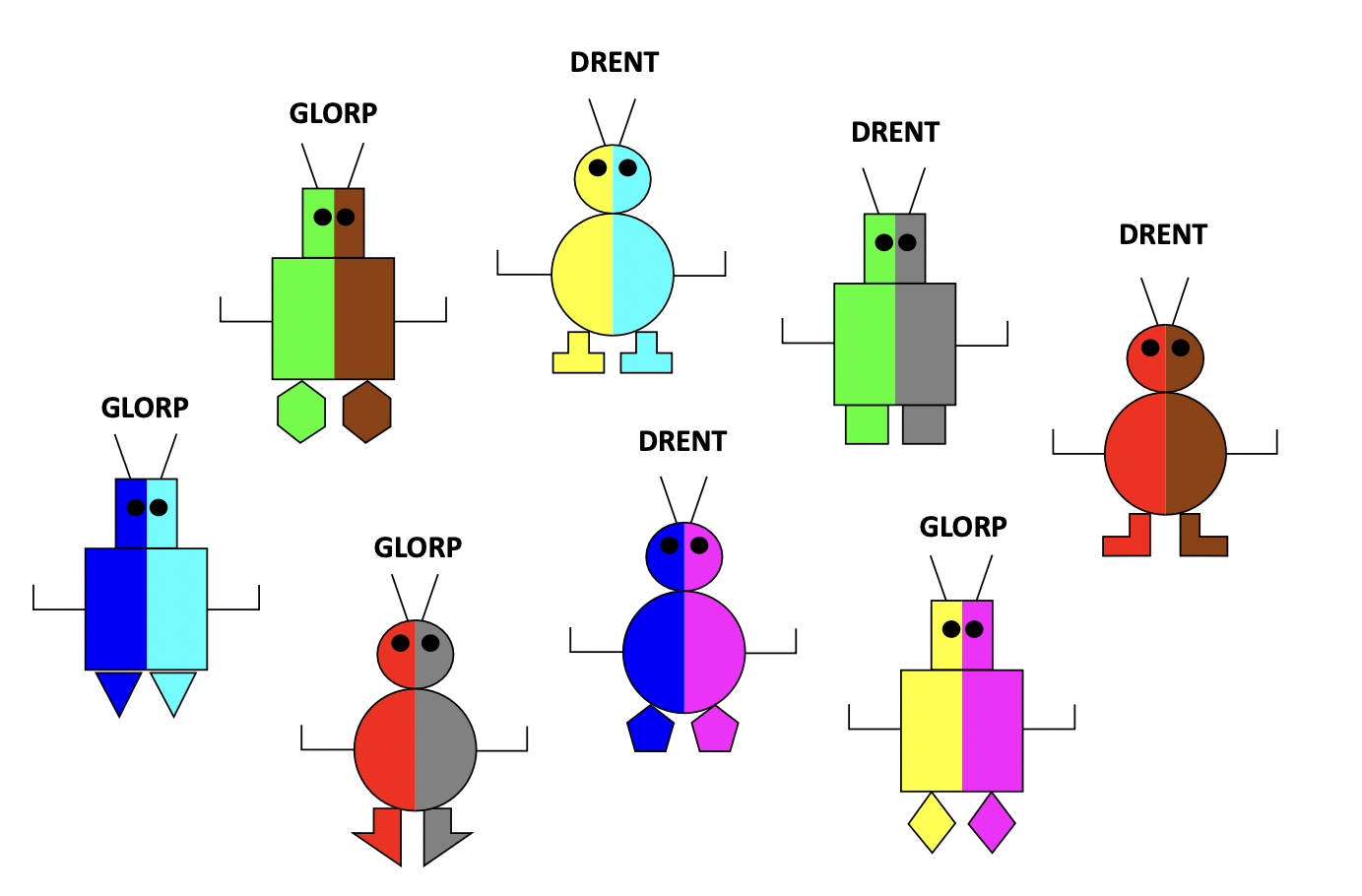

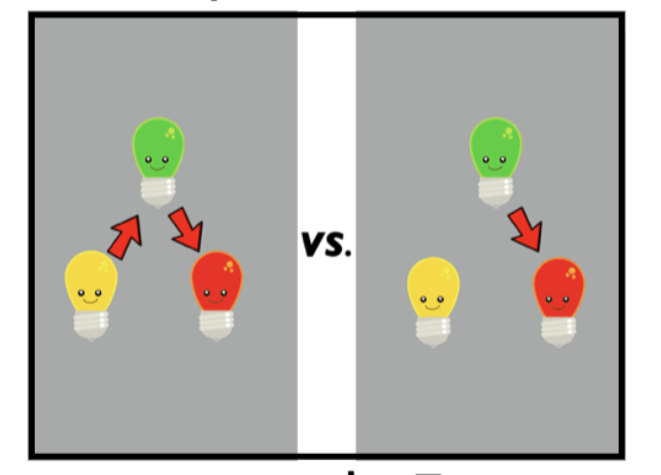

task desiderata

- easy to quantify intervention quality

adapted from coenen et al. (2015)

😵‍💫

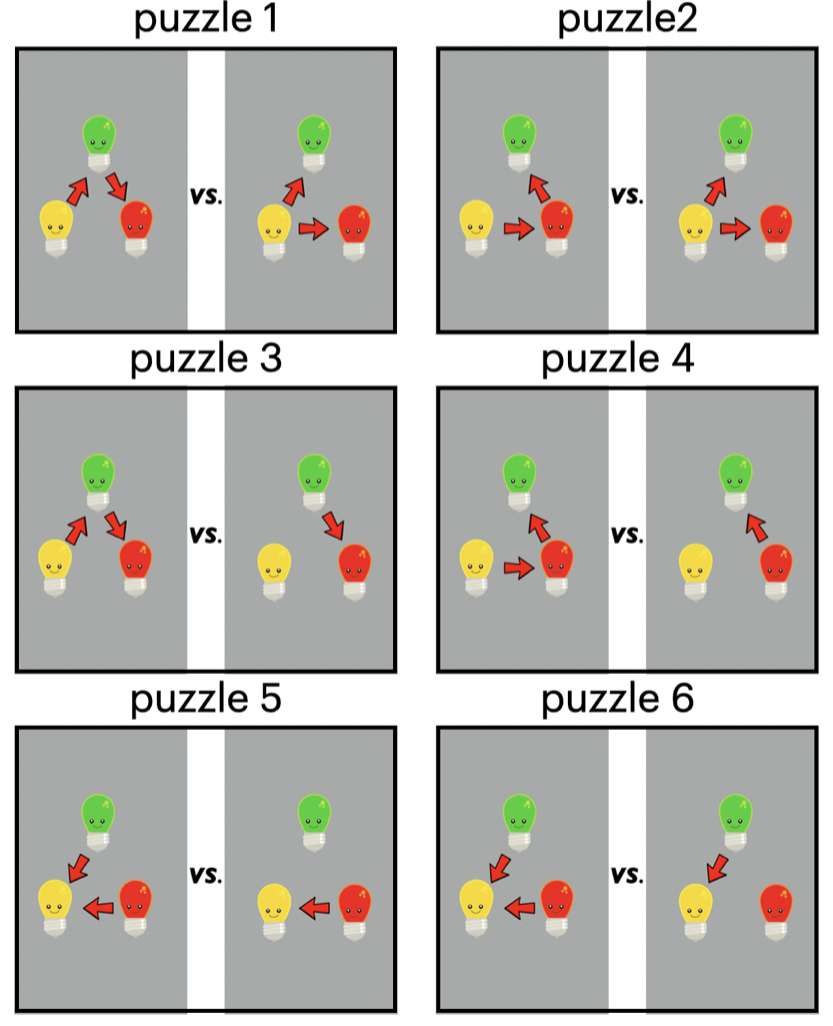

light bulb game

task desiderata

- easy to quantify intervention quality

- suitable for wide age range

adapted from coenen et al. (2015)

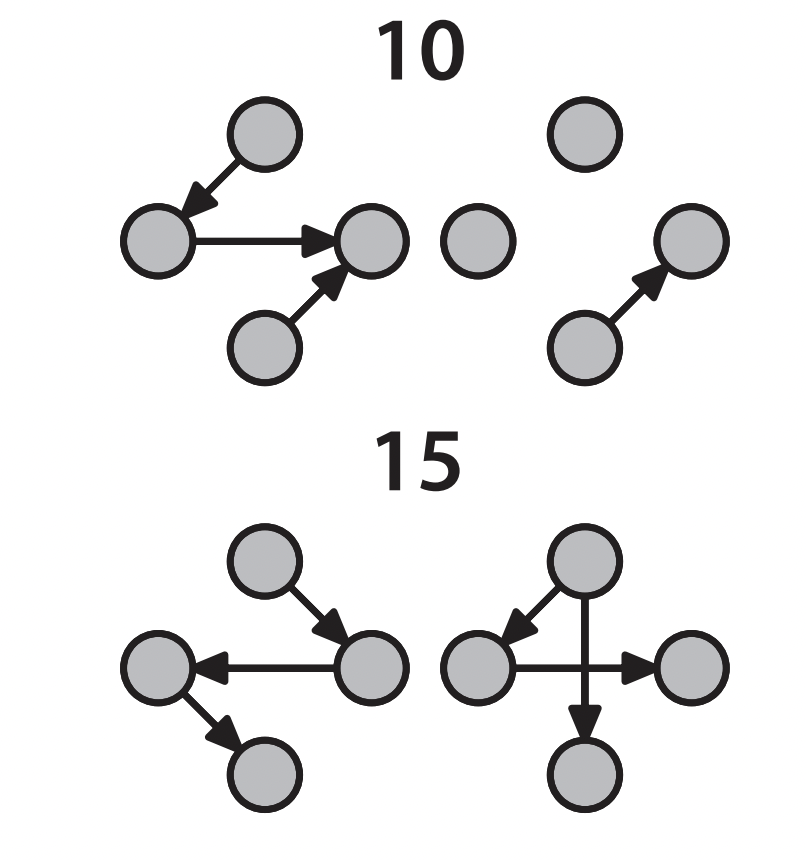

optimal strategy

expected information gain (EIG)

1. surprisal of each hypothesis

2. average surprisal = information entropy (shannon, 1948)

3. reduction in entropy after an intervention (e.g., turning on green) and its outcome = information gain

expected information gain over all outcomes 👉 guides intervention

don't know what's gonna happen!
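In symbols, the steps above amount to the standard information-theoretic quantities below. The notation ($\mathcal{H}$ for the set of candidate structures, $a$ for an intervention, $o$ for its outcome, logs in base 2 so that scores are in bits) is assumed for illustration rather than taken from the slides:

```latex
\begin{aligned}
\text{surprisal}(h) &= -\log_2 P(h) \\
H(\mathcal{H}) &= -\sum_{h \in \mathcal{H}} P(h) \log_2 P(h) \\
IG(a, o) &= H(\mathcal{H}) - H(\mathcal{H} \mid a, o), \quad
  H(\mathcal{H} \mid a, o) = -\sum_{h \in \mathcal{H}} P(h \mid a, o) \log_2 P(h \mid a, o) \\
EIG(a) &= \sum_{o} P(o \mid a)\, IG(a, o), \quad
  P(o \mid a) = \sum_{h \in \mathcal{H}} P(o \mid h, a)\, P(h)
\end{aligned}
```

The outer sum over outcomes is what makes the gain "expected": the learner scores an intervention before knowing which outcome will occur.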

difference making

[figure: EIG scores of the three interventions: 1, 0, 0]
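A minimal sketch of EIG scoring for a light-bulb-style puzzle. It assumes deterministic links (turning on a bulb lights every bulb downstream of it), a uniform prior, and two hypothetical structures made up for illustration, not the talk's actual puzzles. With deterministic outcomes and two equally likely hypotheses, an intervention scores 1 bit when the structures predict different outcomes and 0 when they predict the same one, which is the difference-making idea.

```python
import math
from collections import defaultdict

# Hypothetical candidate structures (cause -> effect links); not the talk's actual puzzles.
HYPOTHESES = {
    "common_cause": {("yellow", "green"), ("yellow", "red")},
    "chain": {("yellow", "green"), ("green", "red")},
}
BULBS = ["yellow", "green", "red"]


def lit_bulbs(bulb, links):
    """Bulbs that light up when `bulb` is turned on, assuming every link always works."""
    lit, frontier = {bulb}, [bulb]
    while frontier:
        current = frontier.pop()
        for cause, effect in links:
            if cause == current and effect not in lit:
                lit.add(effect)
                frontier.append(effect)
    return frozenset(lit)


def entropy(probs):
    """Shannon entropy in bits."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def expected_information_gain(bulb, hypotheses, prior=None):
    """EIG of turning on `bulb`: prior entropy minus expected posterior entropy."""
    if prior is None:
        prior = {h: 1 / len(hypotheses) for h in hypotheses}  # uniform prior
    # Group hypotheses by the outcome they predict for this intervention.
    by_outcome = defaultdict(list)
    for h, links in hypotheses.items():
        by_outcome[lit_bulbs(bulb, links)].append(h)
    prior_entropy = entropy(prior.values())
    eig = 0.0
    for hs in by_outcome.values():
        p_outcome = sum(prior[h] for h in hs)
        posterior = [prior[h] / p_outcome for h in hs]
        eig += p_outcome * (prior_entropy - entropy(posterior))
    return eig


for bulb in BULBS:
    print(bulb, expected_information_gain(bulb, HYPOTHESES))  # yellow 0.0, green 1.0, red 0.0
```

Under these toy structures only green is difference-making: yellow and red light the same bulbs under both hypotheses, so their outcomes cannot tell the hypotheses apart.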

suboptimal strategy

positive test strategy (PTS)

test one hypothesis at a time

- begin with one hypothesis

- score each intervention by the proportion of links it can affect

(coenen et al., 2015; mccormack et al., 2016; meng et al., 2018; meng & xu, 2019; nussenbaum et al., 2020; steyvers et al., 2003)

(other hypothesis: not being considered yet)

[figure: PTS scores of the three interventions: 1, 0.5, 0]

why suboptimal?

- EIG: 1 intervention to find the answer

- PTS: 1 or more interventions

suboptimal strategy

positive test strategy (PTS)

test one hypothesis at a time

- begin with one hypothesis

- score each intervention by the proportion of links it can affect

- repeat for the other hypothesis

take the maximum across hypotheses: yellow

(coenen et al., 2015; mccormack et al., 2016; meng et al., 2018; meng & xu, 2019; nussenbaum et al., 2020; steyvers et al., 2003)

[figure: intervention scores, panel values 1, 0.5, 0 and 1, 0, 0]
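One way to operationalize the positive test strategy, as a sketch only. Reading "links it can affect" as the links downstream of the intervened bulb is an assumption, and the two structures are a hypothetical chain and common cause made up for illustration, not the talk's puzzles. Each bulb is scored by the proportion of a hypothesis's links it can affect, and the maximum is taken across hypotheses.

```python
# Hypothetical candidate structures: cause -> effect links among three bulbs.
HYPOTHESES = {
    "common_cause": {("yellow", "green"), ("yellow", "red")},
    "chain": {("yellow", "green"), ("green", "red")},
}
BULBS = ["yellow", "green", "red"]


def affected_links(bulb, links):
    """Links downstream of `bulb` (assumed meaning of 'links it can affect')."""
    reached, frontier = {bulb}, [bulb]
    while frontier:
        current = frontier.pop()
        for cause, effect in links:
            if cause == current and effect not in reached:
                reached.add(effect)
                frontier.append(effect)
    return {(cause, effect) for cause, effect in links if cause in reached}


def pts_score(bulb, hypotheses):
    """Score under each hypothesis separately, then keep the best (max) score."""
    return max(len(affected_links(bulb, links)) / len(links)
               for links in hypotheses.values())


scores = {bulb: pts_score(bulb, HYPOTHESES) for bulb in BULBS}
print(scores)  # {'yellow': 1.0, 'green': 0.5, 'red': 0.0}
```

With these structures the max-of-proportions rule prefers yellow, even though turning on yellow lights every bulb under both hypotheses and so cannot discriminate between them; this is how PTS can end up needing more than one intervention.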

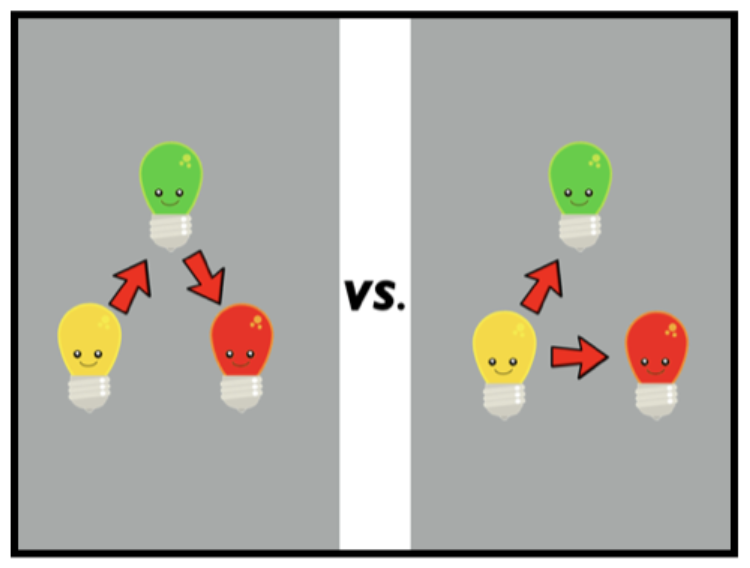

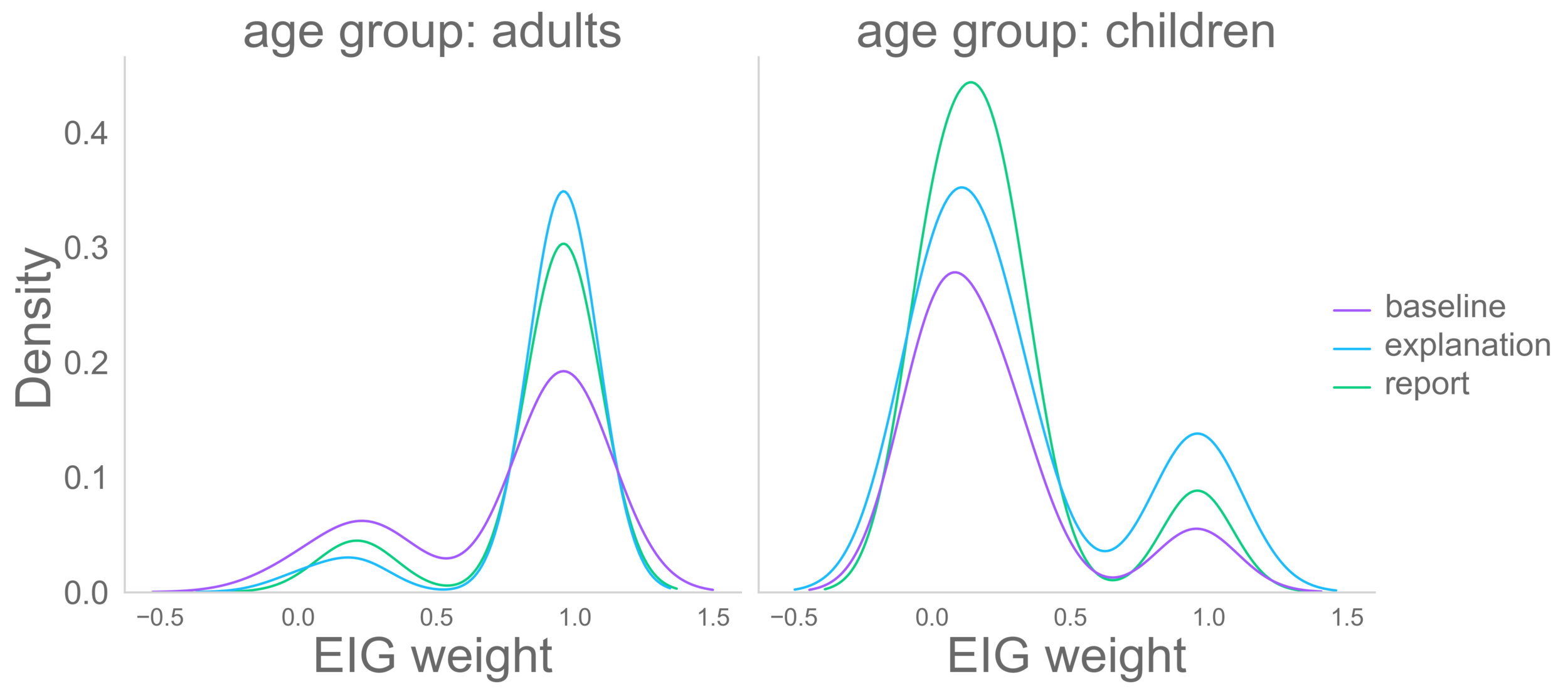

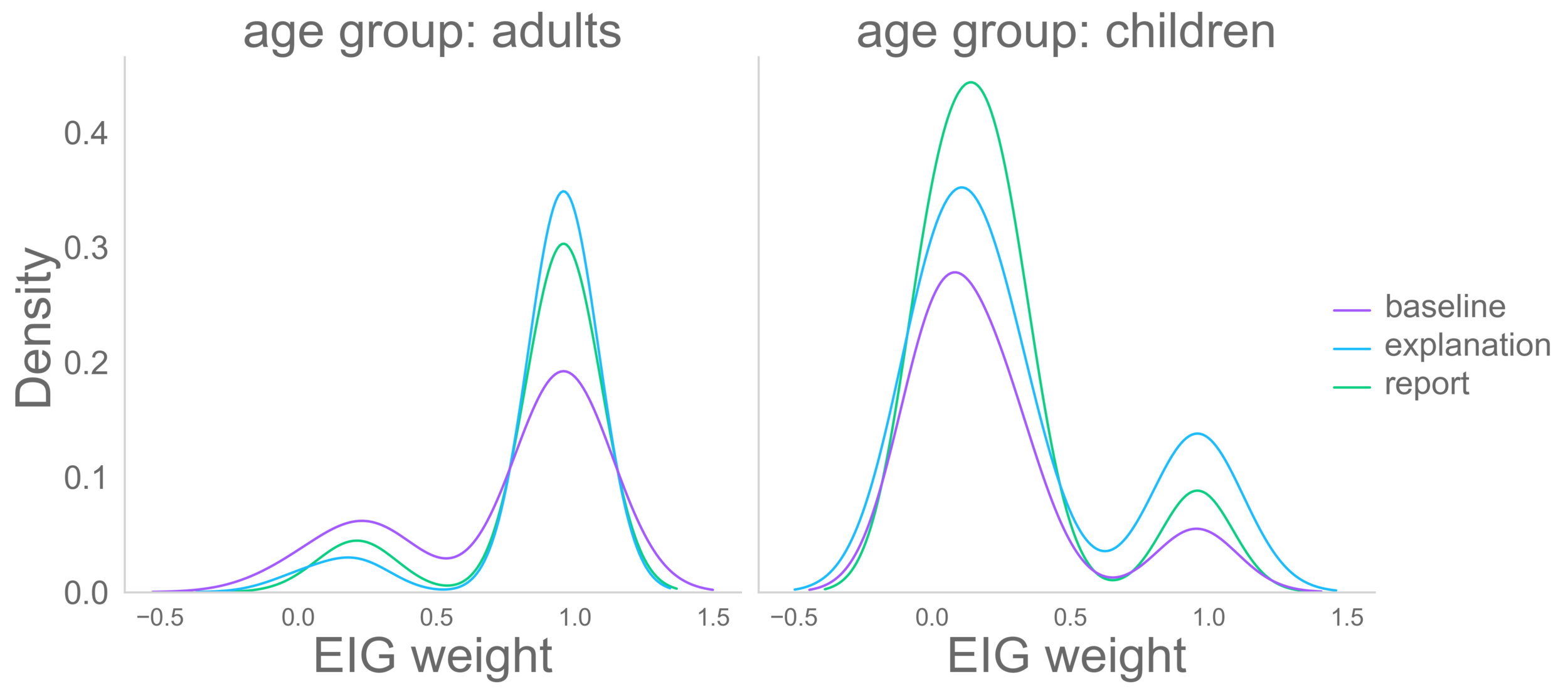

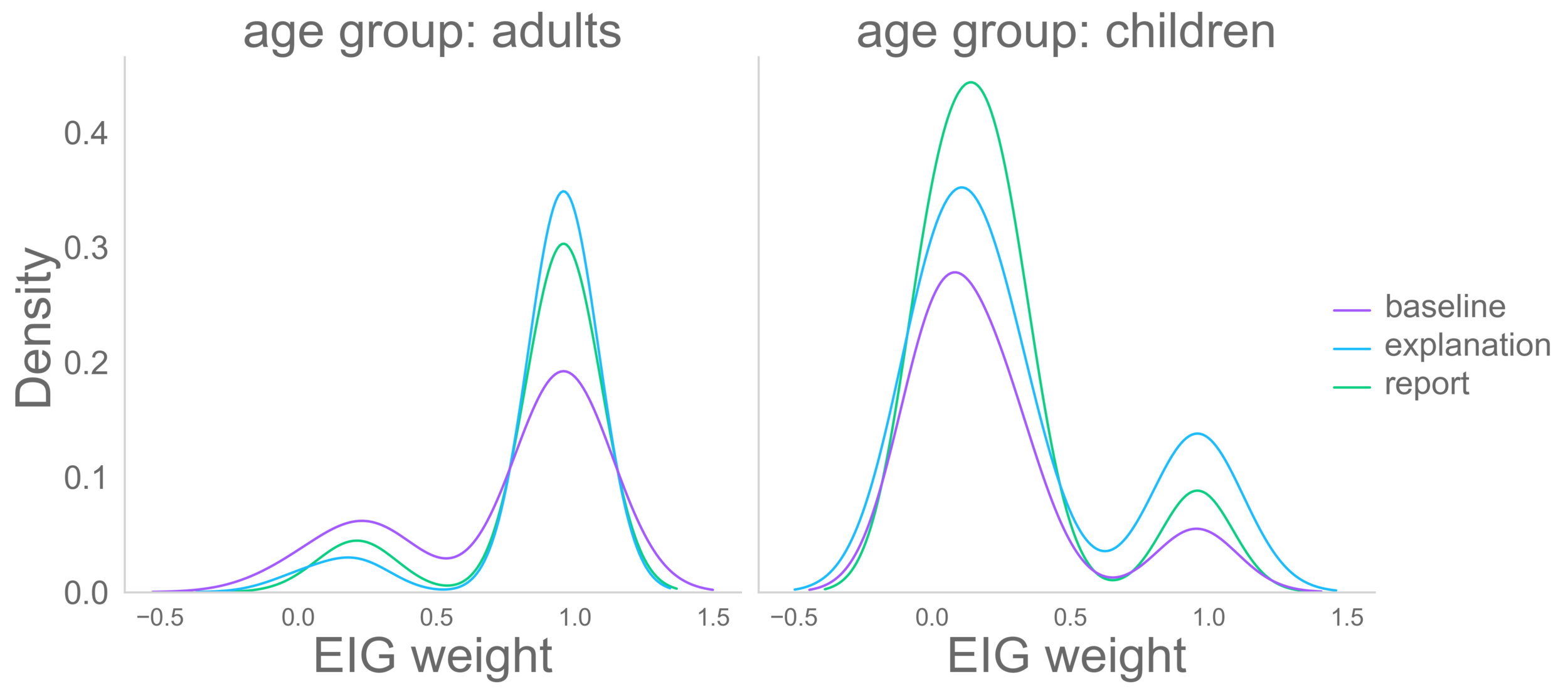

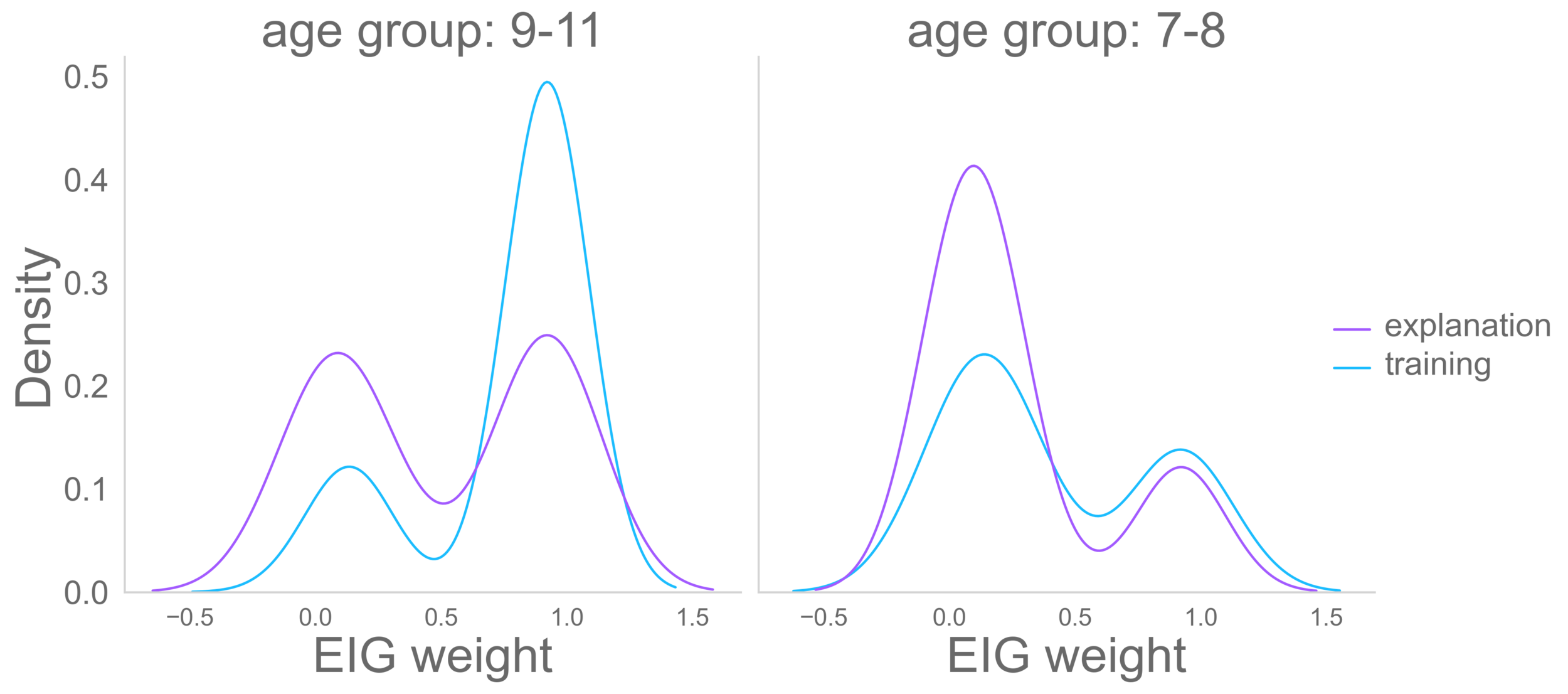

computational modeling:

infer weight of EIG

1. each person's weight of EIG: θ

2. value of an intervention

3. probability of choosing an intervention

- τ 👉 0: maximize V(a)

- τ 👉 ∞: choose randomly
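Written out, one natural reading of steps 2 and 3 is a weighted value followed by a softmax choice rule. The mixture form of $V(a)$ is an assumption, inferred from the model using both an EIG score and a PTS score for every intervention; the softmax form is chosen because it has exactly the two temperature limits listed:

```latex
\begin{aligned}
V(a) &= \theta \cdot EIG(a) + (1 - \theta) \cdot PTS(a) \\
P(a) &= \frac{\exp\big(V(a) / \tau\big)}{\sum_{a'} \exp\big(V(a') / \tau\big)}
\end{aligned}
```

As $\tau \to 0$ the probability mass concentrates on $\arg\max_a V(a)$; as $\tau \to \infty$ each of the three interventions approaches probability $1/3$.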

infer each person's θ and τ from interventions they chose

n × 6 × 3 tensor (unobserved): probability of each of n people choosing each of 3 interventions in each of 6 puzzles

n-vector (unobserved): each of n people’s EIG weight

n-vector (unobserved): each of n people’s "temperature"

6 × 3 matrix (observed): EIG score of each of 3 interventions in each of 6 puzzles

6 × 3 matrix (observed): PTS score of each of 3 interventions in each of 6 puzzles

n × 6 matrix (observed): each of n people’s choices in each of 6 puzzles

adapted from coenen et al. (2015)
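Rather than inferring θ and τ for all n people jointly, here is a simpler stand-in: a sketch that fits one person's θ and τ by grid-search maximum likelihood. It assumes the value is a θ-weighted mix of EIG and PTS scores with a softmax choice rule; the 6 × 3 score matrices and the choices are made-up toy numbers, not data from the studies.

```python
import math
from itertools import product

# Toy 6 x 3 score matrices (rows: puzzles, columns: interventions); numbers are made up.
EIG = [[0, 1, 0], [1, 0, 0], [0, 0, 1], [0, 1, 0], [1, 0, 0], [0, 0, 1]]
PTS = [[1, 0.5, 0], [0, 1, 0.5], [0.5, 1, 0], [1, 0, 0.5], [0.5, 0, 1], [1, 0.5, 0]]


def choice_probabilities(eig_row, pts_row, theta, tau):
    """Softmax over interventions with value V(a) = theta * EIG(a) + (1 - theta) * PTS(a)."""
    values = [theta * e + (1 - theta) * p for e, p in zip(eig_row, pts_row)]
    scaled = [v / tau for v in values]
    top = max(scaled)  # subtract the max before exponentiating, for numerical stability
    weights = [math.exp(s - top) for s in scaled]
    total = sum(weights)
    return [w / total for w in weights]


def log_likelihood(choices, eig, pts, theta, tau):
    """Log probability of one person's choices (one chosen column index per puzzle)."""
    return sum(
        math.log(choice_probabilities(eig[k], pts[k], theta, tau)[choice])
        for k, choice in enumerate(choices)
    )


def fit_person(choices, eig, pts,
               thetas=tuple(i / 10 for i in range(11)),
               taus=(0.05, 0.1, 0.2, 0.5, 1.0, 2.0, 5.0)):
    """Grid-search maximum-likelihood (theta, tau) for one person."""
    return max(product(thetas, taus),
               key=lambda pair: log_likelihood(choices, eig, pts, *pair))


eig_seeker = [row.index(max(row)) for row in EIG]  # always picks the EIG-maximizing option
pts_seeker = [row.index(max(row)) for row in PTS]  # always picks the PTS-maximizing option
print(fit_person(eig_seeker, EIG, PTS))  # (1.0, 0.05)
print(fit_person(pts_seeker, EIG, PTS))  # (0.0, 0.05)
```

A grid search keeps the sketch dependency-free; a real analysis would more likely use an optimizer or full Bayesian inference over all participants, which also yields uncertainty about each θ.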

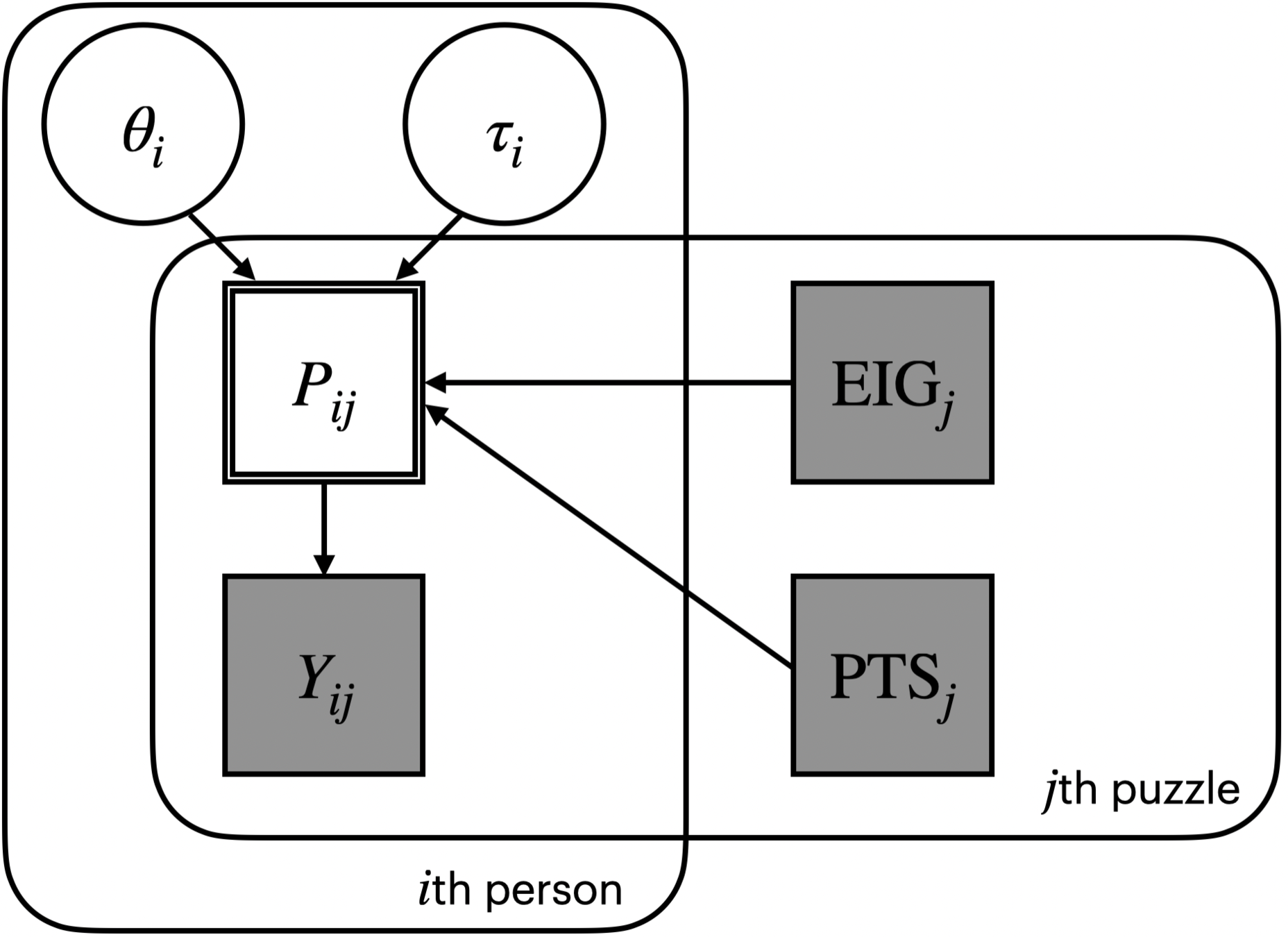

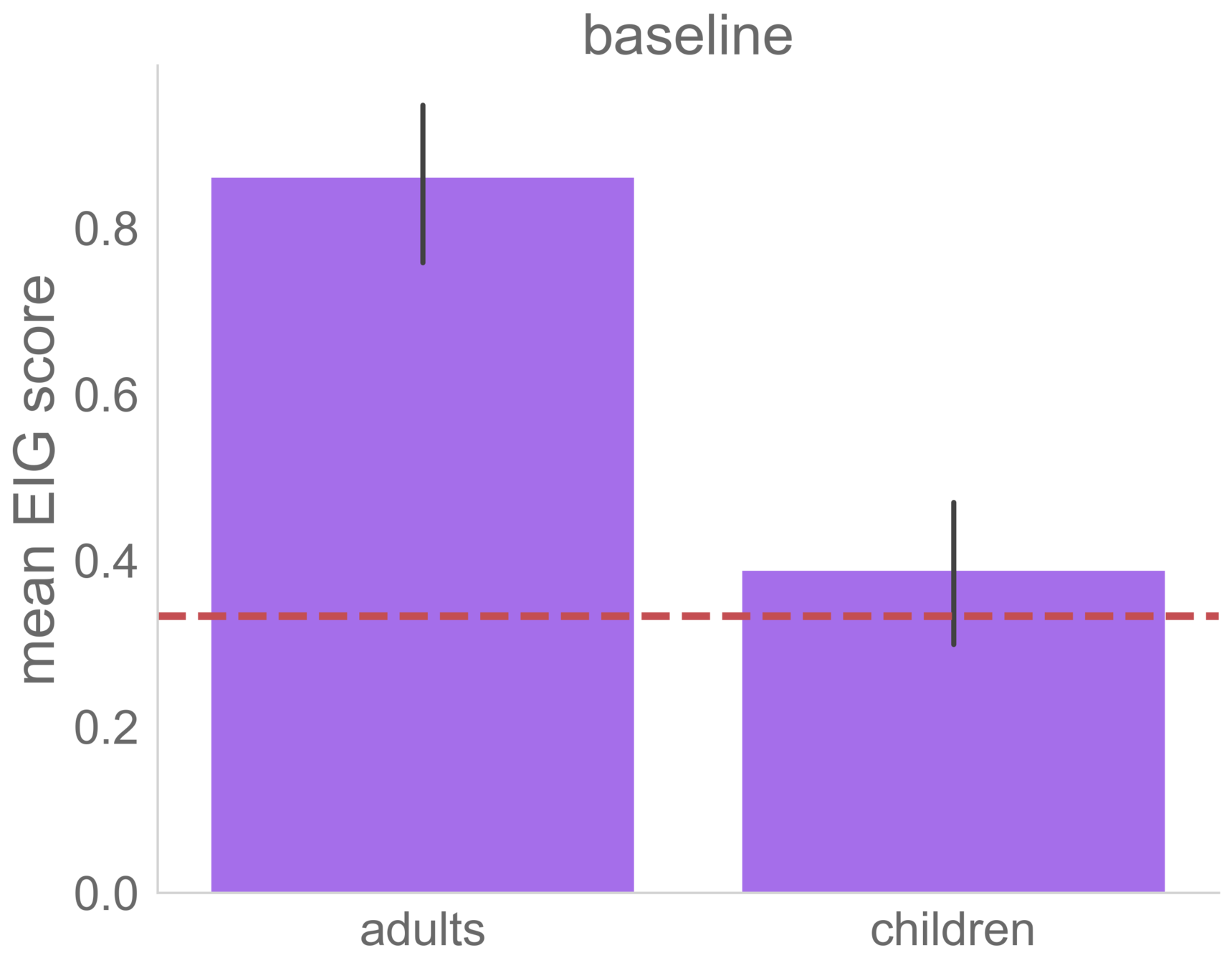

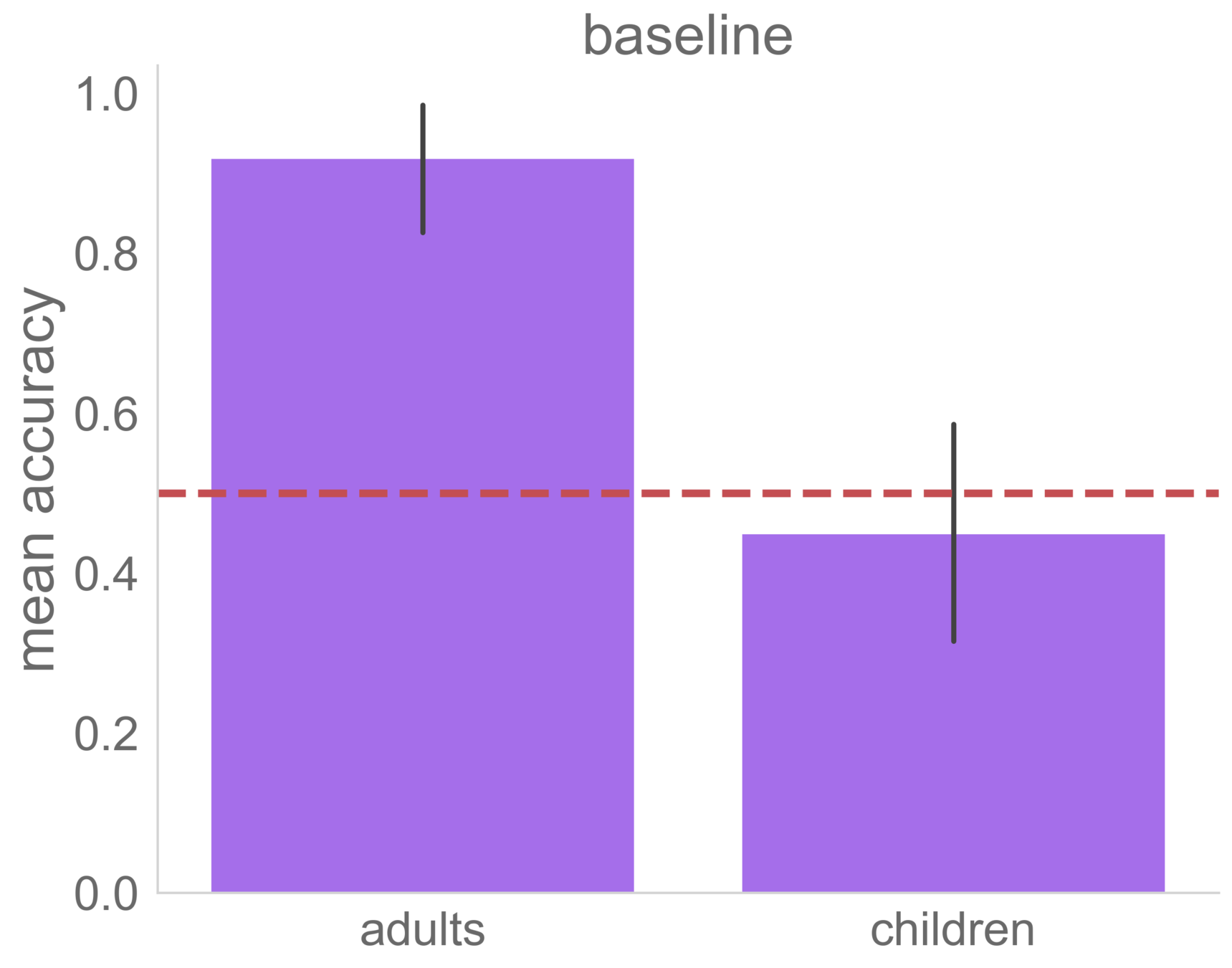

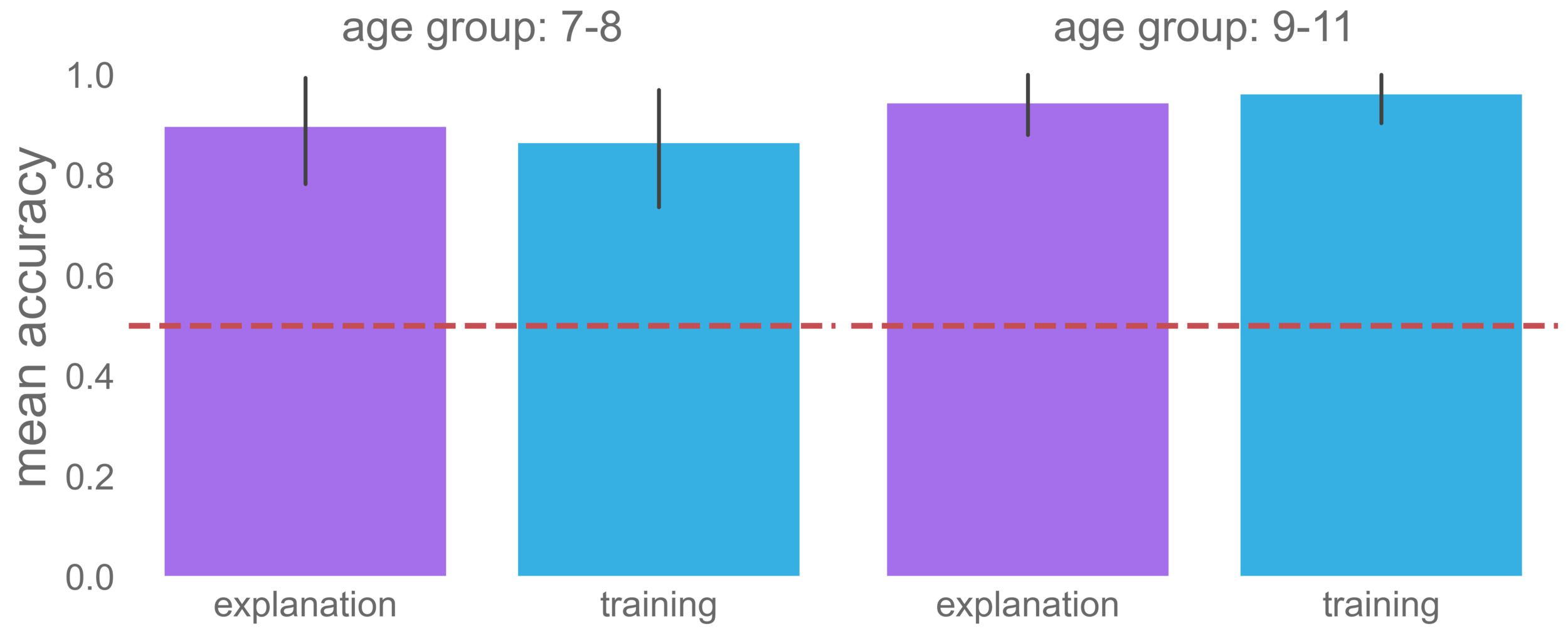

study 1

baseline strategy

5- to 7-year-olds: n = 39

adults: n = 29

meng, bramley, and xu (2018)

mccormack et al. (2016)

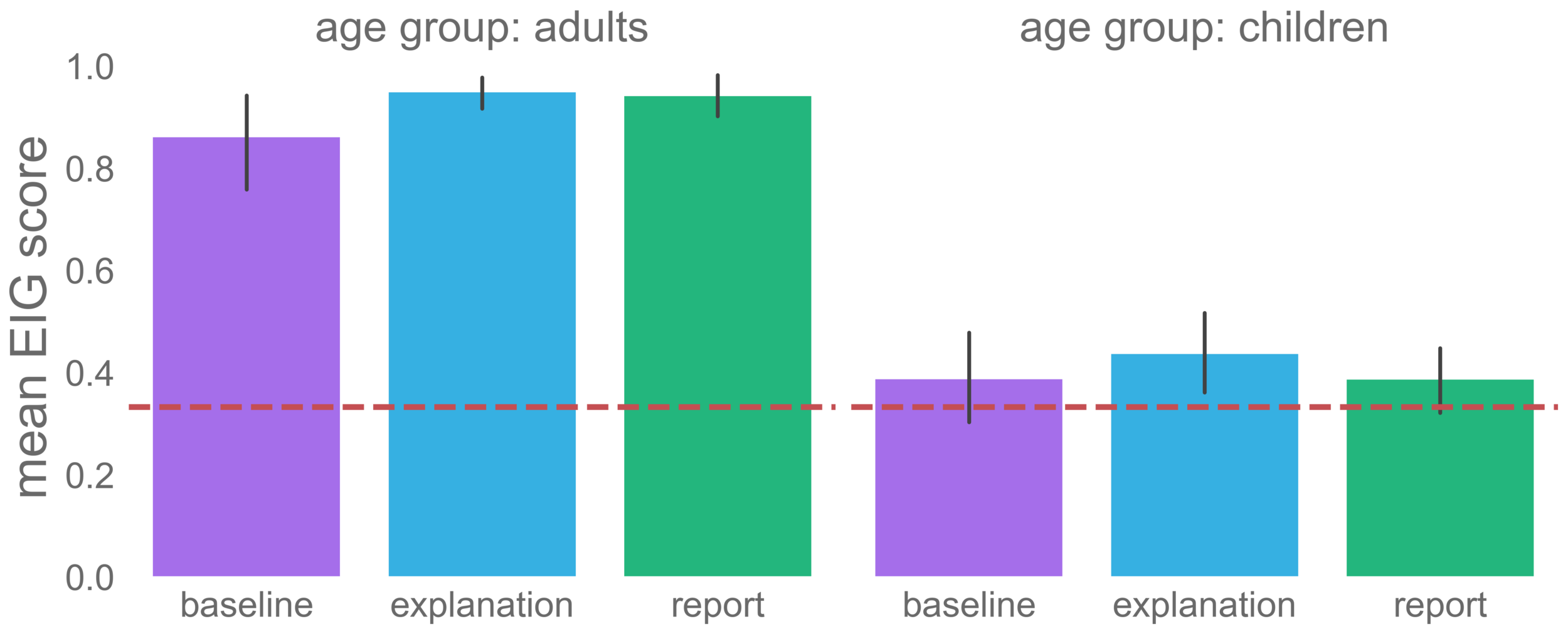

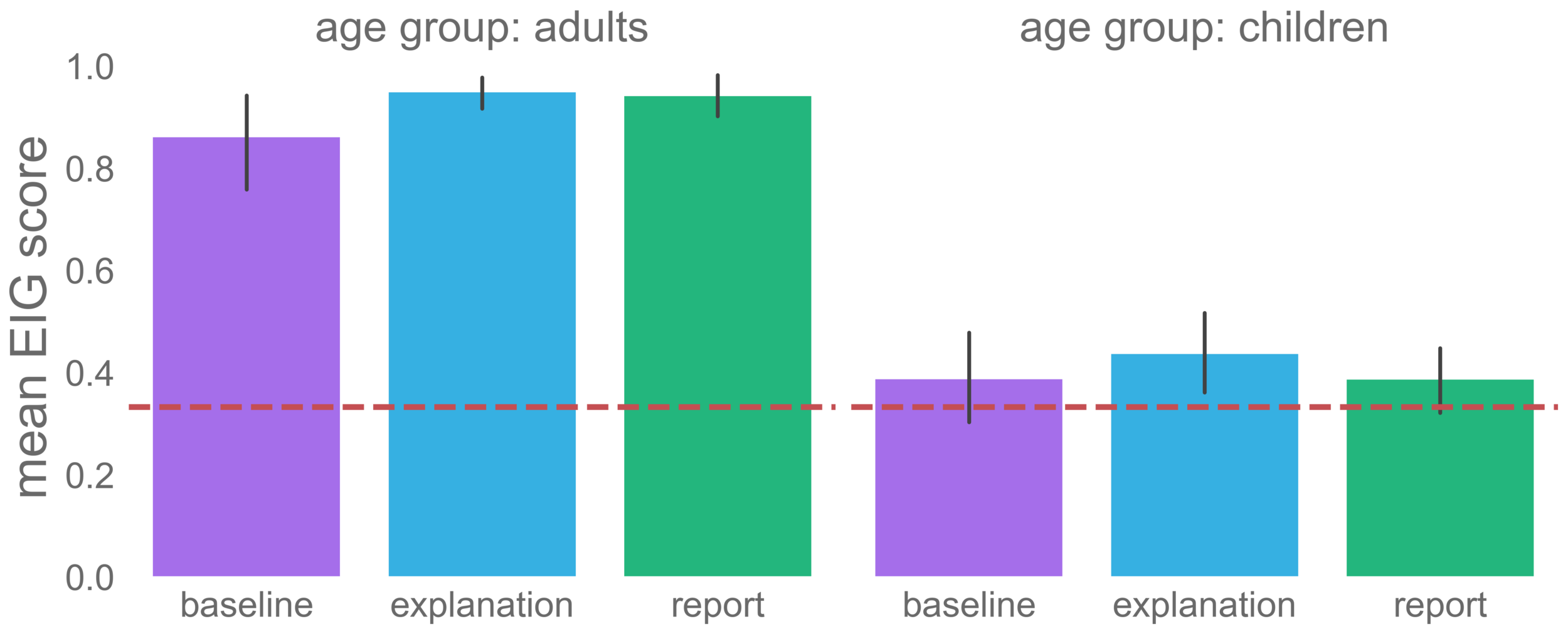

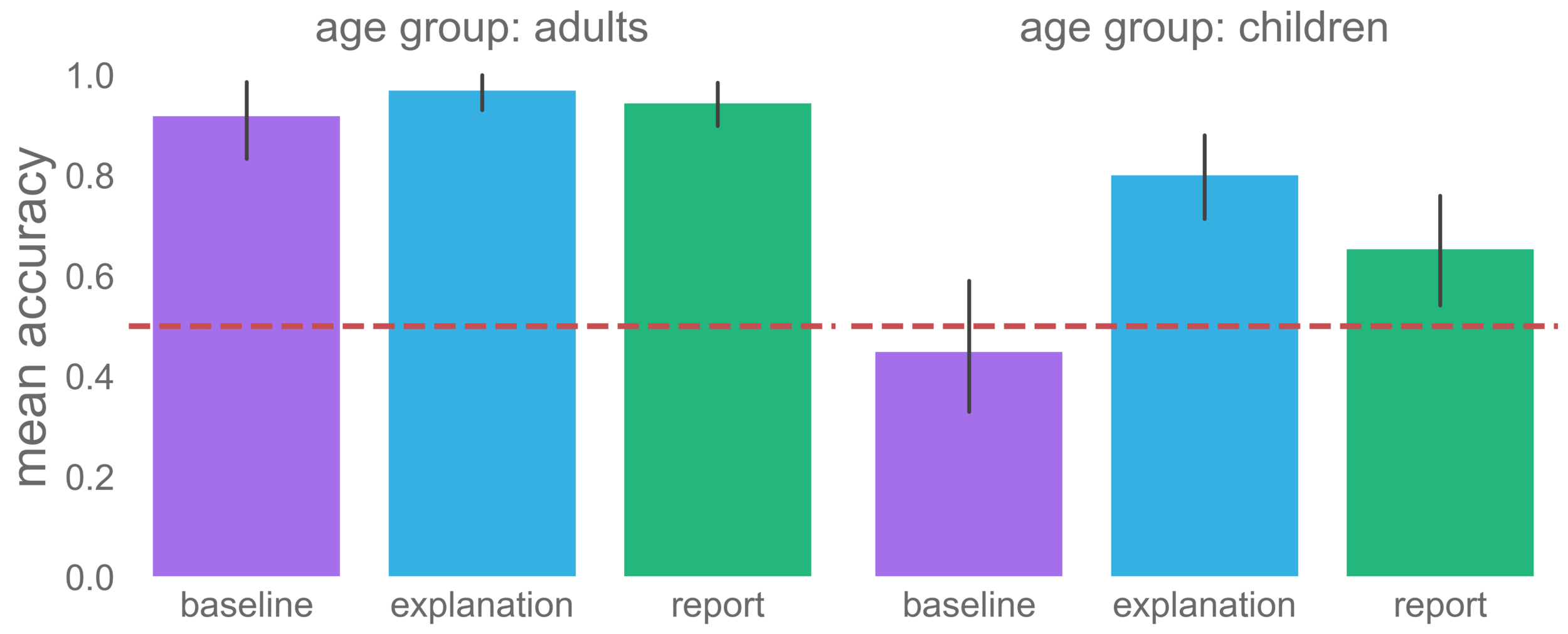

intervention strategy

tl;dr: adults mainly used EIG vs. children mainly PTS

[figure: plotted values 0.86, 0.38, 0.77, 0.24]

averaged across all participants and all puzzles: chance = 1/3

learn from interventions

tl;dr: children didn't learn from informative interventions like adults did

[figure: plotted values 0.91, 0.45]

only trials with informative interventions (EIG = 1) included (same below)

does explaining intervention choices help?

5- to 7-year-olds didn't choose informative interventions or learn from outcomes

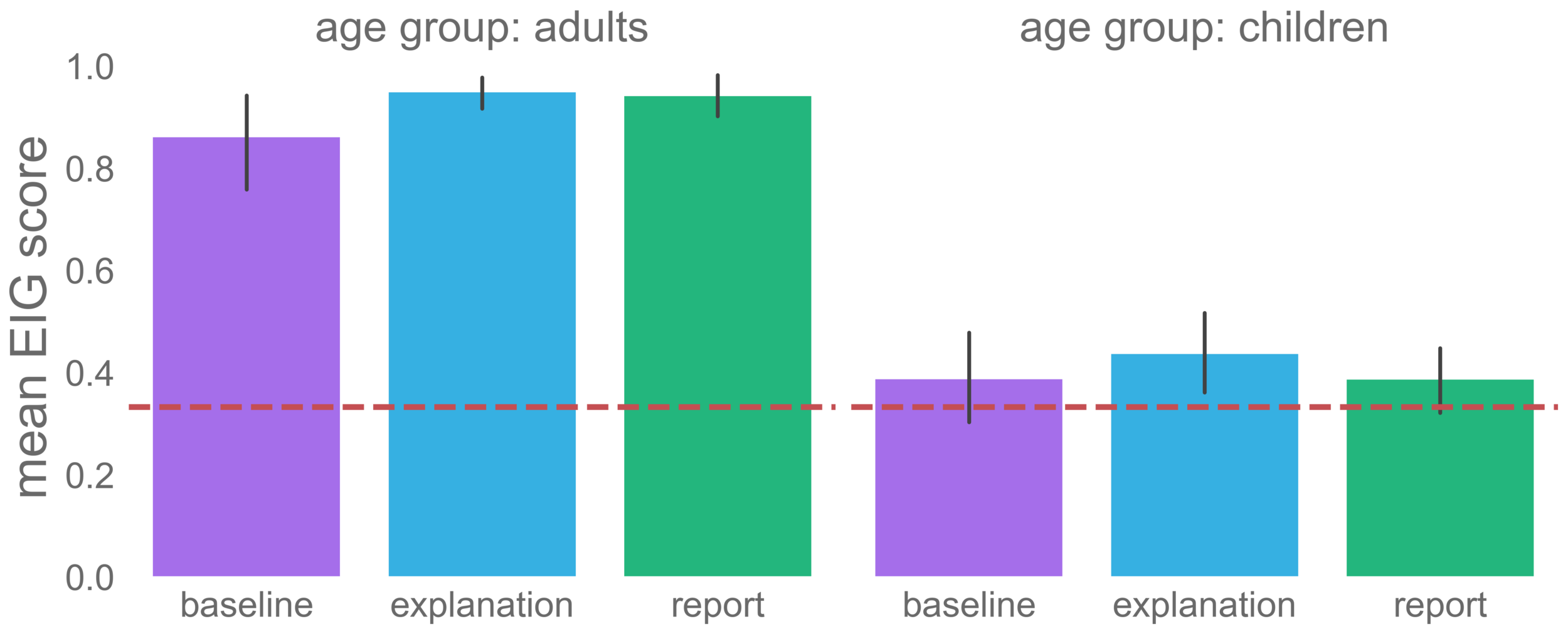

study 2

asked to explain

5- to 7-year-olds: n = 59 (explain); n = 58 (report)

adults: n = 30 (explain); n = 27 (report)

meng and xu (2019)

before choosing

"after deciding on which light bulb you wanna use, don't turn it on yet! point to it and I'll ask you…"

- explanation: "… why you choose that one to find the answer."

- report (control): "… which one you choose to find the answer."

afterwards

"cool, can you tell me…"

- explanation: "… why do you wanna use that light bulb to help?"

- report (control): "… which light bulb do you wanna use to help?"

if the choice changed, the last one was used for modeling

intervention strategy

tl;dr: explaining had no significant impact on intervention strategies

[figure: plotted values 0.86, 0.38, 0.95, 0.94, 0.44, 0.39]

baseline 👉 explanation 👉 report

0.76, 0.86, 0.84

0.24, 0.28, 0.21

learn from interventions

tl;dr: children who either explained or reported were able to learn from interventions

[figure: plotted values 0.91, 0.97, 0.94, 0.45, 0.80, 0.65]

*

*

*

why no effect on intervention strategies?

hypothesis: does the quality of explanations matter?

- explain an outcome: wrong explanations are likely still relevant to the task

- explain an intervention: requires recognizing uncertainty, simulating possible interventions + outcomes, and reassessing uncertainty after each intervention-outcome pair... 👉 wrong explanations don't target intervention selection at all

why no effect on intervention strategies?

hypothesis: does the quality of explanations matter?

- study older children who explain better

confounding!

intelligence, education...

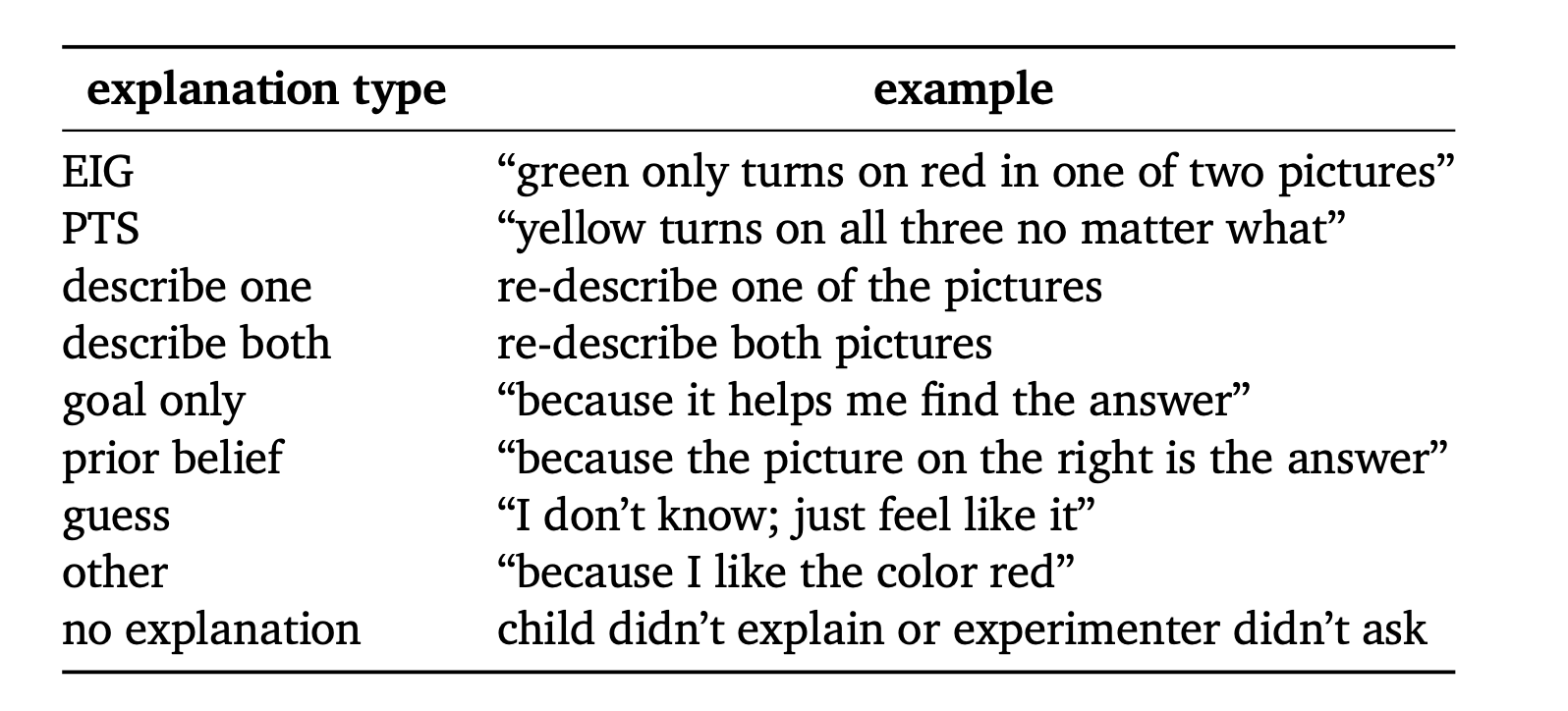

explanation type

intervention strategy

why no effect on intervention strategies?

hypothesis: does the quality of explanations matter?

- study older children who explain better

- train to generate EIG-based explanations 👉 does it change intervention strategies?

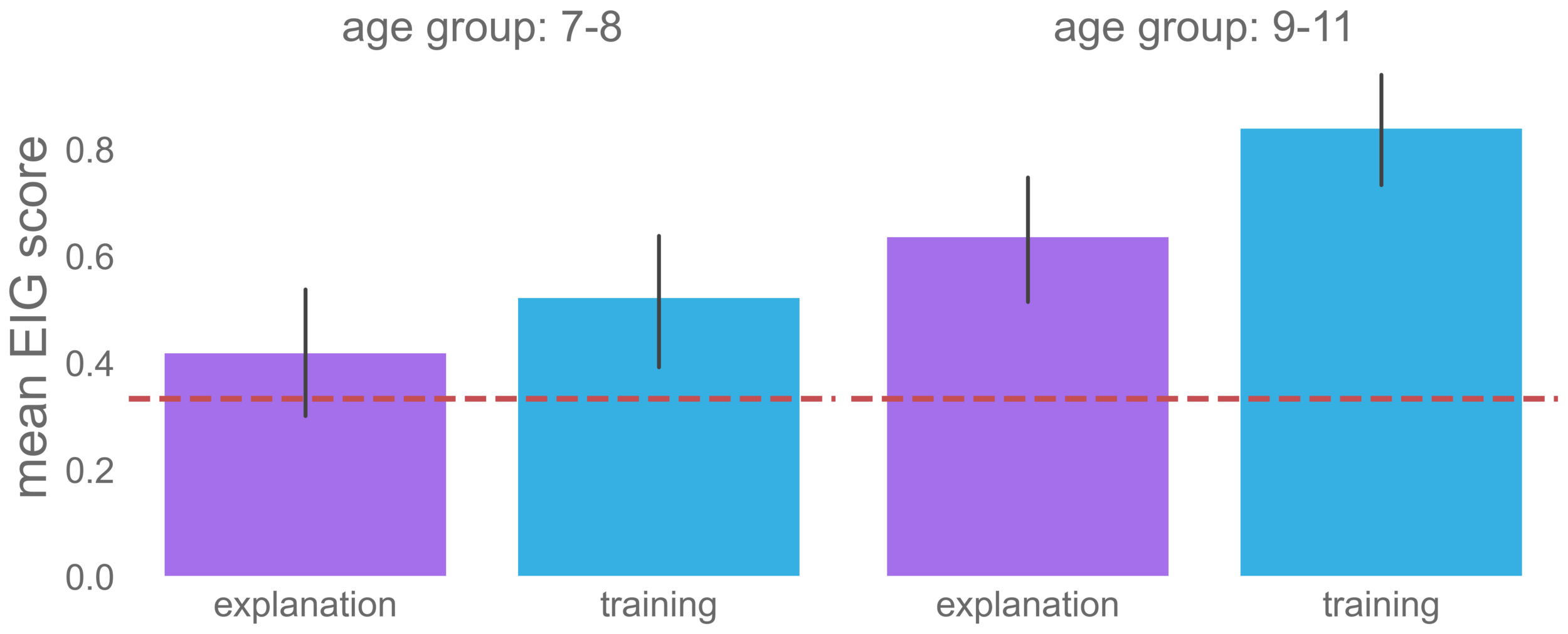

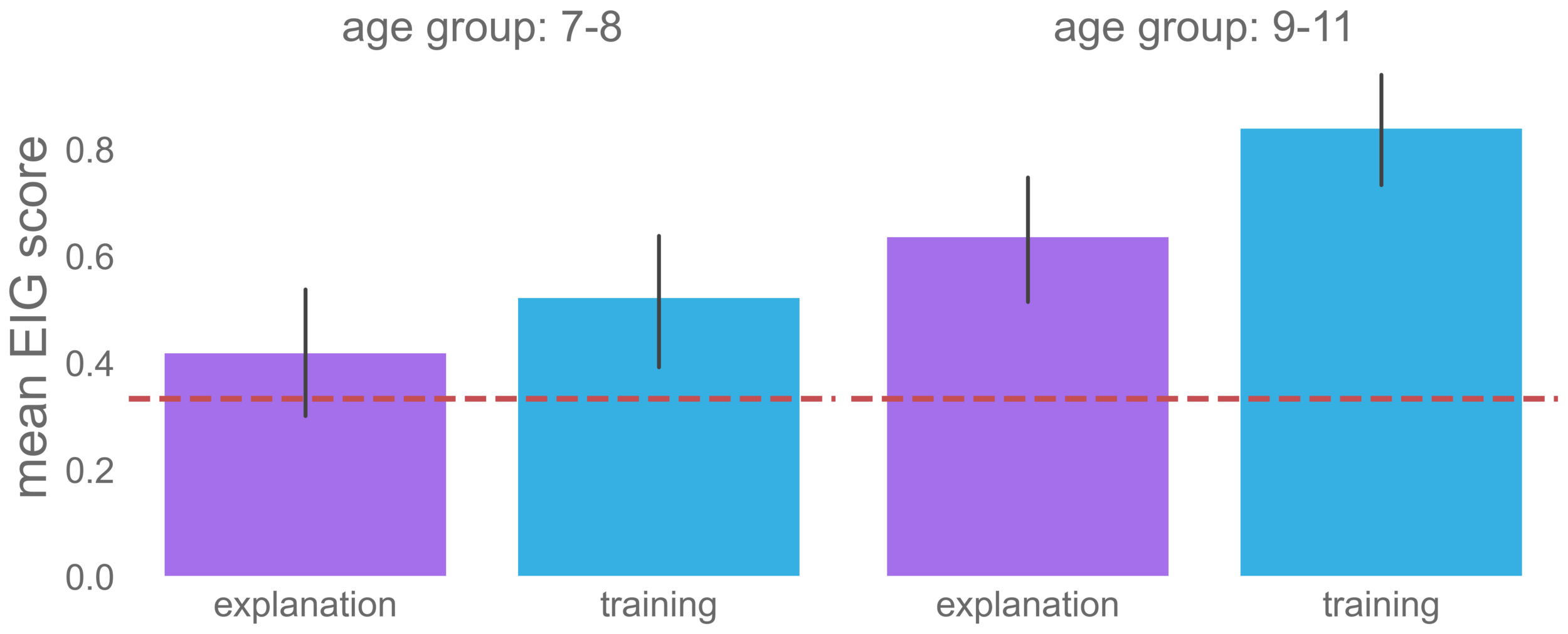

study 3

trained to explain

7- to 8-year-olds: n = 31 (explain), n = 27 (training)

9- to 11-year-olds: n = 30 (explain), n = 30 (training)

(control condition in progress)

same as in study 2

first trial as training

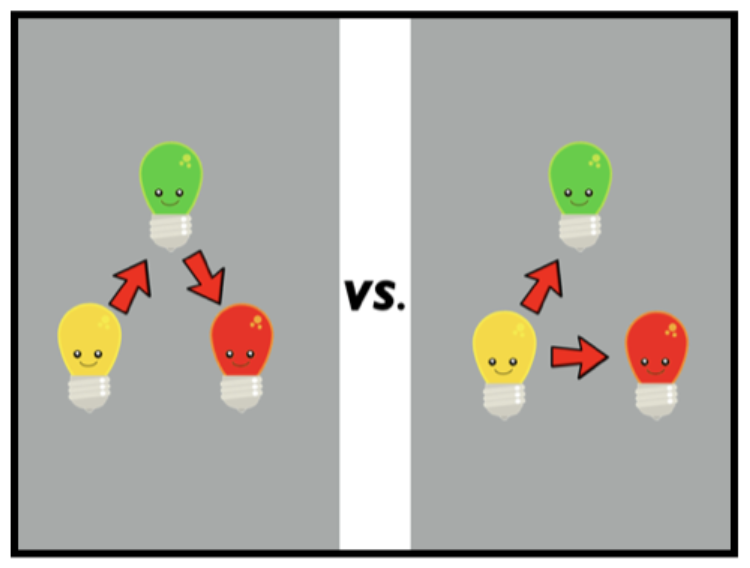

EIG training

"if alex turns on the {yellow, green, red} light bulb, can they find out the answer?" (wrong answers corrected)

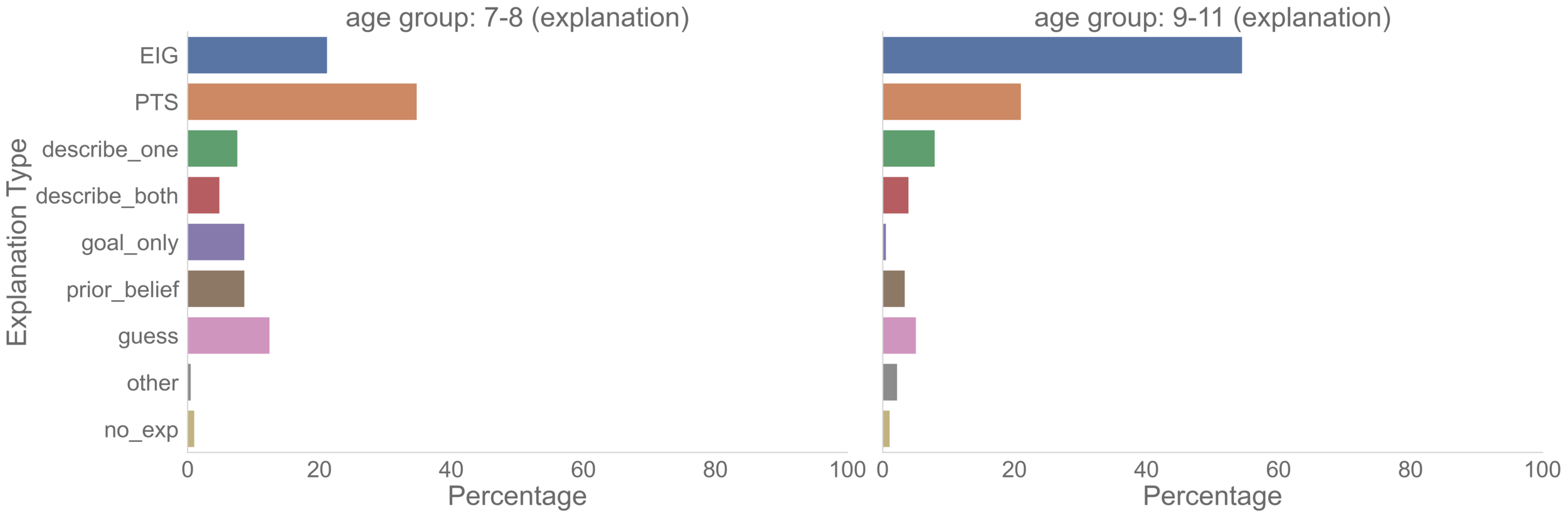

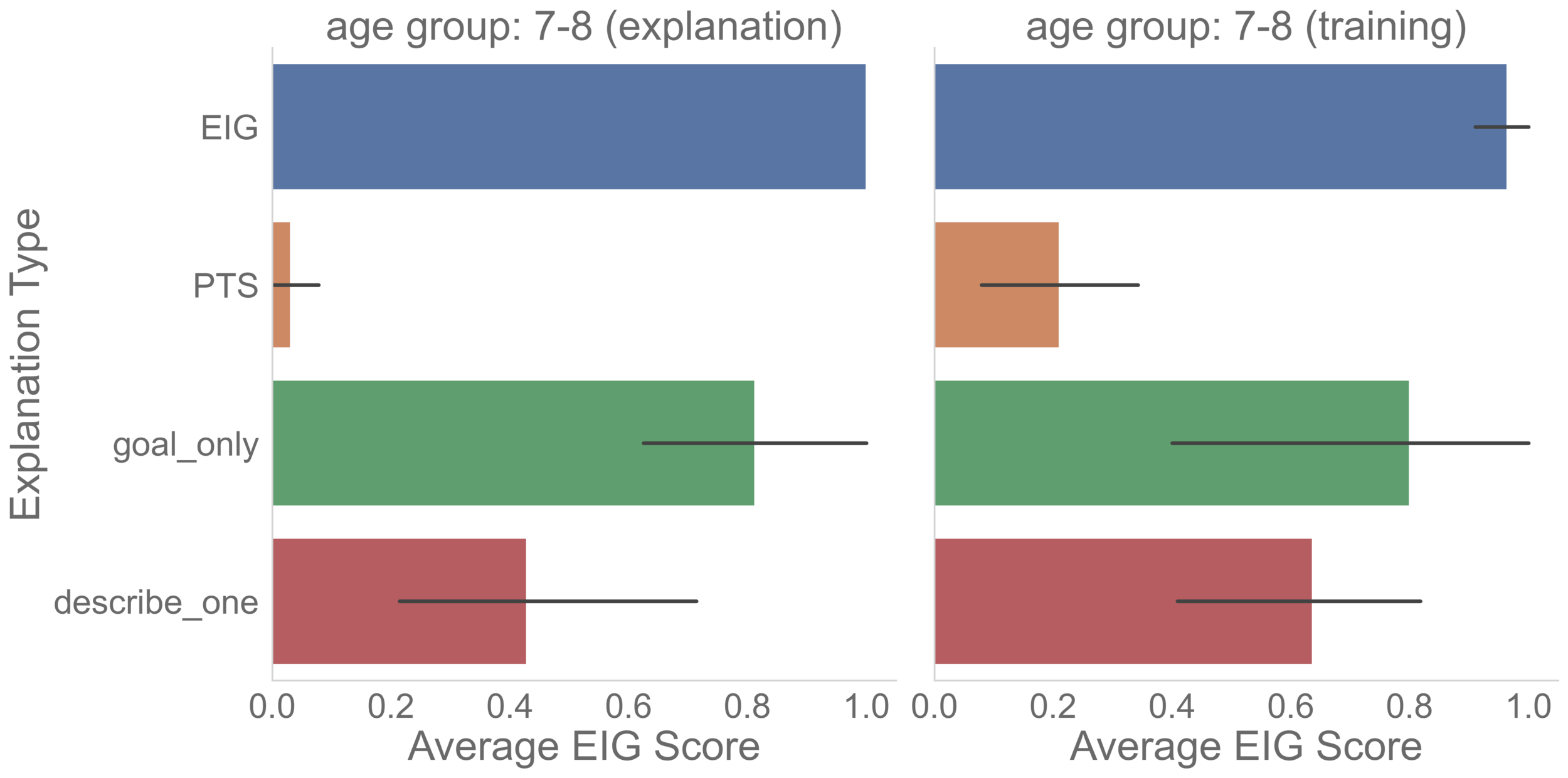

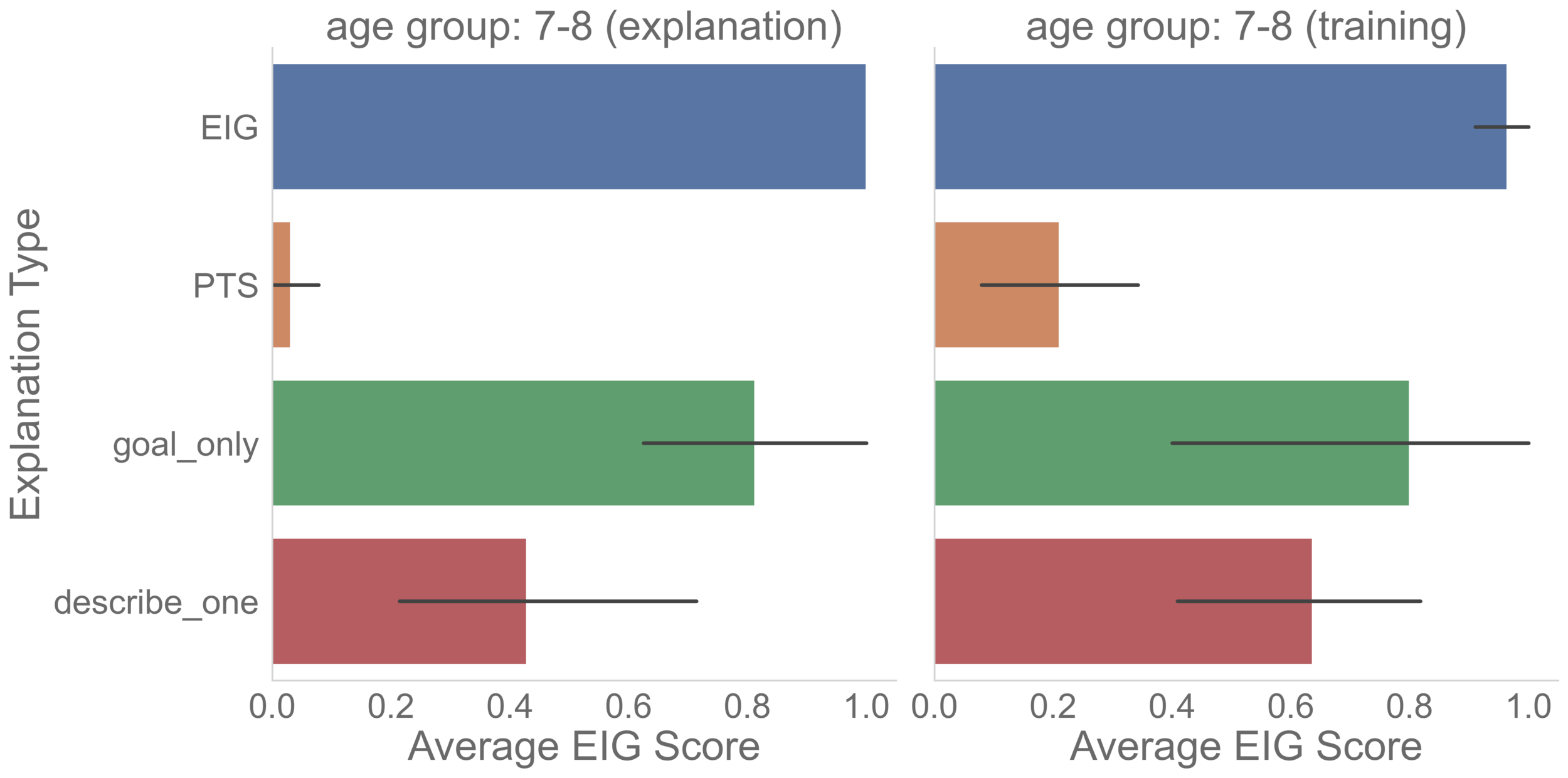

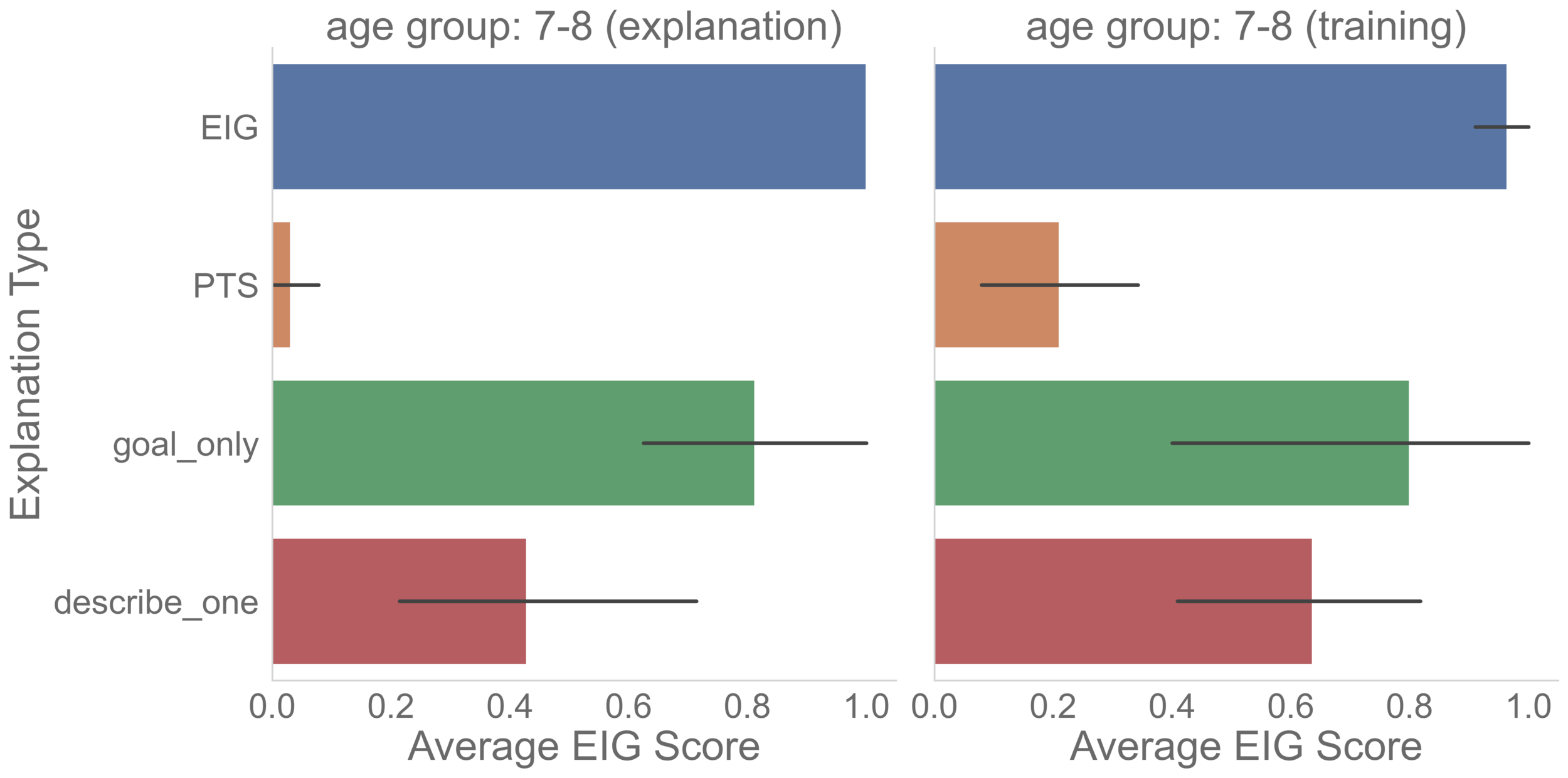

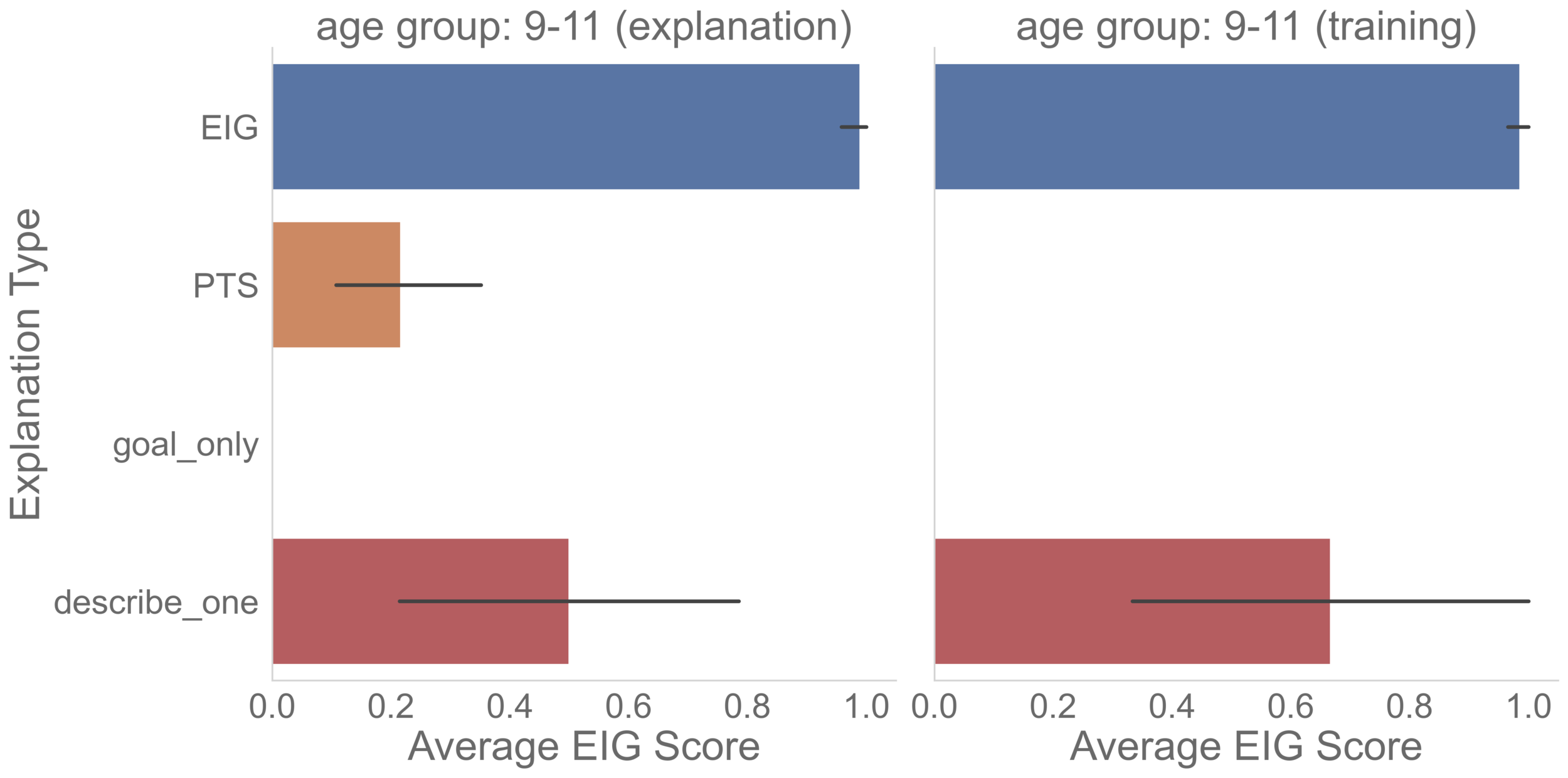

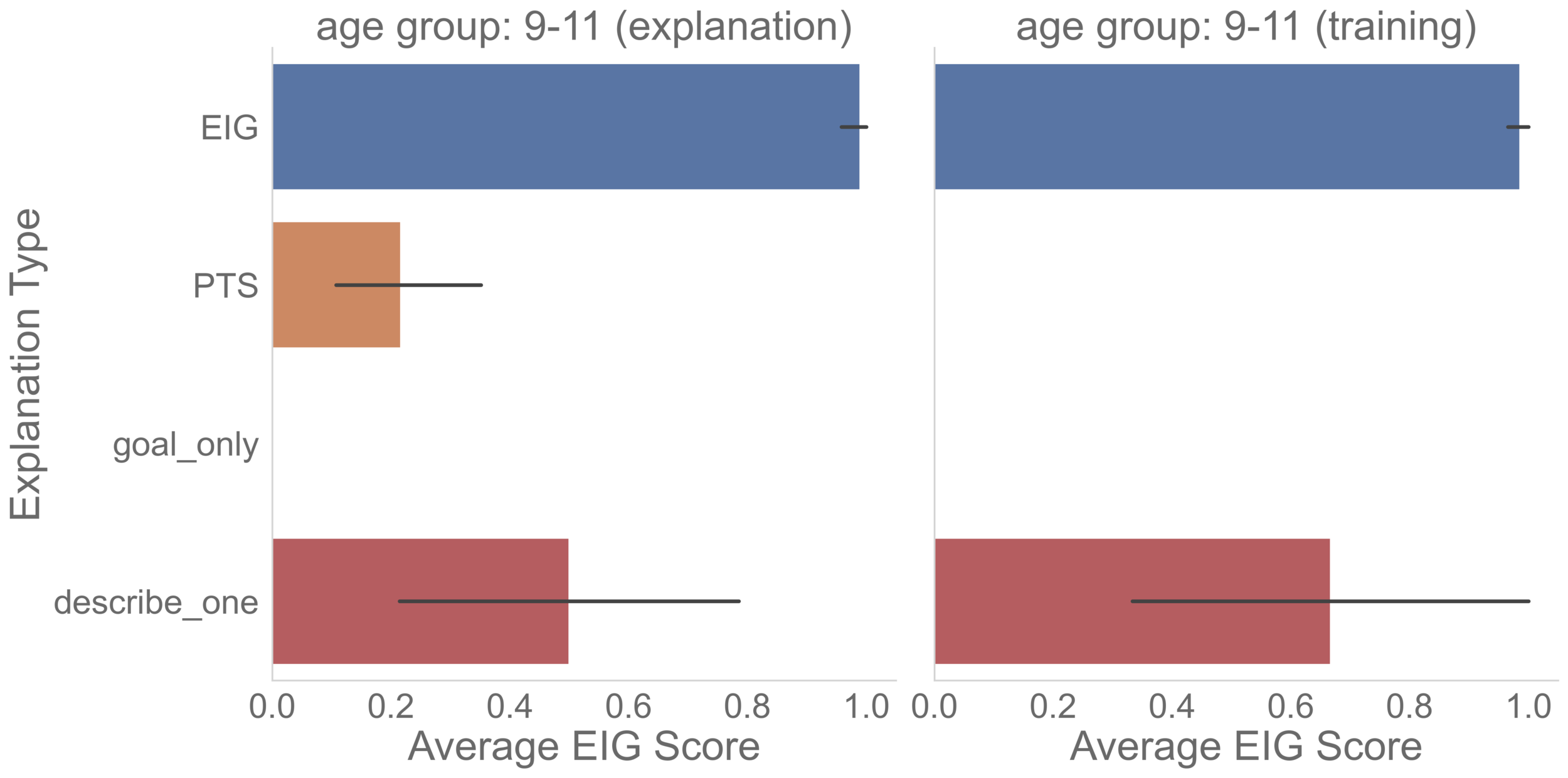

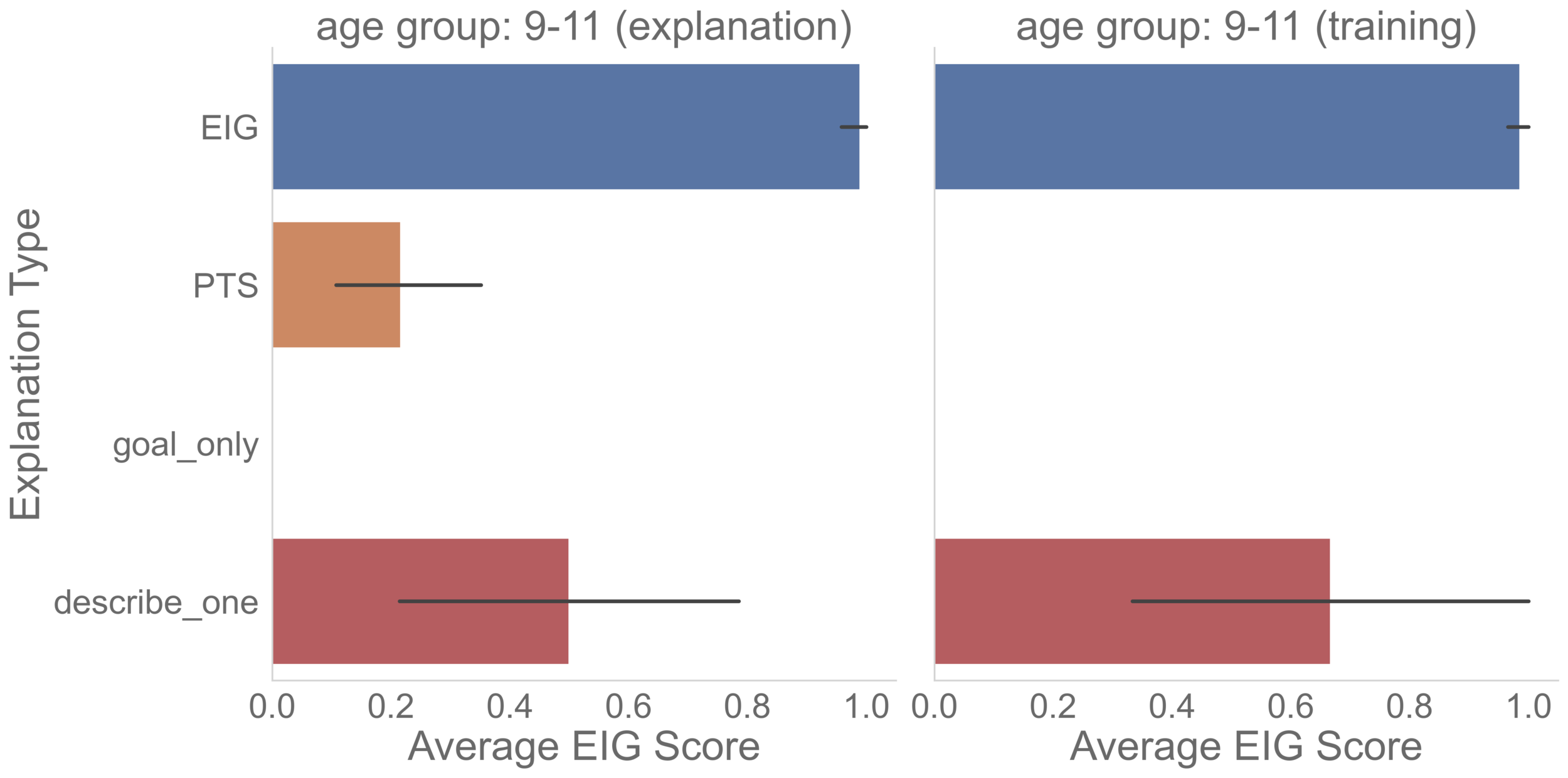

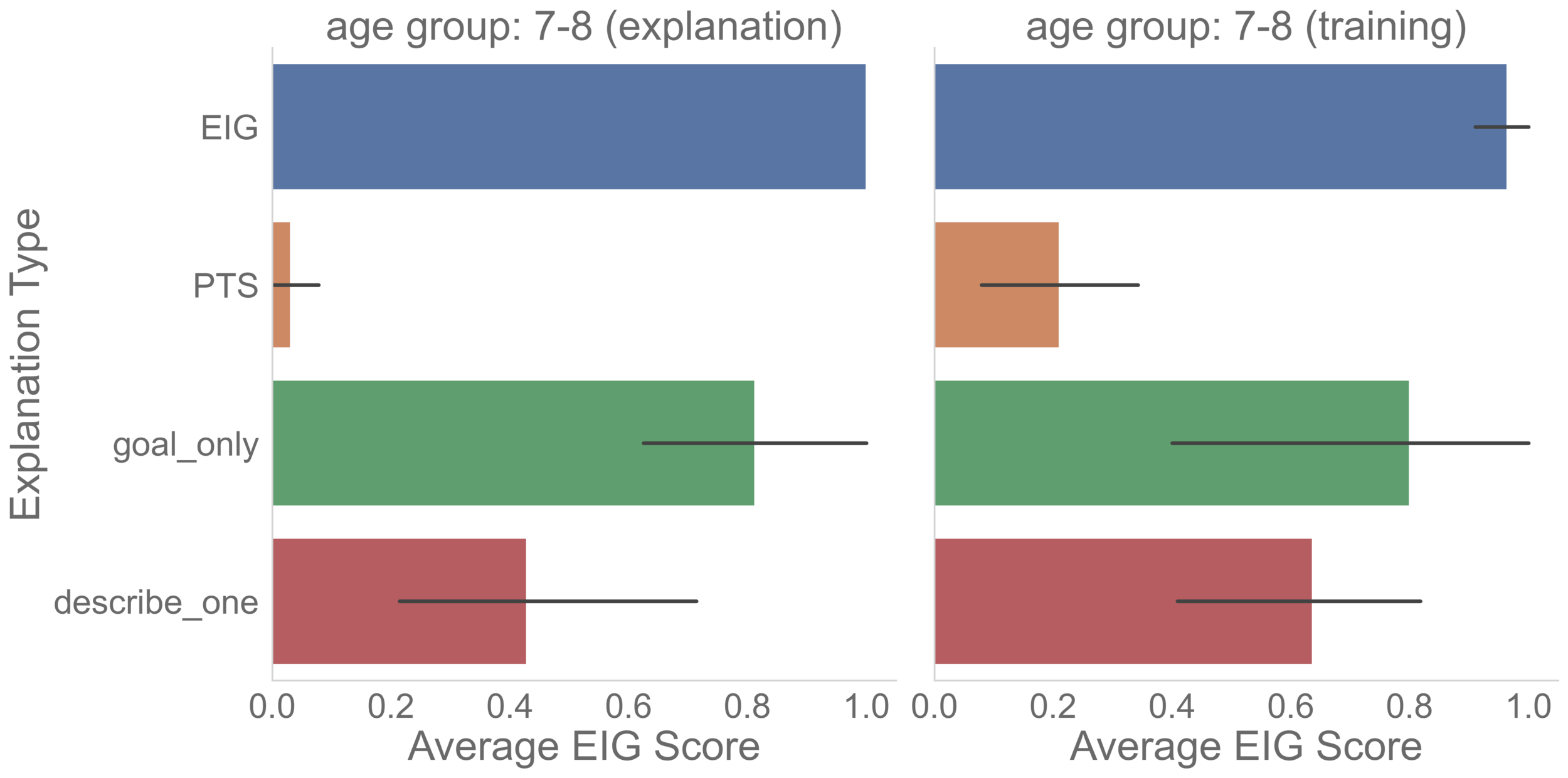

explanation condition: explanation types

tl;dr: without training, PTS was the most common explanation type in 7- to 8-year-olds and EIG in 9- to 11-year-olds

true rationale unknown

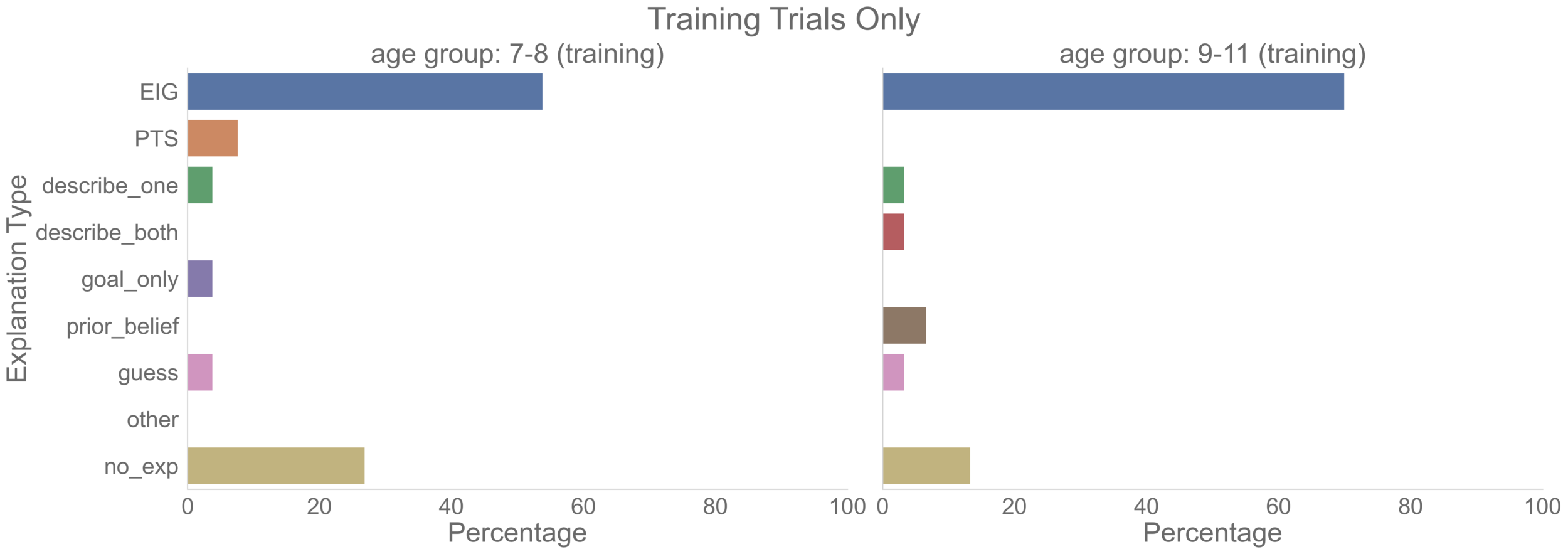

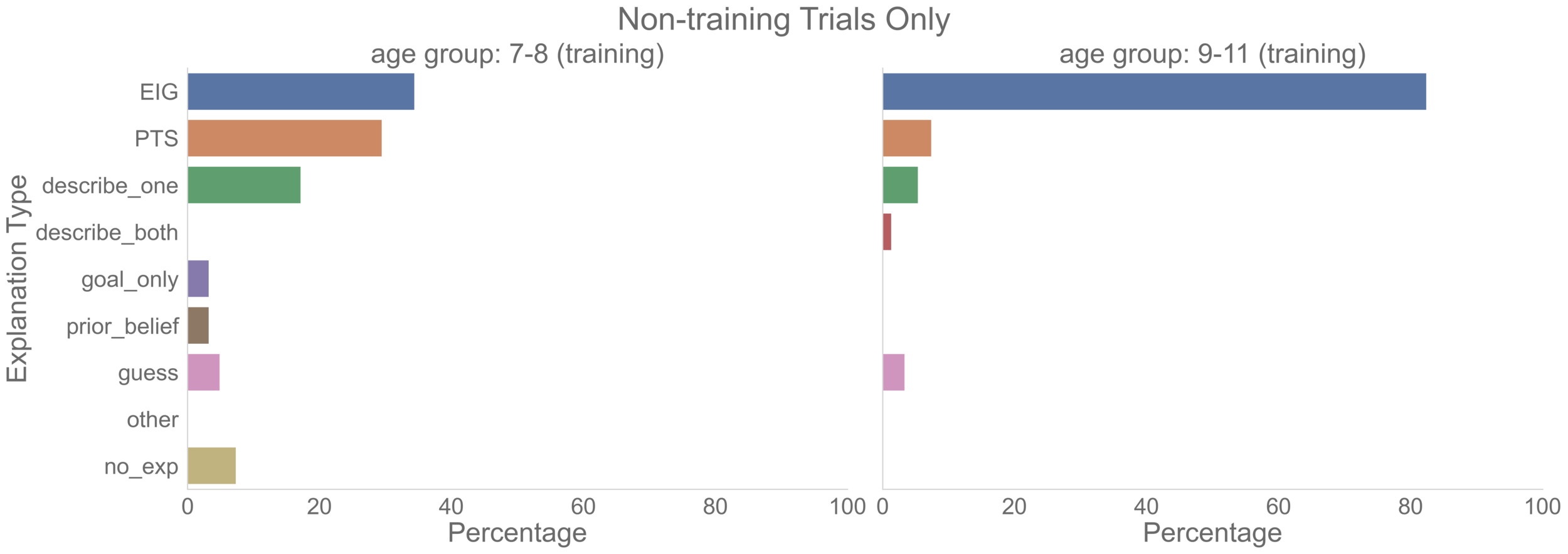

tl;dr: EIG was the dominant type during training; after training, it remained dominant only in 9- to 11-year-olds

training condition: explanation types

training trials (first puzzle)

non-training trials (last 5)

explanation condition

EIG score by explanation type

tl;dr: EIG-based explanations 👉 informative interventions; PTS-based explanations 👉 uninformative interventions

condition: explanation

condition: training

no 9- to 11-year-olds explained this way

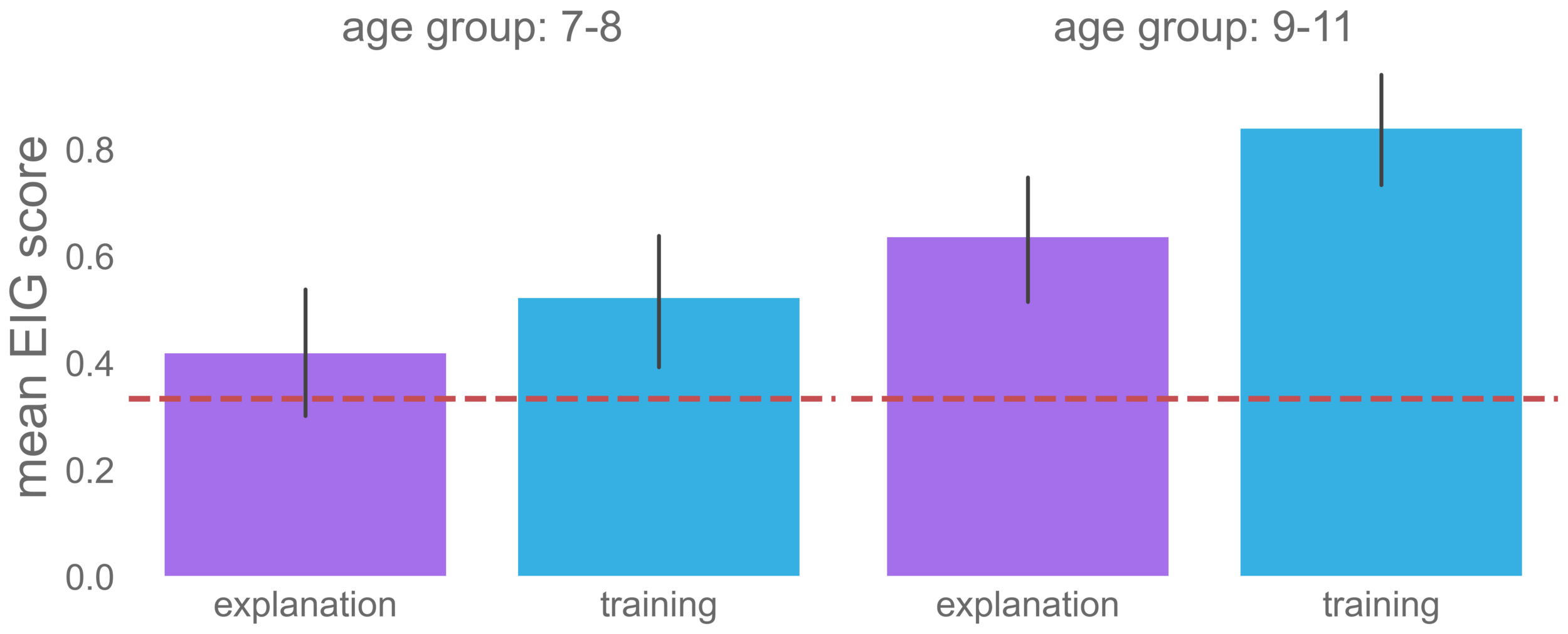

intervention strategy

tl;dr: 9- to 11-year-olds relied far more on EIG after training; 7- to 8-year-olds weren't affected as much

[figure: plotted values 0.44, 0.86, 0.64, 0.59]

on a par with adult baseline (study 1)

explanation 👉 training

0.26, 0.35

0.45, 0.71

*

*

(training trials excluded from all analyses)

*

learn from interventions

tl;dr: all children chose correct structures after informative interventions (EIG = 1) most of the time

[figure: plotted values 0.90, 0.89, 0.94, 0.95]

key findings

- no help (study 1: natural strategies) 👉 adults mainly used EIG vs. 5- to 7-year-olds mainly used PTS to select interventions

- "socratic method" (studies 2 & 3: ask to explain) 👉 did not change adults' or children's (5- to 11-year-olds) intervention strategies

- teach the principle (study 3: train to explain) 👉 brief training in EIG maximization led 9- to 11-year-olds to rely as much on EIG as adults

can explaining lead to better intervention?

6 years later...

thinking alone doesn't lead to better experimentation, but training the prepared mind to think might

open question: why use PTS at all?

- resource rationality (griffiths et al., 2015; lieder & griffiths, 2020)?

- quality: EIG takes fewer steps to find the answer than PTS

- cost: EIG requires more costly computations than PTS

- # of structures held in mind (costly computations): EIG: 4 vs. PTS: 1

future research: manipulate cost-quality balance

- can learners reach an optimal trade-off between computational cost and information gain?

explain the "inexplicable"

worst case 👉 test one edge at a time: 6 × 2 = 12 interventions

gonna be complicated...

each edge: 2 interventions at most

explain the "inexplicable"

open question: what does PTS really mean in causal learning?

- goals: make everything happen vs. make the same thing happen?

formalizations: 4 ways to define PTS

- proportion of links: max (y = g), mean (y < g)

- number of links: max (y > g), mean (y = g)
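The four formalizations differ only in the per-hypothesis measure (proportion vs. number of affected links) and in how scores are combined across hypotheses (max vs. mean). A sketch under stated assumptions: y and g are read as the yellow and green bulbs' scores, "links it can affect" means links downstream of the intervened bulb, and the two structures are made up. They are not the talk's puzzles, though they happen to produce the same four yellow/green orderings listed above, which shows how the variants can disagree on the same data.

```python
from itertools import product
from statistics import mean

# Hypothetical candidate structures: cause -> effect links among three bulbs.
HYPOTHESES = {
    "chain": {("yellow", "green"), ("green", "red")},
    "green_to_red": {("green", "red")},
}


def affected_links(bulb, links):
    """Links whose cause is `bulb` or one of its descendants (assumed reading)."""
    reached, frontier = {bulb}, [bulb]
    while frontier:
        current = frontier.pop()
        for cause, effect in links:
            if cause == current and effect not in reached:
                reached.add(effect)
                frontier.append(effect)
    return {(cause, effect) for cause, effect in links if cause in reached}


def pts(bulb, hypotheses, measure, aggregate):
    """measure: 'proportion' or 'number' of links; aggregate: max or mean across hypotheses."""
    per_hypothesis = []
    for links in hypotheses.values():
        n = len(affected_links(bulb, links))
        per_hypothesis.append(n / len(links) if measure == "proportion" else n)
    return aggregate(per_hypothesis)


def compare(a, b):
    return "=" if a == b else ("<" if a < b else ">")


RESULTS = {}
for measure, aggregate in product(("proportion", "number"), (max, mean)):
    y = pts("yellow", HYPOTHESES, measure, aggregate)
    g = pts("green", HYPOTHESES, measure, aggregate)
    RESULTS[(measure, aggregate.__name__)] = compare(y, g)
    print(f"{measure}, {aggregate.__name__}: y {compare(y, g)} g")
```

Fitting each variant to human choices, as proposed below, is what would decide among them; the toy only shows that they can rank the same interventions differently.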

make everything happen? e.g., "yellow is most useful because it may turn on every single one."

make same thing happen? e.g., "green always goes to red, so it helps in both examples."

future research: unpack PTS

- personalization: model PTS according to PTS explanations given

- model comparison: fit different formalizations to human data

examples of PTS-based explanations

[figure: scores 1 (0.5), 1 (1), 2 (1), 0 (0), 0 (0), 0 (0)]

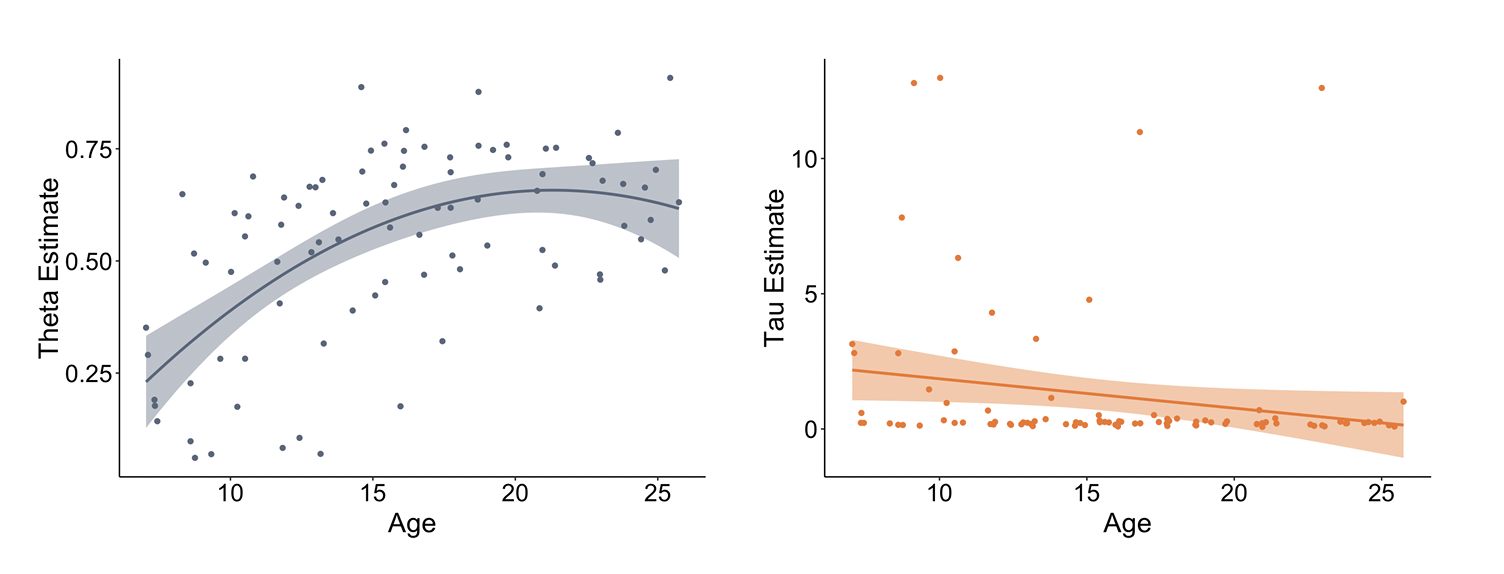

explain the "inexplicable"

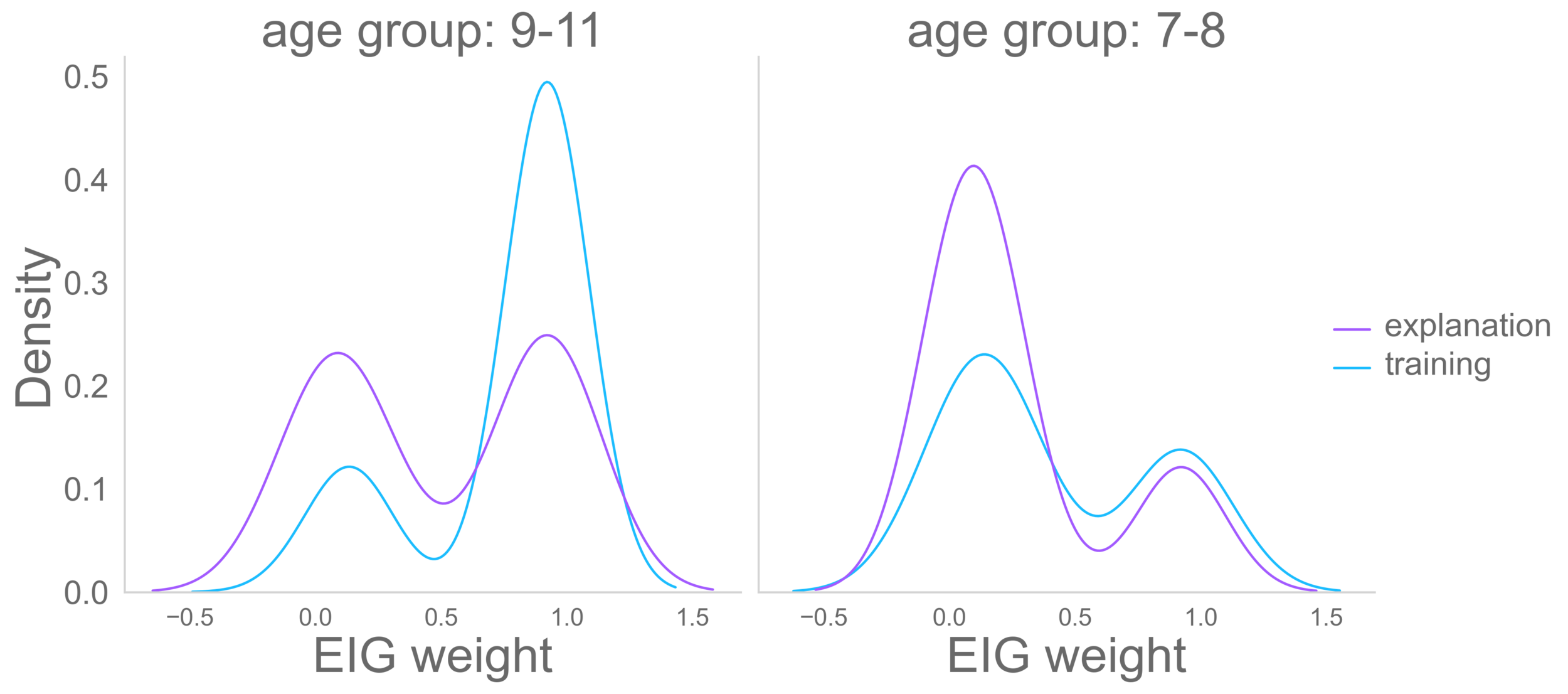

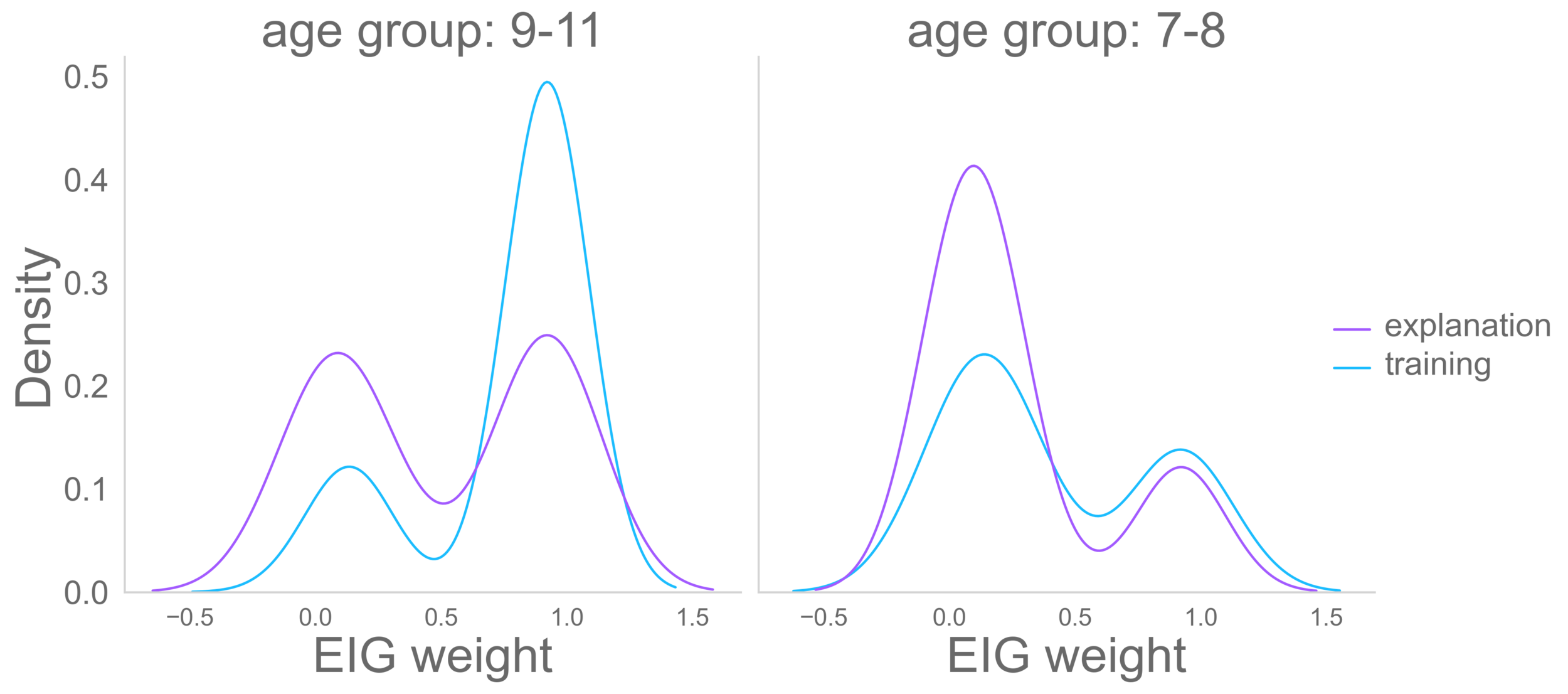

open question: why did older children benefit more from training?

- EIG training may require readiness in counterfactual reasoning, working memory, etc.

nussenbaum et al. (2020)

[figure: EIG weight and decision noise; ~0.38]

future research: more elaborative training for younger children?

- train children to think beyond why, to what if, why else... (nyhout et al., 2019; engle & walker, 2021)

acknowledgments

- committee: fei xu, alison gopnik, mahesh srinivasan, neil bramley

- labmates: rongzhi liu, ruthe foushee, shaun o'grady, elena luchkina, stephanie alderete, roya baharloo, kaitlyn tang, emma roth, gwyneth heuser, harmonie strohl, phyllis lun...

- research assistants & interns: eliza huang, anqi li, sophie peeler, shengyi wu, jessamine li, selena cheng, chelsea leung, nina li, stella rue...

- for your kindness: silvia bunge, julie aranda

"it is the usual fate of mankind to get things done in some boggling way first, and find out afterward how they could have been done much more easily and perfectly."

— charles s. peirce (1882)

the phd grind

"i procrastinated more than i had ever done in my life thus far: i watched lots of tv shows, took many naps, and wasted countless hours messing around online.

unlike my friends with nine-to-five jobs, there was no boss to look over my shoulder day to day, so i let my mind roam free without any structure in my life.

having full intellectual freedom was actually a curse, since i was not yet prepared to handle it." (pp. 16-17)

"so, was it fun?

i'll answer using another f-word: it was fun at times, but more importantly, it was fulfilling.

fun is often frivolous, ephemeral, and easy to obtain, but true fulfillment comes only after overcoming significant and meaningful challenges." (p. 107)

well into my 3rd year: hooked by data science 👉 re-realized the coolness & importance of self-supervised learning + bayes + information theory... or just scientific computing in general (post on data science)

questions?
