Polar Alignment and Primal Retrieval

Zhenan Fan

Department of Computer Science

Collaborators:

Huang Fang, Yifan Sun, Halyun Jeong, Michael Friedlander

Atomic Decomposition

[Chen, Donoho & Saunders'01; Chandrasekaran et al.'12]

- sparse n-vectors

- low-rank matrices

How do we identify the support of a vector x with respect to an arbitrary atomic set A?

cardinality

atomic set

weight

atom

Gauge and Support Functions

Gauge function

Support function
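
As an illustration of the two definitions, the sketch below evaluates the gauge and the support function for one concrete atomic set, the signed standard basis vectors A = {±e_1, ..., ±e_n}. For this set the gauge is the 1-norm and the support function is the infinity-norm. The function names `gauge_l1` and `support_l1` are hypothetical and chosen only for this example; the support function is also computed by brute force over the atoms to mirror its definition as a maximum of inner products.

```python
import numpy as np

def gauge_l1(x):
    """Gauge of x w.r.t. A = {+-e_i}: the 1-norm of x (assumed atomic set)."""
    return float(np.sum(np.abs(x)))

def support_l1(z):
    """Support function of the same A: max over atoms a of <a, z>."""
    n = z.shape[0]
    atoms = np.vstack([np.eye(n), -np.eye(n)])  # rows are the 2n atoms
    return float(np.max(atoms @ z))

x = np.array([3.0, 0.0, -1.0])
z = np.array([2.0, 0.5, -2.0])

g = gauge_l1(x)    # 4.0
s = support_l1(z)  # 2.0, equal to max |z_i|
print(g, s)
```

Enumerating the atoms is only practical for small finite sets; for the 1-norm case the closed form `np.max(np.abs(z))` gives the same value.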

Polar Alignment

Polar inequality

Alignment

Theorem

Examples

Sparse vector
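
For the sparse-vector case, alignment can be checked numerically. The sketch below assumes the atomic set of signed standard basis vectors, so the polar inequality reads ⟨x, z⟩ ≤ ‖x‖₁ ‖z‖∞, and x is aligned with z when equality holds. That happens exactly when the support of x lies inside the set of indices where |z_i| is maximal and the signs of x and z agree there. The helper name `is_aligned_l1` is hypothetical.

```python
import numpy as np

def is_aligned_l1(x, z, tol=1e-10):
    """Check <x, z> == ||x||_1 * ||z||_inf up to a relative tolerance."""
    lhs = float(x @ z)
    rhs = float(np.sum(np.abs(x)) * np.max(np.abs(z)))
    return abs(lhs - rhs) <= tol * max(1.0, abs(rhs))

z = np.array([2.0, 0.5, -2.0])           # |z_i| is maximal at indices 0 and 2

x_aligned = np.array([3.0, 0.0, -1.0])   # supported on {0, 2}, signs match z
x_other = np.array([3.0, 1.0, 0.0])      # puts weight on index 1, where |z_1| < 2

print(is_aligned_l1(x_aligned, z))  # True:  <x, z> = 8   = 4 * 2
print(is_aligned_l1(x_other, z))    # False: <x, z> = 6.5 < 4 * 2
```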

Low-rank Matrix

X has rank r

largest singular value of Z has multiplicity d
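
The matrix analogue can be sketched the same way, assuming the atomic set of unit-norm rank-one matrices, for which the gauge is the nuclear norm and the support function is the spectral norm. In the example, Z is built so that its largest singular value has multiplicity d = 3, and X has rank r = 2 with singular vectors taken from the leading singular subspaces of Z. The sizes, singular values, and random seed are arbitrary choices for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)
n, r, d = 6, 2, 3

# Shared orthonormal bases for the left and right singular vectors.
U, _ = np.linalg.qr(rng.standard_normal((n, n)))
V, _ = np.linalg.qr(rng.standard_normal((n, n)))

# Z: largest singular value 1.0 with multiplicity d.
sz = np.array([1.0, 1.0, 1.0, 0.4, 0.2, 0.1])
Z = U @ np.diag(sz) @ V.T

# X: rank r, singular vectors inside the top-d singular subspaces of Z.
sx = np.array([5.0, 2.0, 0.0, 0.0, 0.0, 0.0])
X = U @ np.diag(sx) @ V.T

# X_off: same singular values, but placed outside the top-d subspaces.
sx_off = np.array([0.0, 0.0, 0.0, 5.0, 2.0, 0.0])
X_off = U @ np.diag(sx_off) @ V.T

def alignment_gap(X, Z):
    """||X||_* * ||Z||_2 - <X, Z>; nonnegative, zero iff X and Z are aligned."""
    return np.linalg.norm(X, "nuc") * np.linalg.norm(Z, 2) - np.sum(X * Z)

print(alignment_gap(X, Z))      # close to 0: aligned
print(alignment_gap(X_off, Z))  # strictly positive: not aligned
```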

Alignment in Structured Optimization

(P1)

(P2)

(P3)

Theorem

(y* is the same as the optimal dual variable, up to proper scaling.)

Extension to Sum of Sets

Theorem

(P1)

(P2)

(P3)

Cardinality-Constrained Data-Fitting

Assumption

(P)

is feasible to (P)

Dual problem

Goal: retrieve a primal variable that is near-feasible for (P) from a near-optimal dual variable

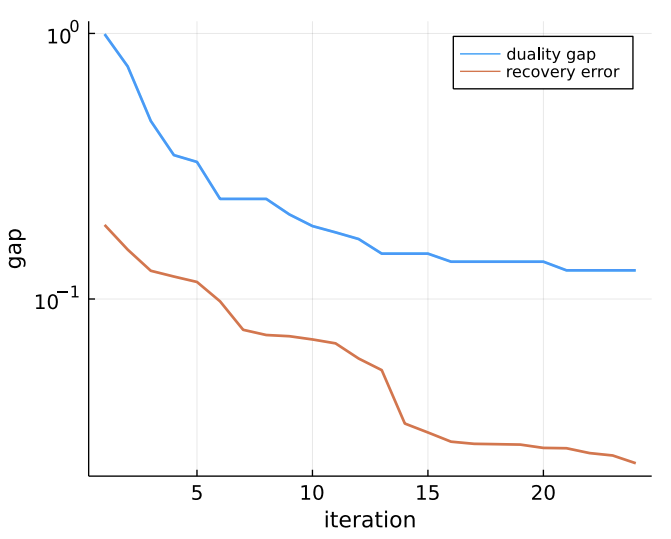

Primal Retrieval

Essential Cone of Atoms

Primal retrieval

(PR)

Key Idea

(PR) is easy to solve when k is small

is feasible to (P)

Polyhedral Atomic Set

(PR)

can be removed when A is symmetric
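
The retrieval idea can be illustrated with a minimal sketch under strong simplifying assumptions, none of which come from the slides: the atoms are the signed standard basis vectors, the data-fitting term is least squares with a linear map `M`, the k atoms kept are those with the largest |(Mᵀy)_i|, and no sign constraint is placed on the coefficients. The function `retrieve_primal` and the toy data are hypothetical. In the toy problem `M` has orthonormal columns and the vector `y` is simply set to `b`, which stands in for a dual estimate purely so that the selected atoms are easy to verify.

```python
import numpy as np

def retrieve_primal(M, b, y, k):
    """Hypothetical helper: least squares restricted to the k atoms
    whose correlation |(M^T y)_i| with the dual estimate y is largest."""
    z = M.T @ y
    idx = np.sort(np.argsort(-np.abs(z))[:k])    # indices of the k kept atoms
    coef, *_ = np.linalg.lstsq(M[:, idx], b, rcond=None)
    x = np.zeros(M.shape[1])
    x[idx] = coef                                # embed back into R^n
    return x, idx

rng = np.random.default_rng(0)
M, _ = np.linalg.qr(rng.standard_normal((20, 8)))  # 20 x 8, orthonormal columns

x0 = np.zeros(8)
x0[[1, 5]] = [2.0, -3.0]   # 2-sparse ground truth
b = M @ x0

y = b                      # stand-in for a near-optimal dual variable (assumption)
x_hat, idx = retrieve_primal(M, b, y, k=2)
print(idx, np.linalg.norm(M @ x_hat - b))
```

The restricted problem has only k unknowns, which is why it stays cheap when k is small regardless of the ambient dimension.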

Theorem

Suppose the primal problem is non-degenerate

(duality gap)

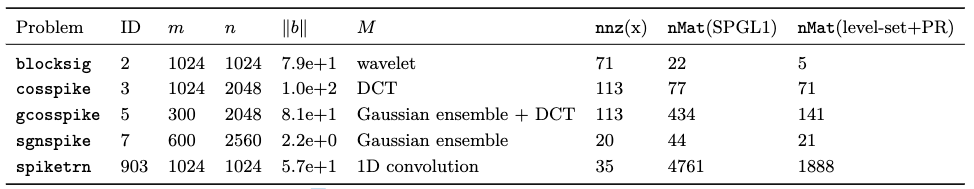

Experiment: Basis Pursuit Denoise

(P)

Test problems from Sparco [van den Berg et al.'09]

Spectral Atomic Set

(PR)

Theorem

Experiment: Low-Rank Matrix Completion

(P)

Similar experiment to that in [Candès & Plan'10]

(from the National Centers for Environmental Information)

is approximately low-rank

We subsample 50% of
