Omnibenchmark:

Open, continuous and collaborative benchmarking

Workshop BC²

Basel, 11.09.23

Benchmarking:

Why benchmarking and why open, collaborative and continuous benchmarking?

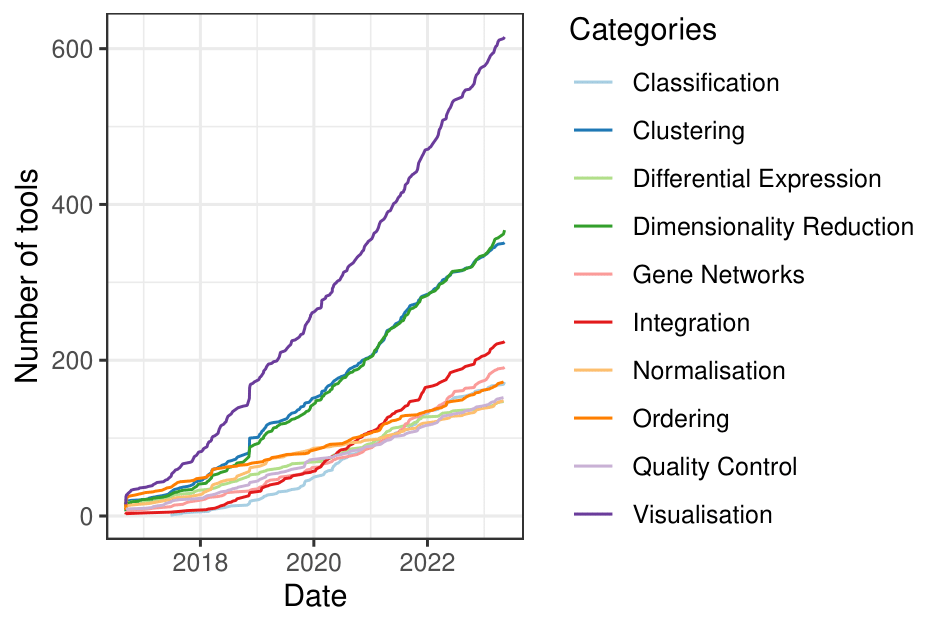

Selecting appropriate computational methods is challenging

https://www.scrna-tools.org

"Non-neutral" benchmarks:

The self-assessment trap

1. cms_default
2. cms_kmin
3. lisi

Lütge et al., 2020

Norel et al., 2011

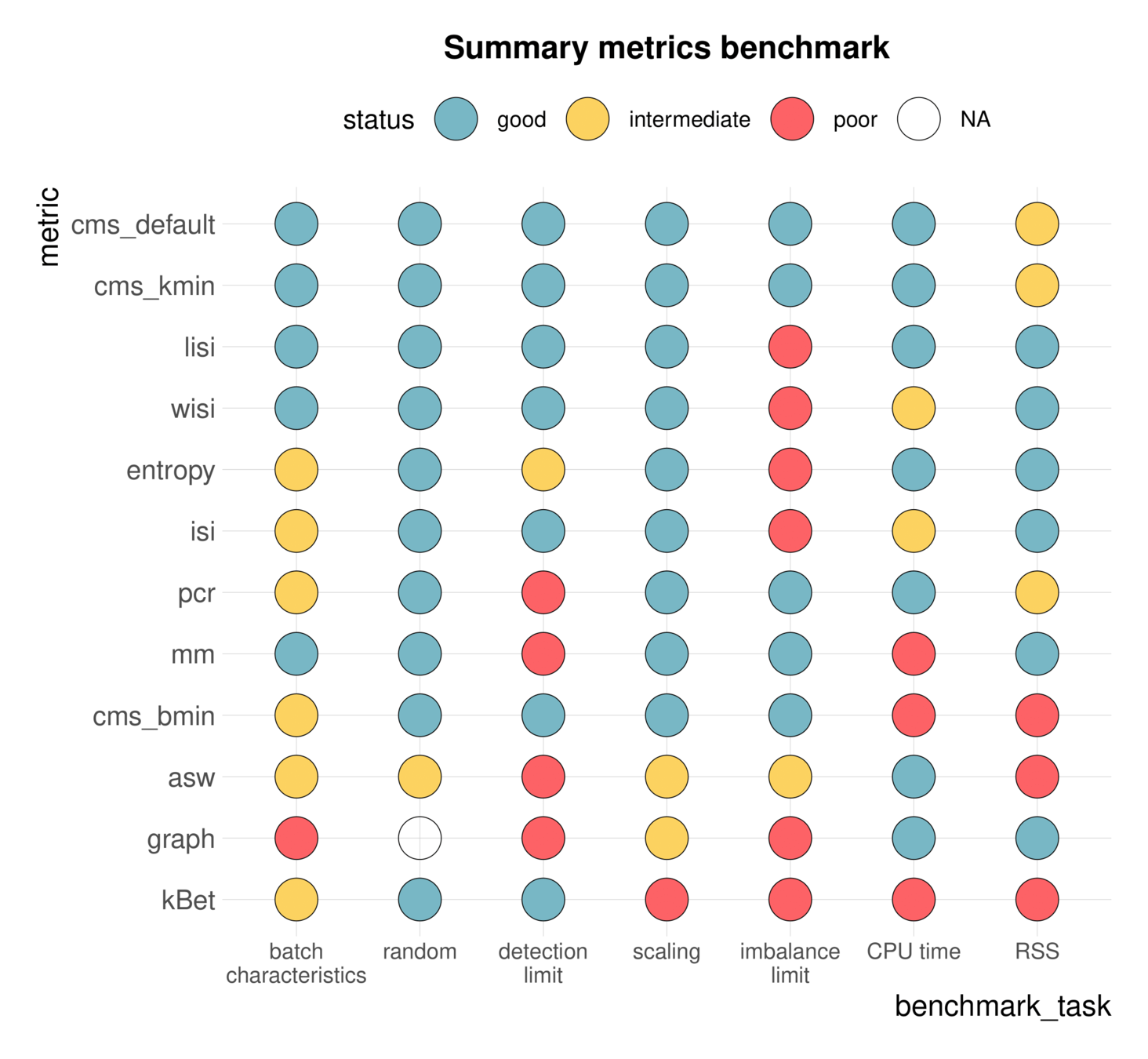

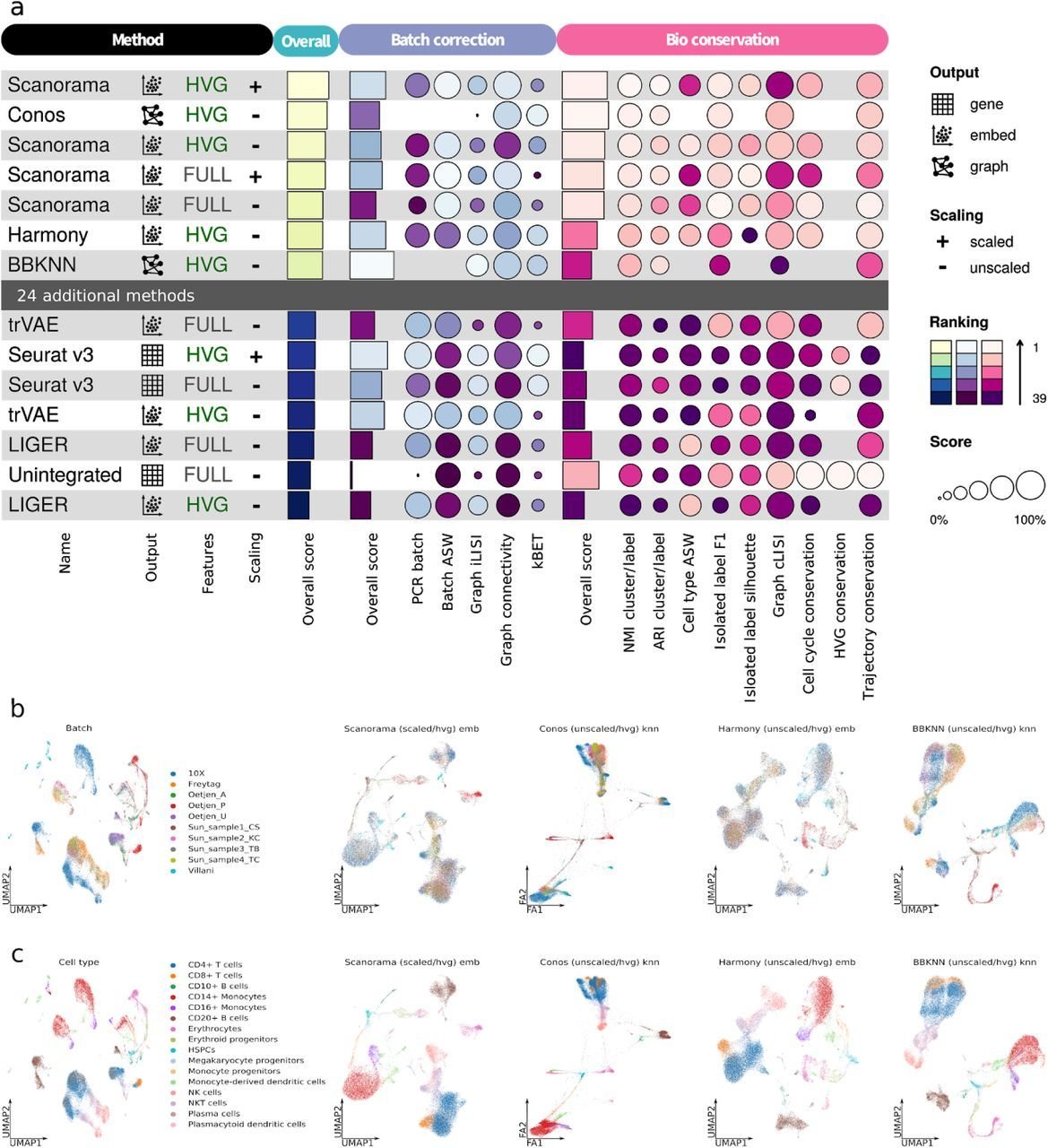

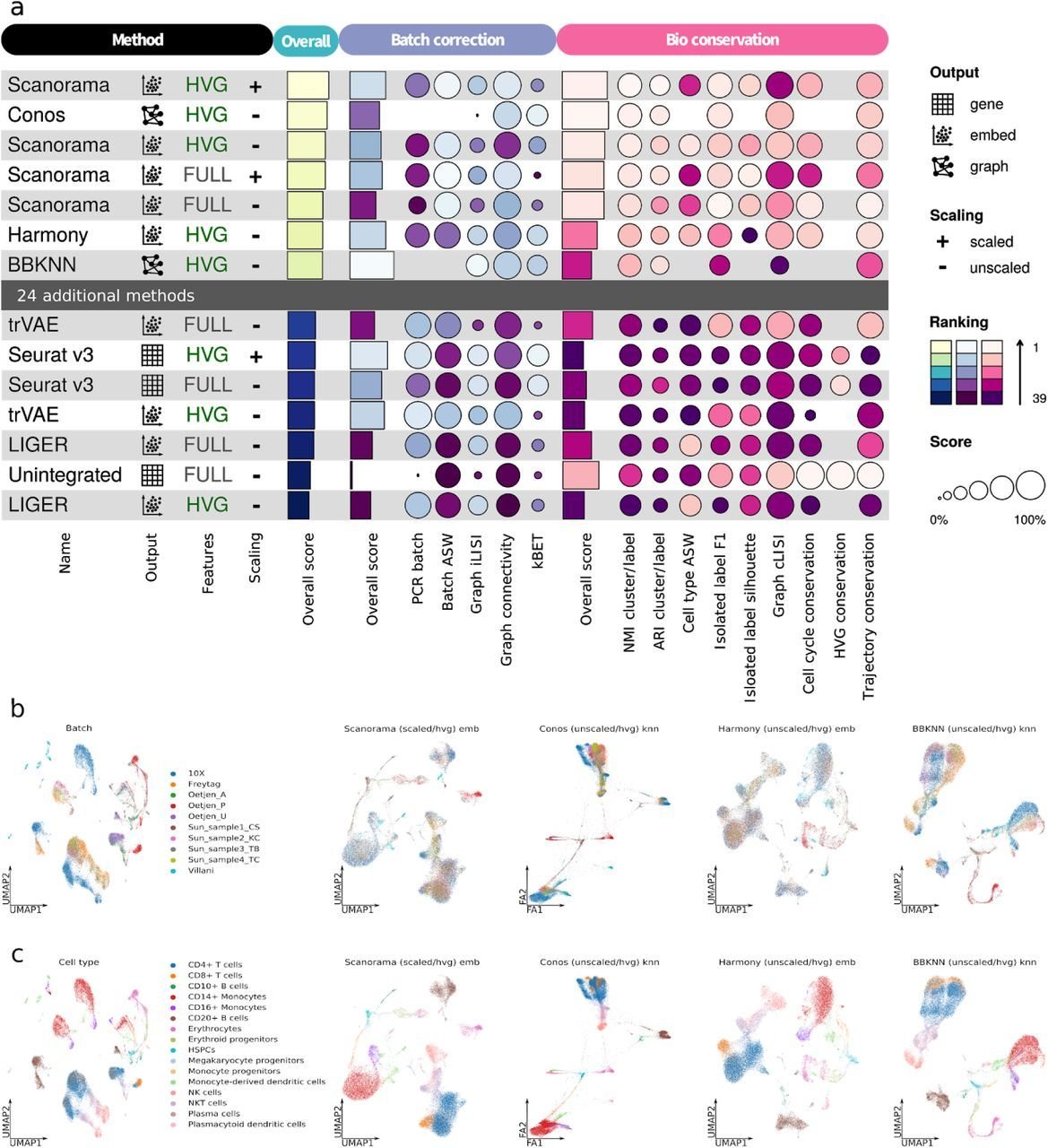

Benchmarking results can be ambiguous

Luecken et al., 2021

Different benchmarks, different conclusions

- Scanorama

- Conos

- Harmony

- Limma

- Combat

- Liger

- Seurat

- Harmony


- Seurat

- Harmony

- Scanorama

- Liger

- TrVAE

- Seurat

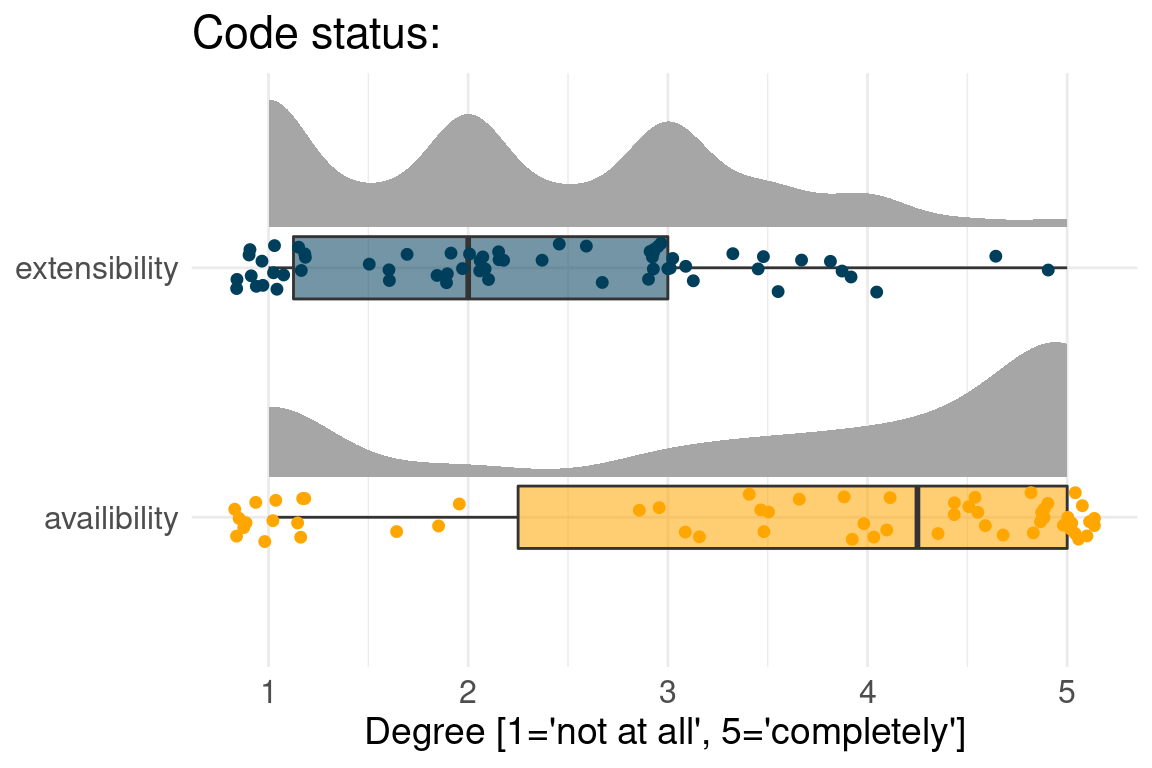

Status quo:

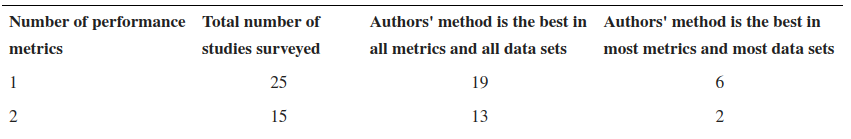

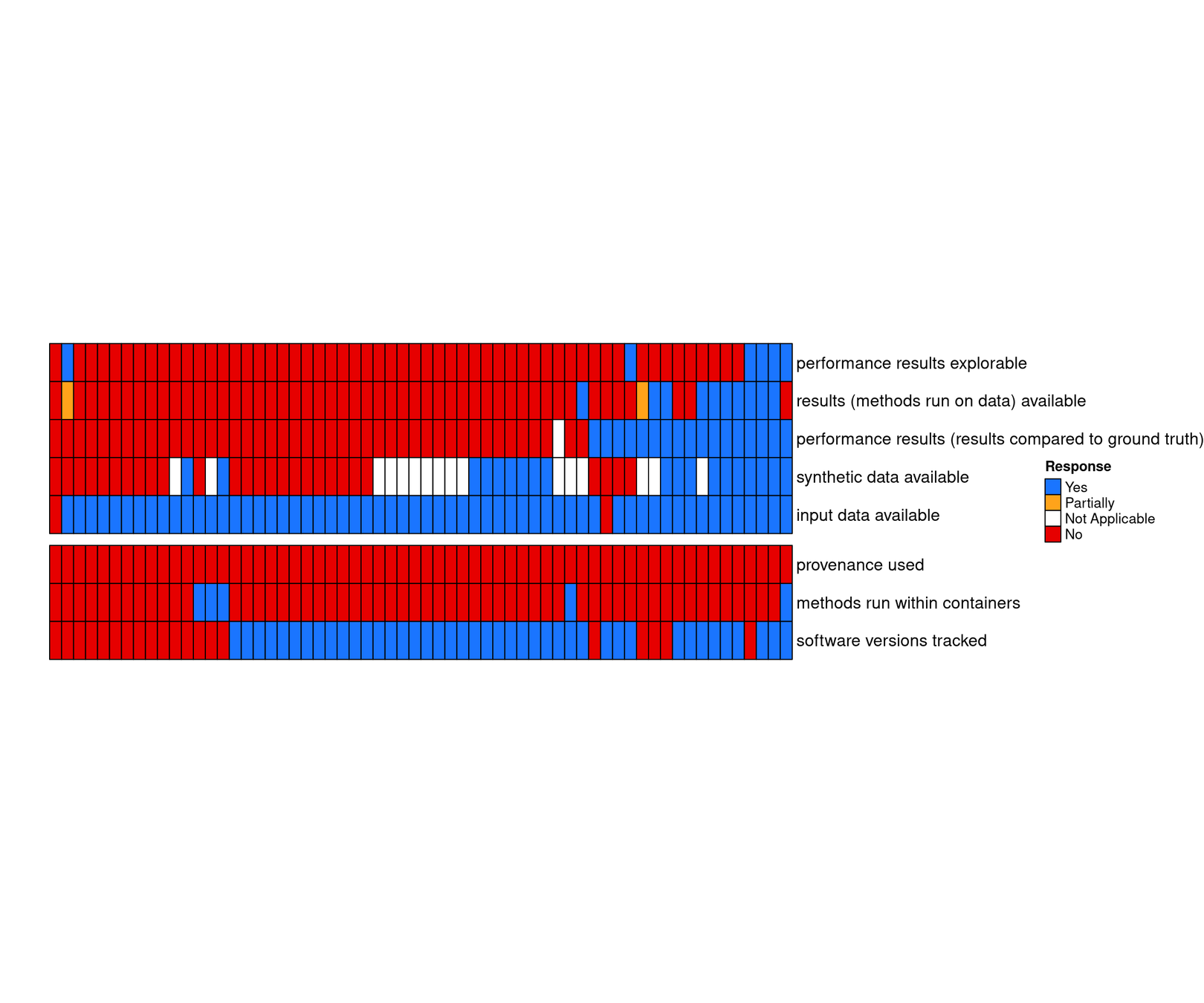

Meta-analysis of 62 method benchmarks in the field of single-cell omics

Benchmark code is available, but not extensible (Sonrel et al., 2023)

Raw input data are available, but results are not (Sonrel et al., 2023)

Open and continuous benchmarking

- Data: inputs, simulations, results

- Reproducibility: versions, environments, workflows

- Scale: comprehensive, continuous

- Code: available, extensible, reusable

Legend: currently part of most benchmarks / not part of current standards
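Making versions and environments part of a benchmark's outputs can be as simple as storing a provenance record next to every result. The sketch below assumes a JSON side-car file per result; the helper names (`write_result_with_provenance`, `package_versions`) and the record fields are hypothetical illustrations, not part of Omnibenchmark.

```python
import json
import platform
import tempfile
from datetime import datetime, timezone
from importlib import metadata
from pathlib import Path


def package_versions(names):
    """Look up installed versions of the given distributions (None if not installed)."""
    versions = {}
    for name in names:
        try:
            versions[name] = metadata.version(name)
        except metadata.PackageNotFoundError:
            versions[name] = None
    return versions


def write_result_with_provenance(result, out_dir, packages=()):
    """Hypothetical helper: save a result together with the environment that produced it."""
    out_dir = Path(out_dir)
    out_dir.mkdir(parents=True, exist_ok=True)
    # The result itself, e.g. a metric value computed by a benchmark module.
    (out_dir / "result.json").write_text(json.dumps(result))
    # Side-car record of interpreter, OS and package versions at run time.
    provenance = {
        "python": platform.python_version(),
        "platform": platform.platform(),
        "packages": package_versions(packages),
        "created_utc": datetime.now(timezone.utc).isoformat(),
    }
    (out_dir / "provenance.json").write_text(json.dumps(provenance, indent=2))
    return provenance


if __name__ == "__main__":
    # Demo: write an illustrative result into a throwaway directory.
    with tempfile.TemporaryDirectory() as tmp:
        record = write_result_with_provenance({"value": 0.5}, tmp, packages=["pip"])
        print(record["python"], record["packages"])
```

Because the record is written in the same step as the result, a result file and the environment description can never drift apart, which is the property a continuous benchmark needs when it is re-run months later.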

Omnibenchmark:

Design and basic concepts

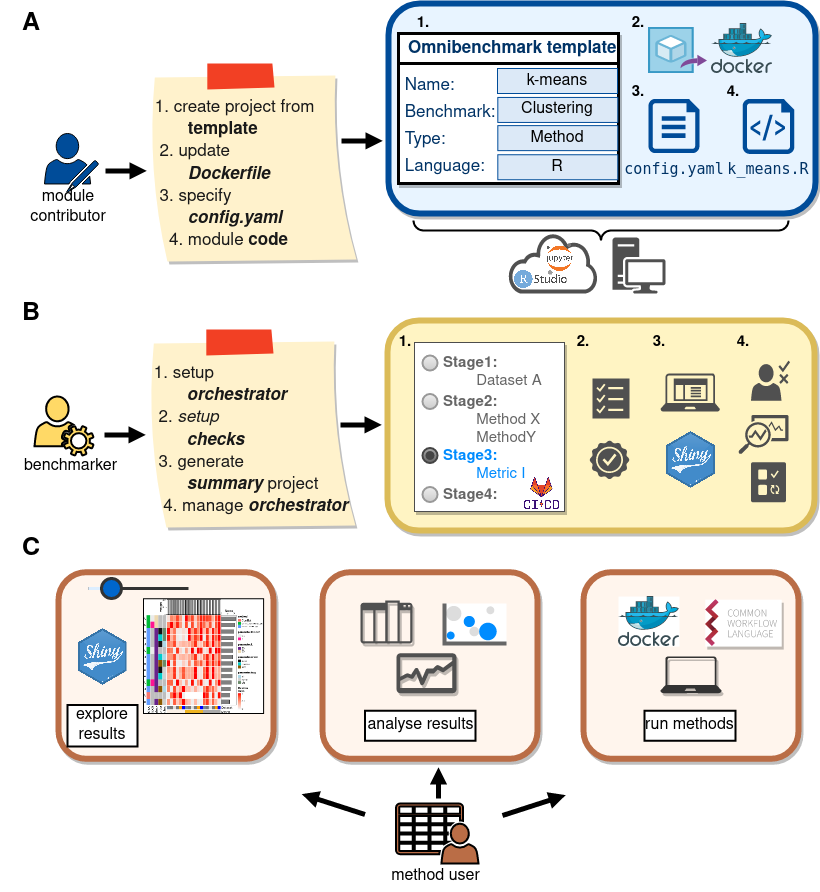

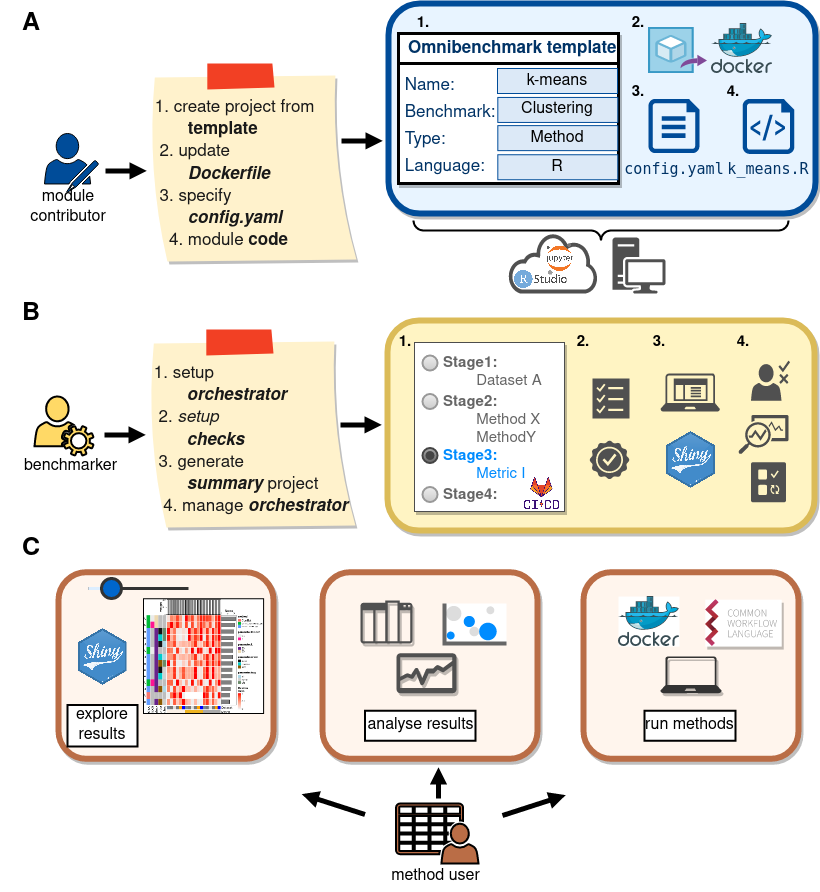

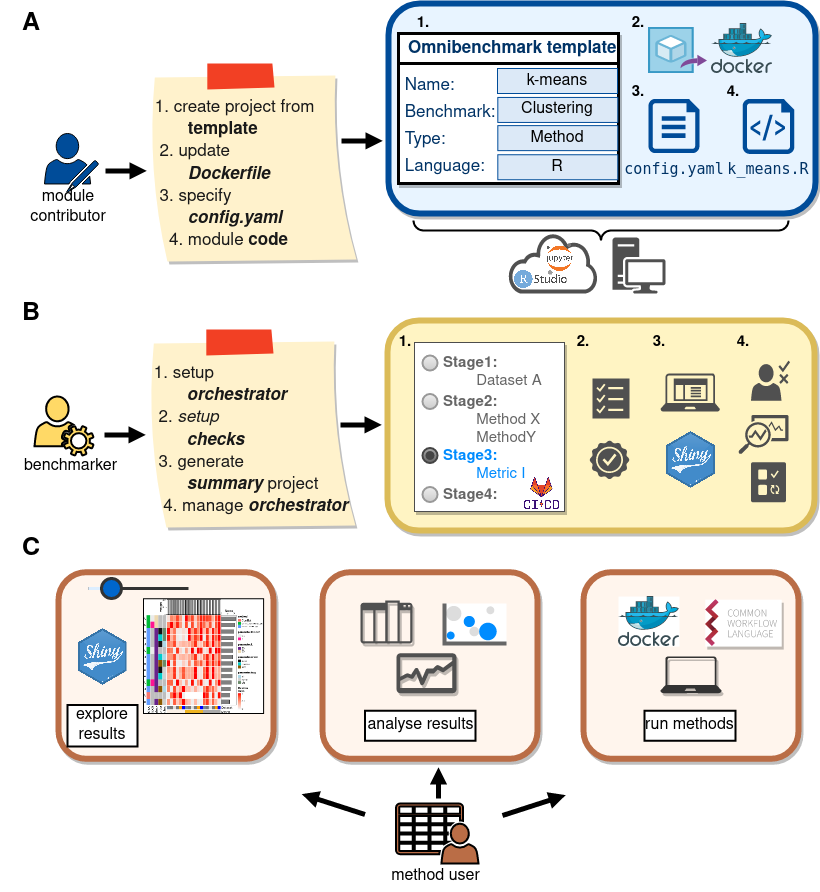

A platform for collaborative and continuous benchmarking

Method developer/Benchmarker

Method user

Methods

Datasets

Metrics

Omnibenchmark

Goals:

- continuous

- software environments

- workflows

- all "products" can be accessed

- anyone can contribute

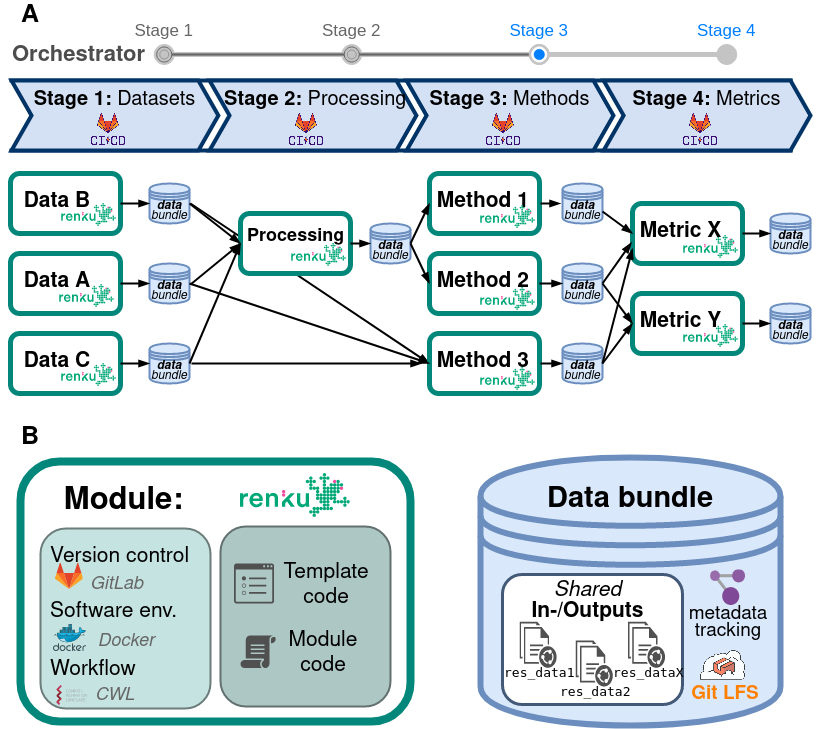

Design: Benchmark modules

- Data: standardized datasets

- Methods: method results

- Metrics: metric results

- Dashboard: interactive result exploration

= 1 "module" (Renku project)

Method user

Method developer/Benchmarker
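The module design (data → methods → metrics → dashboard) can be sketched as a chain of functions. This is a minimal sketch under stated assumptions: each `Module` is a hypothetical in-process stand-in for what Omnibenchmark runs as a separate Renku project, and the toy dataset, methods and metric exist only to make the example executable.

```python
from dataclasses import dataclass
from itertools import product
from typing import Callable, Dict, List


@dataclass(frozen=True)
class Module:
    """Hypothetical stand-in for one benchmark module (one Renku project in Omnibenchmark)."""
    name: str
    run: Callable


def run_benchmark(data_modules, method_modules, metric_modules) -> List[Dict]:
    """Data -> methods -> metrics; returns flat rows a dashboard could display."""
    datasets = {d.name: d.run() for d in data_modules}  # standardized datasets
    rows = []
    for (ds_name, ds), method in product(datasets.items(), method_modules):
        prediction = method.run(ds)  # method result
        for metric in metric_modules:
            rows.append({
                "dataset": ds_name,
                "method": method.name,
                "metric": metric.name,
                "value": metric.run(ds, prediction),  # metric result
            })
    return rows


# Toy example (illustrative only): two "methods" classify four values.
toy_data = Module("toy", lambda: {"x": [0.1, 0.4, 0.6, 0.9], "truth": [0, 0, 1, 1]})
methods = [
    Module("threshold_0.5", lambda d: [int(v > 0.5) for v in d["x"]]),
    Module("all_zero", lambda d: [0 for _ in d["x"]]),
]
accuracy = Module("accuracy", lambda d, p: sum(a == b for a, b in zip(d["truth"], p)) / len(p))

rows = run_benchmark([toy_data], methods, [accuracy])
for row in rows:
    print(row)
```

Keeping every module behind the same small interface is what lets a method developer add one more entry to `methods` without touching the data or metric modules.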

Hands-on: Iris example

Omnibenchmark:

Module coordination and user roles

Omnibenchmark components

- GitLab

- Docker

- Workflow

- Module: template code + module code
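One way to read the split between template code and module code: the template owns the shared plumbing (argument parsing, reading inputs, writing outputs) and the contributor only supplies the computation. The sketch below assumes a JSON-in/JSON-out module; `template_main`, `module_code` and the `--input`/`--output` flags are hypothetical and do not reproduce Omnibenchmark's actual templates.

```python
import argparse
import json
import tempfile
from pathlib import Path


def module_code(data):
    """Module-specific part (hypothetical): the only function a contributor writes."""
    values = data["values"]
    return {"n_values": len(values), "mean": sum(values) / len(values)}


def template_main(argv=None, compute=module_code):
    """Shared template part (hypothetical): CLI parsing and file I/O around module code."""
    parser = argparse.ArgumentParser(description="Run one benchmark module")
    parser.add_argument("--input", required=True, type=Path)
    parser.add_argument("--output", required=True, type=Path)
    args = parser.parse_args(argv)
    data = json.loads(args.input.read_text())
    result = compute(data)
    args.output.parent.mkdir(parents=True, exist_ok=True)
    args.output.write_text(json.dumps(result))
    return result


if __name__ == "__main__":
    # Demo with explicit arguments so it runs without a real command line.
    with tempfile.TemporaryDirectory() as tmp:
        inp = Path(tmp) / "input.json"
        inp.write_text(json.dumps({"values": [1, 2, 3]}))
        print(template_main(["--input", str(inp), "--output", str(Path(tmp) / "out.json")]))
```

Swapping `compute` is the only change needed to turn the same template into a different module, which is why the template can be maintained centrally.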

Modules are connected through data bundles

Data X

Data Y

Data Z

= 1 "data bundle" (data files + metadata)

= 1 "module" (Renku project)

process

Method 1

Method 2

Method 3
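A data bundle pairs data files with metadata, and each method module turns an input bundle into an output bundle. In the minimal sketch below, the `DataBundle` fields, the `apply_method` helper and the naming scheme are hypothetical; the point is only that metadata travels with the files, so downstream modules can tell which data and method produced a result.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class DataBundle:
    """Hypothetical data bundle: named data files plus the metadata describing them."""
    name: str
    files: Dict[str, str]  # role -> file path, e.g. "data" -> "data_x/data.csv"
    metadata: Dict[str, str] = field(default_factory=dict)


def apply_method(method_name: str, bundle: DataBundle) -> DataBundle:
    """Sketch of a method module: consumes one input bundle and emits one output bundle."""
    out_name = f"{bundle.name}__{method_name}"
    return DataBundle(
        name=out_name,
        files={"result": f"{out_name}/result.csv"},  # where the method would write its output
        # Carry the input metadata forward and record provenance of this step.
        metadata={**bundle.metadata, "input_bundle": bundle.name, "method": method_name},
    )


bundles = [DataBundle(n, {"data": f"{n}/data.csv"}, {"source": n}) for n in ("data_x", "data_y", "data_z")]
outputs = [apply_method(m, b) for b in bundles for m in ("method_1", "method_2", "method_3")]
print(len(outputs), outputs[0].name)
```

Three data bundles and three methods give nine output bundles, each of which can in turn serve as input to a metric module.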

Benchmark runs are coordinated by an Orchestrator

Data X

Data Y

Data Z

process

Method 1

Method 2

Method 3

Orchestrator

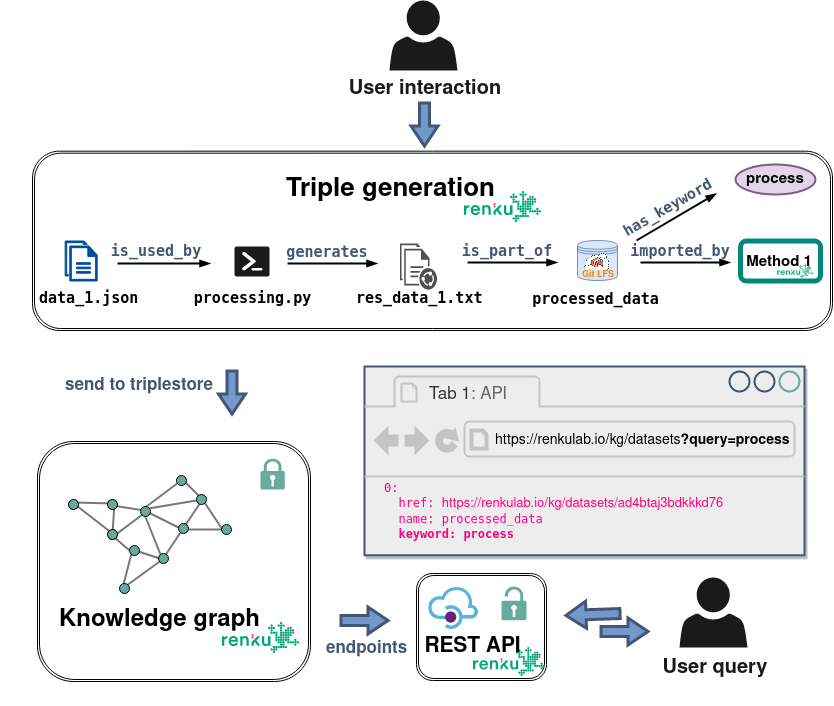
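An orchestrator's core job can be pictured as running every method on every dataset and, for continuous benchmarking, running only the combinations that have no result yet. The `Orchestrator` class below is a hypothetical in-memory sketch of that idea, not Omnibenchmark's orchestrator, which coordinates separate modules rather than Python functions.

```python
from itertools import product
from typing import Callable, Dict, List, Tuple


class Orchestrator:
    """Hypothetical orchestrator: runs every method on every dataset exactly once."""

    def __init__(self) -> None:
        self.results: Dict[Tuple[str, str], object] = {}

    def run(self, datasets: Dict[str, object], methods: Dict[str, Callable]) -> List[Tuple[str, str]]:
        """Run only (dataset, method) pairs without a stored result; return the pairs just run."""
        new_runs = []
        for (d_name, data), (m_name, method) in product(datasets.items(), methods.items()):
            key = (d_name, m_name)
            if key not in self.results:
                self.results[key] = method(data)
                new_runs.append(key)
        return new_runs


orch = Orchestrator()
datasets = {"data_x": [1, 2], "data_y": [3, 4], "data_z": [5, 6]}
methods = {"method_1": sum, "method_2": max}
first = orch.run(datasets, methods)   # 3 datasets x 2 methods = 6 runs
methods["method_3"] = min             # a newly contributed method
second = orch.run(datasets, methods)  # only the 3 new combinations run
print(first, second, sep="\n")
```

Skipping already computed pairs is what keeps a growing benchmark affordable: adding one method costs one run per dataset, not a full re-run.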

Data bundles are identified by knowledge graph queries
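The idea of finding inputs by querying a graph rather than hard-coding paths can be shown with a toy graph. This sketch replaces the real knowledge graph with an in-memory set of (subject, predicate, object) triples; the predicates `rdf:type` and `hasKeyword`, the keyword values and `find_bundles` are all hypothetical.

```python
from typing import List, Set, Tuple

Triple = Tuple[str, str, str]


def find_bundles(graph: Set[Triple], keyword: str) -> List[str]:
    """Return data bundles tagged with `keyword` (toy stand-in for a knowledge graph query)."""
    bundles = {s for s, p, o in graph if p == "rdf:type" and o == "DataBundle"}
    tagged = {s for s, p, o in graph if p == "hasKeyword" and o == keyword}
    return sorted(bundles & tagged)


graph = {
    ("data_x", "rdf:type", "DataBundle"),
    ("data_x", "hasKeyword", "omni_data"),
    ("data_y", "rdf:type", "DataBundle"),
    ("data_y", "hasKeyword", "omni_data"),
    ("method_1_result", "rdf:type", "DataBundle"),
    ("method_1_result", "hasKeyword", "omni_method_result"),
    # Same keyword, but not a data bundle, so the type filter excludes it.
    ("data_module_project", "rdf:type", "Project"),
    ("data_module_project", "hasKeyword", "omni_data"),
}

print(find_bundles(graph, "omni_data"))  # ['data_x', 'data_y']
```

A new dataset then joins the benchmark simply by being registered with the right keyword; downstream modules pick it up on their next query.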

Omnibenchmark user I

Omnibenchmark user II

Omnibenchmark user III

BC2 workshop

By Almut Luetge
