Machine Learning

how to start to teach the machine

@ArkadiuszKondas

ARKADIUSZ KONDAS

Lead Software Architect

@ Proget Sp. z o.o.

Poland

Zend Certified Engineer

Code Craftsman

Blogger

Ultra Runner

@ ArkadiuszKondas

arkadiuszkondas.com

Zend Certified Architect

Agenda:

- Why Machine Learning?

- Ways of learning

- How to predict realty price in Russia?

- Summary

Why Machine Learning?

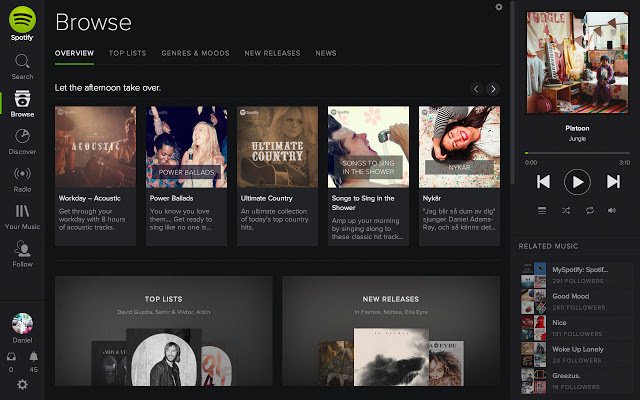

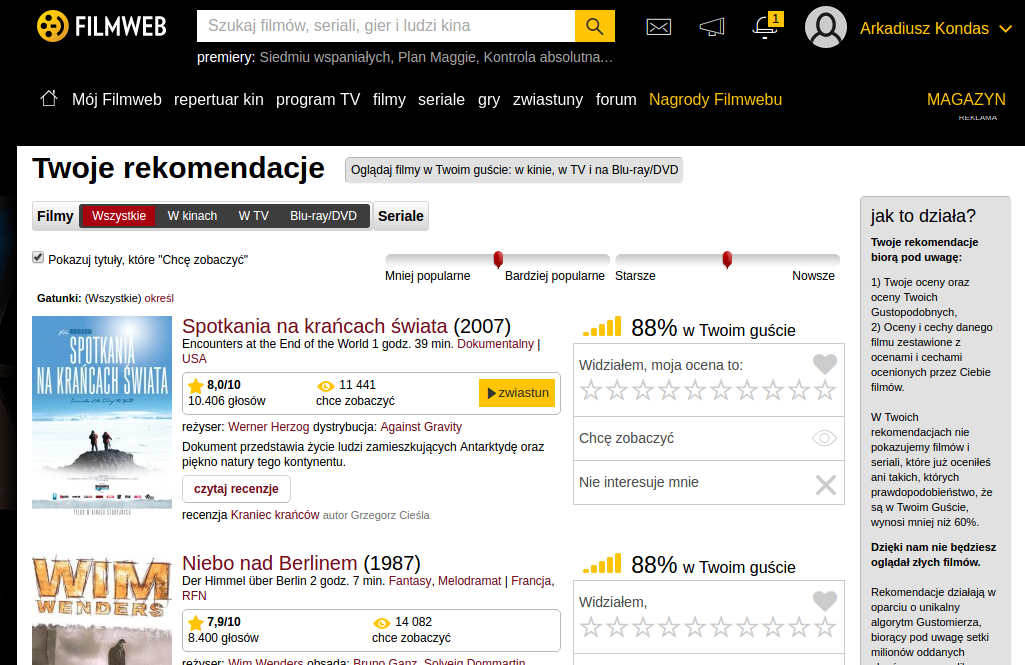

- Develop systems that can automatically adapt and customize themselves to individual users.

- personalized news or mail filter

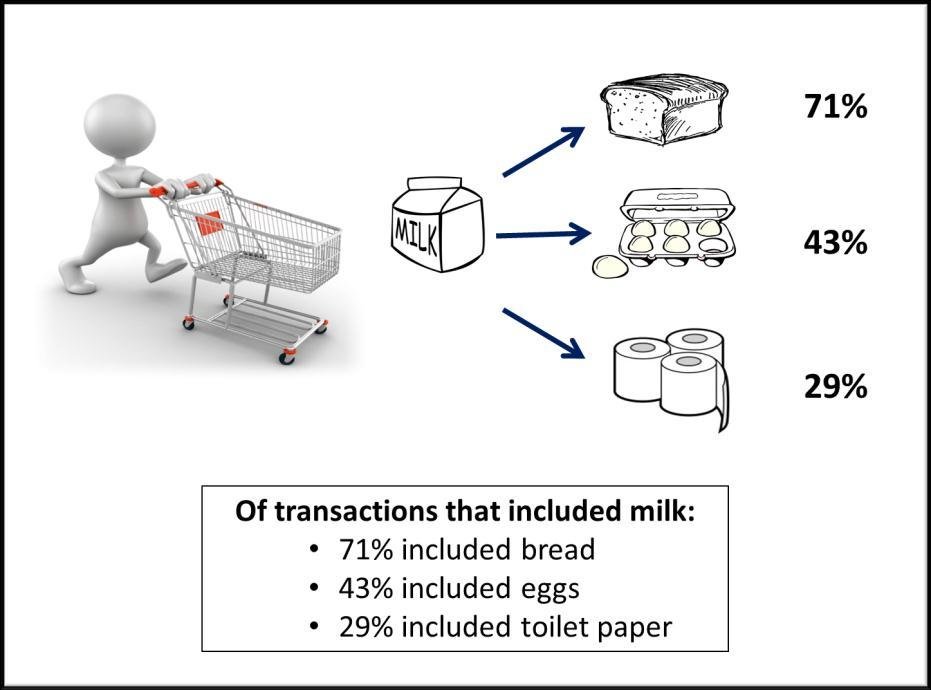

Why Machine Learning?

- Discover new knowledge from large databases (data mining).

- market basket analysis

Source: https://blogs.adobe.com/digitalmarketing/analytics/shopping-for-kpis-market-basket-analysis-for-web-analytics-data/

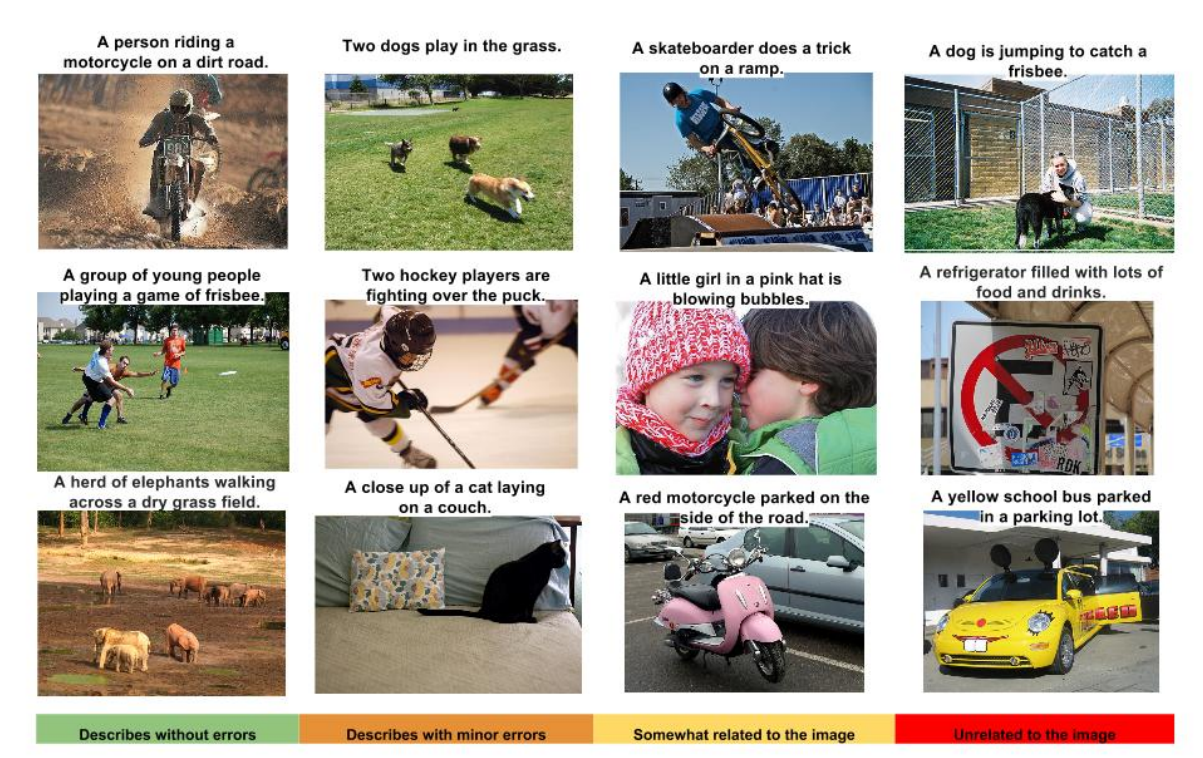

Why Machine Learning?

- Mimic humans and take over certain monotonous tasks that still require some intelligence.

- e.g. recognizing handwritten characters

https://github.com/tensorflow/models/tree/master/im2txt

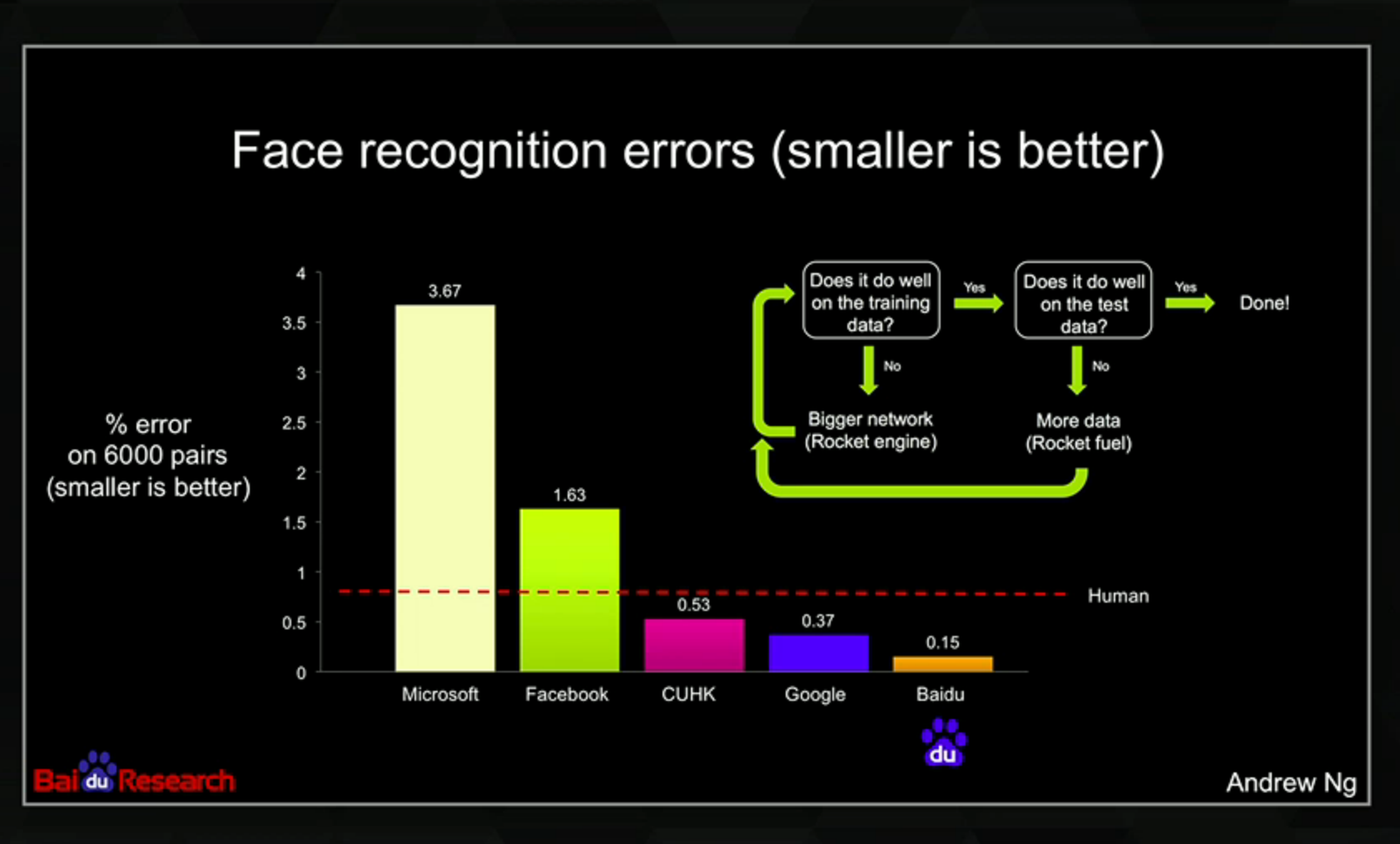

Why Machine Learning?

http://articles.concreteinteractive.com/nicole-kidmans-fake-nose/

Why Machine Learning?

- Develop systems that are too difficult/expensive to construct manually because they require specific detailed skills or knowledge tuned to a specific task (knowledge engineering bottleneck)

Why now?

The name machine learning was coined in 1959

- Data availability

- Computation power

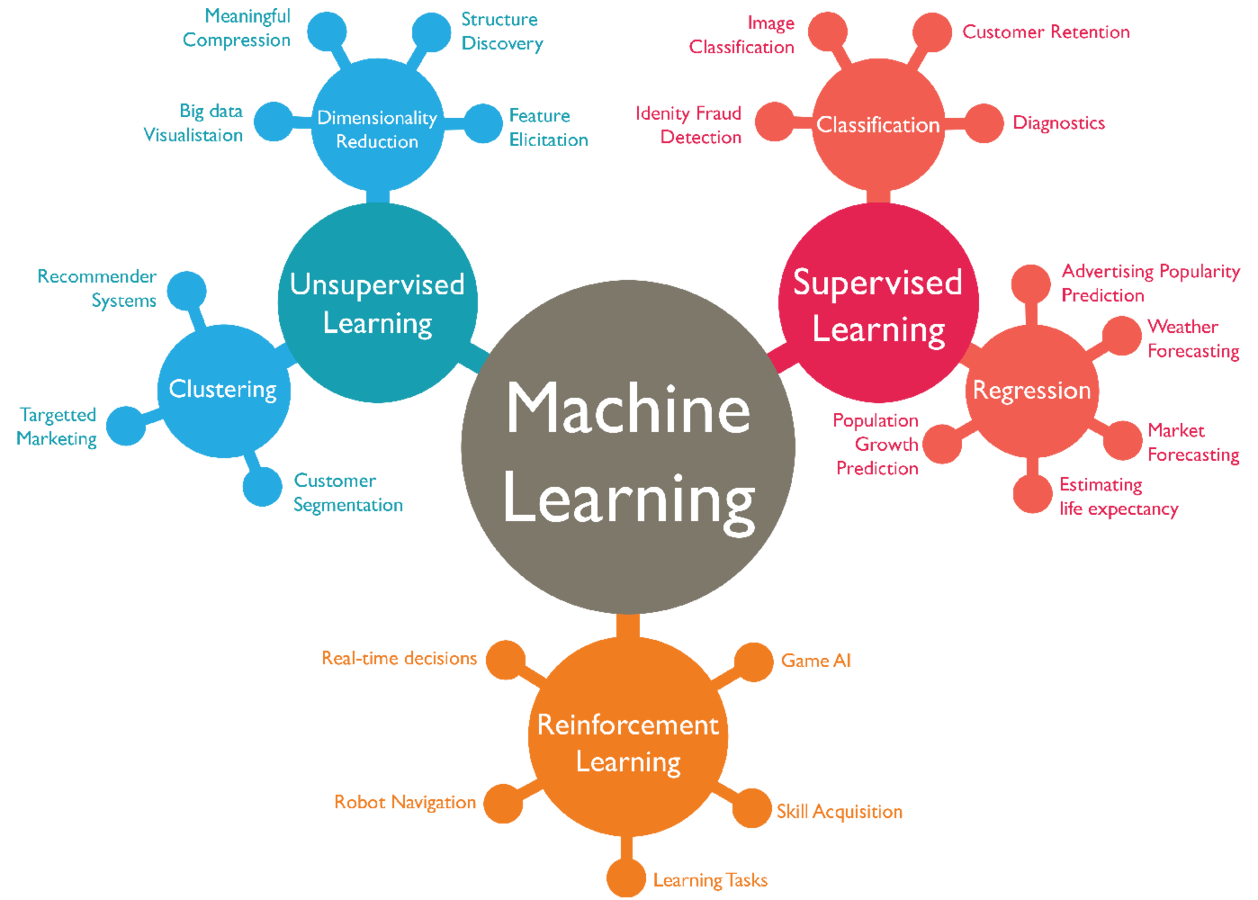

Ways of learning

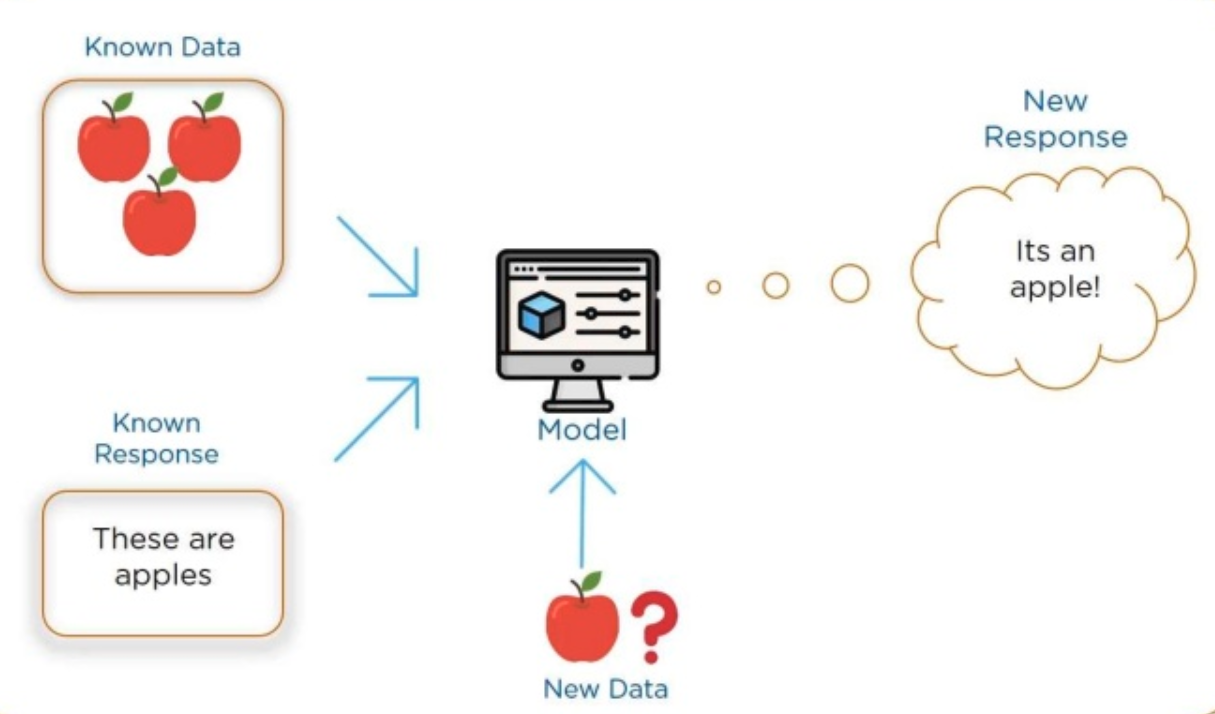

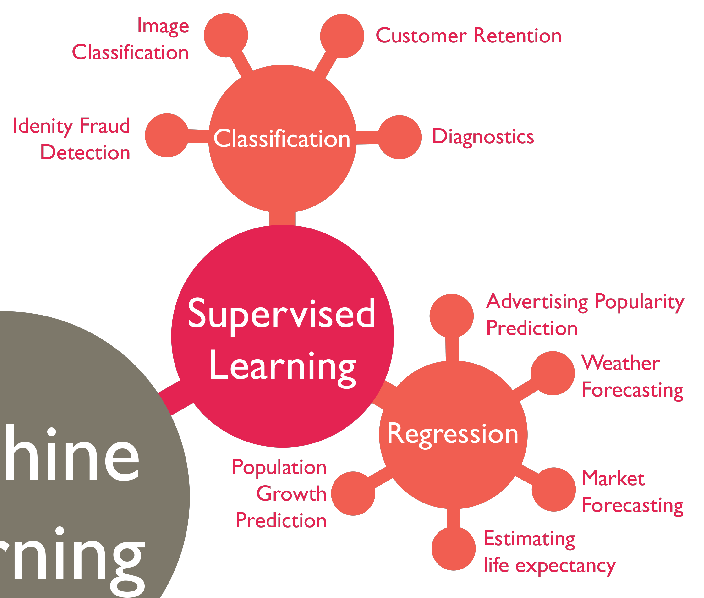

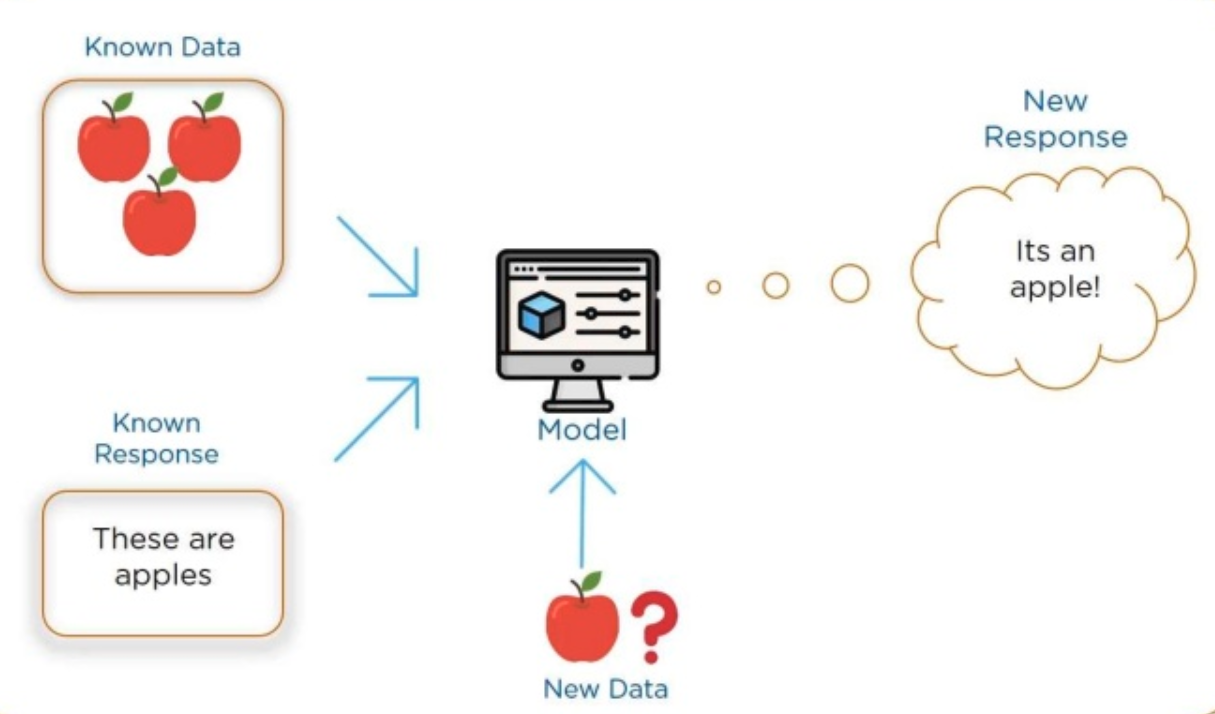

Supervised learning

Source: https://www.slideshare.net/Simplilearn/what-is-machine-learning-machine-learning-basics-machine-learning-algorithms-simplilearn

Supervised learning

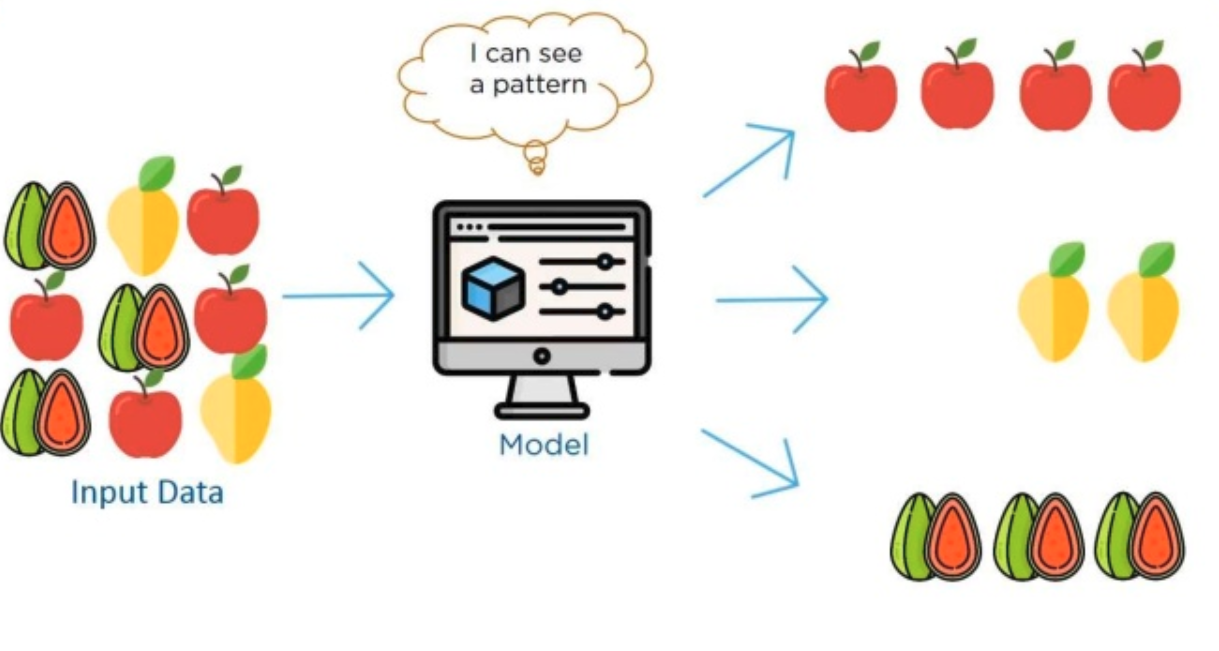

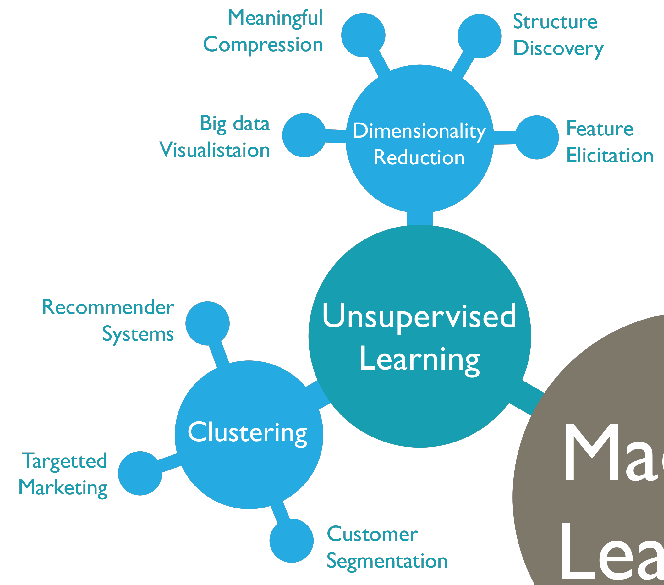

Unsupervised learning

Source: https://www.slideshare.net/Simplilearn/what-is-machine-learning-machine-learning-basics-machine-learning-algorithms-simplilearn

Unsupervised learning

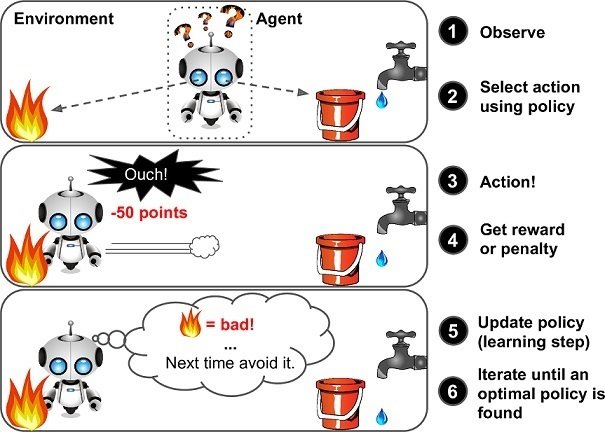

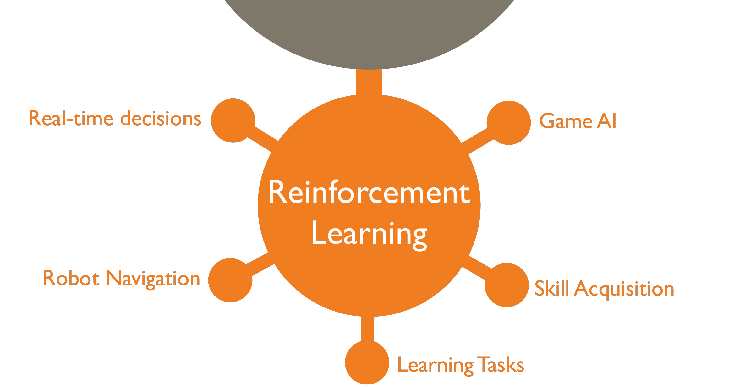

Reinforcement learning

Source: https://www.marutitech.com/businesses-reinforcement-learning/

Reinforcement learning

How to predict realty price in Russia?

Supervised learning

Source: https://www.slideshare.net/Simplilearn/what-is-machine-learning-machine-learning-basics-machine-learning-algorithms-simplilearn

Sberbank Russian Housing Market

Can you predict realty price fluctuations in Russia’s volatile economy?

Dataset

id,timestamp,full_sq,life_sq,floor,max_floor,material,build_year,num_room,kitch_sq,state,product_type,sub_area,area_m,raion_popul,green_zone_part,indust_part,children_preschool,preschool_quota,preschool_education_centers_raion,children_school,school_quota,school_education_centers_raion,school_education_centers_top_20_raion,hospital_beds_raion,healthcare_centers_raion,university_top_20_raion,sport_objects_raion,additional_education_raion,culture_objects_top_25,culture_objects_top_25_raion,shopping_centers_raion,office_raion,thermal_power_plant_raion,incineration_raion,oil_chemistry_raion,radiation_raion,railroad_terminal_raion,big_market_raion,nuclear_reactor_raion,detention_facility_raion,full_all,male_f,female_f,young_all,young_male,young_female,work_all,work_male,work_female,ekder_all,ekder_male,ekder_female,0_6_all,0_6_male,0_6_female,7_14_all,7_14_male,7_14_female,0_17_all,0_17_male,0_17_female,16_29_all,16_29_male,16_29_female,0_13_all,0_13_male,0_13_female,raion_build_count_with_material_info,build_count_block,build_count_wood,build_count_frame,build_count_brick,build_count_monolith,build_count_panel,build_count_foam,build_count_slag,build_count_mix,raion_build_count_with_builddate_info,build_count_before_1920,build_count_1921-1945,build_count_1946-1970,build_count_1971-1995,build_count_after_1995,ID_metro,metro_min_avto,metro_km_avto,metro_min_walk,metro_km_walk,kindergarten_km,school_km,park_km,green_zone_km,industrial_km,water_treatment_km,cemetery_km,incineration_km,railroad_station_walk_km,railroad_station_walk_min,ID_railroad_station_walk,railroad_station_avto_km,railroad_station_avto_min,ID_railroad_station_avto,public_transport_station_km,public_transport_station_min_walk,water_km,water_1line,mkad_km,ttk_km,sadovoe_km,bulvar_ring_km,kremlin_km,big_road1_km,ID_big_road1,big_road1_1line,big_road2_km,ID_big_road2,railroad_km,railroad_1line,zd_vokzaly_avto_km,ID_railroad_terminal,bus_terminal_avto_km,ID_bus_terminal,oil_chemistry_km,nuclear_reactor_km,radiation_km,power_transmission_line_km,thermal_power_plant_km,ts_km,big_market_km,market_shop_km,fitness_km,swim_pool_km,ice_rink_km,stadium_km,basketball_km,hospice_morgue_km,detention_facility_km,public_healthcare_km,university_km,workplaces_km,shopping_centers_km,office_km,additional_education_km,preschool_km,big_church_km,church_synagogue_km,mosque_km,theater_km,museum_km,exhibition_km,catering_km,ecology,green_part_500,prom_part_500,office_count_500,office_sqm_500,trc_count_500,trc_sqm_500,cafe_count_500,cafe_sum_500_min_price_avg,cafe_sum_500_max_price_avg,cafe_avg_price_500,cafe_count_500_na_price,cafe_count_500_price_500,cafe_count_500_price_1000,cafe_count_500_price_1500,cafe_count_500_price_2500,cafe_count_500_price_4000,cafe_count_500_price_high,big_church_count_500,church_count_500,mosque_count_500,leisure_count_500,sport_count_500,market_count_500,green_part_1000,prom_part_1000,office_count_1000,office_sqm_1000,trc_count_1000,trc_sqm_1000,cafe_count_1000,cafe_sum_1000_min_price_avg,cafe_sum_1000_max_price_avg,cafe_avg_price_1000,cafe_count_1000_na_price,cafe_count_1000_price_500,cafe_count_1000_price_1000,cafe_count_1000_price_1500,cafe_count_1000_price_2500,cafe_count_1000_price_4000,cafe_count_1000_price_high,big_church_count_1000,church_count_1000,mosque_count_1000,leisure_count_1000,sport_count_1000,market_count_1000,green_part_1500,prom_part_1500,office_count_1500,office_sqm_1500,trc_count_1500,trc_sqm_1500,cafe_count_1500,cafe_sum_1500_min_price_avg,cafe_sum_1500_max_price_avg,cafe_avg_price_1500,cafe_count_1500_na_price,cafe_count_1500_price_500,cafe_count_1500_price_1000,cafe_count_1500_price_1500,cafe_count_1500_price_2500,cafe_count_1500_price_4000,cafe_count_1500_price_high,big_church_count_1500,church_count_1500,mosque_count_1500,leisure_count_1500,sport_count_1500,market_count_1500,green_part_2000,prom_part_2000,office_count_2000,office_sqm_2000,trc_count_2000,trc_sqm_2000,cafe_count_2000,cafe_sum_2000_min_price_avg,cafe_sum_2000_max_price_avg,cafe_avg_price_2000,cafe_count_2000_na_price,cafe_count_2000_price_500,cafe_count_2000_price_1000,cafe_count_2000_price_1500,cafe_count_2000_price_2500,cafe_count_2000_price_4000,cafe_count_2000_price_high,big_church_count_2000,church_count_2000,mosque_count_2000,leisure_count_2000,sport_count_2000,market_count_2000,green_part_3000,prom_part_3000,office_count_3000,office_sqm_3000,trc_count_3000,trc_sqm_3000,cafe_count_3000,cafe_sum_3000_min_price_avg,cafe_sum_3000_max_price_avg,cafe_avg_price_3000,cafe_count_3000_na_price,cafe_count_3000_price_500,cafe_count_3000_price_1000,cafe_count_3000_price_1500,cafe_count_3000_price_2500,cafe_count_3000_price_4000,cafe_count_3000_price_high,big_church_count_3000,church_count_3000,mosque_count_3000,leisure_count_3000,sport_count_3000,market_count_3000,green_part_5000,prom_part_5000,office_count_5000,office_sqm_5000,trc_count_5000,trc_sqm_5000,cafe_count_5000,cafe_sum_5000_min_price_avg,cafe_sum_5000_max_price_avg,cafe_avg_price_5000,cafe_count_5000_na_price,cafe_count_5000_price_500,cafe_count_5000_price_1000,cafe_count_5000_price_1500,cafe_count_5000_price_2500,cafe_count_5000_price_4000,cafe_count_5000_price_high,big_church_count_5000,church_count_5000,mosque_count_5000,leisure_count_5000,sport_count_5000,market_count_5000,price_doc

1,2011-08-20,43,27,4,NA,NA,NA,NA,NA,NA,Investment,Bibirevo,6407578.1,155572,0.189727117,6.99893e-5,9576,5001,5,10309,11065,5,0,240,1,0,7,3,no,0,16,1,no,no,no,no,no,no,no,no,86206,40477,45729,21154,11007,10147,98207,52277,45930,36211,10580,25631,9576,4899,4677,10309,5463,4846,23603,12286,11317,17508,9425,8083,18654,9709,8945,211,25,0,0,0,2,184,0,0,0,211,0,0,0,206,5,1,2.590241095,1.131259906,13.57511887,1.131259906,0.145699552,0.17797535,2.158587074,0.600973099,1.080934313,23.68346,1.804127,3.633334,5.419893032,65.03871639,1,5.419893032,6.905892968,1,0.274985143,3.299821714,0.992631058,no,1.42239141,10.9185867,13.10061764,13.67565705,15.15621058,1.422391404,1,no,3.830951404,5,1.305159492,no,14.23196091,101,24.2924061,1,18.152338,5.718518835,1.210027392,1.062513046,5.814134663,4.308127002,10.81417151,1.676258313,0.485841388,3.065047099,1.107594209,8.148590774,3.516512911,2.392353035,4.248035887,0.974742843,6.715025787,0.884350021,0.648487637,0.637188832,0.947961657,0.17797535,0.625783434,0.628186549,3.932040333,14.05304655,7.389497904,7.023704919,0.516838085,good,0,0,0,0,0,0,0,NA,NA,NA,0,0,0,0,0,0,0,0,0,0,0,1,0,7.36,0,1,30500,3,55600,19,527.78,888.89,708.33,1,10,4,3,1,0,0,1,2,0,0,6,1,14.27,6.92,3,39554,9,171420,34,566.67,969.7,768.18,1,14,11,6,2,0,0,1,2,0,0,7,1,11.77,15.97,9,188854,19,1244891,36,614.29,1042.86,828.57,1,15,11,6,2,1,0,1,2,0,0,10,1,11.98,13.55,12,251554,23,1419204,68,639.68,1079.37,859.52,5,21,22,16,3,1,0,2,4,0,0,21,1,13.09,13.31,29,807385,52,4036616,152,708.57,1185.71,947.14,12,39,48,40,9,4,0,13,22,1,0,52,4,5850000

2,2011-08-23,34,19,3,NA,NA,NA,NA,NA,NA,Investment,Nagatinskij Zaton,9589336.912,115352,0.372602044,0.049637257,6880,3119,5,7759,6237,8,0,229,1,0,6,1,yes,1,3,0,no,no,no,no,no,no,no,no,76284,34200,42084,15727,7925,7802,70194,35622,34572,29431,9266,20165,6880,3466,3414,7759,3909,3850,17700,8998,8702,15164,7571,7593,13729,6929,6800,245,83,1,0,67,4,90,0,0,0,244,1,1,143,84,15,2,0.936699728,0.647336757,7.620630408,0.635052534,0.147754269,0.273345319,0.550689737,0.065321162,0.966479097,1.317476,4.655004,8.648587,3.411993084,40.943917,2,3.641772591,4.679744508,2,0.065263344,0.78316013,0.698081318,no,9.503405157,3.103995954,6.444333466,8.132640073,8.698054189,2.887376585,2,no,3.103995974,4,0.694535727,no,9.242585522,32,5.706113234,2,9.034641872,3.489954443,2.72429538,1.246148739,3.419574049,0.725560431,6.910567711,3.424716092,0.668363679,2.000153804,8.97282283,6.127072782,1.161578983,2.543746975,12.64987875,1.47772267,1.852560245,0.686251693,0.519311324,0.688796317,1.072315063,0.273345319,0.967820571,0.471446524,4.841543888,6.829888847,0.709260033,2.358840498,0.23028691,excellent,25.14,0,0,0,0,0,5,860,1500,1180,0,1,3,0,0,1,0,0,1,0,0,0,0,26.66,0.07,2,86600,5,94065,13,615.38,1076.92,846.15,0,5,6,1,0,1,0,1,2,0,4,2,0,21.53,7.71,3,102910,7,127065,17,694.12,1205.88,950,0,6,7,1,2,1,0,1,5,0,4,9,0,22.37,19.25,4,165510,8,179065,21,695.24,1190.48,942.86,0,7,8,3,2,1,0,1,5,0,4,11,0,18.07,27.32,12,821986,14,491565,30,631.03,1086.21,858.62,1,11,11,4,2,1,0,1,7,0,6,19,1,10.26,27.47,66,2690465,40,2034942,177,673.81,1148.81,911.31,9,49,65,36,15,3,0,15,29,1,10,66,14,6000000

3,2011-08-27,43,29,2,NA,NA,NA,NA,NA,NA,Investment,Tekstil'shhiki,4808269.831,101708,0.112559644,0.118537385,5879,1463,4,6207,5580,7,0,1183,1,0,5,1,no,0,0,1,no,no,no,yes,no,no,no,no,101982,46076,55906,13028,6835,6193,63388,31813,31575,25292,7609,17683,5879,3095,2784,6207,3269,2938,14884,7821,7063,19401,9045,10356,11252,5916,5336,330,59,0,0,206,4,60,0,1,0,330,1,0,246,63,20,3,2.120998901,1.637996285,17.3515154,1.445959617,0.049101536,0.158071895,0.374847751,0.453172405,0.939275144,4.91266,3.381083,11.99648,1.277658039,15.33189647,3,1.277658039,1.701419537,3,0.328756044,3.945072522,0.468264622,no,5.60479992,2.927487097,6.963402995,8.054252314,9.067884956,0.647249803,3,no,2.927487099,4,0.70069112,no,9.540544478,5,6.710302485,3,5.777393501,7.50661249,0.772216104,1.60218297,3.682454651,3.562187704,5.75236835,1.375442778,0.733101062,1.239303854,1.978517187,0.767568769,1.952770629,0.621357002,7.682302975,0.097143527,0.841254102,1.510088854,1.48653302,1.543048836,0.391957389,0.158071895,3.178751487,0.755946015,7.92215157,4.273200485,3.156422843,4.958214283,0.190461977,poor,1.67,0,0,0,0,0,3,666.67,1166.67,916.67,0,0,2,1,0,0,0,0,0,0,0,0,0,4.99,0.29,0,0,0,0,9,642.86,1142.86,892.86,2,0,5,2,0,0,0,0,1,0,0,5,3,9.92,6.73,0,0,1,2600,14,516.67,916.67,716.67,2,4,6,2,0,0,0,0,4,0,0,6,5,12.99,12.75,4,100200,7,52550,24,563.64,977.27,770.45,2,8,9,4,1,0,0,0,4,0,0,8,5,12.14,26.46,8,110856,7,52550,41,697.44,1192.31,944.87,2,9,17,9,3,1,0,0,11,0,0,20,6,13.69,21.58,43,1478160,35,1572990,122,702.68,1196.43,949.55,10,29,45,25,10,3,0,11,27,0,4,67,10,5700000

4,2011-09-01,89,50,9,NA,NA,NA,NA,NA,NA,Investment,Mitino,12583535.69,178473,0.194702869,0.069753361,13087,6839,9,13670,17063,10,0,NA,1,0,17,6,no,0,11,4,no,no,no,no,no,no,no,no,21155,9828,11327,28563,14680,13883,120381,60040,60341,29529,9083,20446,13087,6645,6442,13670,7126,6544,32063,16513,15550,3292,1450,1842,24934,12782,12152,458,9,51,12,124,50,201,0,9,2,459,13,24,40,130,252,4,1.489049154,0.984536582,11.56562408,0.963802007,0.179440956,0.236455018,0.078090293,0.106124506,0.451173311,15.62371,2.01708,14.31764,4.2914325,51.49719001,4,3.816044582,5.271136062,4,0.131596959,1.579163513,1.200336487,no,2.677824281,14.60650078,17.45719794,18.30943312,19.48700542,2.677824284,1,no,2.780448941,17,1.999265421,no,17.47838035,83,6.734618018,1,27.6678632,9.522537611,6.348716334,1.767612439,11.17833328,0.583024969,27.89271688,0.811275289,0.62348431,1.950316967,6.483171621,7.385520691,4.923843177,3.549557568,8.789894266,2.163735157,10.9031613,0.622271644,0.599913582,0.934273498,0.8926743,0.236455018,1.03177679,1.561504846,15.30044908,16.99067736,16.04152067,5.02969633,0.465820158,good,17.36,0.57,0,0,0,0,2,1e3,1500,1250,0,0,0,2,0,0,0,0,0,0,0,0,0,19.25,10.35,1,11000,6,80780,12,658.33,1083.33,870.83,0,3,4,5,0,0,0,0,0,0,0,3,1,28.38,6.57,2,11000,7,89492,23,673.91,1130.43,902.17,0,5,9,8,1,0,0,1,0,0,0,9,2,32.29,5.73,2,11000,7,89492,25,660,1120,890,0,5,11,8,1,0,0,1,1,0,0,13,2,20.79,3.57,4,167000,12,205756,32,718.75,1218.75,968.75,0,5,14,10,3,0,0,1,2,0,0,18,3,14.18,3.89,8,244166,22,942180,61,931.58,1552.63,1242.11,4,7,21,15,11,2,1,4,4,0,0,26,3,13100000

5,2011-09-05,77,77,4,NA,NA,NA,NA,NA,NA,Investment,Basmannoe,8398460.622,108171,0.015233744,0.037316452,5706,3240,7,6748,7770,9,0,562,4,2,25,2,no,0,10,93,no,no,no,yes,yes,no,no,no,28179,13522,14657,13368,7159,6209,68043,34236,33807,26760,8563,18197,5706,2982,2724,6748,3664,3084,15237,8113,7124,5164,2583,2581,11631,6223,5408,746,48,0,0,643,16,35,0,3,1,746,371,114,146,62,53,5,1.257186453,0.876620232,8.266305238,0.68885877,0.247901208,0.376838057,0.258288769,0.236214054,0.392870988,10.68354,2.936581,11.90391,0.853960072,10.24752087,5,1.59589817,2.156283865,113,0.071480323,0.857763874,0.820294318,no,11.61665314,1.721833675,0.046809568,0.787593311,2.578670647,1.721833683,4,no,3.133530966,10,0.084112545,yes,1.59589817,113,1.423427954,4,6.515857089,8.671015673,1.638318096,3.632640421,4.587916559,2.60941961,9.15505713,1.969737724,0.220287667,2.544696,3.975401349,3.610753828,0.307915375,1.864637406,3.779781109,1.121702845,0.991682626,0.892667526,0.429052137,0.077900959,0.810801456,0.376838057,0.378755838,0.121680643,2.584369607,1.11248589,1.800124877,1.339652258,0.026102416,excellent,3.56,4.44,15,293699,1,45000,48,702.22,1166.67,934.44,3,17,10,11,7,0,0,1,4,0,2,3,0,3.34,8.29,46,420952,3,158200,153,763.45,1272.41,1017.93,8,39,45,39,19,2,1,7,12,0,6,7,0,4.12,4.83,93,1195735,9,445900,272,766.8,1272.73,1019.76,19,70,74,72,30,6,1,18,30,0,10,14,2,4.53,5.02,149,1625130,17,564843,483,765.93,1269.23,1017.58,28,130,129,131,50,14,1,35,61,0,17,21,3,5.06,8.62,305,3420907,60,2296870,1068,853.03,1410.45,1131.74,63,266,267,262,149,57,4,70,121,1,40,77,5,8.38,10.92,689,8404624,114,3503058,2283,853.88,1411.45,1132.66,143,566,578,552,319,108,17,135,236,2,91,195,14,16331452

6,2011-09-06,67,46,14,NA,NA,NA,NA,NA,NA,Investment,Nizhegorodskoe,7506452.02,43795,0.007670134,0.486245621,2418,852,2,2514,2012,3,0,NA,0,0,7,0,no,0,6,19,yes,no,no,yes,no,no,no,no,19940,9400,10540,5291,2744,2547,29660,15793,13867,8844,2608,6236,2418,1224,1194,2514,1328,1186,5866,3035,2831,4851,2329,2522,4632,2399,2233,188,24,0,0,147,2,15,0,0,0,188,0,5,152,25,6,6,2.735883907,1.593246481,18.37816963,1.531514136,0.145954816,0.113466218,1.073495427,1.497902638,0.256487453,7.18674,0.78033,14.07514,0.375311695,4.503740339,6,0.375311695,1.407418835,6,0.189227153,2.270725835,0.612447325,no,8.296086727,0.284868107,3.519388985,4.395057477,5.645795859,0.284868136,4,no,1.478528507,3,0.244670412,no,5.070196504,5,6.682088764,4,3.95950924,8.757686082,0.193126987,2.34156168,1.272894442,1.438003448,5.374563767,3.447863628,0.81041306,1.911842782,2.108923435,4.233094726,1.450974874,3.391116928,4.356122442,1.698723584,3.830021305,1.042261834,0.440707312,0.422357874,3.066285203,0.113466218,0.686931702,0.870446514,4.787705729,3.388809733,3.71355663,2.553423533,0.004469307,poor,0,19.42,5,227705,3,102000,7,1e3,1625,1312.5,3,0,1,2,1,0,0,0,0,0,0,0,0,0,40.27,10,275135,5,164000,9,883.33,1416.67,1150,3,1,1,3,1,0,0,3,1,0,0,1,0,0,50.64,18,431090,6,186400,14,718.18,1181.82,950,3,3,3,4,1,0,0,4,2,0,0,11,0,0.38,51.58,21,471290,14,683945,33,741.38,1258.62,1e3,4,5,13,8,2,1,0,6,5,0,0,21,1,1.82,39.99,54,1181009,29,1059171,120,737.96,1231.48,984.72,12,24,37,35,11,1,0,12,12,0,2,31,7,5.92,25.79,253,4274339,63,2010320,567,769.92,1280.08,1025,35,137,163,155,62,14,1,53,78,1,20,113,17,9100000

- CSV file

- 292 columns

- 30k rows

Samples & Features

1 row = 1 sample = 292 columns = 291 features + 1 target (price_doc)

price_doc: sale price (this is the target variable)

id: transaction id

timestamp: date of transaction

full_sq: total area in square meters, including loggias, balconies and other non-residential areas

life_sq: living area in square meters, excluding loggias, balconies and other non-residential areas

floor: for apartments, floor of the building

max_floor: number of floors in the building

material: wall material

build_year: year built

num_room: number of living rooms

kitch_sq: kitchen area

state: apartment condition

product_type: owner-occupier purchase or investment

sub_area: name of the district

- numeric (276)

- non-numeric (16)
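One quick way to see which columns need encoding before training is to test each raw CSV value with PHP's built-in `is_numeric`. The sketch below is only an illustration: the `splitColumns` helper and the shortened row are assumptions made for this example and are not part of PHP-ML. Checking a single row is also a simplification, since a column holding `NA` in that row would be counted as non-numeric.

```php
<?php

declare(strict_types=1);

/**
 * Split one raw CSV row into numeric and non-numeric column names.
 * Hypothetical helper for illustration only.
 *
 * @param string[] $columns column names
 * @param string[] $row     raw string values, in the same order as $columns
 *
 * @return array{numeric: string[], nonNumeric: string[]}
 */
function splitColumns(array $columns, array $row): array
{
    $result = ['numeric' => [], 'nonNumeric' => []];
    foreach ($columns as $i => $name) {
        // is_numeric() accepts strings such as "43" or "6.99893e-5"
        $key = is_numeric($row[$i]) ? 'numeric' : 'nonNumeric';
        $result[$key][] = $name;
    }

    return $result;
}

// A shortened version of the first row of the housing dataset.
$columns = ['id', 'timestamp', 'full_sq', 'life_sq', 'floor', 'product_type', 'sub_area'];
$row = ['1', '2011-08-20', '43', '27', '4', 'Investment', 'Bibirevo'];

$split = splitColumns($columns, $row);
print_r($split);
```

Here `timestamp`, `product_type` and `sub_area` end up in the non-numeric group, so they would need converting or filtering out before a regressor can use them.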

https://php-ml.org/

PHP-ML

Machine Learning library for PHP

Load dataset

use Phpml\Dataset\CsvDataset;

$dataset = new CsvDataset('housing.csv', 291);

$dataset->getSamples();

$dataset->getTargets();

$dataset->getColumnNames();

One sample

array(291) {

[0]=>

string(3) "635"

[1]=>

string(10) "2011-12-15"

[2]=>

string(2) "70"

[3]=>

string(2) "49"

[4]=>

string(2) "16"

...

string(2) "NA"

[11]=>

string(10) "Investment"

[12]=>

string(10) "Matushkino"

[13]=>

string(10) "4708040.47"

...

Feature selection

use Phpml\Preprocessing\ColumnFilter;

$datasetColumns = ['age', 'income', 'kids', 'beersPerWeek'];

$filterColumns = ['income', 'beersPerWeek'];

$samples = [

[21, 100000, 1, 4],

[35, 120000, 0, 12],

[33, 200000, 4, 0],

];

$filter = new ColumnFilter($datasetColumns, $filterColumns);

$filter->transform($samples);

/*

[[100000, 4], [120000, 12], [200000, 0]]

*/

Number conversion

use Phpml\Preprocessing\NumberConverter;

$samples = [

['1', '-4'],

['2.0', 3.0],

['3', '112.5'],

['5', '0.0004']

];

$targets = ['1', '1', '2', '2'];

$converter = new NumberConverter();

$converter->transform($samples, $targets);

final class NumberConverter implements Preprocessor

{

/**

* @param mixed $nonNumericPlaceholder

*/

public function __construct(

bool $transformTargets = false,

$nonNumericPlaceholder = null

)

}

Missing values

use Phpml\Preprocessing\Imputer;

use Phpml\Preprocessing\Imputer\Strategy\MeanStrategy;

$samples = [

[1, null, 3, 4],

[4, 3, 2, 1],

[null, 6, 7, 8],

[8, 7, null, 5],

];

$imputer = new Imputer(null, new MeanStrategy());

$imputer->fit($samples);

$imputer->transform($samples);

/* With the default column axis, each missing value is replaced by its column mean:

[

[1, 5.33, 3, 4],

[4, 3, 2, 1],

[4.33, 6, 7, 8],

[8, 7, 4, 5],

]*/

Model selection

use Phpml\Regression\DecisionTreeRegressor;

$model = new DecisionTreeRegressor($maxDepth = 5);

$model->train($samples, $targets);

$predictions = $model->predict($samples);

Validation

array(10) {

[0]=>

string(7) "5850000"

[1]=>

string(7) "6000000"

[2]=>

string(7) "5700000"

[3]=>

string(8) "13100000"

[4]=>

string(8) "16331452"

[5]=>

string(7) "9100000"

[6]=>

string(7) "5500000"

[7]=>

string(7) "2000000"

[8]=>

string(7) "5300000"

[9]=>

string(7) "2000000"

}

array(10) {

[0]=>

float(5478002.4543098)

[1]=>

float(4613047.2807292)

[2]=>

float(5478002.4543098)

[3]=>

float(8770788.9539153)

[4]=>

float(8770788.9539153)

[5]=>

float(6645729.5835831)

[6]=>

float(4613047.2807292)

[7]=>

float(5478002.4543098)

[8]=>

float(4613047.2807292)

[9]=>

float(4613047.2807292)

}

Train dataset != Test dataset
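Scoring a model on the same rows it was trained on says little about how it handles unseen data, so part of the data is usually held back for testing. A minimal sketch of such a hold-out split in plain PHP follows. The `holdOutSplit` helper, its parameters and the seed are assumptions made for illustration and are not PHP-ML's own API. The six rows are the `full_sq` and `price_doc` values of the samples shown earlier.

```php
<?php

declare(strict_types=1);

/**
 * Shuffle samples and targets together, then split them into train and test parts.
 * Hypothetical helper; $seed seeds the generator used by shuffle().
 */
function holdOutSplit(array $samples, array $targets, float $testSize = 0.3, int $seed = 42): array
{
    $indices = array_keys($samples);
    mt_srand($seed);
    shuffle($indices);

    $testCount = (int) round(count($indices) * $testSize);
    $testIndices = array_slice($indices, 0, $testCount);
    $trainIndices = array_slice($indices, $testCount);

    // Pick the rows at the given indices, keeping samples and targets aligned.
    $pick = static function (array $source, array $keys): array {
        return array_map(static fn ($key) => $source[$key], $keys);
    };

    return [
        'trainSamples' => $pick($samples, $trainIndices),
        'trainTargets' => $pick($targets, $trainIndices),
        'testSamples' => $pick($samples, $testIndices),
        'testTargets' => $pick($targets, $testIndices),
    ];
}

$samples = [[43], [34], [43], [89], [77], [67]];
$targets = [5850000, 6000000, 5700000, 13100000, 16331452, 9100000];

$split = holdOutSplit($samples, $targets, 1 / 3);
printf(
    "train: %d rows, test: %d rows\n",
    count($split['trainSamples']),
    count($split['testSamples'])
);
```

The model is then trained on the train part only and scored on the test part.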

Metrics

Classification

accuracy_score

balanced_accuracy_score

average_precision_score

brier_score_loss

f1_score

log_loss

precision_score

recall_score

jaccard_score

roc_auc_score

Clustering

adjusted_mutual_info_score

adjusted_rand_score

completeness_score

fowlkes_mallows_score

homogeneity_score

mutual_info_score

normalized_mutual_info_score

v_measure_score

Regression

explained_variance_score

max_error

mean_absolute_error

mean_squared_error

mean_squared_log_error

median_absolute_error

r2_score

MSLE: Mean squared logarithmic error

This metric works best when the targets grow exponentially, such as population counts or the average sales of a commodity over a span of years.
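In its usual form MSLE is the mean of the squared differences between log(1 + actual) and log(1 + predicted), so it penalizes relative rather than absolute errors. A small sketch of that definition in plain PHP is shown below. The function name `meanSquaredLogError` is an assumption for this example and is not taken from PHP-ML. The sample values are the first three actual prices and predictions from the validation output.

```php
<?php

declare(strict_types=1);

/**
 * Mean squared logarithmic error: mean of (log(1 + y) - log(1 + y'))^2.
 * Illustrative helper; expects non-negative values.
 */
function meanSquaredLogError(array $actual, array $predicted): float
{
    $n = count($actual);
    if ($n === 0 || $n !== count($predicted)) {
        throw new InvalidArgumentException('Both arrays must be non-empty and of equal length.');
    }

    $sum = 0.0;
    foreach ($actual as $i => $y) {
        // log1p(x) computes log(1 + x)
        $sum += (log1p((float) $y) - log1p((float) $predicted[$i])) ** 2;
    }

    return $sum / $n;
}

$actual = [5850000, 6000000, 5700000];
$predicted = [5478002.4543098, 4613047.2807292, 5478002.4543098];

echo 'MSLE: ' . meanSquaredLogError($actual, $predicted) . PHP_EOL;
```

Because of the logarithm, predicting 200 for a true value of 100 costs about as much as predicting 2,000,000 for a true value of 1,000,000, which suits prices that span several orders of magnitude.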

Metrics

MSLE: Mean squared logarithmic error

Best on Kaggle:

GradientBoosting

Test dataset: ~0.30087

Our model:

Train dataset: ~0.41242

Test dataset: ~0.31355

Persistence

use Phpml\ModelManager;

$modelManager = new ModelManager();

$modelManager

->saveToFile($model, 'housing-model.phpml');

$model = $modelManager

->restoreFromFile('housing-model.phpml');

$model->predict([$sample]);

Workflow

use Phpml\Regression\DecisionTreeRegressor;

use Phpml\Preprocessing\NumberConverter;

use Phpml\Pipeline;

use Phpml\Preprocessing\ColumnFilter;

use Phpml\Preprocessing\Imputer;

use Phpml\Preprocessing\Imputer\Strategy\MeanStrategy;

$model = new Pipeline([

new ColumnFilter(

$trainDataset->getColumnNames(),

['life_sq', 'full_sq', 'floor', 'build_year']

),

new NumberConverter(true),

new Imputer(null, new MeanStrategy()),

], new DecisionTreeRegressor(5));

$model->train($samples, $targets);

Feature union

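use Phpml\FeatureUnion;

use Phpml\Pipeline;

use Phpml\Preprocessing\ColumnFilter;

use Phpml\Preprocessing\Imputer;

use Phpml\Preprocessing\Imputer\Strategy\MeanStrategy;

use Phpml\Preprocessing\LambdaTransformer;

use Phpml\Preprocessing\NumberConverter;

use Phpml\Preprocessing\OneHotEncoder;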

$union = new FeatureUnion([

new Pipeline([

new ColumnFilter($columns, ['sex']),

new LambdaTransformer(function (array $sample) {

return $sample[0];

}),

new OneHotEncoder(),

]),

new Pipeline([

new ColumnFilter($columns, ['age', 'income']),

new NumberConverter(),

new Imputer(null, new MeanStrategy()),

]),

]);

$union->fitAndTransform($samples, $targets);

Deployment

AWS Lambda

mnapoli/bref

curl -X POST \

https://3qv1qfxlmj.execute-api.us-east-1.amazonaws.com/Prod/housing \

-H 'content-type: multipart/form-data;' \

-F life_sq=22 \

-F full_sq=30 \

-F floor=1 \

-F build_year=2000

php-ai/php-ml-lambda-examples

Deployment

php-ai/php-ml-lambda-examples

$model = (new ModelManager())

->restoreFromFile('housing.phpml');

echo json_encode(['price' => round($model->predict([[

$_POST['life_sq'],

$_POST['full_sq'],

$_POST['floor'],

$_POST['build_year'],

]])[0])]);

$trainDataset = new CsvDataset(__DIR__.'/../data/housing-train.csv', 291);

$samples = $trainDataset->getSamples();

$targets = $trainDataset->getTargets();

$model = new Pipeline([

new ColumnFilter($trainDataset->getColumnNames(), ['life_sq', 'full_sq', 'floor', 'build_year']),

new NumberConverter(true),

new Imputer(null, new MeanStrategy()),

], new DecisionTreeRegressor());

$model->train($samples, $targets);

echo 'MSLE: ' . Regression::meanSquaredLogarithmicError($targets, $model->predict($samples)) . PHP_EOL;

$testDataset = new CsvDataset(__DIR__.'/../data/housing-test.csv', 290);

$predicted = $model->predict($testDataset->getSamples());

$lines = ['id,price_doc'];

foreach ($testDataset->getSamples() as $index => $sample) {

$lines[] = sprintf('%s,%s', $sample[0], $predicted[$index]);

}

file_put_contents(__DIR__.'/../data/housing-sub.csv', implode(PHP_EOL, $lines));

$modelManager = new ModelManager();

$modelManager->saveToFile($model, __DIR__.'/../data/housing-model.phpml');

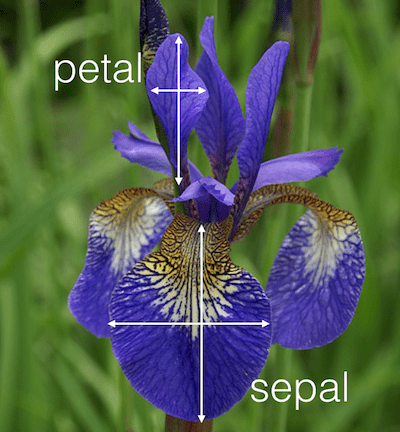

Types of problems

Classification

5.1,3.8,1.6,0.2,setosa

4.6,3.2,1.4,0.2,setosa

5.3,3.7,1.5,0.2,setosa

5,3.3,1.4,0.2,setosa

7,3.2,4.7,1.4,versicolor

6.4,3.2,4.5,1.5,versicolor

6.9,3.1,4.9,1.5,versicolor

5.5,2.3,4,1.3,versicolor

5.9,3,5.1,1.8,virginica

5.1,3.5,1.4,0.2,setosa

4.9,3,1.4,0.2,setosa

4.7,3.2,1.3,0.2,setosa

4.6,3.1,1.5,0.2,setosa

5,3.6,1.4,0.2,setosa

5.4,3.9,1.7,0.4,setosa

4.6,3.4,1.4,0.3,setosa

5,3.4,1.5,0.2,setosa

4.4,2.9,1.4,0.2,setosa

4.9,3.1,1.5,0.1,setosa

5.4,3.7,1.5,0.2,setosa

4.8,3.4,1.6,0.2,setosa

4.8,3,1.4,0.1,setosa

4.3,3,1.1,0.1,setosa

5.8,4,1.2,0.2,setosa

5.7,4.4,1.5,0.4,setosa

5.4,3.9,1.3,0.4,setosa

5.1,3.5,1.4,0.3,setosa

5.7,3.8,1.7,0.3,setosa

5.1,3.8,1.5,0.3,setosa

5.4,3.4,1.7,0.2,setosa

5.1,3.7,1.5,0.4,setosa

4.6,3.6,1,0.2,setosa

5.1,3.3,1.7,0.5,setosa

4.8,3.4,1.9,0.2,setosa

5,3,1.6,0.2,setosa

5,3.4,1.6,0.4,setosa

5.2,3.5,1.5,0.2,setosa

5.2,3.4,1.4,0.2,setosa

4.7,3.2,1.6,0.2,setosa

4.8,3.1,1.6,0.2,setosa

5.4,3.4,1.5,0.4,setosa

5.2,4.1,1.5,0.1,setosa

5.5,4.2,1.4,0.2,setosa

4.9,3.1,1.5,0.1,setosa

5,3.2,1.2,0.2,setosa

5.5,3.5,1.3,0.2,setosa

4.9,3.1,1.5,0.1,setosa

4.4,3,1.3,0.2,setosa

5.1,3.4,1.5,0.2,setosa

5,3.5,1.3,0.3,setosa

4.5,2.3,1.3,0.3,setosa

4.4,3.2,1.3,0.2,setosa

5,3.5,1.6,0.6,setosa

5.1,3.8,1.9,0.4,setosa

4.8,3,1.4,0.3,setosa

5.1,3.8,1.6,0.2,setosa

4.6,3.2,1.4,0.2,setosa

5.3,3.7,1.5,0.2,setosa

5,3.3,1.4,0.2,setosa

7,3.2,4.7,1.4,versicolor

6.4,3.2,4.5,1.5,versicolor

6.9,3.1,4.9,1.5,versicolor

5.5,2.3,4,1.3,versicolor

6.5,2.8,4.6,1.5,versicolor

5.7,2.8,4.5,1.3,versicolor

6.3,3.3,4.7,1.6,versicolor

4.9,2.4,3.3,1,versicolor

6.6,2.9,4.6,1.3,versicolor

5.2,2.7,3.9,1.4,versicolor

5,2,3.5,1,versicolor

5.9,3,4.2,1.5,versicolor

6,2.2,4,1,versicolor

6.1,2.9,4.7,1.4,versicolor

5.6,2.9,3.6,1.3,versicolor

6.7,3.1,4.4,1.4,versicolor

5.6,3,4.5,1.5,versicolor

5.8,2.7,4.1,1,versicolor

6.2,2.2,4.5,1.5,versicolor

5.6,2.5,3.9,1.1,versicolor

5.9,3.2,4.8,1.8,versicolor

6.1,2.8,4,1.3,versicolor

6.3,2.5,4.9,1.5,versicolor

6.1,2.8,4.7,1.2,versicolor

6.4,2.9,4.3,1.3,versicolor

6.6,3,4.4,1.4,versicolor

6.8,2.8,4.8,1.4,versicolor

6.7,3,5,1.7,versicolor

6,2.9,4.5,1.5,versicolor

5.7,2.6,3.5,1,versicolor

5.5,2.4,3.8,1.1,versicolor

5.5,2.4,3.7,1,versicolor

5.8,2.7,3.9,1.2,versicolor

6,2.7,5.1,1.6,versicolor

5.4,3,4.5,1.5,versicolor

6,3.4,4.5,1.6,versicolor

6.7,3.1,4.7,1.5,versicolor

6.3,2.3,4.4,1.3,versicolor

5.6,3,4.1,1.3,versicolor

5.5,2.5,4,1.3,versicolor

5.5,2.6,4.4,1.2,versicolor

6.1,3,4.6,1.4,versicolor

5.8,2.6,4,1.2,versicolor

5,2.3,3.3,1,versicolor

5.6,2.7,4.2,1.3,versicolor

5.7,3,4.2,1.2,versicolor

5.7,2.9,4.2,1.3,versicolor

6.2,2.9,4.3,1.3,versicolor

5.1,2.5,3,1.1,versicolor

5.7,2.8,4.1,1.3,versicolor

6.3,3.3,6,2.5,virginica

5.8,2.7,5.1,1.9,virginica

7.1,3,5.9,2.1,virginica

6.3,2.9,5.6,1.8,virginica

6.5,3,5.8,2.2,virginica

7.6,3,6.6,2.1,virginica

4.9,2.5,4.5,1.7,virginica

7.3,2.9,6.3,1.8,virginica

6.7,2.5,5.8,1.8,virginica

7.2,3.6,6.1,2.5,virginica

6.5,3.2,5.1,2,virginica

6.4,2.7,5.3,1.9,virginica

6.8,3,5.5,2.1,virginica

5.7,2.5,5,2,virginica

5.8,2.8,5.1,2.4,virginica

6.4,3.2,5.3,2.3,virginica

6.5,3,5.5,1.8,virginica

7.7,3.8,6.7,2.2,virginica

7.7,2.6,6.9,2.3,virginica

6,2.2,5,1.5,virginica

6.9,3.2,5.7,2.3,virginica

5.6,2.8,4.9,2,virginica

7.7,2.8,6.7,2,virginica

6.3,2.7,4.9,1.8,virginica

6.7,3.3,5.7,2.1,virginica

7.2,3.2,6,1.8,virginica

6.2,2.8,4.8,1.8,virginica

6.1,3,4.9,1.8,virginica

6.4,2.8,5.6,2.1,virginica

7.2,3,5.8,1.6,virginica

7.4,2.8,6.1,1.9,virginica

7.9,3.8,6.4,2,virginica

6.4,2.8,5.6,2.2,virginica

6.3,2.8,5.1,1.5,virginica

6.1,2.6,5.6,1.4,virginica

7.7,3,6.1,2.3,virginica

6.3,3.4,5.6,2.4,virginica

6.4,3.1,5.5,1.8,virginica

6,3,4.8,1.8,virginica

6.9,3.1,5.4,2.1,virginica

6.7,3.1,5.6,2.4,virginica

6.9,3.1,5.1,2.3,virginica

5.8,2.7,5.1,1.9,virginica

6.8,3.2,5.9,2.3,virginica

6.7,3.3,5.7,2.5,virginica

6.7,3,5.2,2.3,virginica

6.3,2.5,5,1.9,virginica

6.5,3,5.2,2,virginica

6.2,3.4,5.4,2.3,virginica

5.9,3,5.1,1.8,virginica

Classification

use Phpml\Classification\KNearestNeighbors;

use Phpml\CrossValidation\RandomSplit;

use Phpml\Dataset\Demo\IrisDataset;

use Phpml\Metric\Accuracy;

$dataset = new IrisDataset();

$split = new RandomSplit($dataset);

$classifier = new KNearestNeighbors();

$classifier->train(

$split->getTrainSamples(),

$split->getTrainLabels()

);

$predicted = $classifier->predict($split->getTestSamples());

echo sprintf("Accuracy: %s",

Accuracy::score($split->getTestLabels(), $predicted)

);
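To make the idea behind k-nearest neighbors concrete: a new sample gets the label that is most common among the k training samples closest to it. The plain-PHP sketch below illustrates this with Euclidean distance and six rows from the Iris data. The `knnPredict` helper is written for this example only and is not how PHP-ML implements `KNearestNeighbors` internally.

```php
<?php

declare(strict_types=1);

/**
 * Classify one sample by majority vote among its $k nearest training samples
 * (Euclidean distance). Illustrative helper, not the PHP-ML implementation.
 */
function knnPredict(array $trainSamples, array $trainLabels, array $sample, int $k = 3): string
{
    $distances = [];
    foreach ($trainSamples as $i => $trainSample) {
        $sum = 0.0;
        foreach ($trainSample as $j => $value) {
            $sum += ($value - $sample[$j]) ** 2;
        }
        $distances[$i] = sqrt($sum);
    }

    asort($distances); // nearest first, original indices preserved as keys

    $votes = [];
    foreach (array_slice(array_keys($distances), 0, $k) as $i) {
        $label = $trainLabels[$i];
        $votes[$label] = ($votes[$label] ?? 0) + 1;
    }
    arsort($votes); // label with the most votes first

    return (string) array_key_first($votes);
}

$trainSamples = [
    [5.1, 3.5, 1.4, 0.2], [4.9, 3.0, 1.4, 0.2],
    [7.0, 3.2, 4.7, 1.4], [6.4, 3.2, 4.5, 1.5],
    [6.3, 3.3, 6.0, 2.5], [5.8, 2.7, 5.1, 1.9],
];
$trainLabels = ['setosa', 'setosa', 'versicolor', 'versicolor', 'virginica', 'virginica'];

echo knnPredict($trainSamples, $trainLabels, [5.0, 3.6, 1.4, 0.2]) . PHP_EOL;
echo knnPredict($trainSamples, $trainLabels, [6.5, 3.0, 5.8, 2.2]) . PHP_EOL;
```

There is no real training step here: the model is simply the stored samples, which is why prediction gets slower as the dataset grows.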

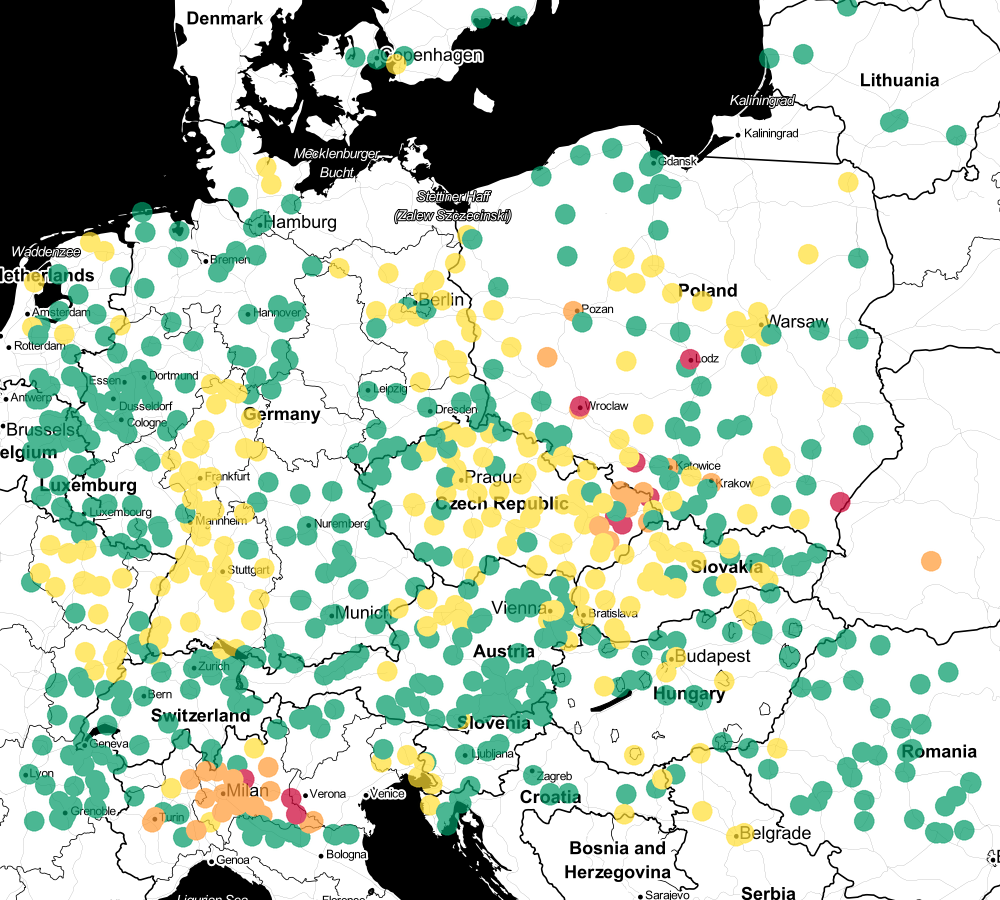

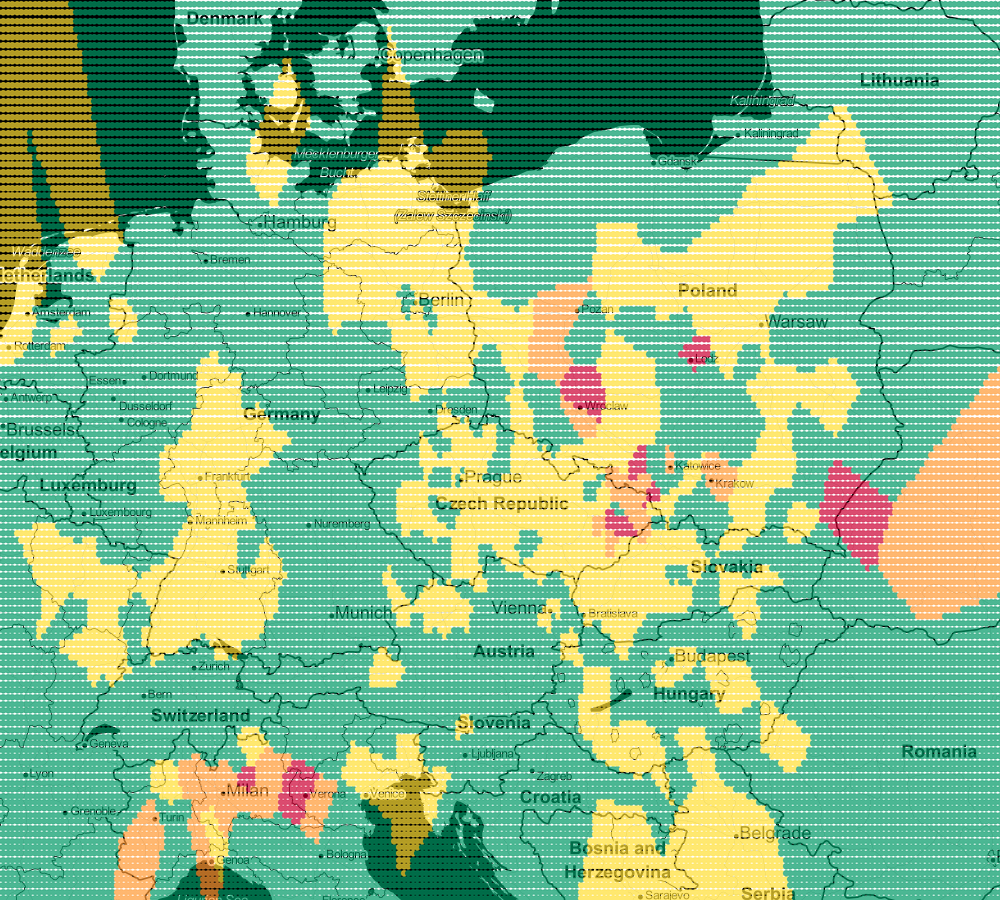

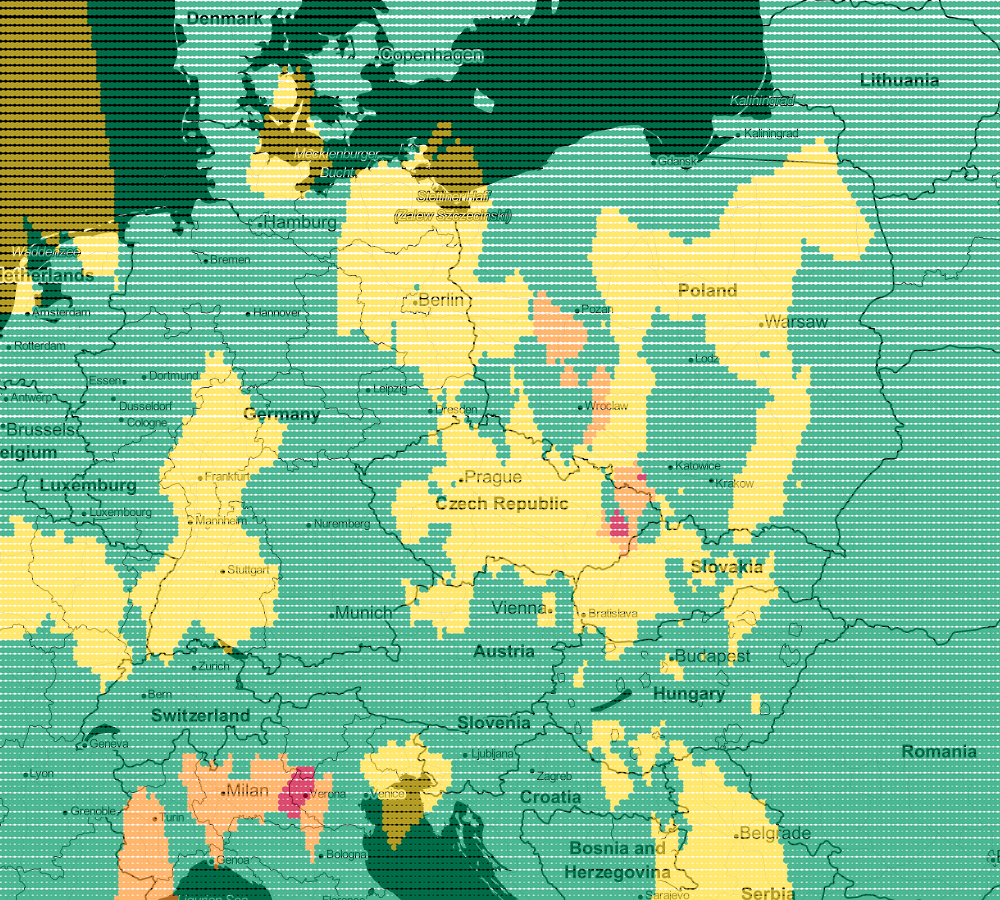

Classification

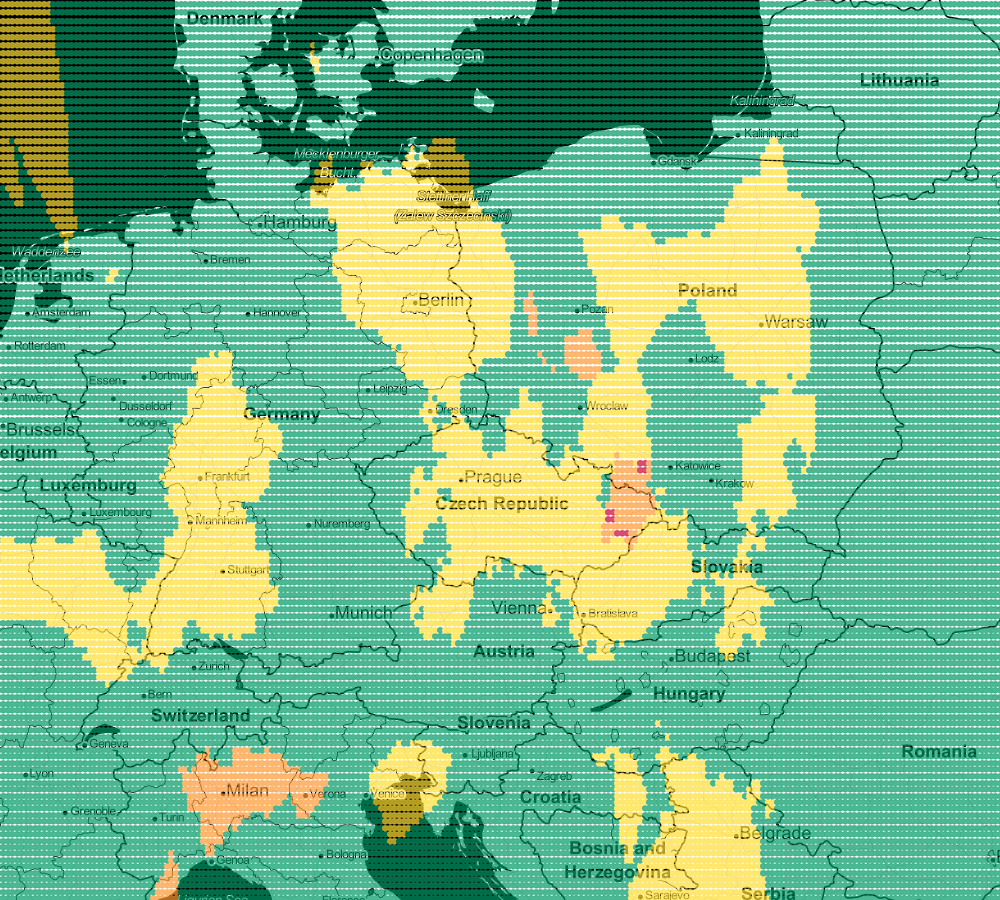

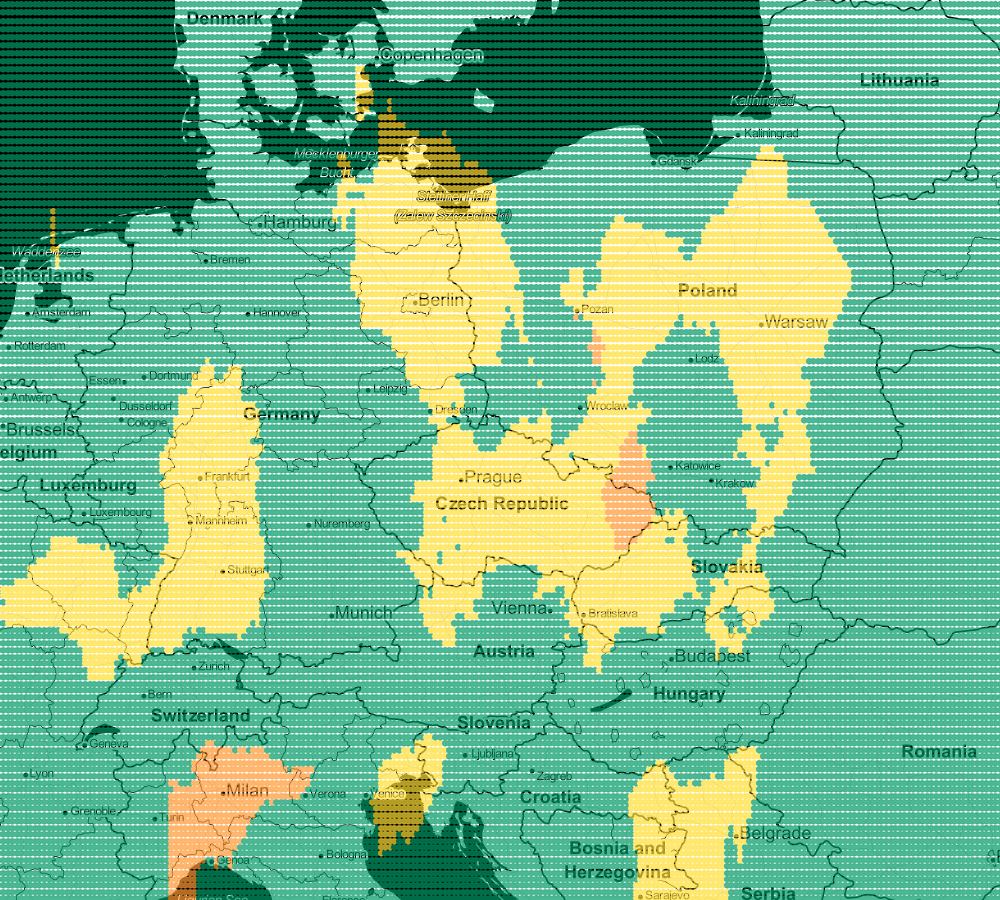

51.403007;7.208546;good

52.2688736;10.5267696;good

52.235083;5.181552;good

47.5292;9.9267;good

47.9704873;17.7852936;moderate

51.4625;13.526666666667;moderate

48.7344444;19.1128073;good

46.815277777778;9.8558333333333;good

51.1506269;14.968707;good

47.041668;15.433056;good

50.303207830366;6.0017362891249;good

47.178333333333;14.676666666667;good

47.102253726073;9.3375502158063;good

47.5818083;12.1724111;good

50.97;9.8;moderate

47.146155;5.551039;good

51.64265556;15.12780833;good

54.353333333333;18.635277777778;good

50.5425641;12.7792228;good

52.234504;6.919494;moderate

50.467528;13.412696;good

51.233652208391;5.1639788468472;good

52.14325;19.233225;good

45.14254358;10.04384767;unhealthy for sensitive

49.228472;17.675083;unhealthy for sensitive

50.80425833;8.76932778;moderate

48.396866667006;9.9789750003815;moderate

53.4708393;7.4848308;good

47.871158;17.273464;good

48.33472;16.729445;good

47.409443;15.253333;good

Visualization

$minLat = 41.34343606848294;

$maxLat = 57.844750992891;

$minLng = -16.040039062500004;

$maxLng = 29.311523437500004;

$step = 0.1;

$k = 3;

$dataset = new CsvDataset(__DIR__.'/../data/air.csv', 2, false, ';');

$estimator = new KNearestNeighbors($k);

$estimator->train($dataset->getSamples(), $dataset->getTargets());

$lines = [];

for ($lat=$minLat; $lat<$maxLat; $lat+=$step) {

for ($lng=$minLng; $lng<$maxLng; $lng+=$step) {

$lines[] = sprintf('%s;%s;%s', $lat, $lng, $estimator->predict([[$lat, $lng]])[0]);

}

}

file_put_contents(__DIR__.'/../data/airVis.csv', implode(PHP_EOL, $lines));

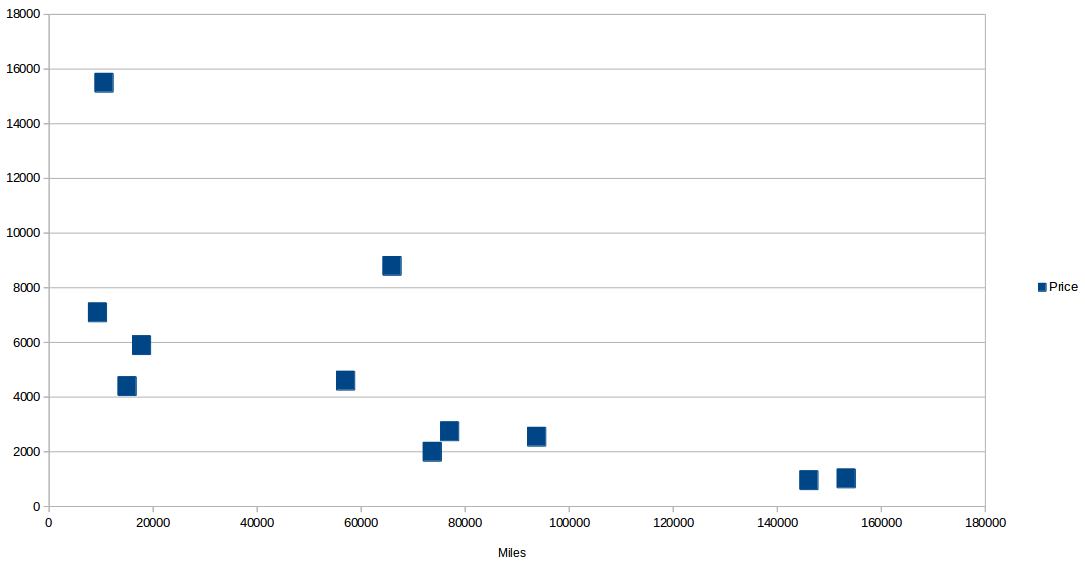

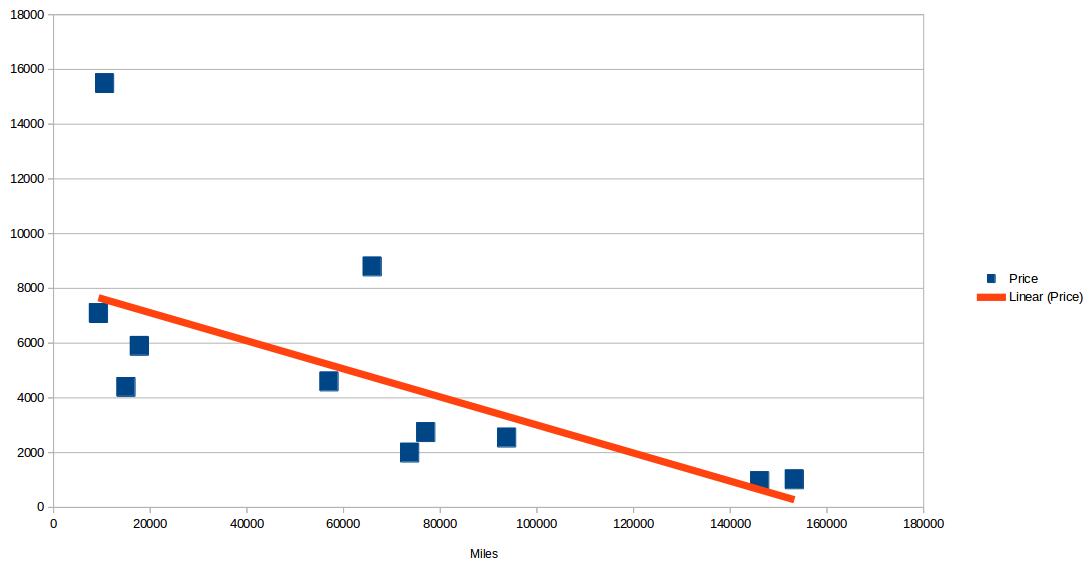

Regression

Miles Price

9300 7100

10565 15500

15000 4400

15000 4400

17764 5900

57000 4600

65940 8800

73676 2000

77006 2750

93739 2550

146088 960

153260 1025

Regression

use Phpml\Regression\LeastSquares;

$samples = [[9300], [10565], [15000], [15000], [17764], [57000], [65940], [73676], [77006], [93739], [146088], [153260]];

$targets = [7100, 15500, 4400, 4400, 5900, 4600, 8800, 2000, 2750, 2550, 960, 1025];

$regression = new LeastSquares();

$regression->train($samples, $targets);

$regression->getCoefficients();

$regression->getIntercept();

$price = $regression->predict([35000]);
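For a single feature, least squares has a closed form: the slope is the covariance of x and y divided by the variance of x, and the intercept is mean(y) minus slope times mean(x). The sketch below applies that formula to the miles/price table in plain PHP. The `fitLine` helper is an assumption made for illustration; PHP-ML's `LeastSquares` also handles multiple features, which this sketch does not.

```php
<?php

declare(strict_types=1);

/**
 * Fit y = intercept + slope * x by ordinary least squares (one feature only).
 * Illustrative helper, not the PHP-ML implementation.
 *
 * @return array{slope: float, intercept: float}
 */
function fitLine(array $x, array $y): array
{
    $n = count($x);
    $meanX = array_sum($x) / $n;
    $meanY = array_sum($y) / $n;

    $covariance = 0.0;
    $variance = 0.0;
    foreach ($x as $i => $xi) {
        $covariance += ($xi - $meanX) * ($y[$i] - $meanY);
        $variance += ($xi - $meanX) ** 2;
    }

    $slope = $covariance / $variance;

    return ['slope' => $slope, 'intercept' => $meanY - $slope * $meanX];
}

$miles = [9300, 10565, 15000, 15000, 17764, 57000, 65940, 73676, 77006, 93739, 146088, 153260];
$prices = [7100, 15500, 4400, 4400, 5900, 4600, 8800, 2000, 2750, 2550, 960, 1025];

$line = fitLine($miles, $prices);
$predicted = $line['intercept'] + $line['slope'] * 35000;

printf("slope: %f, intercept: %f\n", $line['slope'], $line['intercept']);
printf("predicted price at 35000 miles: %f\n", $predicted);
```

The slope comes out negative, matching the intuition that price drops as mileage grows.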

Clustering

41.793935909;-87.625680278

41.907274031;-87.722791892

41.851296671;-87.706458801

41.775963639;-87.615517372

41.794879;-87.63179049

41.799461412;-87.596206318

41.989599401;-87.660256868

42.019398729;-87.67543958

42.004487311;-87.679846425

42.009087258;-87.690171862

41.799518433;-87.590997844

41.875039579;-87.743690267

41.875198392;-87.717479393

41.78640901;-87.649813179

41.766229647;-87.577855722

41.900062396;-87.620884259

41.744708666;-87.616371298

41.7737319;-87.651916442

41.692289426;-87.647852131

41.874236291;-87.674657583

41.761450225;-87.623211368

41.831030756;-87.624424247

41.974853031;-87.713545123

41.974605662;-87.660819291

41.815419529;-87.702711186

41.750341521;-87.657371388

41.854659562;-87.716303651

41.834650408;-87.62843175

41.793435216;-87.70876482

41.894904052;-87.626344479

41.894993069;-87.746918939

41.90984267;-87.729545576

41.967477901;-87.739224006

41.87522978;-87.728549617

41.765946803;-87.595563723

41.908222431;-87.679234761

41.882757453;-87.709603286

41.876121224;-87.641003973

41.809372853;-87.704024967

41.977043475;-87.76899404

41.943664148;-87.646353396

41.759350571;-87.623168543

41.693840666;-87.613406835

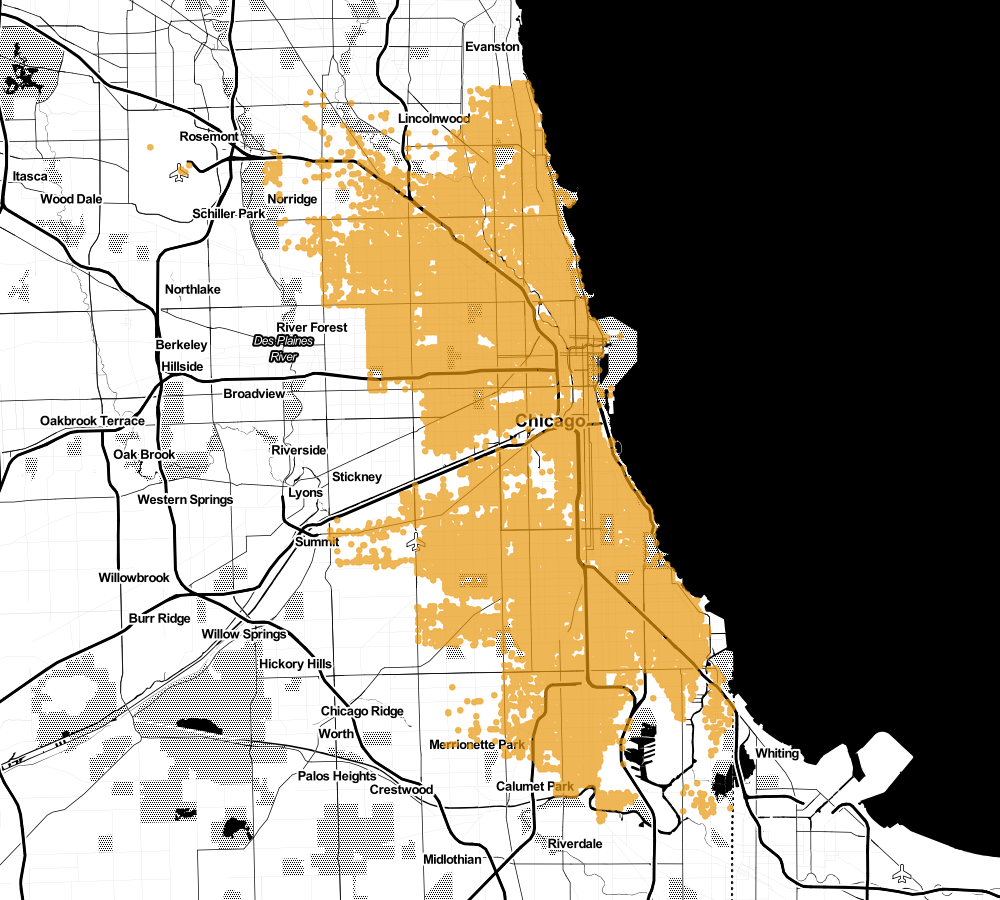

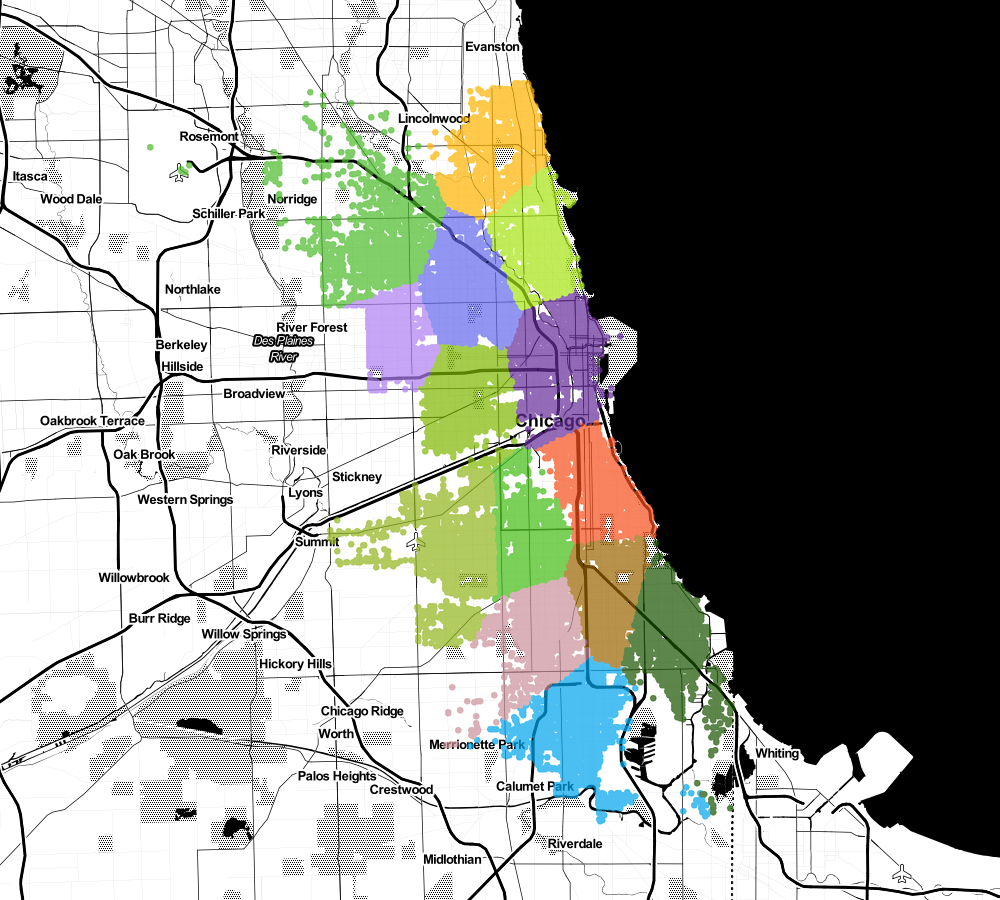

41.964351639;-87.661000678

Clustering
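Before the library call on this slide, here is a rough plain-PHP sketch of what k-means does (Lloyd's algorithm). It is not php-ml's implementation: the function names are hypothetical, and starting the centroids at the first `$k` samples is a simplification chosen to keep the run reproducible, whereas real implementations usually randomise the start.

```php
<?php
declare(strict_types=1);

/** Squared Euclidean distance between two points of equal dimension. */
function squaredDistance(array $a, array $b): float
{
    $sum = 0.0;
    foreach ($a as $i => $value) {
        $sum += ($value - $b[$i]) ** 2;
    }
    return $sum;
}

/** Group $samples into $k clusters; returns a list of clusters (lists of points). */
function kMeans(array $samples, int $k, int $maxIterations = 100): array
{
    $centroids = array_slice($samples, 0, $k); // simplified, deterministic start
    $clusters = [];

    for ($iteration = 0; $iteration < $maxIterations; $iteration++) {
        // Assignment step: attach every sample to its closest centroid.
        $clusters = array_fill(0, $k, []);
        foreach ($samples as $sample) {
            $best = 0;
            foreach ($centroids as $c => $centroid) {
                if (squaredDistance($sample, $centroid) < squaredDistance($sample, $centroids[$best])) {
                    $best = $c;
                }
            }
            $clusters[$best][] = $sample;
        }

        // Update step: move each centroid to the mean of its members.
        $updated = $centroids;
        foreach ($clusters as $c => $members) {
            if ($members === []) {
                continue; // keep the old centroid for an empty cluster
            }
            $dimensions = count($members[0]);
            for ($d = 0; $d < $dimensions; $d++) {
                $updated[$c][$d] = array_sum(array_column($members, $d)) / count($members);
            }
        }

        if ($updated === $centroids) {
            break; // centroids stopped moving: converged
        }
        $centroids = $updated;
    }

    return $clusters;
}

$clusters = kMeans([[1, 1], [8, 7], [1, 2], [7, 8], [2, 1], [8, 9]], 2);
print_r($clusters);
```

On these six points the sketch ends with the same two groups that the slide lists as the result of `KMeans`.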

use Phpml\Clustering\KMeans;

$samples = [

[1, 1], [8, 7], [1, 2],

[7, 8], [2, 1], [8, 9]

];

$kmeans = new KMeans(2);

$clusters = $kmeans->cluster($samples);

$clusters = [

[[1, 1], [1, 2], [2, 1]],

[[8, 7], [7, 8], [8, 9]]

];

Feature Extraction
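Token counting, the idea behind the vectorizer used on this slide, can be sketched without the library: build a vocabulary, then count how often each vocabulary entry occurs in every document. The function names are hypothetical, and ordering the vocabulary by first appearance is an assumption; it is consistent with the counts shown on the slide.

```php
<?php
declare(strict_types=1);

/** Build a token => column index map, in order of first appearance. */
function buildVocabulary(array $documents): array
{
    $vocabulary = [];
    foreach ($documents as $document) {
        foreach (preg_split('/\s+/', $document, -1, PREG_SPLIT_NO_EMPTY) as $token) {
            if (!isset($vocabulary[$token])) {
                $vocabulary[$token] = count($vocabulary);
            }
        }
    }
    return $vocabulary;
}

/** Turn each document into a vector of token counts over the vocabulary. */
function countTokens(array $documents, array $vocabulary): array
{
    $vectors = [];
    foreach ($documents as $document) {
        $vector = array_fill(0, count($vocabulary), 0);
        foreach (preg_split('/\s+/', $document, -1, PREG_SPLIT_NO_EMPTY) as $token) {
            if (isset($vocabulary[$token])) {
                $vector[$vocabulary[$token]]++; // tokens outside the vocabulary are ignored
            }
        }
        $vectors[] = $vector;
    }
    return $vectors;
}

$documents = [
    'Lorem ipsum dolor sit amet dolor',
    'Mauris placerat ipsum dolor',
    'Mauris diam eros fringilla diam',
];

$vocabulary = buildVocabulary($documents);
$vectors = countTokens($documents, $vocabulary);
print_r($vectors);
```

Each row has one column per vocabulary entry, so every document becomes a fixed-length numeric vector that a classifier can consume.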

use Phpml\FeatureExtraction\TokenCountVectorizer;

use Phpml\Tokenization\WhitespaceTokenizer;

$samples = [

'Lorem ipsum dolor sit amet dolor',

'Mauris placerat ipsum dolor',

'Mauris diam eros fringilla diam',

];

$vectorizer = new TokenCountVectorizer(new WhitespaceTokenizer());

$vectorizer->fit($samples);

$vectorizer->getVocabulary();

$vectorizer->transform($samples);

$tokensCounts = [

[0 => 1, 1 => 1, 2 => 2, 3 => 1, 4 => 1, 5 => 0, 6 => 0, 7 => 0, 8 => 0, 9 => 0],

[0 => 0, 1 => 1, 2 => 1, 3 => 0, 4 => 0, 5 => 1, 6 => 1, 7 => 0, 8 => 0, 9 => 0],

[0 => 0, 1 => 0, 2 => 0, 3 => 0, 4 => 0, 5 => 1, 6 => 0, 7 => 2, 8 => 1, 9 => 1],

];

Model selection
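The difference between a plain random split and a stratified one is that the stratified split shuffles within each label, so both parts keep roughly the same label proportions. A minimal plain-PHP sketch of that idea follows; `stratifiedSplit` and its return keys are hypothetical names, and this is not how php-ml is guaranteed to implement it.

```php
<?php
declare(strict_types=1);

/**
 * Split samples into train and test parts while keeping the share of every
 * label roughly equal in both. Hypothetical helper, not part of php-ml.
 */
function stratifiedSplit(array $samples, array $labels, float $testSize, int $seed): array
{
    mt_srand($seed); // fixed seed so the shuffle is repeatable

    // Group sample indices by their label.
    $indicesByLabel = [];
    foreach ($labels as $index => $label) {
        $indicesByLabel[$label][] = $index;
    }

    $split = ['trainSamples' => [], 'trainLabels' => [], 'testSamples' => [], 'testLabels' => []];
    foreach ($indicesByLabel as $indices) {
        shuffle($indices); // shuffle within one label only
        $testCount = (int) round(count($indices) * $testSize);
        foreach ($indices as $position => $index) {
            $part = $position < $testCount ? 'test' : 'train';
            $split[$part . 'Samples'][] = $samples[$index];
            $split[$part . 'Labels'][] = $labels[$index];
        }
    }

    return $split;
}

$samples = [[1], [2], [3], [4], [5], [6], [7], [8]];
$labels  = ['a', 'a', 'a', 'a', 'b', 'b', 'b', 'b'];

$split = stratifiedSplit($samples, $labels, 0.5, 42);
print_r(array_count_values($split['testLabels']));
```

Which samples land in which part depends on the seed, but the label counts per part do not.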

use Phpml\CrossValidation\RandomSplit;

use Phpml\CrossValidation\StratifiedRandomSplit;

use Phpml\Dataset\ArrayDataset;

$dataset = new ArrayDataset(

$samples = [[1], [2], [3], [4]],

$labels = ['a', 'a', 'b', 'b']

);

$randomSplit = new RandomSplit($dataset, 0.5);

$dataset = new ArrayDataset(

$samples = [[1], [2], [3], [4], [5], [6], [7], [8]],

$labels = ['a', 'a', 'a', 'a', 'b', 'b', 'b', 'b']

);

$split = new StratifiedRandomSplit($dataset, 0.5);

- Feature Selection

- Dimensionality Reduction

- Datasets

- Models Management

- Neural Network

- Metric

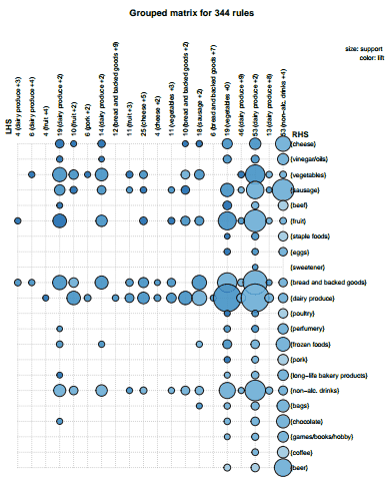

- Association Rule Learning

- Ensemble Algorithms

- Math

https://github.com/php-ai/php-ml

Summary

ML is all about the process

- Define a problem

- Gather your data

- Prepare your data for ML

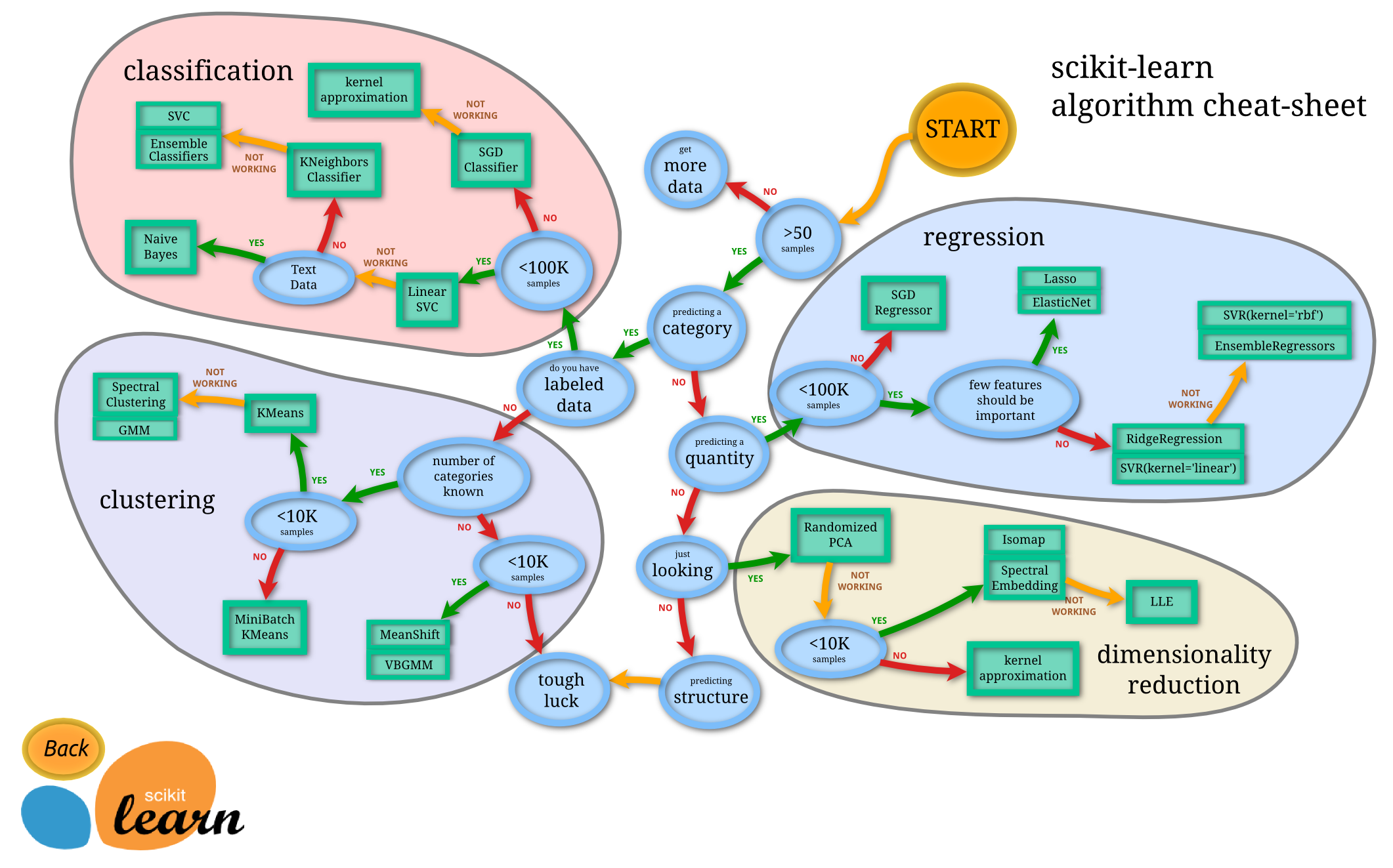

- Select algorithm

- Train model

- Tune parameters

- Select final model

- Validate final model

- Deploy and maintain

source: http://scikit-learn.org/stable/tutorial/machine_learning_map/index.html
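The middle of the process above (train, tune parameters, select the final model, validate it) can be sketched on toy data. Everything here is an assumption made for illustration: the one-dimensional feature values, the `cheap`/`expensive` labels, the candidate values of `k`, and the helper names. The point is only the shape of the loop: tune on a validation set, then measure the chosen model once on a separate test set.

```php
<?php
declare(strict_types=1);

/** Majority vote among the $k training points closest to $x (1-D k-NN). */
function predictLabel(array $trainX, array $trainY, float $x, int $k): string
{
    $distances = [];
    foreach ($trainX as $i => $value) {
        $distances[$i] = abs($value - $x);
    }
    asort($distances); // nearest first, indices preserved

    $votes = [];
    foreach (array_slice(array_keys($distances), 0, $k) as $i) {
        $votes[$trainY[$i]] = ($votes[$trainY[$i]] ?? 0) + 1;
    }
    arsort($votes);

    return (string) array_key_first($votes);
}

/** Share of evaluation samples whose predicted label equals the true label. */
function accuracy(array $trainX, array $trainY, array $evalX, array $evalY, int $k): float
{
    $hits = 0;
    foreach ($evalX as $i => $x) {
        if (predictLabel($trainX, $trainY, $x, $k) === $evalY[$i]) {
            $hits++;
        }
    }
    return $hits / count($evalX);
}

// Made-up data; the 'expensive' point at 4.0 is deliberate noise.
$trainX = [1.0, 2.0, 3.0, 4.0, 5.0, 8.0, 9.0, 10.0, 11.0];
$trainY = ['cheap', 'cheap', 'cheap', 'expensive', 'cheap',
           'expensive', 'expensive', 'expensive', 'expensive'];
$validX = [4.2, 2.5, 9.5, 8.4];
$validY = ['cheap', 'cheap', 'expensive', 'expensive'];
$testX  = [1.5, 10.5, 3.4, 8.8];
$testY  = ['cheap', 'expensive', 'cheap', 'expensive'];

// Tune parameters: keep the first k with the best validation accuracy.
$bestK = 0;
$bestScore = -1.0;
foreach ([1, 3, 5] as $k) {
    $score = accuracy($trainX, $trainY, $validX, $validY, $k);
    if ($score > $bestScore) {
        $bestK = $k;
        $bestScore = $score;
    }
}

// Validate the final model once, on data not used for tuning.
$testScore = accuracy($trainX, $trainY, $testX, $testY, $bestK);
printf("best k=%d, validation=%.2f, test=%.2f\n", $bestK, $bestScore, $testScore);
```

With `k = 1` the noisy training point misleads the prediction for 4.2, so a larger `k` wins on the validation set; keeping the test set out of the tuning loop is what makes the final score an honest estimate.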

Summary

- the question matters most; data is secondary (but still important)

- many algorithms and techniques

- applications can be very simple but also extremely sophisticated

- it is sometimes difficult to find the correct answer

- basic math skills are important

Summary

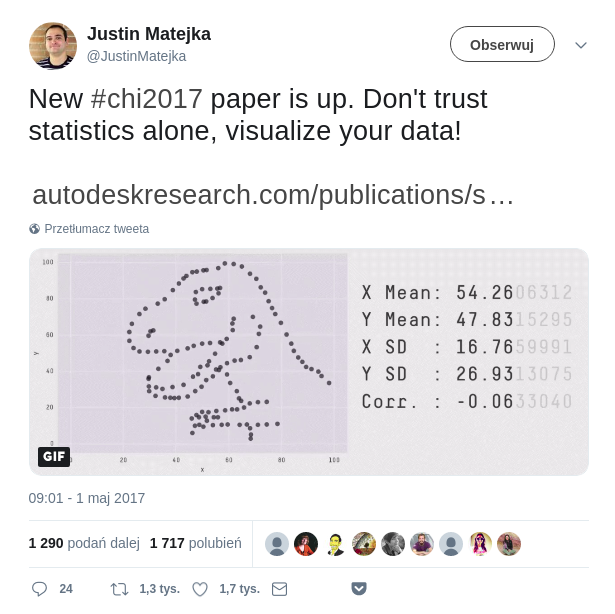

https://twitter.com/JustinMatejka/status/859075295059562498

PHP 7.0

206,128 instances classified in 30 seconds

(6,871 per second)

https://github.com/syntheticminds/php7-ml-comparison

Python 2.7

106,879 instances classified in 30 seconds

(3,562 per second)

NodeJS v5.11.1

245,227 instances classified in 30 seconds

(8,174 per second)

Java 8

16,809,048 instances classified in 30 seconds

(560,301 per second)

PHP 7.1

302,931 instances classified in 30 seconds

(10,098 per second)

PHP 7.2

365,568 instances classified in 30 seconds

(12,186 per second)

PHP 7.3

408,667 instances classified in 30 seconds

(13,622 per second)

Event Storming

Where to begin?

kaggle.com

What to read?

Q&A

Thanks for listening

@ ArkadiuszKondas

https://arkadiuszkondas.com

https://php-ml.org/

https://slides.com/arkadiuszkondas/machine-learning-in-php-2019/

https://joind.in/talk/98e38

Machine Learning - how to start to teach the machine - PHP Russia 2019

By Arkadiusz Kondas


The main goal of Machine Learning is to create intelligent systems that can improve and acquire new knowledge from input data. In practice, this means applying one of hundreds of available algorithms. This lecture is an introduction to ML from the very basics. We will learn the basic vocabulary and the types of problems that ML can solve. I will also show how to build a complete pipeline that takes us through all stages of ML: data preprocessing, selection of algorithms, and evaluation of their effectiveness.
