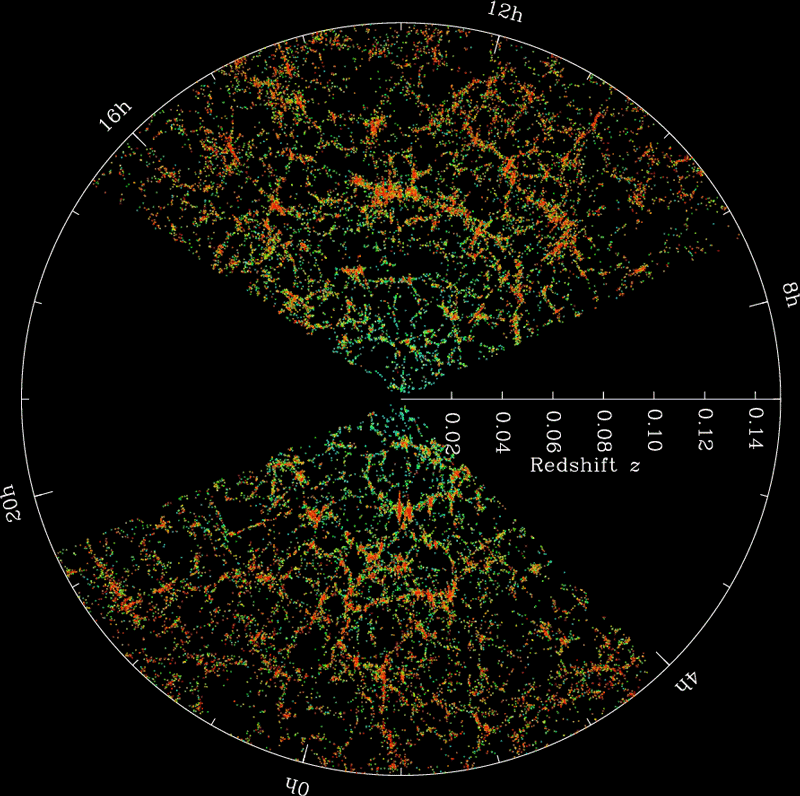

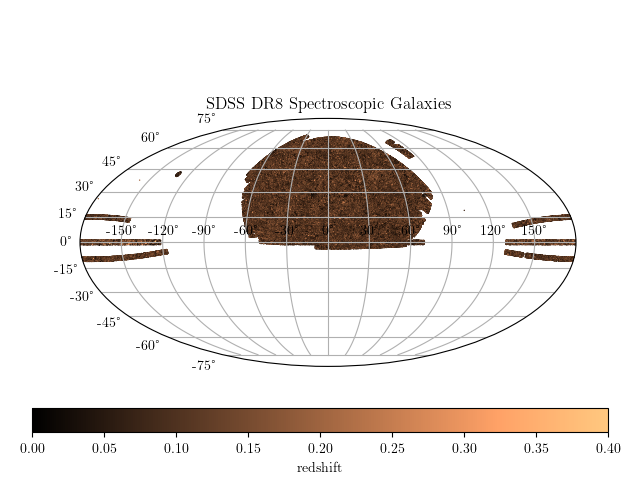

Redshift surveys in a nutshell

Learning summary statistics with ML

Carolina Cuesta-Lazaro

19th January 2022 - Waterloo Astronomy Seminar

Collaborators: Cheng-Zong Ruan, Yosuke Kobayashi, Alexander Eggemeier, Pauline Zarrouk, Sownak Bose, Takahiro Nishimichi, Baojiu Li, Carlton Baugh

The golden days of Cosmology:

A five parameter Universe

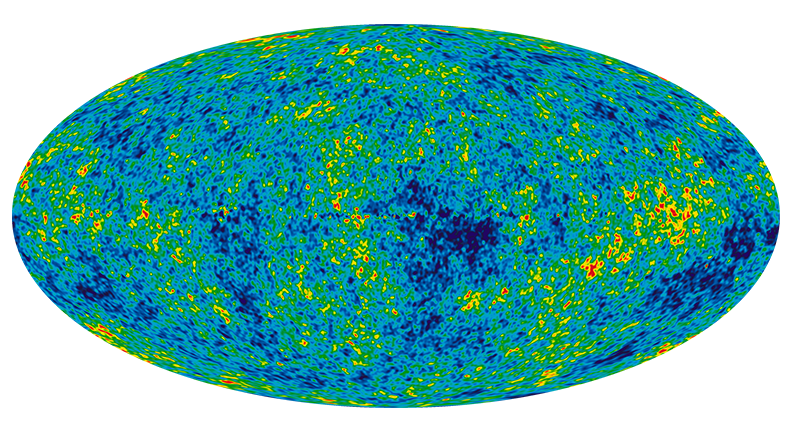

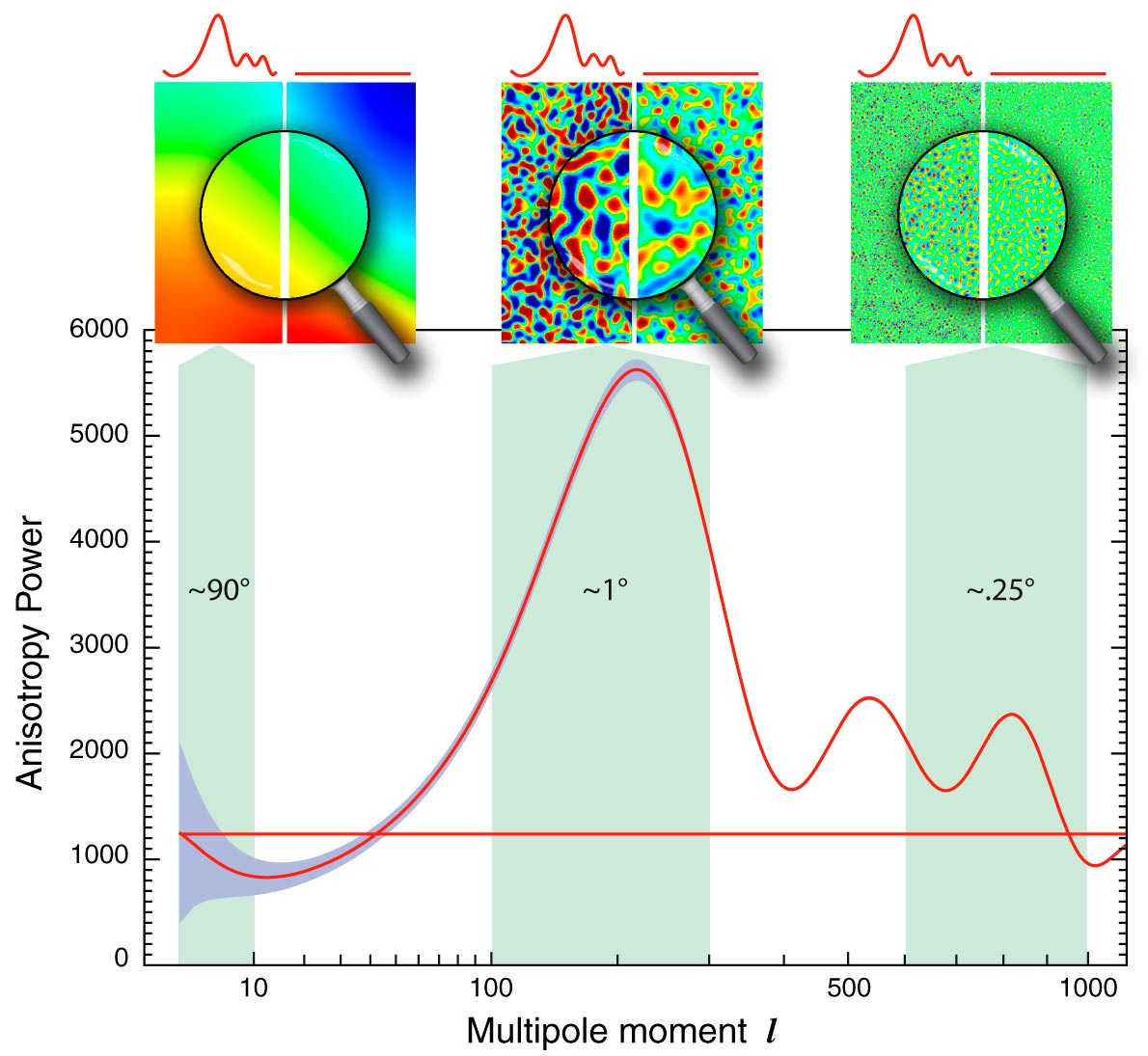

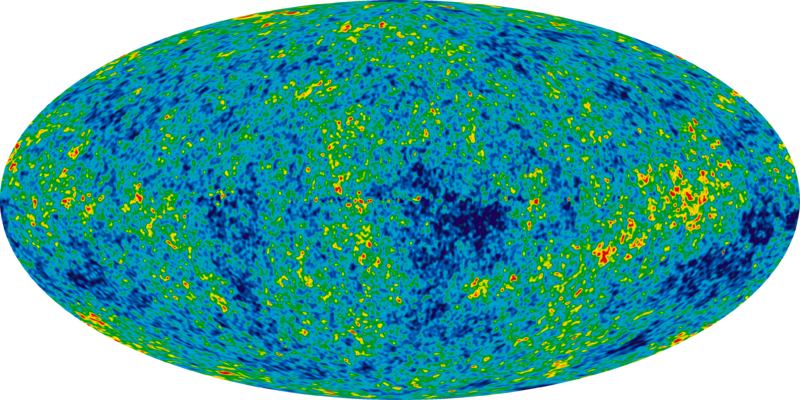

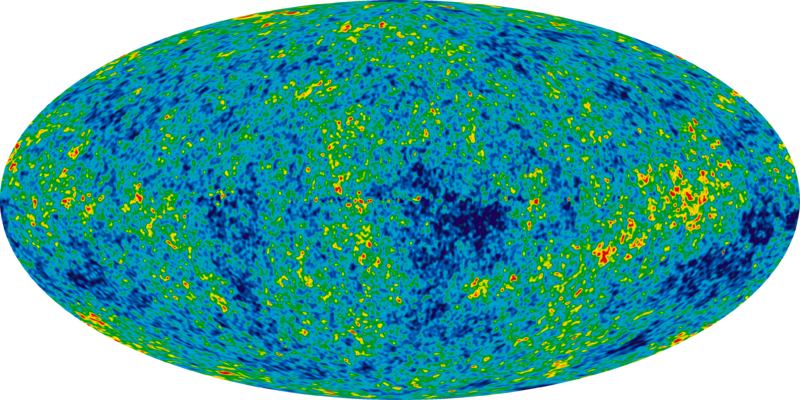

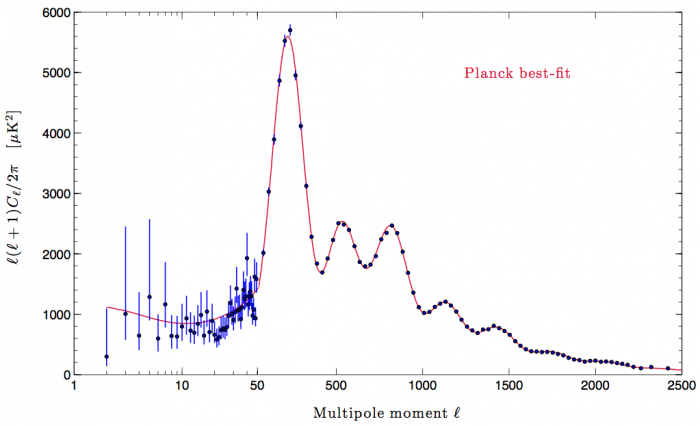

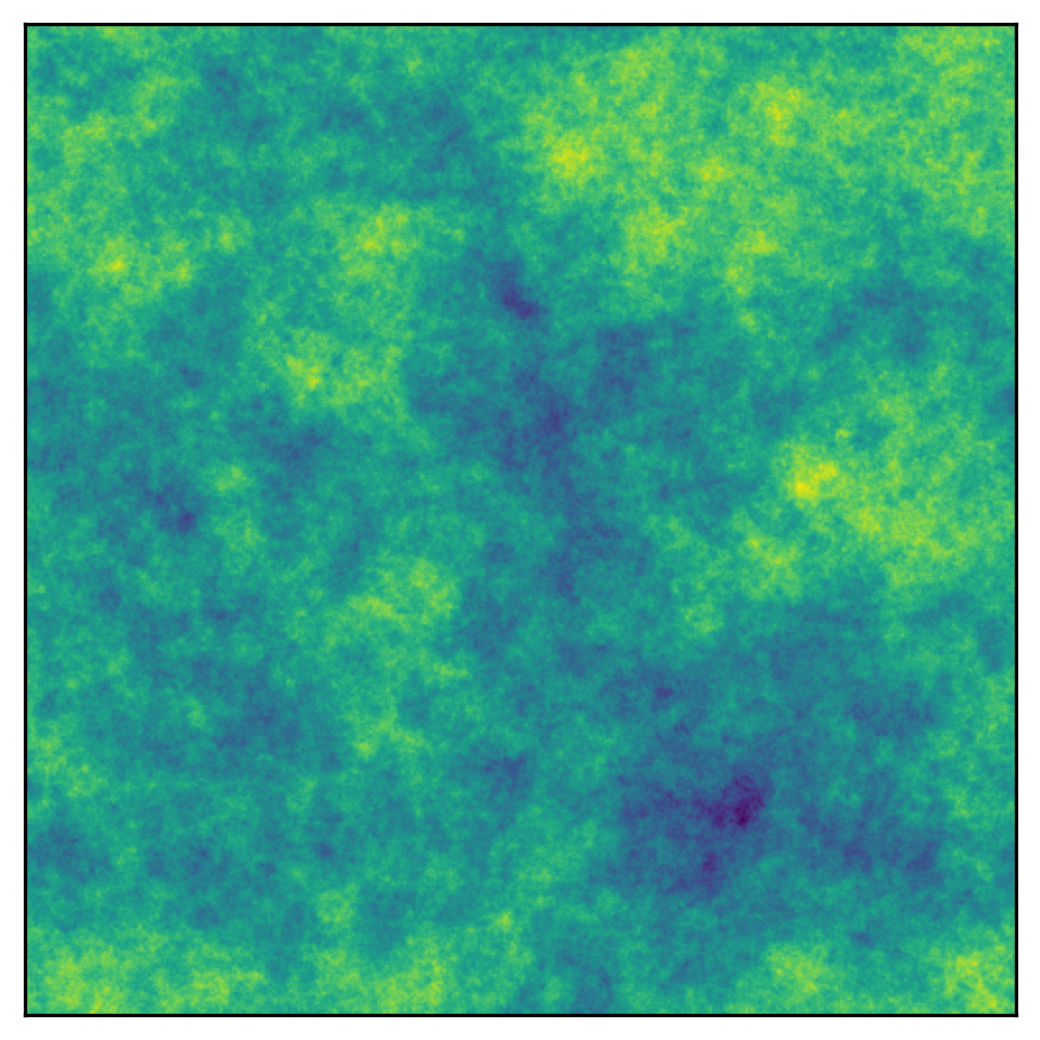

Initial Conditions

Dynamics

Dark energy

Dark matter

Ordinary matter

Amplitude initial density field

Scale dependence

Linear

Credit: NASA / WMAP SCIENCE TEAM

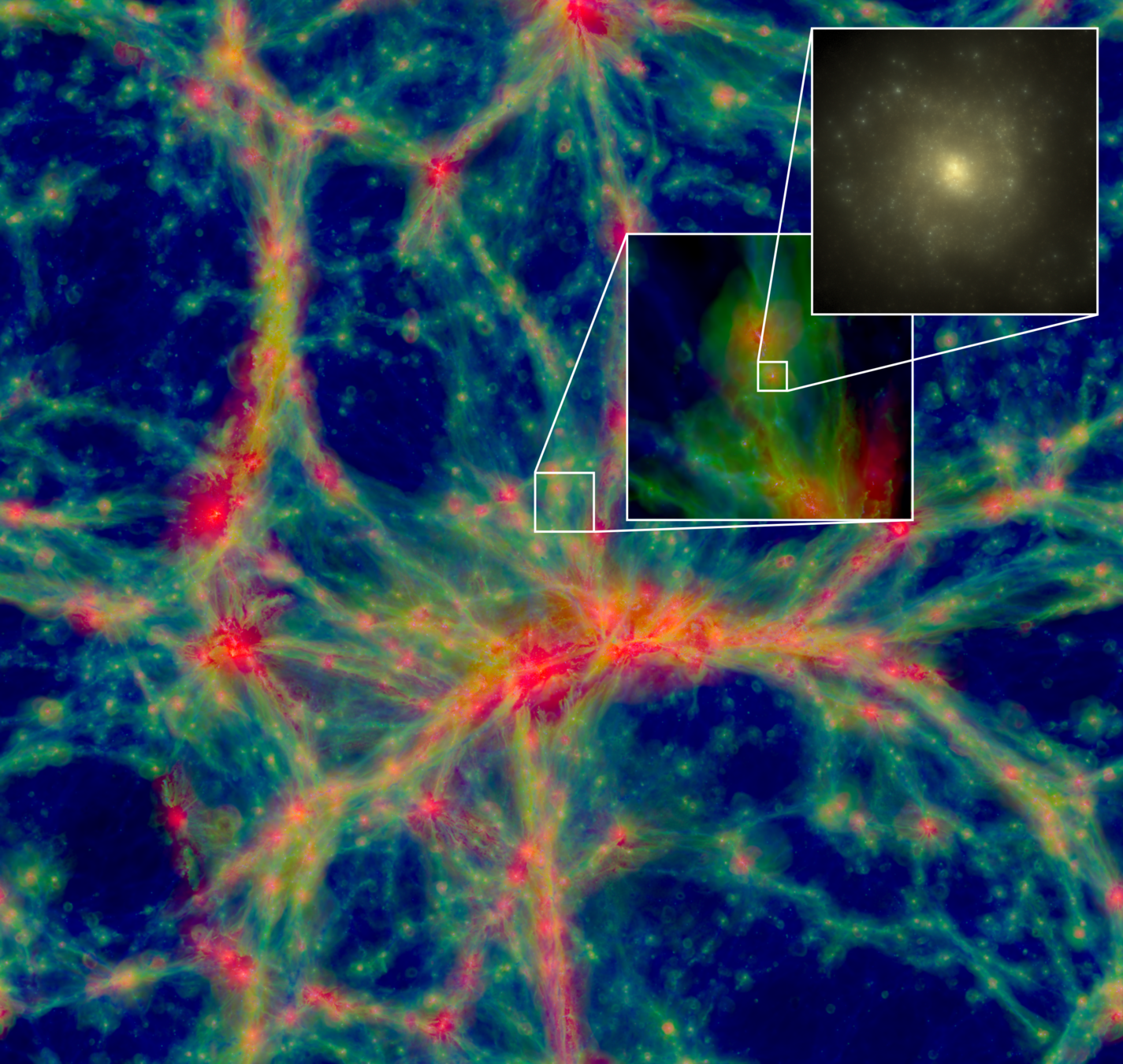

GALAXY CLUSTERING

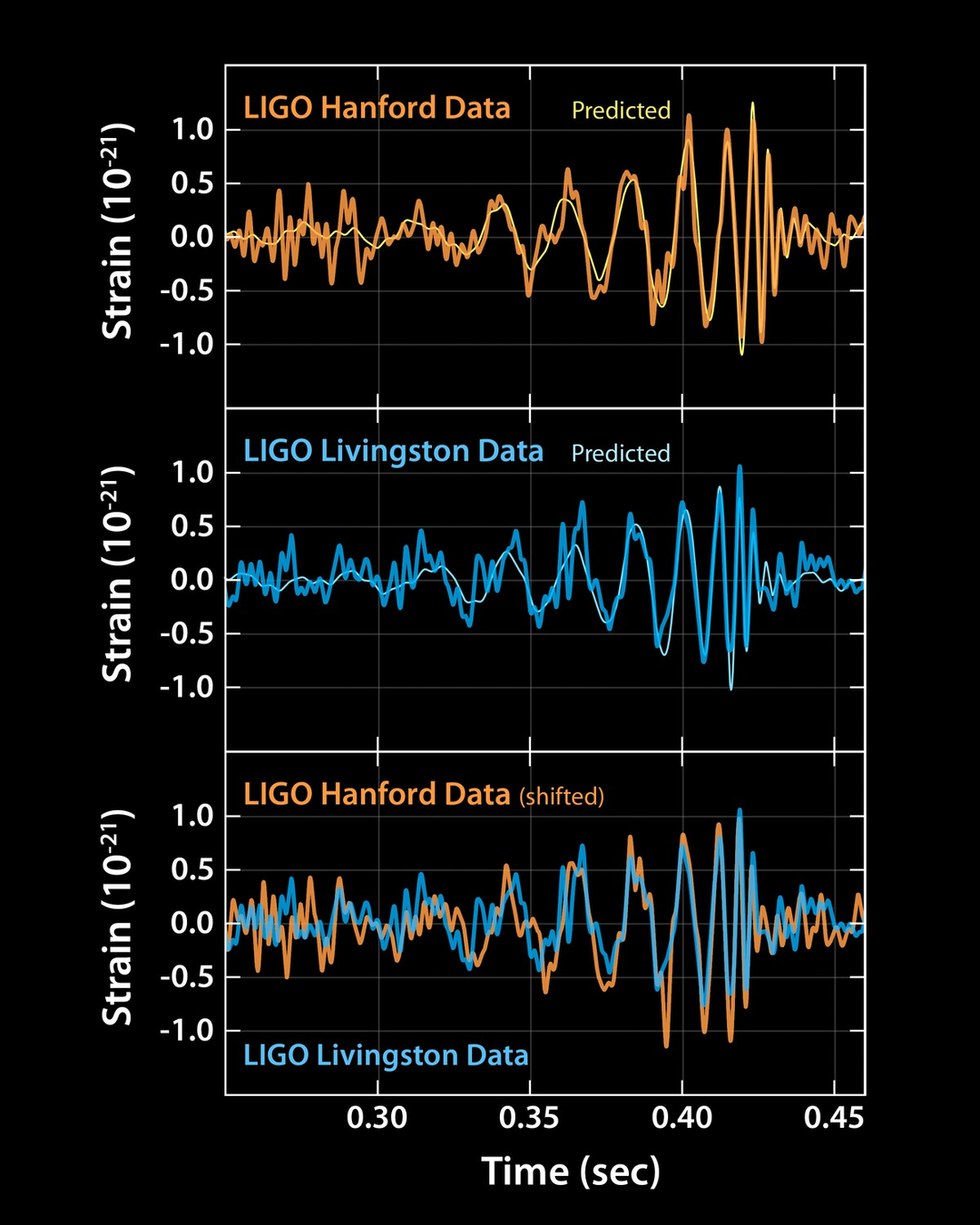

GRAVITATIONAL WAVES

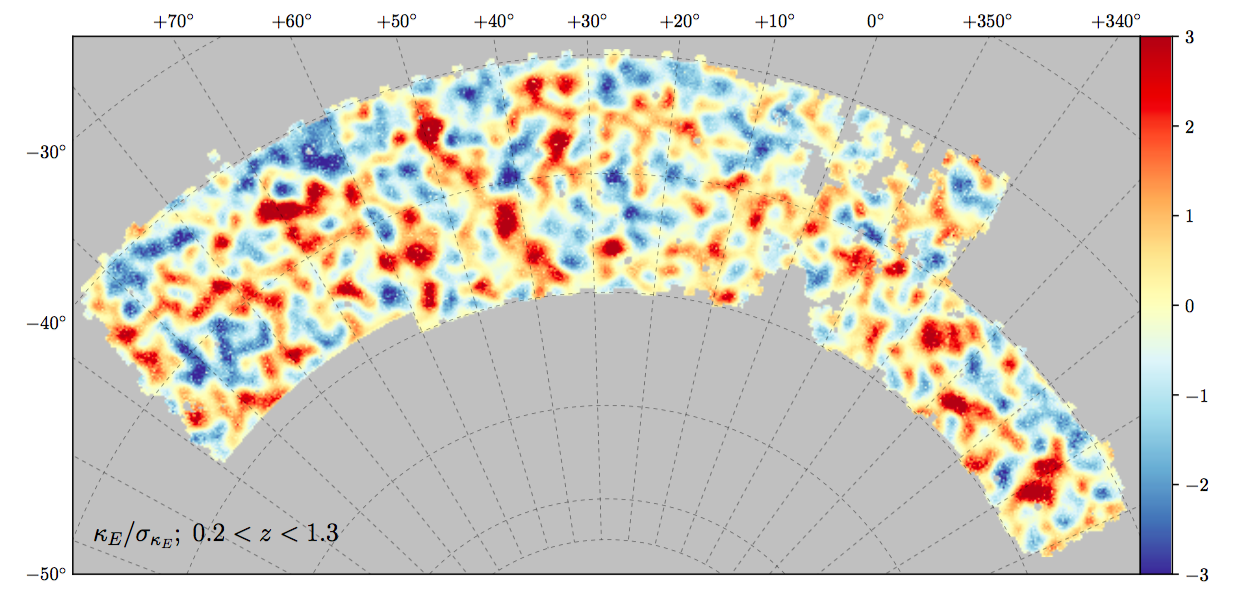

GRAVITATIONAL LENSING

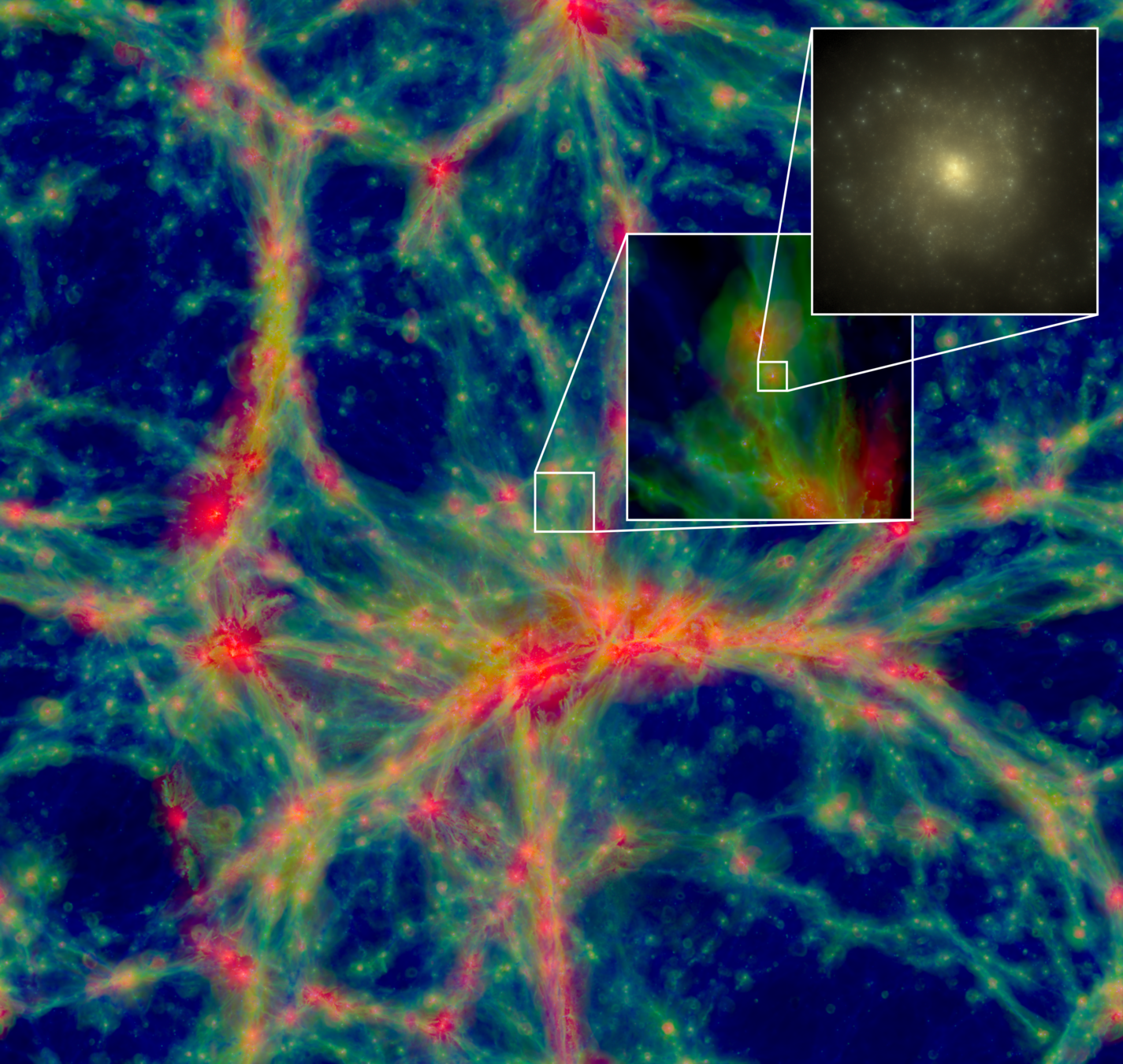

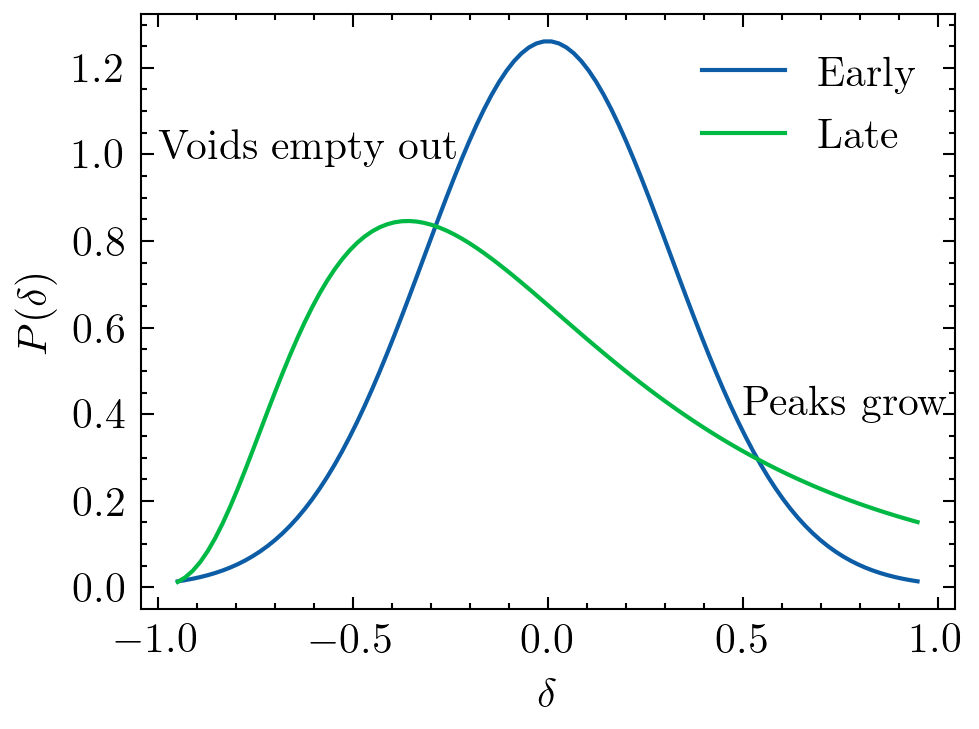

Early Universe

~linear

Gravity

Late Universe

Non-linear

Credit: S. Codis+16

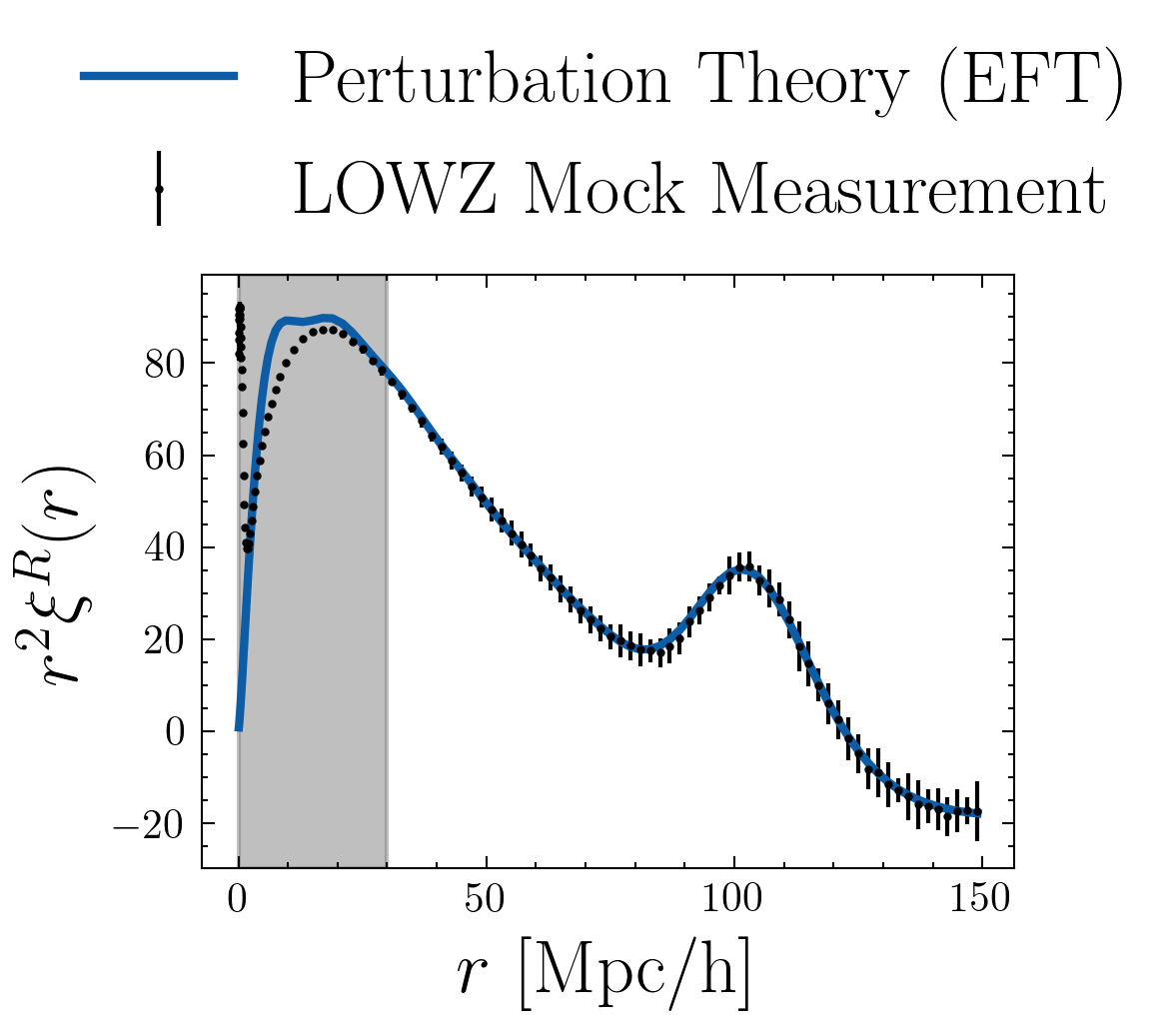

Non-linearity = perturbation theory (PT) predictions inaccurate

Credit: S. Codis+16

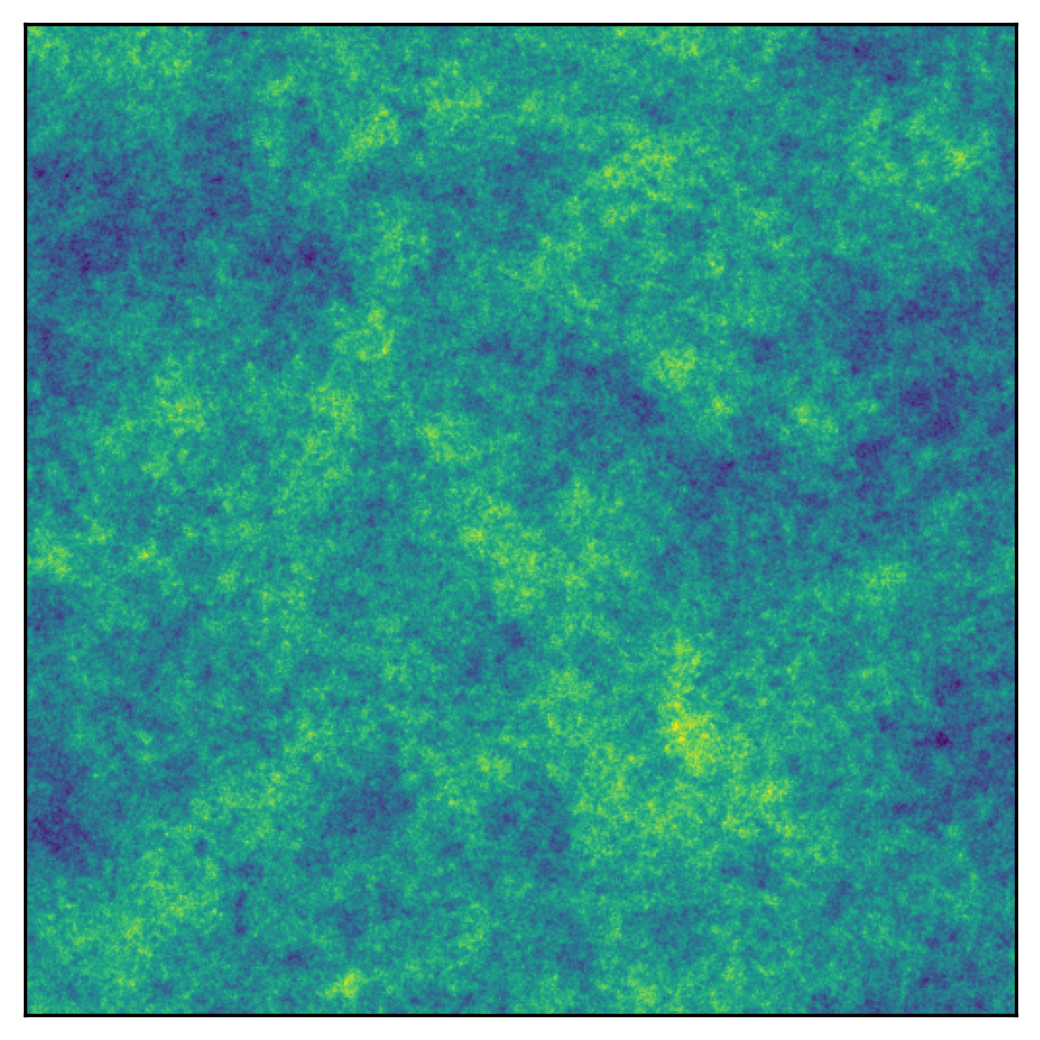

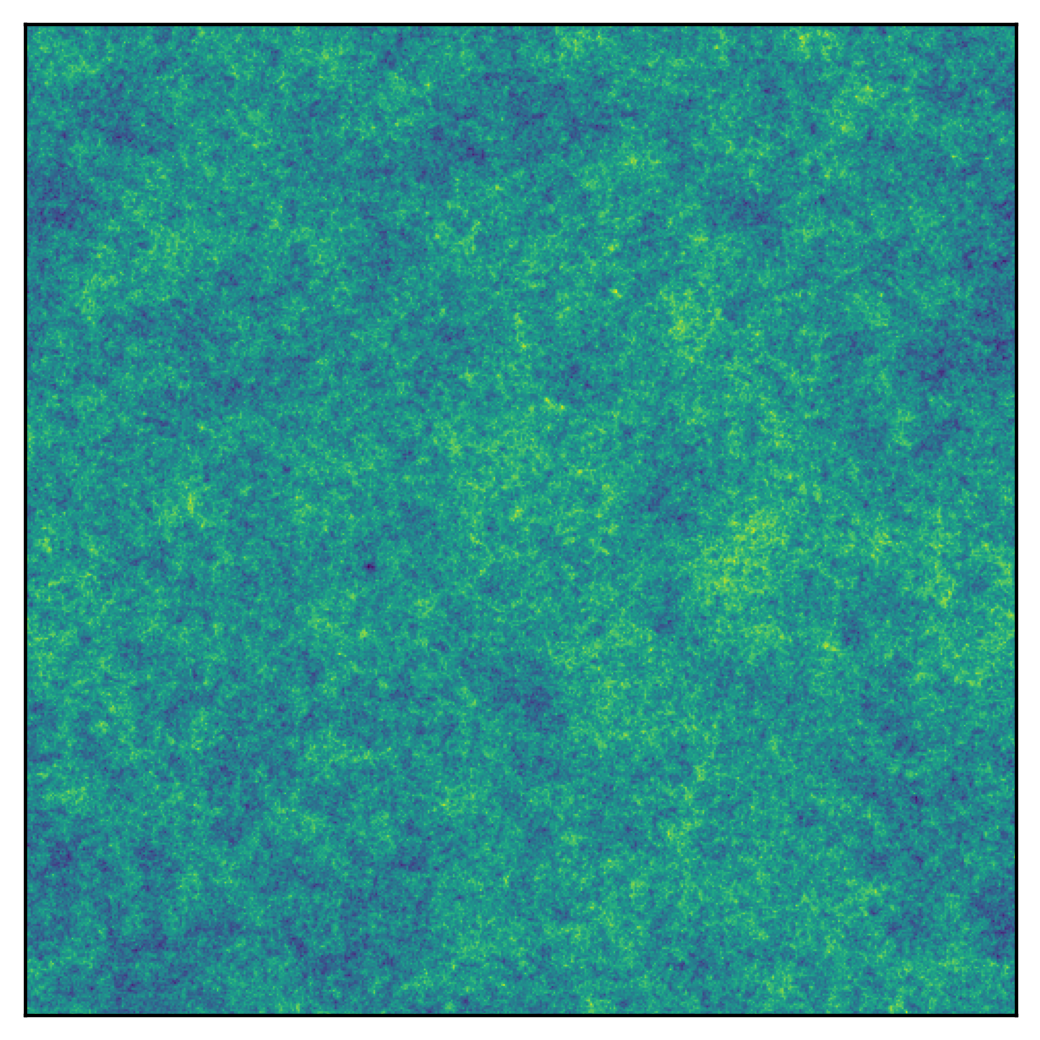

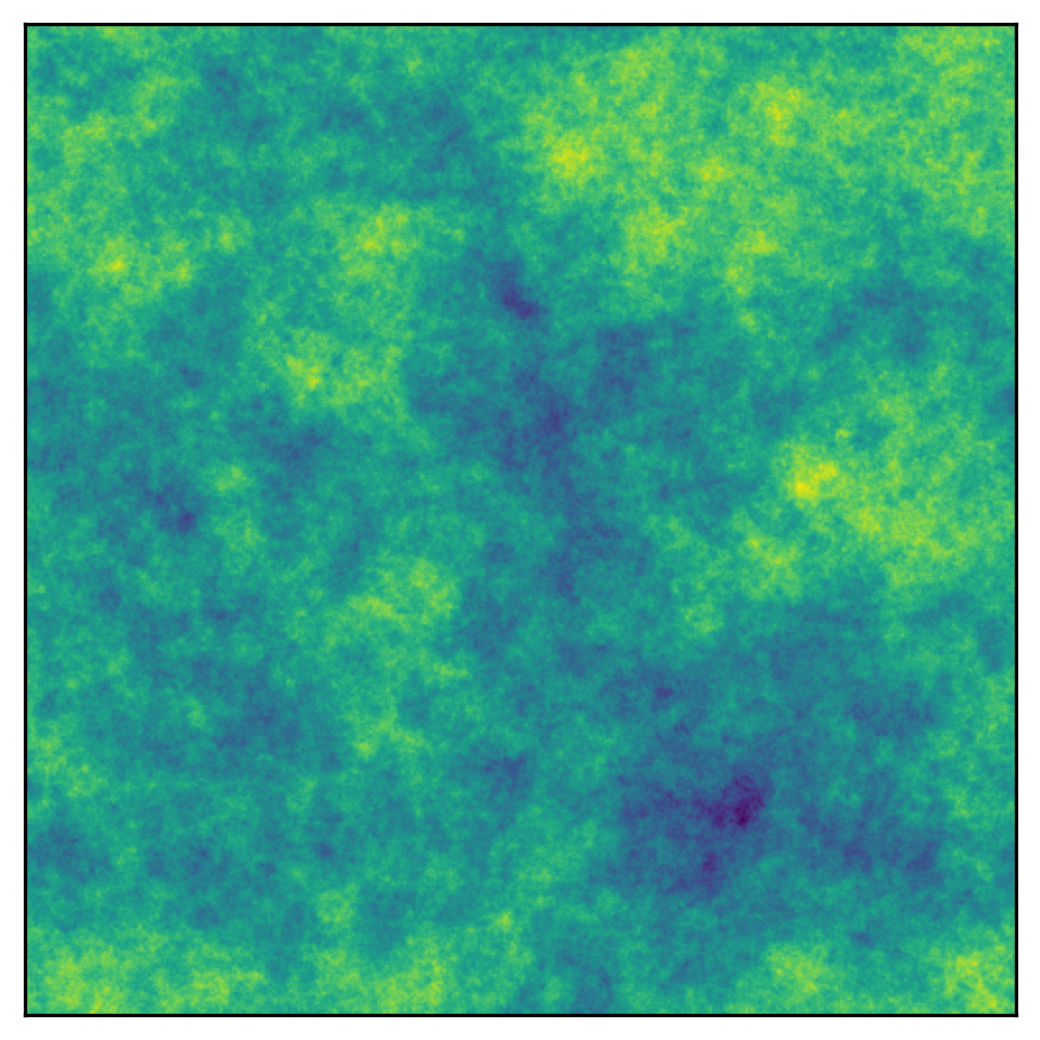

Credit: S. Codis+16

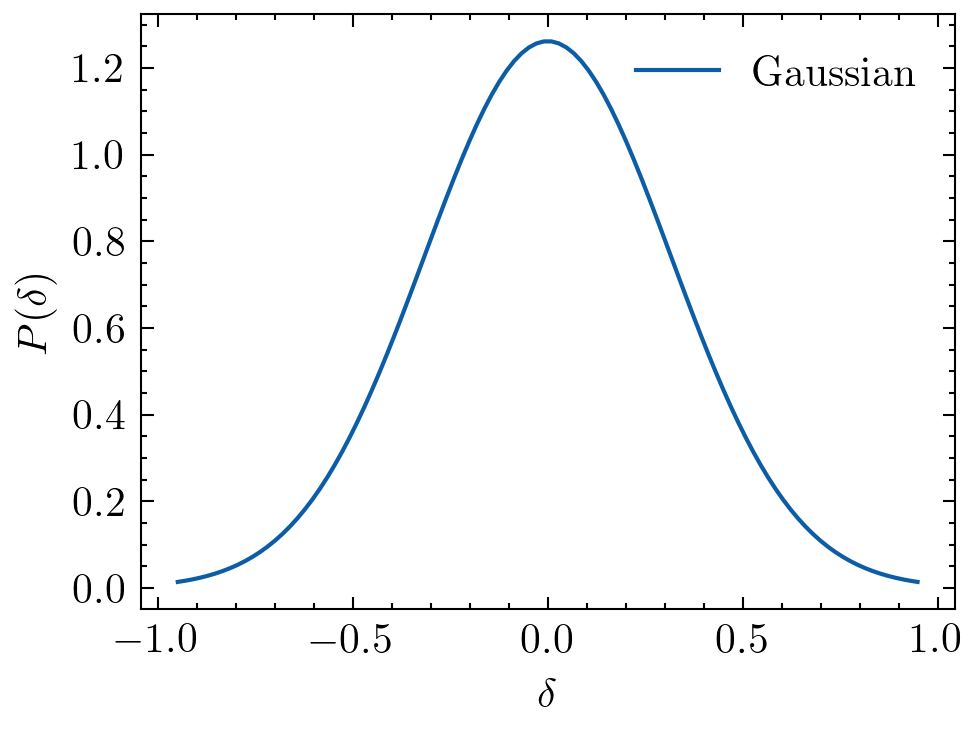

Non-Gaussianity

Second moment not optimal

Machine Learning as a solution to

- Non-linearities: produce accurate predictions based on N-body simulations

- Non-Gaussianity: extract cosmological information at the field level

Space-time geometry

Energy content

Adding new degrees of freedom

- To the energy content: (dynamical) DARK ENERGY

- To the way space-time geometry reacts to the energy content: MODIFIED GRAVITY (FIFTH FORCES)

?

Fifth forces modify structure growth

GROWTH

- GRAVITY

- FIFTH FORCE

+ EXPANSION

Credit: Cartoon depicting Willem de Sitter as Lambda from Algemeen Handelsblad (1930).

Cosmology =

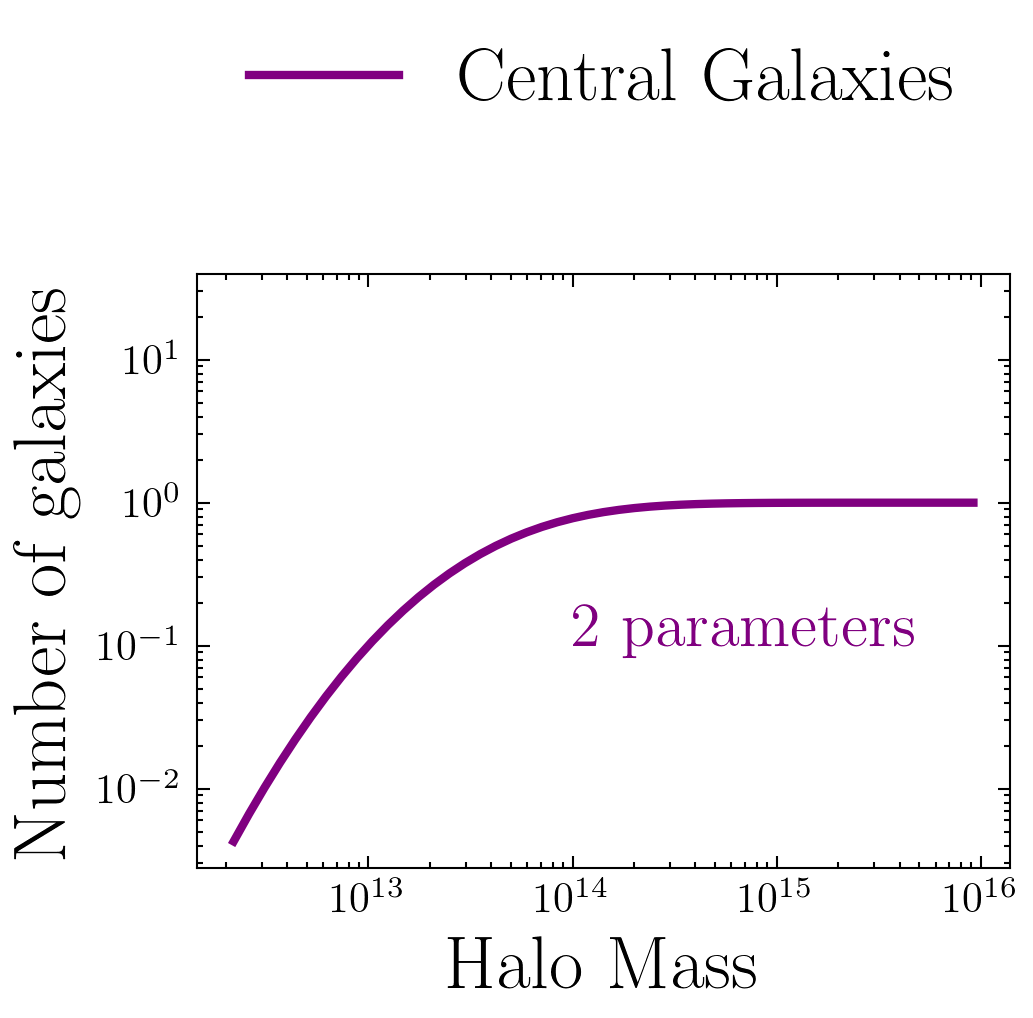

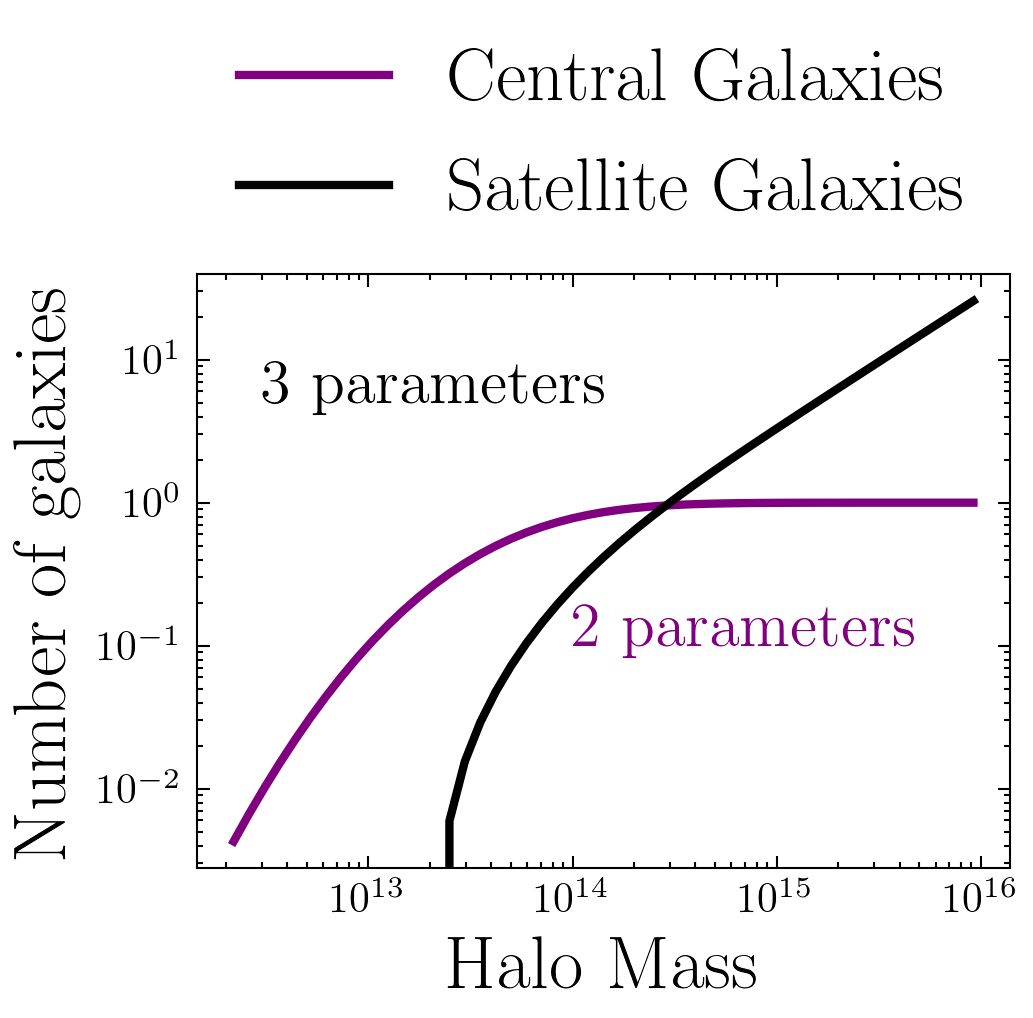

Main Assumptions

- Galaxies don't impact dark matter clustering

- Number of galaxies depends on halo mass only

- We don't know the Initial Conditions

- Data is very high dimensional

- Large number of parameters to constrain

- N-body simulations are extremely slow to run! (Sampling parameter space requires > O(10^6) calls)
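To make the cost argument concrete, here is a minimal sketch of a Metropolis sampler that calls a model once per proposed step. Everything here is illustrative: `toy_emulator` is a hypothetical stand-in (a linear function, not a trained emulator), and the data, noise level and step size are made-up numbers. The point is only that the number of model calls grows with the chain length, which is why each call must be cheap.

```python
import numpy as np

def toy_emulator(theta, k):
    """Hypothetical stand-in for a fast emulator: maps one parameter
    to a summary statistic evaluated at scales k (here simply linear)."""
    return theta * k

def log_likelihood(theta, k, data, sigma):
    """Gaussian log-likelihood with independent errors of size sigma."""
    residual = data - toy_emulator(theta, k)
    return -0.5 * np.sum((residual / sigma) ** 2)

def metropolis(log_prob, theta0, step, n_steps, seed=0):
    """Random-walk Metropolis in one dimension; also counts model calls."""
    rng = np.random.default_rng(seed)
    chain = np.empty(n_steps)
    theta, logp = theta0, log_prob(theta0)
    n_calls = 1
    for i in range(n_steps):
        proposal = theta + step * rng.normal()
        logp_new = log_prob(proposal)
        n_calls += 1
        # Accept with probability min(1, exp(logp_new - logp)).
        if np.log(rng.uniform()) < logp_new - logp:
            theta, logp = proposal, logp_new
        chain[i] = theta
    return chain, n_calls

# Noise-free mock data generated at theta = 2 (an assumption for the demo).
k = np.linspace(0.1, 1.0, 10)
data = toy_emulator(2.0, k)
chain, n_calls = metropolis(
    lambda t: log_likelihood(t, k, data, sigma=0.1),
    theta0=1.0, step=0.05, n_steps=5000,
)
posterior_mean = chain[1000:].mean()  # discard the first 1000 steps as burn-in
```

Each step costs one model evaluation, so a chain of this length with an N-body simulation in place of `toy_emulator` would need thousands of simulations for a single parameter.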

Cosmology =

Galaxy =

?

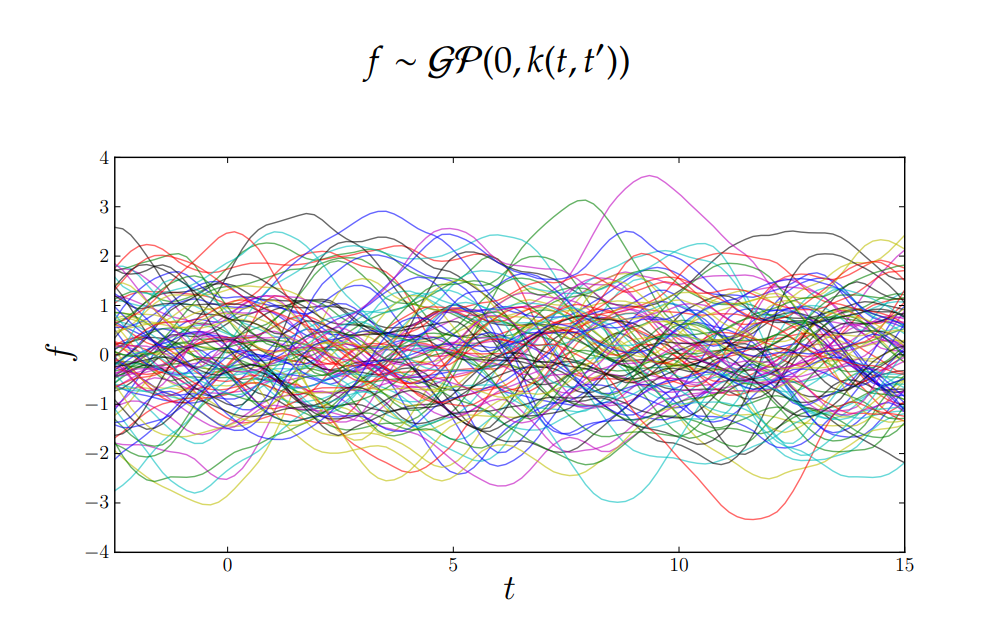

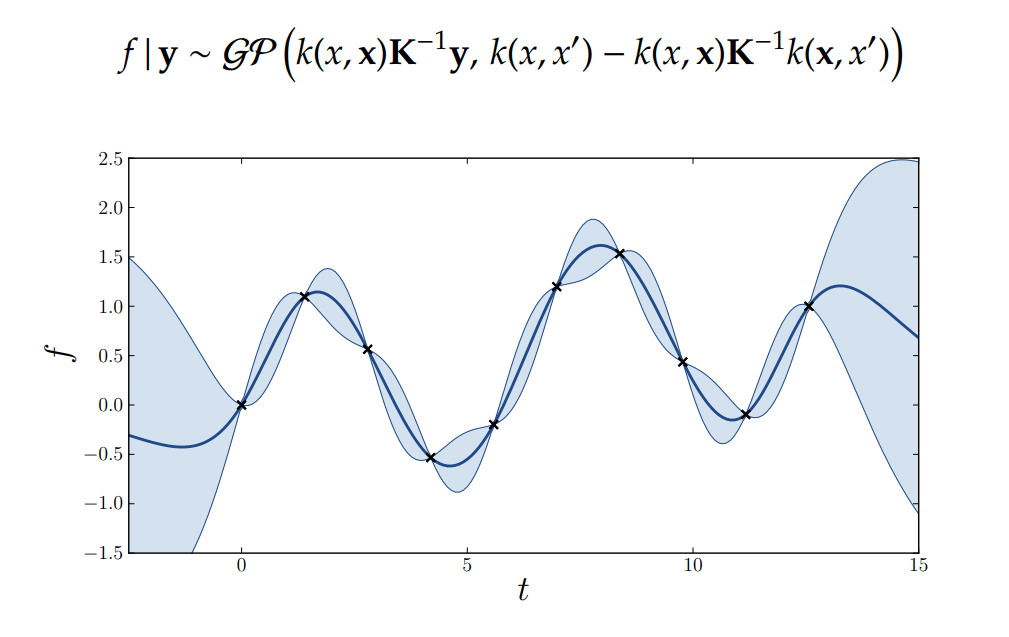

Summarise the data

N-body simulations

How to emulate?

Credit: James Hensman

Optimize the marginal likelihood: Analytical solution!

Pros

- Easy to get going

- Small number of free parameters

Cons

- Scales badly with training set size n: O(n^3)

- Scales badly with number of input features
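The trade-offs above can be seen in a minimal Gaussian process regression sketch. This assumes a squared-exponential kernel with fixed, hand-picked hyperparameters and a one-dimensional input; the function names are illustrative and not taken from any emulator code. The log marginal likelihood is available in closed form, and the Cholesky factorisation is the O(n^3) step.

```python
import numpy as np

def rbf_kernel(xa, xb, amplitude=1.0, length_scale=1.0):
    """Squared-exponential kernel between two sets of 1D inputs."""
    sq_dist = (xa[:, None] - xb[None, :]) ** 2
    return amplitude**2 * np.exp(-0.5 * sq_dist / length_scale**2)

def gp_posterior(x_train, y_train, x_test, noise=1e-2,
                 amplitude=1.0, length_scale=1.0):
    """Posterior mean and variance at x_test, plus the log marginal likelihood."""
    n = len(x_train)
    k_train = rbf_kernel(x_train, x_train, amplitude, length_scale)
    k_train = k_train + noise**2 * np.eye(n)
    chol = np.linalg.cholesky(k_train)  # the O(n^3) step
    alpha = np.linalg.solve(chol.T, np.linalg.solve(chol, y_train))
    k_cross = rbf_kernel(x_test, x_train, amplitude, length_scale)
    mean = k_cross @ alpha
    v = np.linalg.solve(chol, k_cross.T)
    var = amplitude**2 - np.sum(v**2, axis=0)
    # Closed-form log marginal likelihood of the training data.
    log_ml = (-0.5 * y_train @ alpha
              - np.sum(np.log(np.diag(chol)))
              - 0.5 * n * np.log(2.0 * np.pi))
    return mean, var, log_ml

# Toy usage: emulate sin(x) from 20 training points.
x_train = np.linspace(0.0, 2.0 * np.pi, 20)
y_train = np.sin(x_train)
x_test = np.linspace(0.1, 6.0, 50)
mean, var, log_ml = gp_posterior(x_train, y_train, x_test)
```

In practice the kernel hyperparameters would be chosen by maximising `log_ml`; they are fixed here to keep the sketch short.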

Credit: https://cs231n.github.io/convolutional-networks/

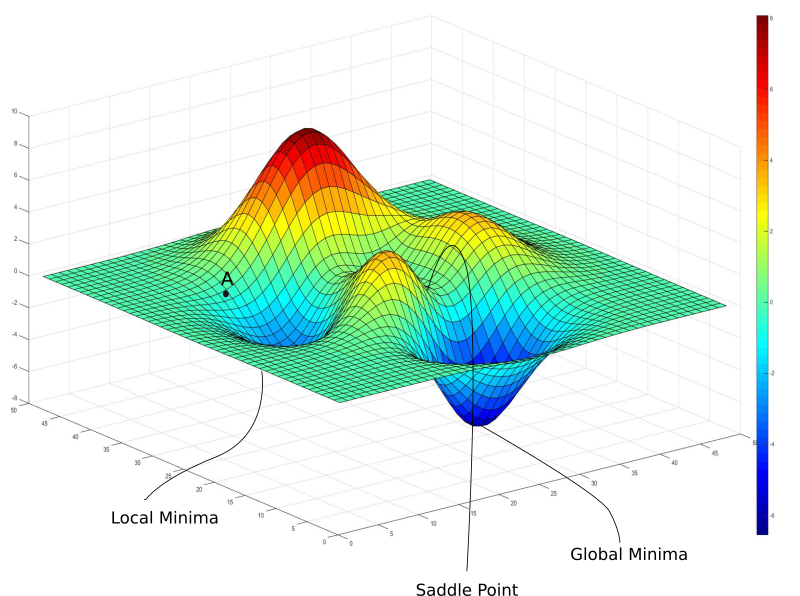

[Figure: loss value versus weights; panels labelled Network A and Network B]

Pros

- Fast, does not scale with n

- Can model large input features

Cons

- Prone to overfitting, but there are ways to avoid it

- "Harder" to train (requires more exploration)
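For comparison with the Gaussian process case, here is a minimal sketch of a neural network emulator: a single hidden layer trained by full-batch gradient descent in plain NumPy. The architecture, learning rate and toy target are all assumptions chosen for illustration; a real emulator would typically use a deep learning library, a validation set and regularisation to control overfitting.

```python
import numpy as np

def train_mlp(x, y, hidden=16, lr=0.05, steps=2000, seed=0):
    """Train a one-hidden-layer tanh network on (x, y) with gradient descent."""
    rng = np.random.default_rng(seed)
    n, n_in = x.shape
    n_out = y.shape[1]
    w1 = rng.normal(0.0, 1.0, size=(n_in, hidden))
    b1 = np.zeros(hidden)
    w2 = rng.normal(0.0, 1.0 / np.sqrt(hidden), size=(hidden, n_out))
    b2 = np.zeros(n_out)
    losses = []
    for _ in range(steps):
        # Forward pass.
        h = np.tanh(x @ w1 + b1)
        err = h @ w2 + b2 - y
        losses.append(0.5 * np.mean(np.sum(err**2, axis=1)))
        # Backward pass: gradients of the mean squared error.
        g_pred = err / n
        g_w2 = h.T @ g_pred
        g_b2 = g_pred.sum(axis=0)
        g_z = (g_pred @ w2.T) * (1.0 - h**2)
        g_w1 = x.T @ g_z
        g_b1 = g_z.sum(axis=0)
        # Gradient descent update.
        w1 -= lr * g_w1
        b1 -= lr * g_b1
        w2 -= lr * g_w2
        b2 -= lr * g_b2
    return (w1, b1, w2, b2), np.array(losses)

def mlp_predict(params, x):
    """Evaluate the trained network; cost is independent of training set size."""
    w1, b1, w2, b2 = params
    return np.tanh(x @ w1 + b1) @ w2 + b2

# Toy usage: learn y = sin(3x) on [-1, 1].
x = np.linspace(-1.0, 1.0, 64)[:, None]
y = np.sin(3.0 * x)
params, losses = train_mlp(x, y)
prediction = mlp_predict(params, x)
```

Once trained, a prediction is a couple of matrix products, whatever the size of the training set.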

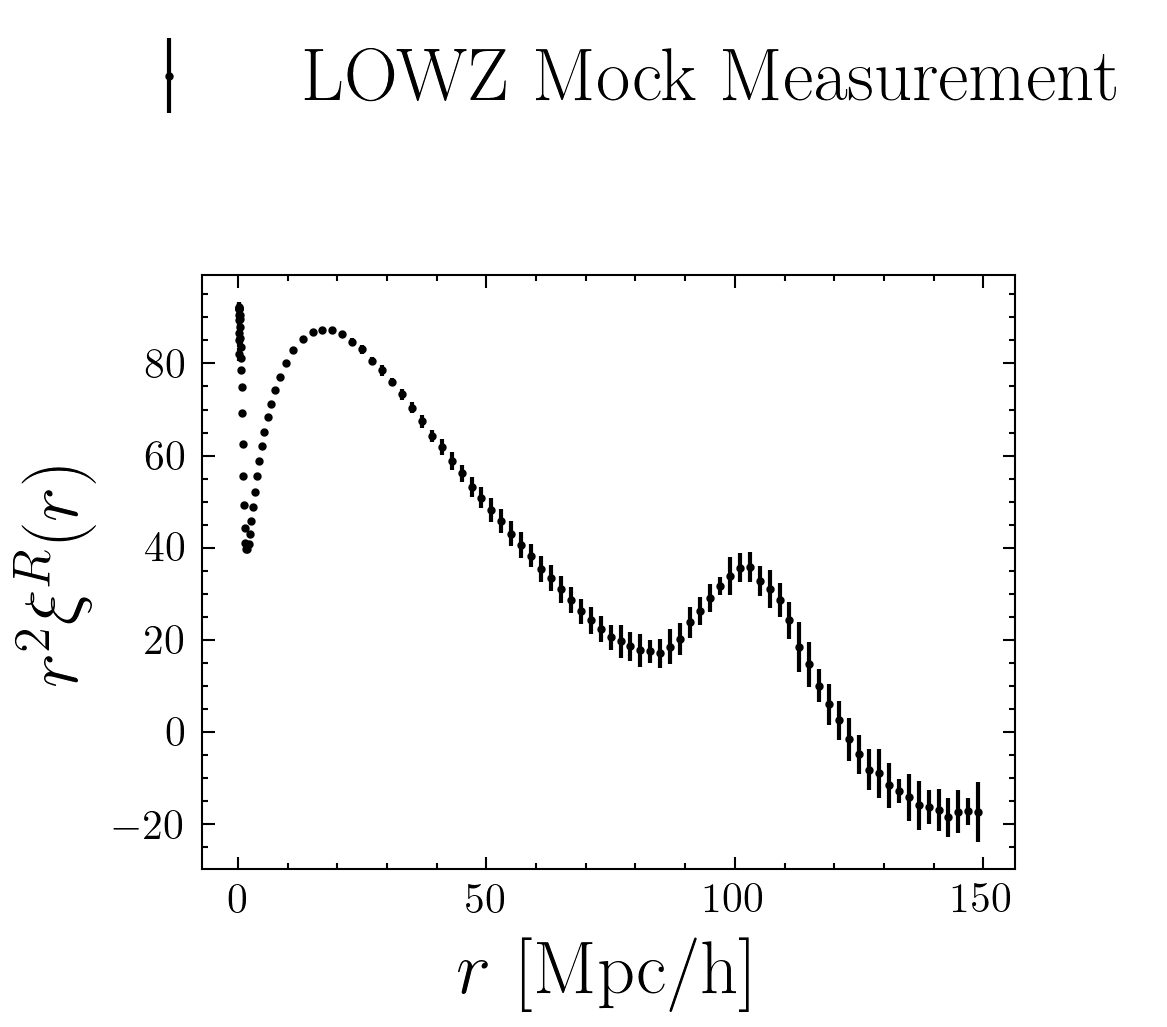

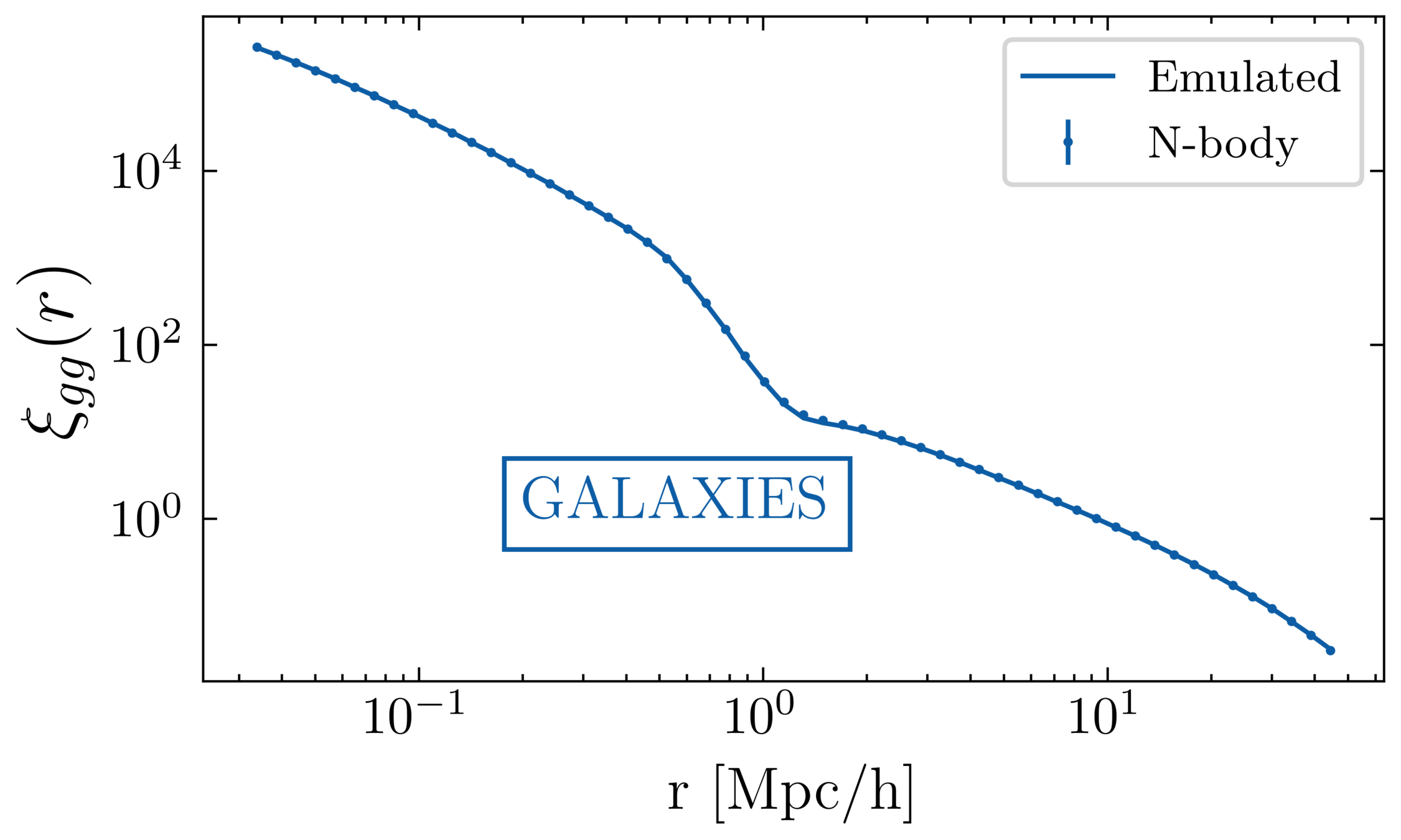

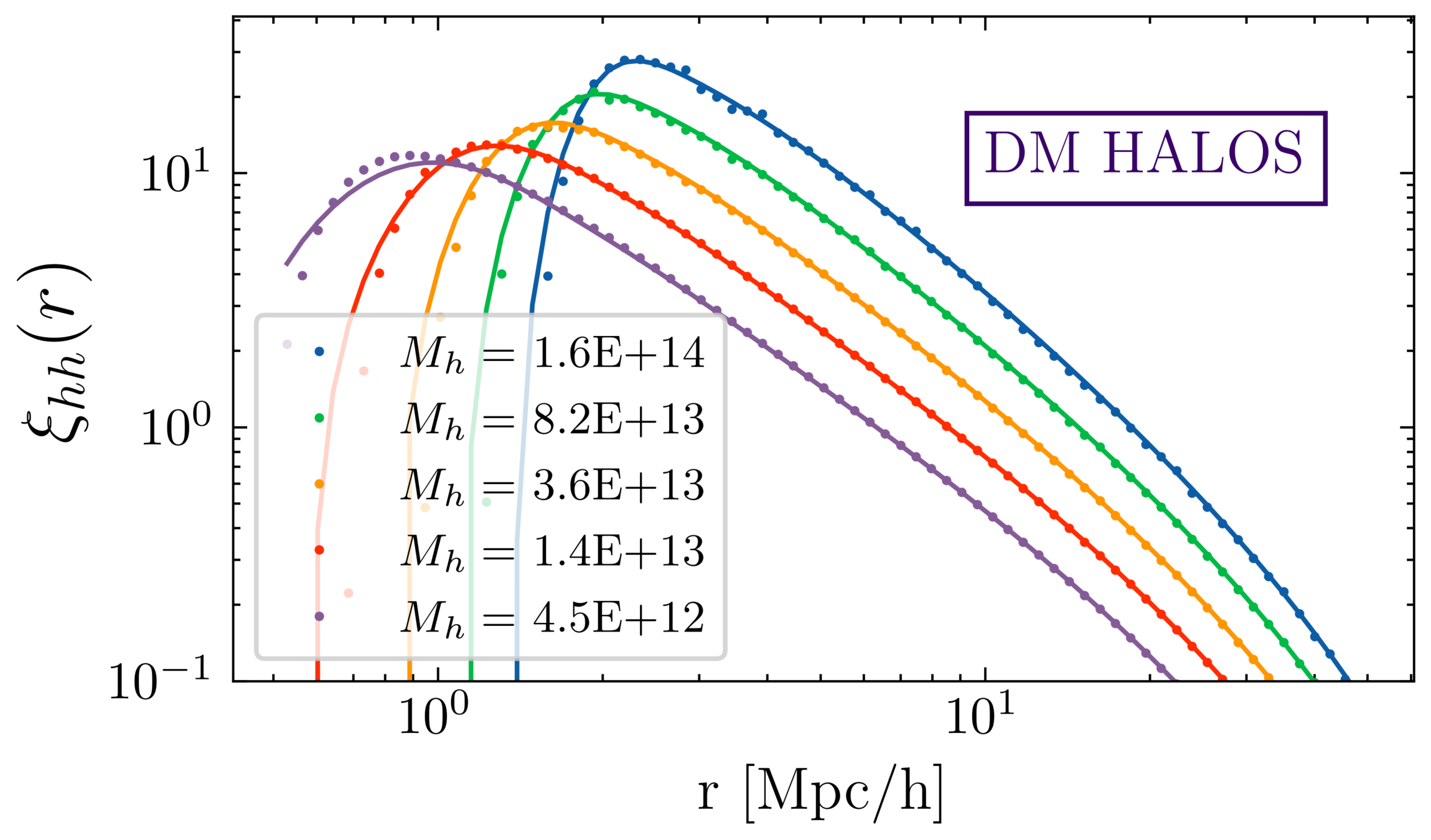

What to emulate?

- Flexibility: Vary galaxy tracers, and their cross-correlations. Marginalising over g requires flexible g!

- 1% accuracy / 1-sigma accuracy: emulator only as good as data used for training

- Simplify input/output relation through physical models

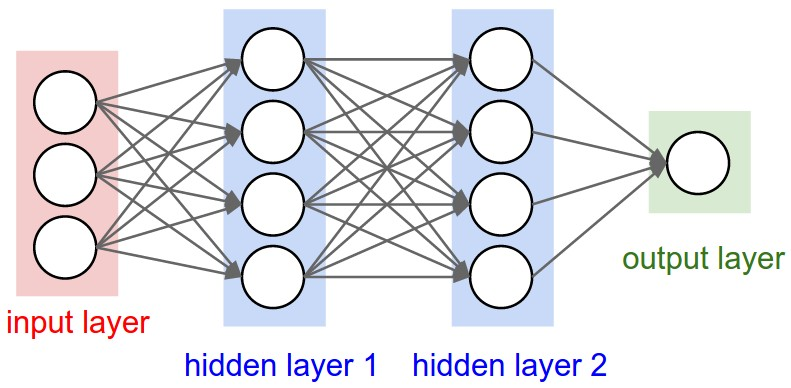

Neural Net

Analytical

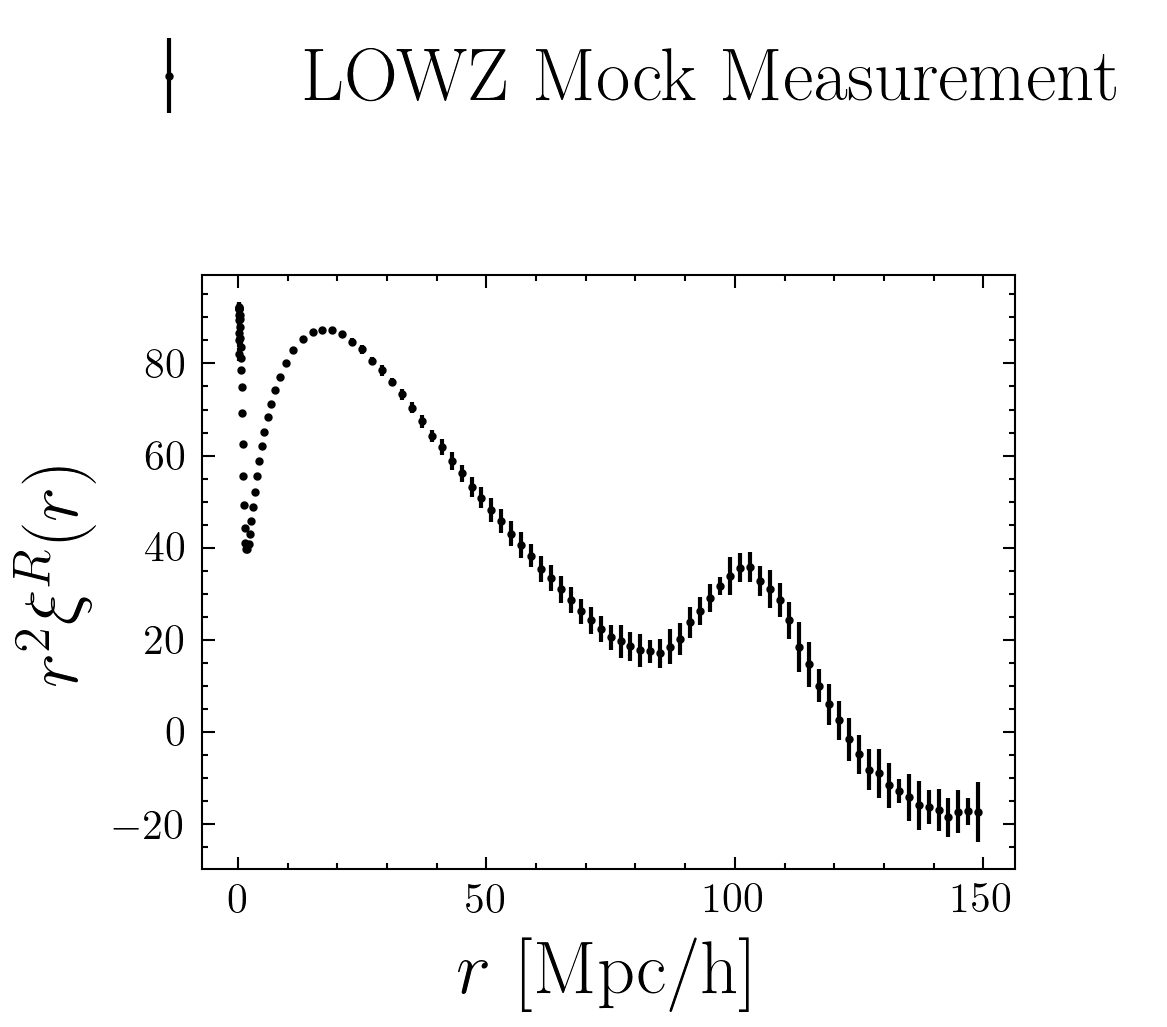

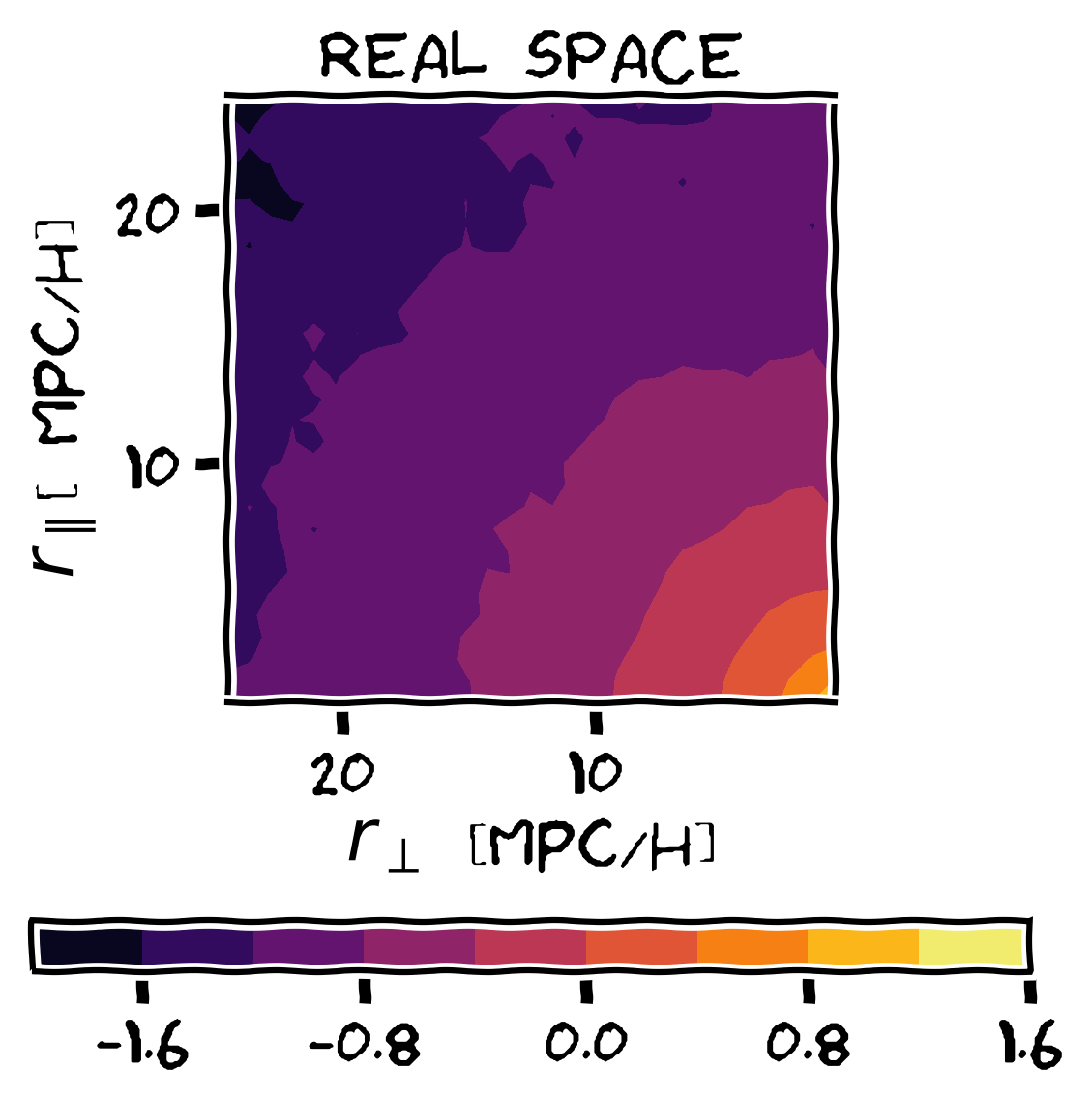

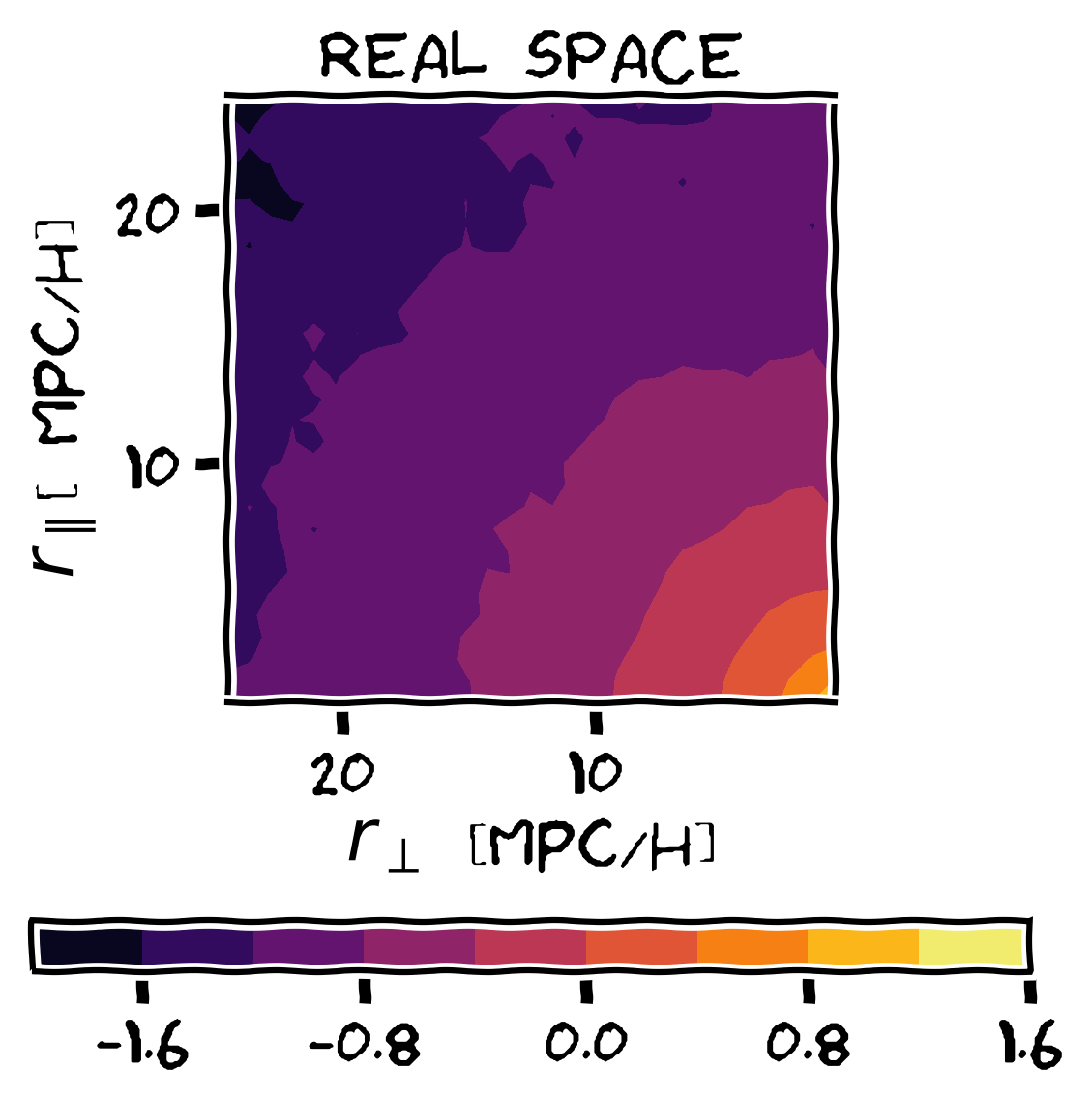

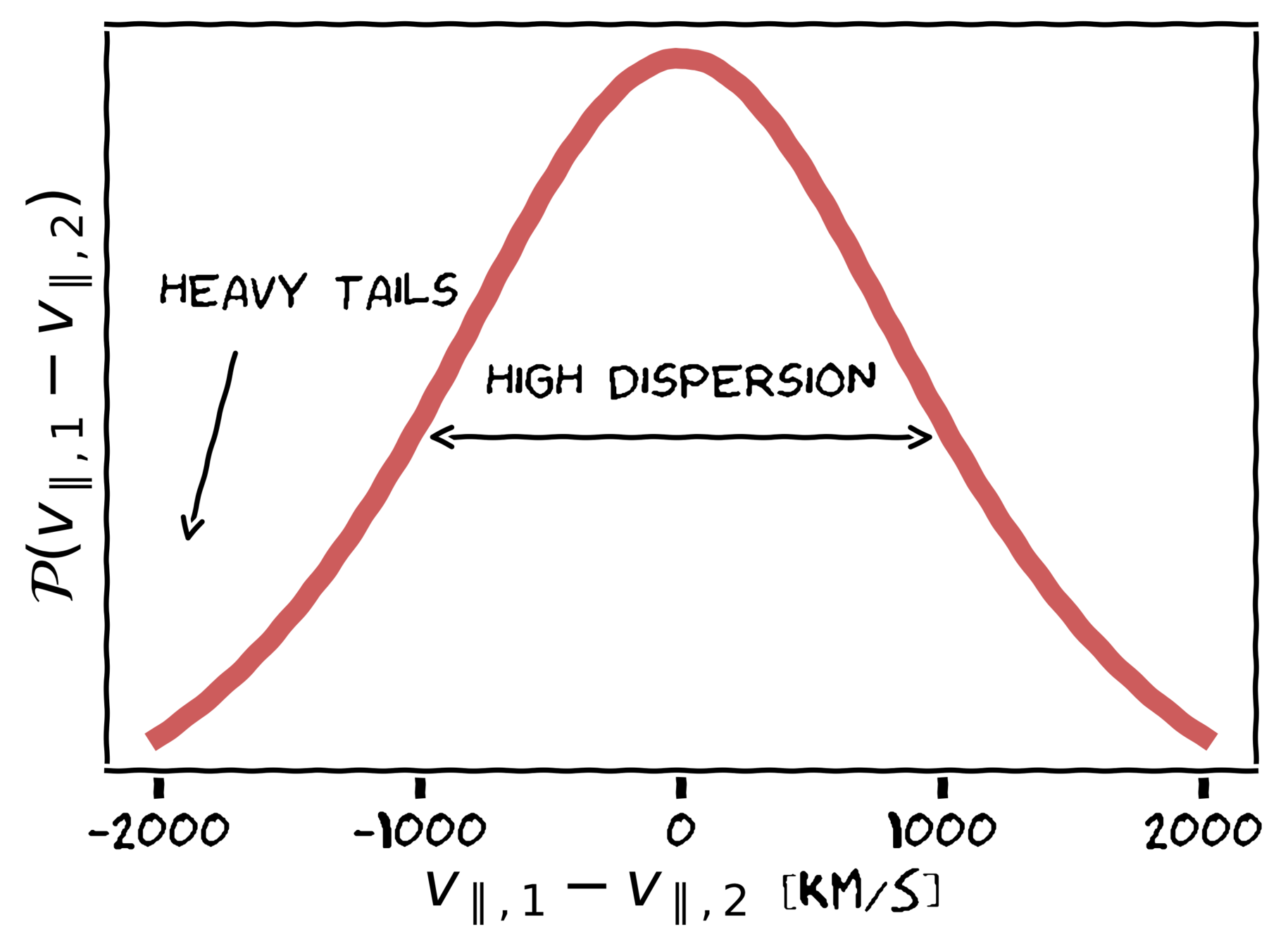

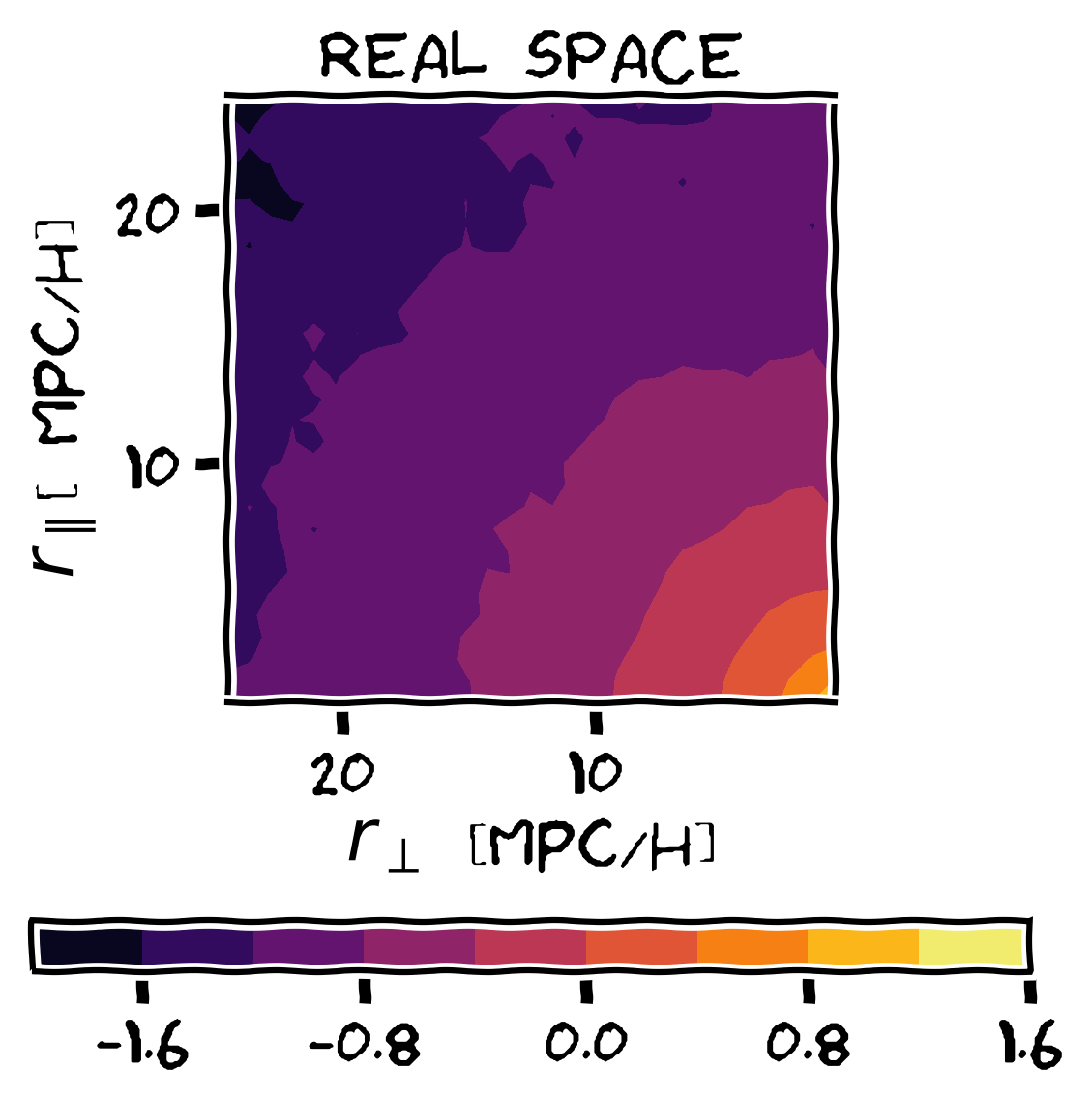

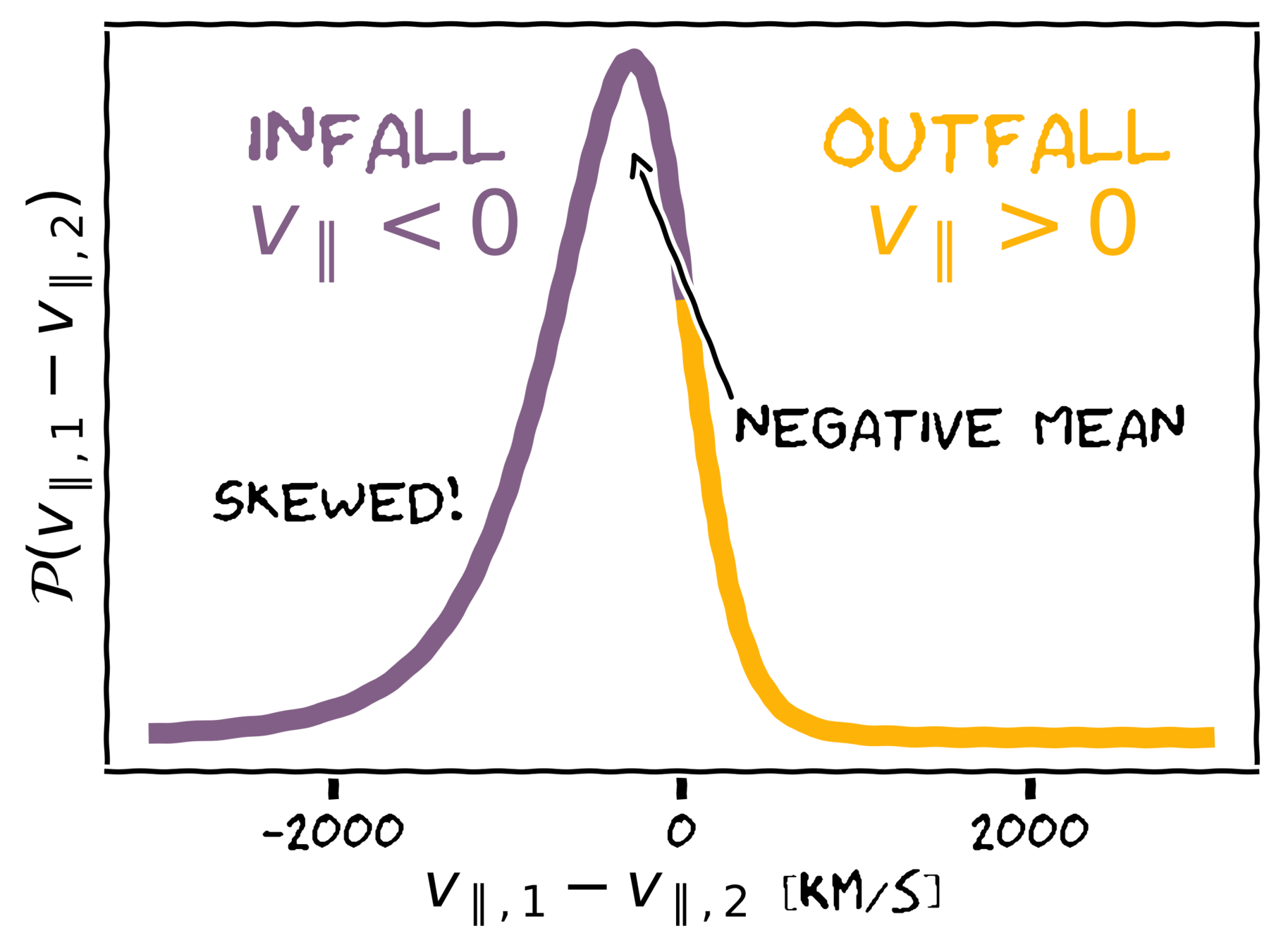

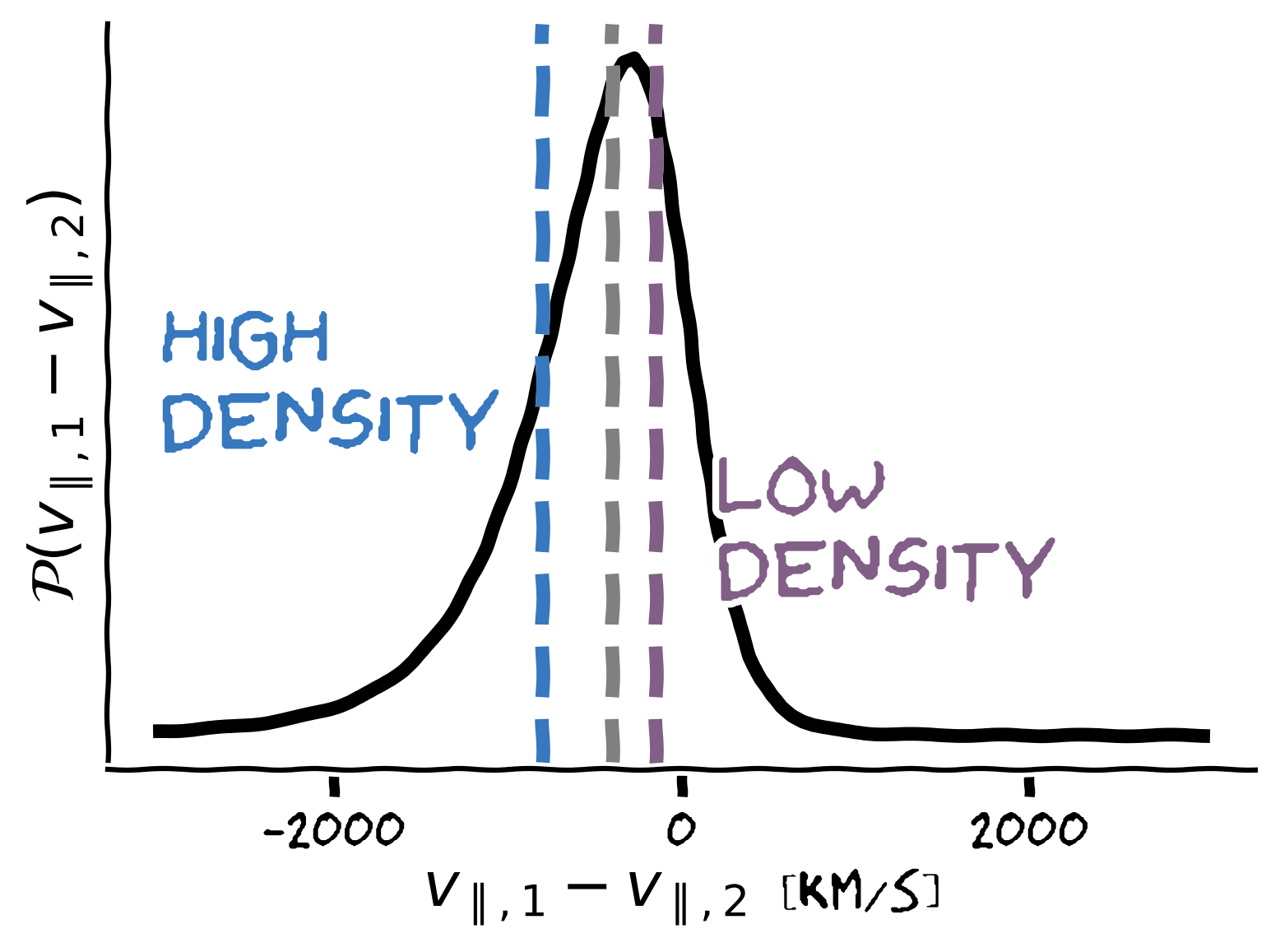

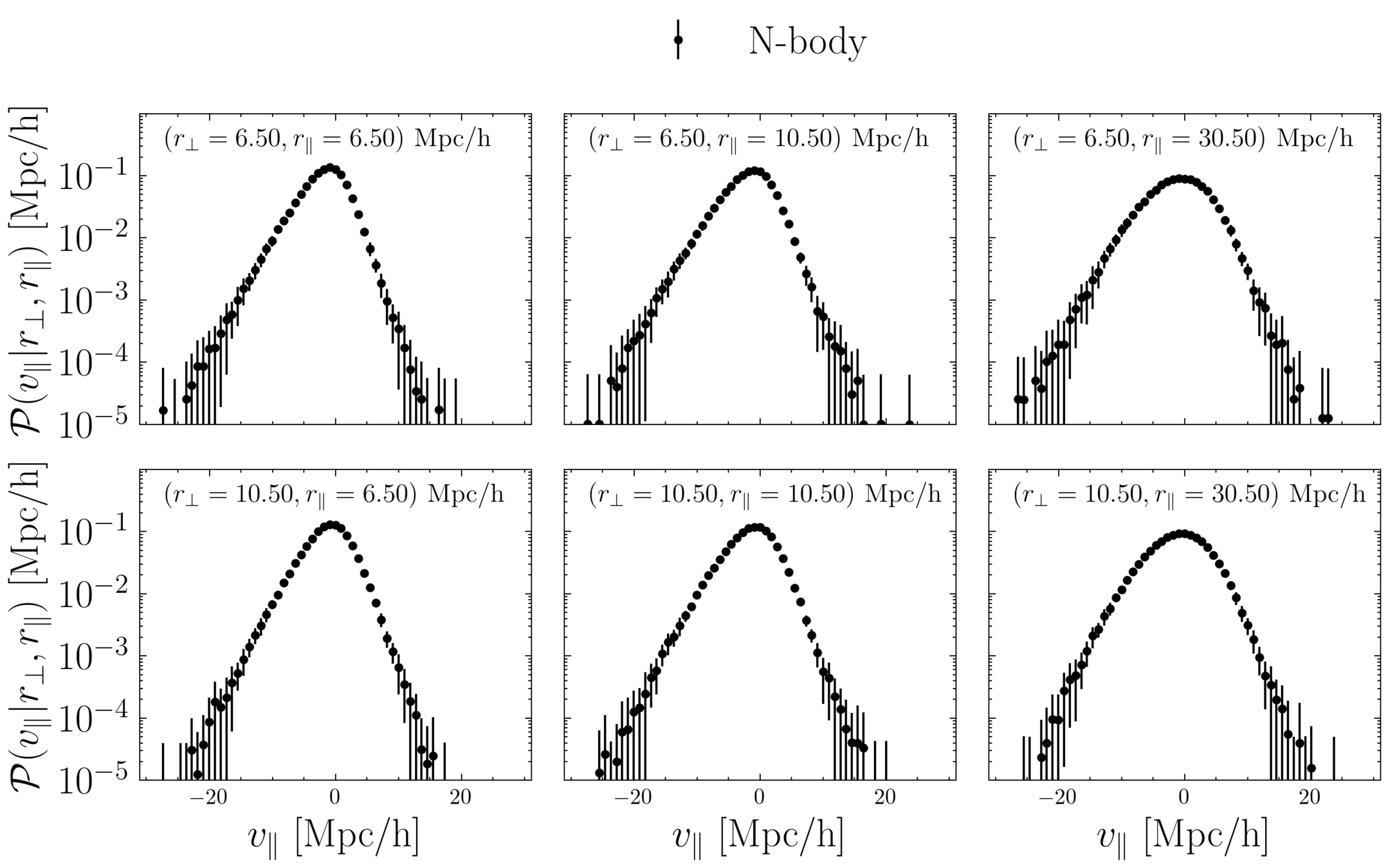

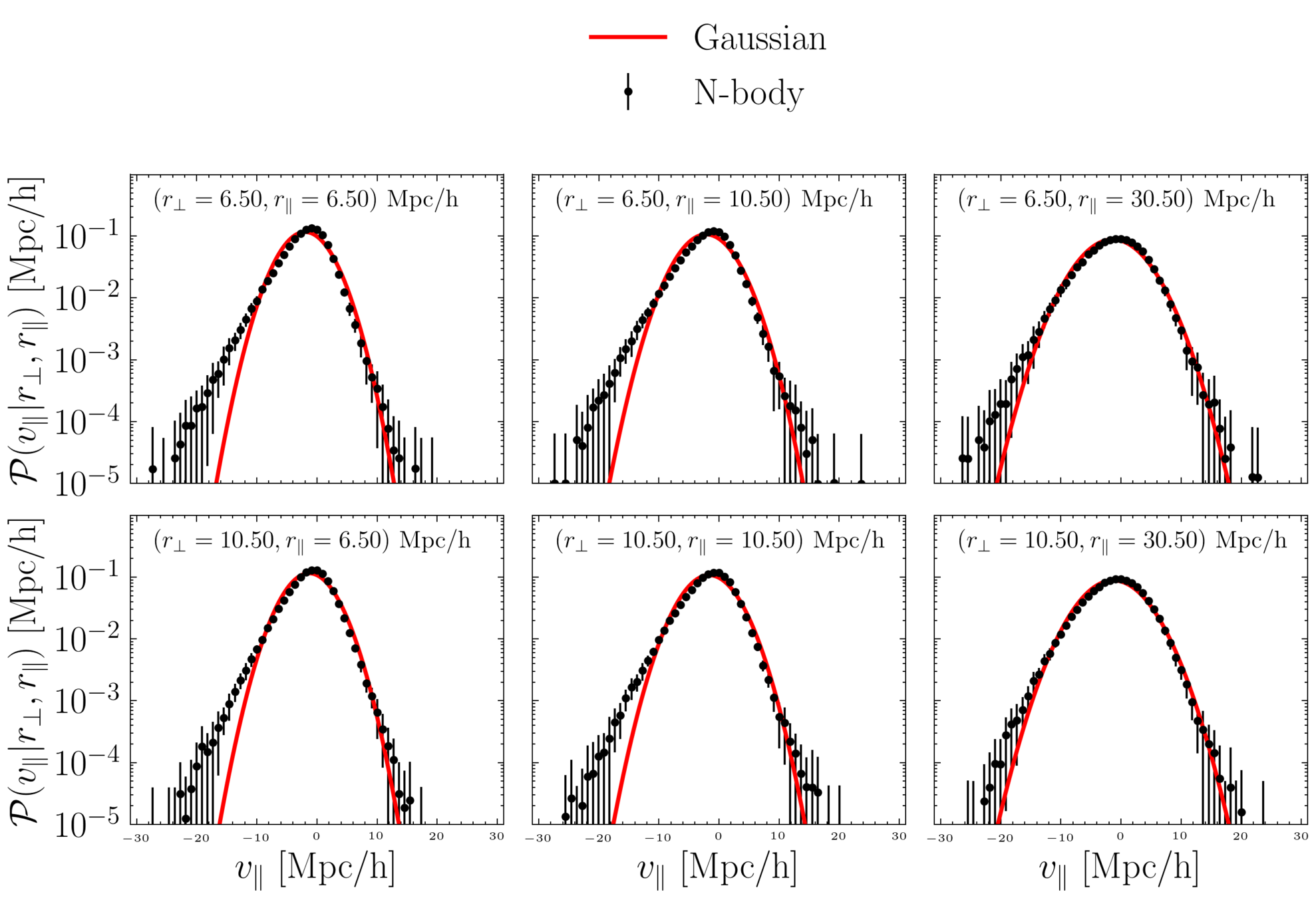

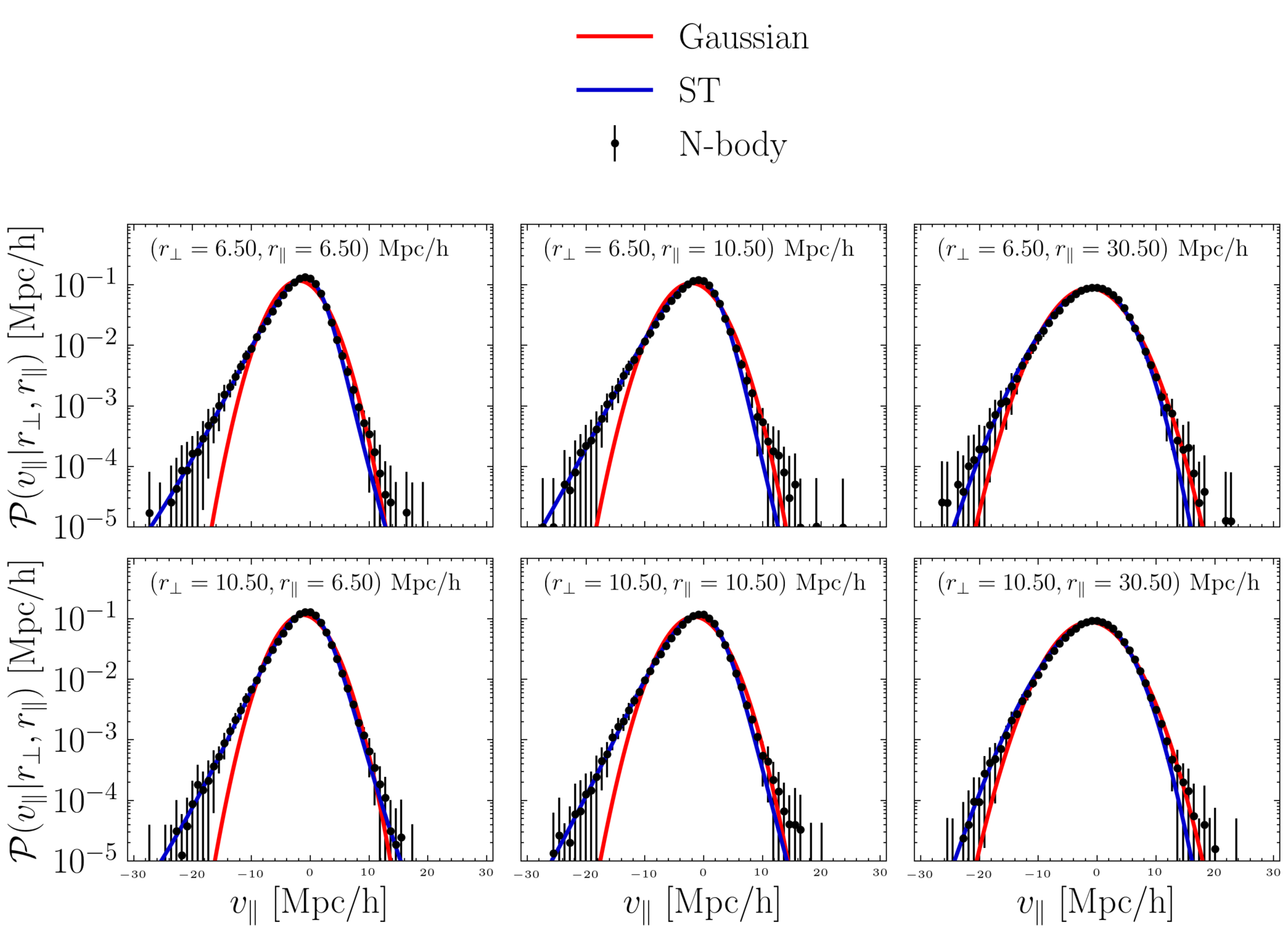

The Streaming Model

PAIRWISE VELOCITY DISTRIBUTION

Probability of finding a pair of galaxies at distance r

Virial motions within halos

Infall towards halos

On large scales,

slowly varying function of

n = 4 reproduces clustering down to small scales

INFALL

OUTFLOW
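For reference, the streaming model is commonly written in the following form. The notation is an assumption, since the slide's own equation did not survive extraction: $\xi^{s}$ and $\xi^{r}$ are the redshift-space and real-space correlation functions, $\mathcal{P}$ is the pairwise velocity distribution along the line of sight, and velocities are expressed in distance units.

$$
1 + \xi^{s}(s_\perp, s_\parallel) = \int_{-\infty}^{\infty} \mathrm{d}r_\parallel \, \left[1 + \xi^{r}(r)\right] \, \mathcal{P}\left(v_\parallel = s_\parallel - r_\parallel \mid \mathbf{r}\right), \qquad r^2 = s_\perp^2 + r_\parallel^2
$$

The term in square brackets is the probability of finding a pair at real-space separation $r$, and $\mathcal{P}$ maps that pair to its observed line-of-sight separation.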

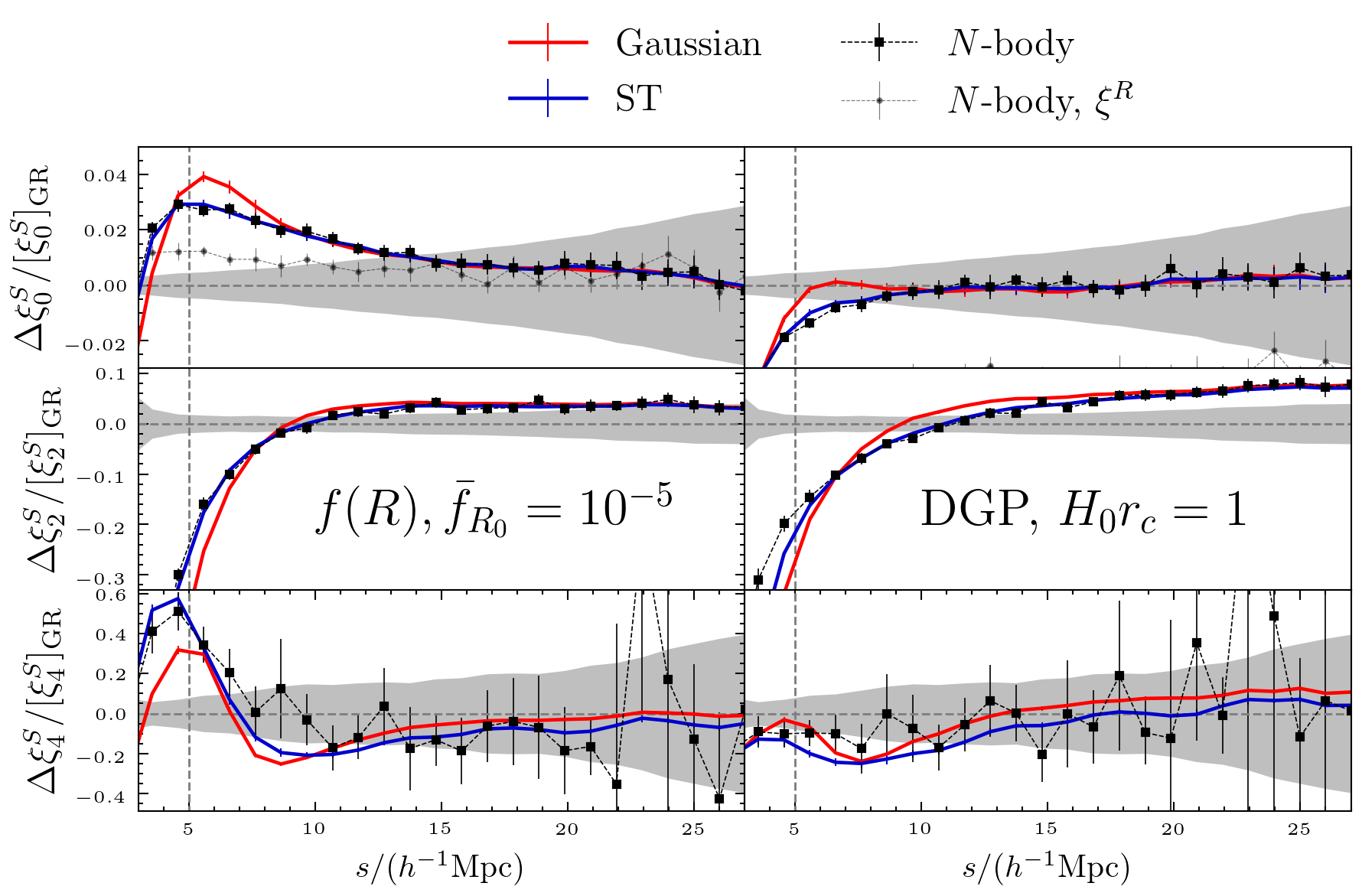

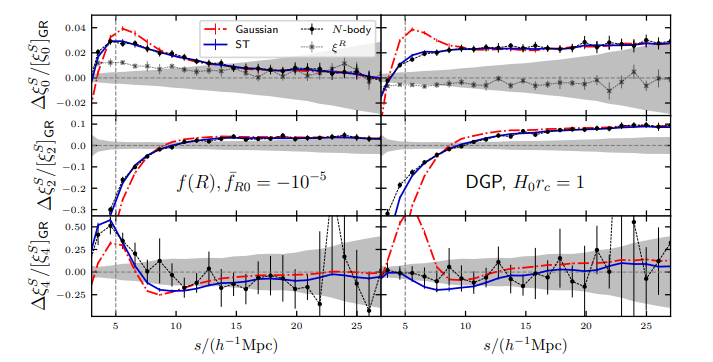

Two representative extensions to General Relativity:

- The background expansion is the same as LCDM

- One parameter to describe deviations from LCDM

How do these vary with cosmological parameters on small scales?

Described by four parameters

Code available on GitHub soon!

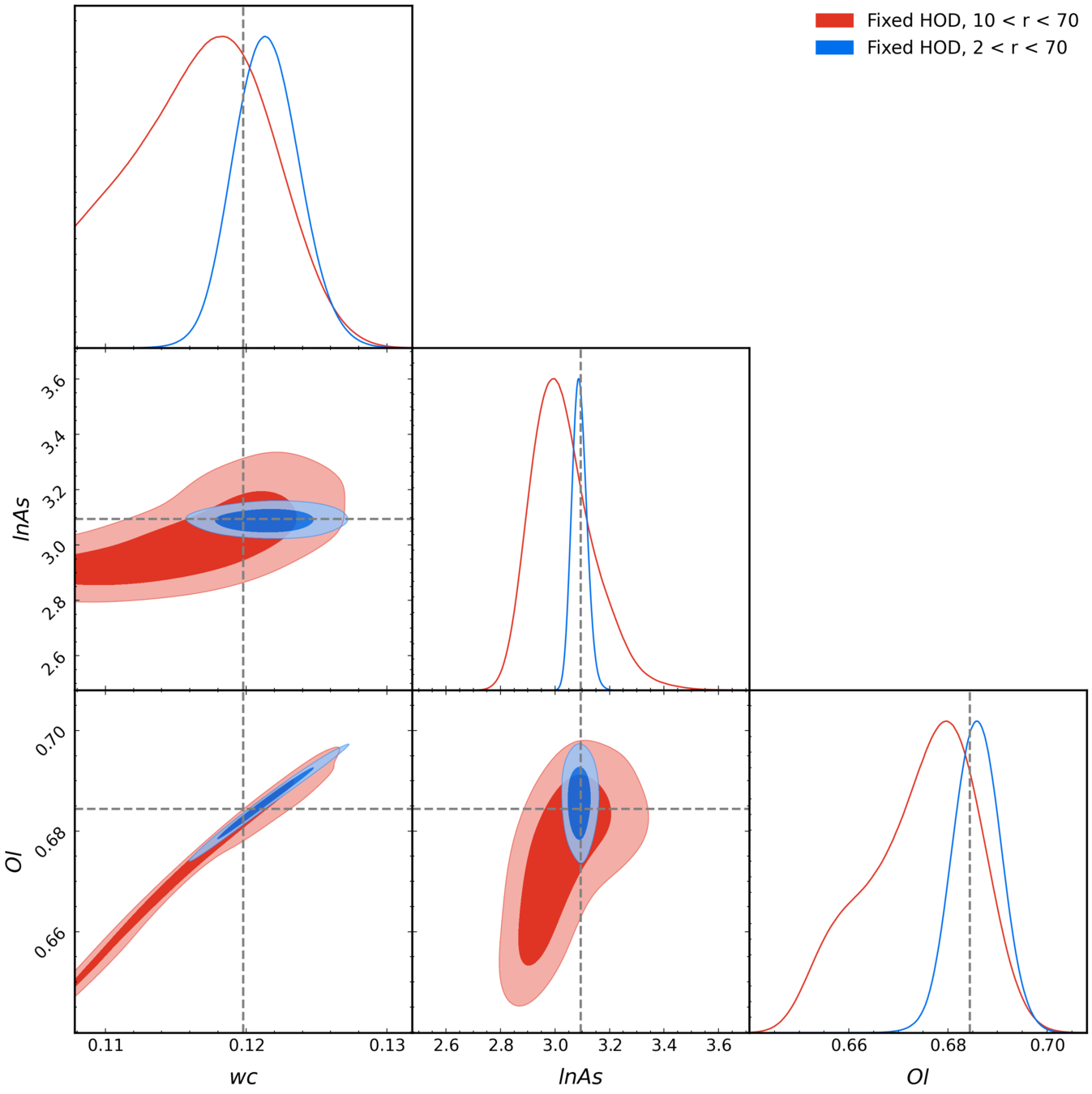

Likelihood evaluations

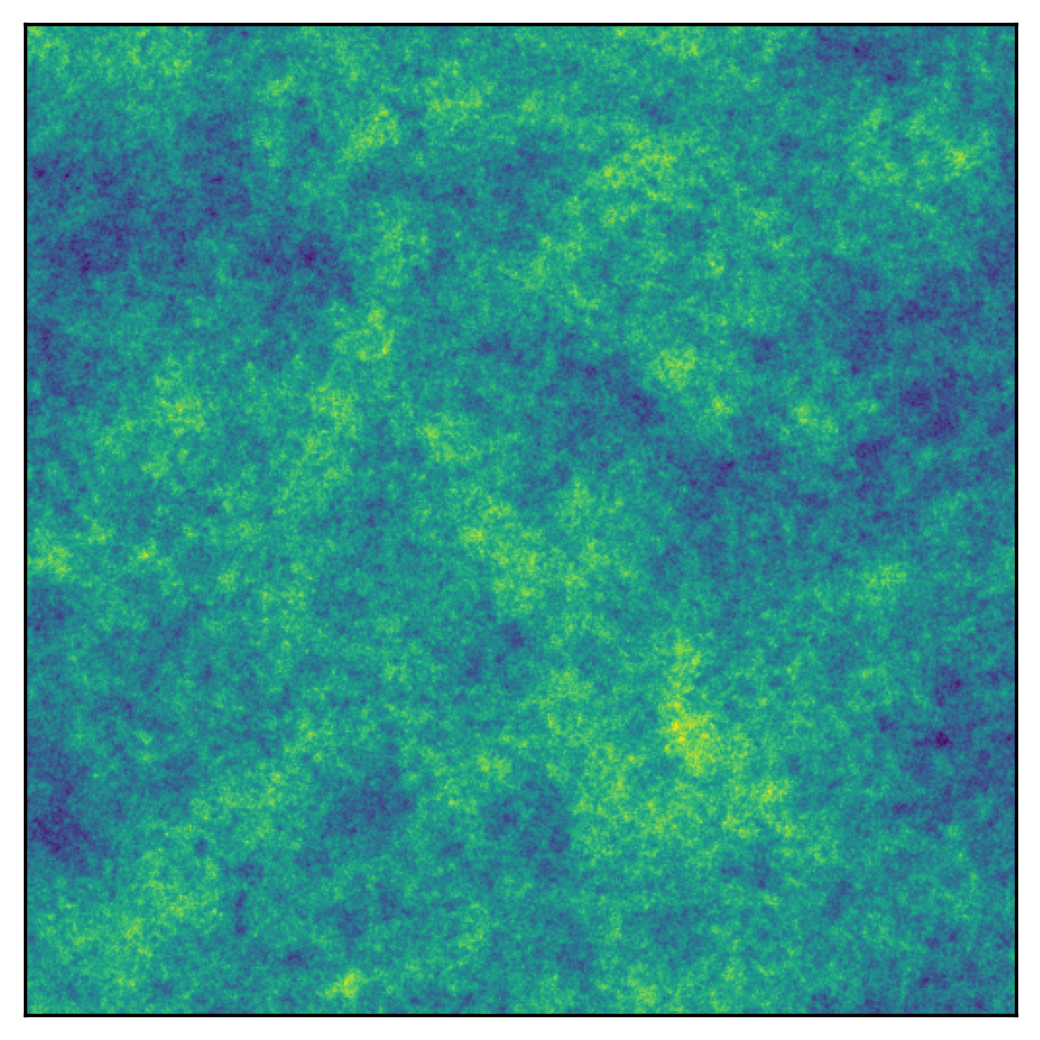

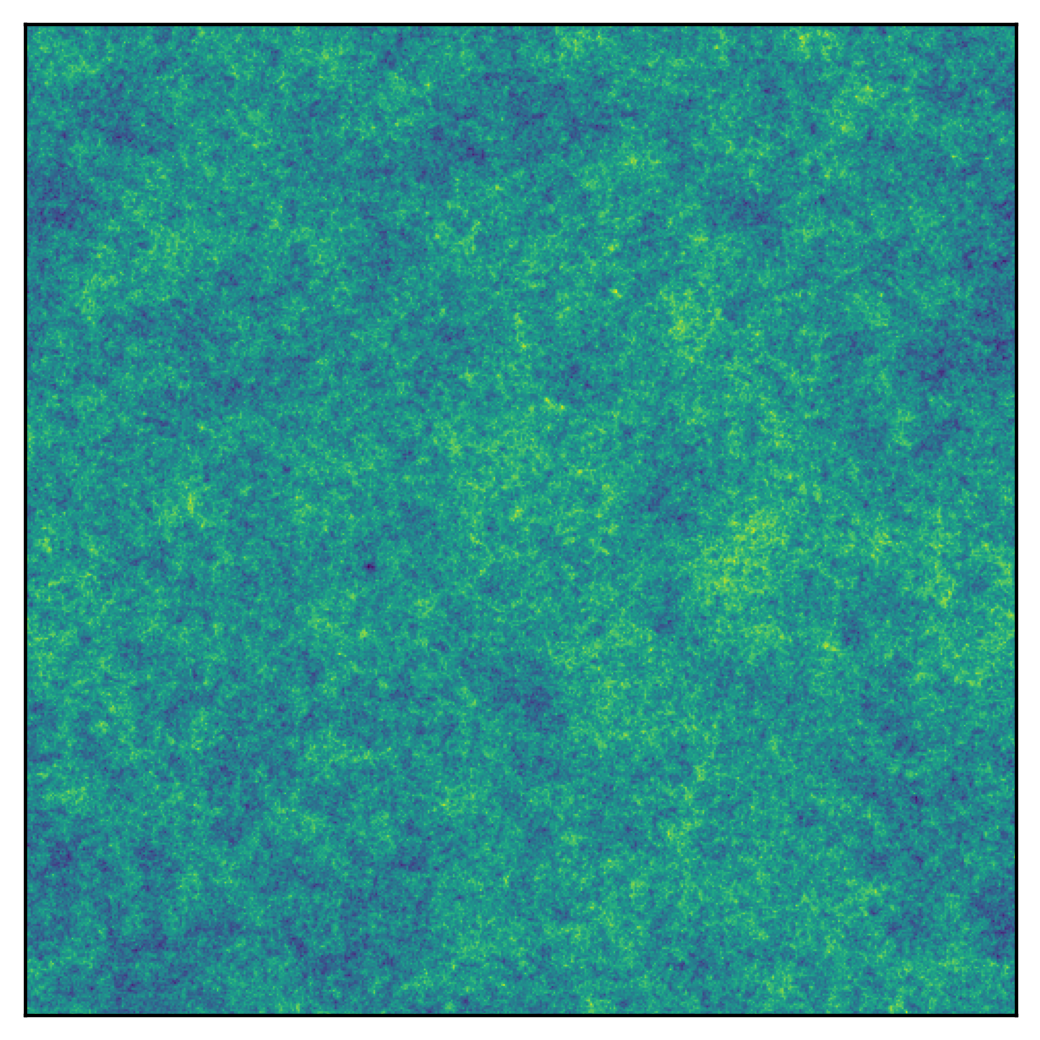

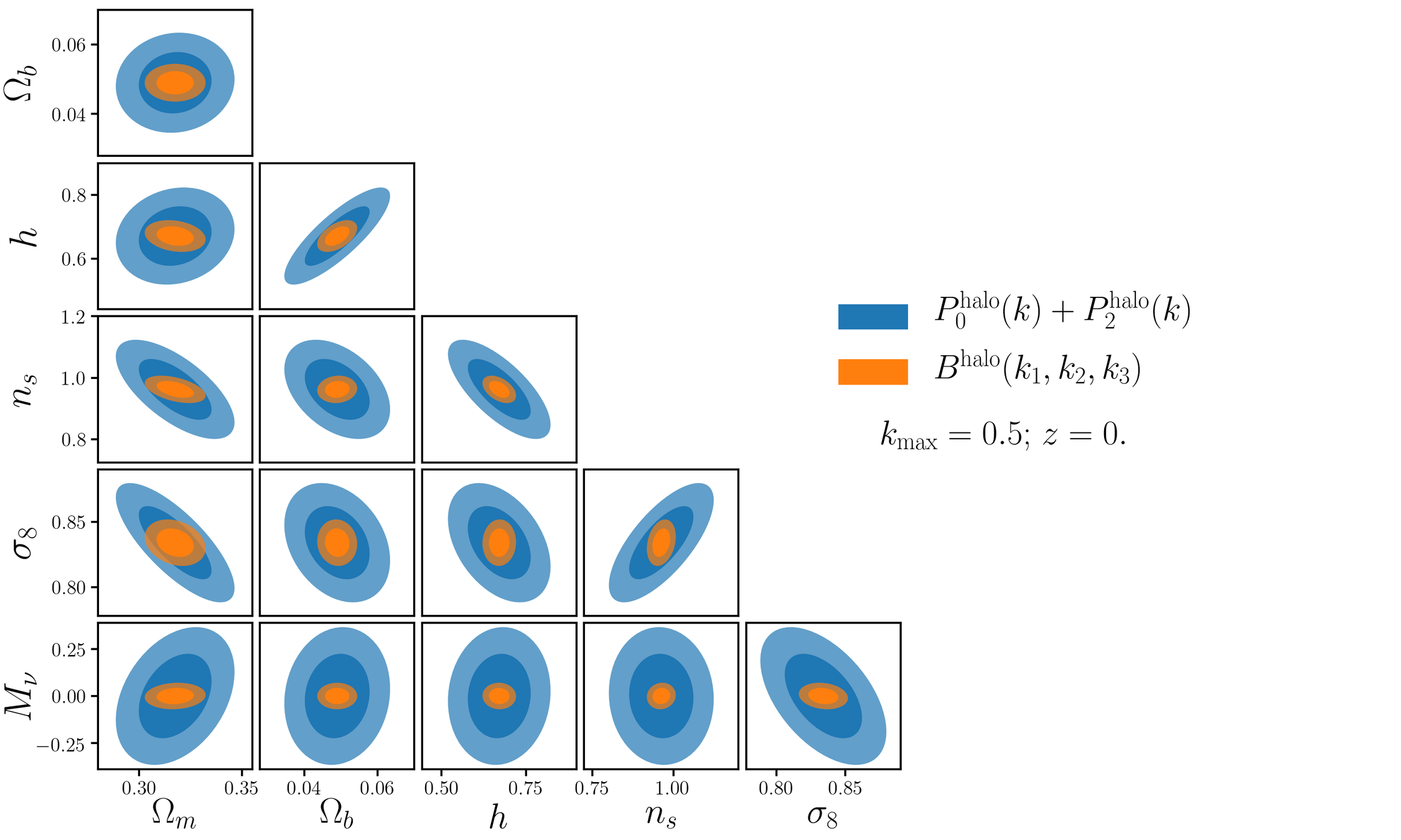

But... how much information are we ignoring?

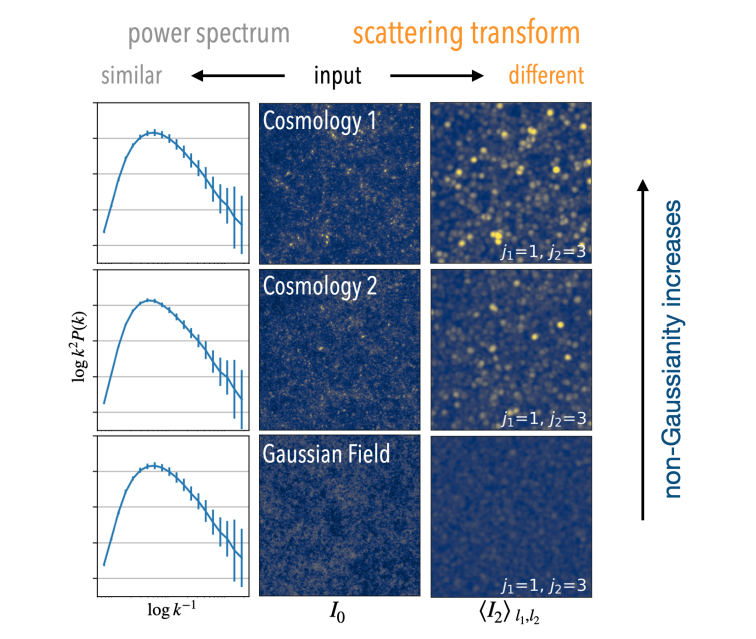

Credit: ChangHoon Hahn et al https://arxiv.org/abs/2012.02200

[Figure labels: P (separation r), B (sides r1, r2, r3)]

Credit: Sihao Cheng et al https://arxiv.org/pdf/2006.08561.pdf

- Input: x

- Neural network: f

- Representation (summary statistic): r = f(x)

- Output: o = g(r)
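The composition r = f(x), o = g(r) can be sketched as two small networks joined by a low-dimensional bottleneck. The layer sizes, the random untrained weights and the helper names `make_mlp` and `apply_mlp` are all hypothetical; the sketch only shows the data flow, in which the output sees the input solely through the learned summary r.

```python
import numpy as np

def make_mlp(sizes, rng):
    """Random (untrained) weights and zero biases for a fully connected network."""
    return [
        (rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_out)), np.zeros(n_out))
        for n_in, n_out in zip(sizes[:-1], sizes[1:])
    ]

def apply_mlp(params, x):
    """tanh on hidden layers, linear final layer."""
    for w, b in params[:-1]:
        x = np.tanh(x @ w + b)
    w, b = params[-1]
    return x @ w + b

rng = np.random.default_rng(0)
f_params = make_mlp([64, 32, 3], rng)  # f: 64-dim input -> 3-dim representation
g_params = make_mlp([3, 16, 2], rng)   # g: representation -> 2 outputs

x = rng.normal(size=(5, 64))           # 5 mock inputs
r = apply_mlp(f_params, x)             # r = f(x), the summary statistic
o = apply_mlp(g_params, r)             # o = g(r)
```

Training both networks end to end on the output o is what forces r to retain the information that matters for the task.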

Increased interpretability through structured inputs

Modelling cross-correlations

ML and cosmology

- ML to accelerate non-linear predictions: allow MCMC sampling of non-linear scales

- Precision of future surveys: what and how we emulate will have a big impact on cosmological constraints

- Can ML extract **all** the information that there is at the field-level in the non-linear regime?

- Compare data and simulations, point us to the missing pieces?
