Bayesian sparse regression

Dimitrije Marković

DySCO meeting 16.02.2022

Sparse regression in GLMs

Outline

- Bayesian regression

- Hierarchical priors

- Shrinkage priors

- QR decomposition

- Pairwise interactions

Linear regression model

Simulated data

Generative model

Posterior distribution

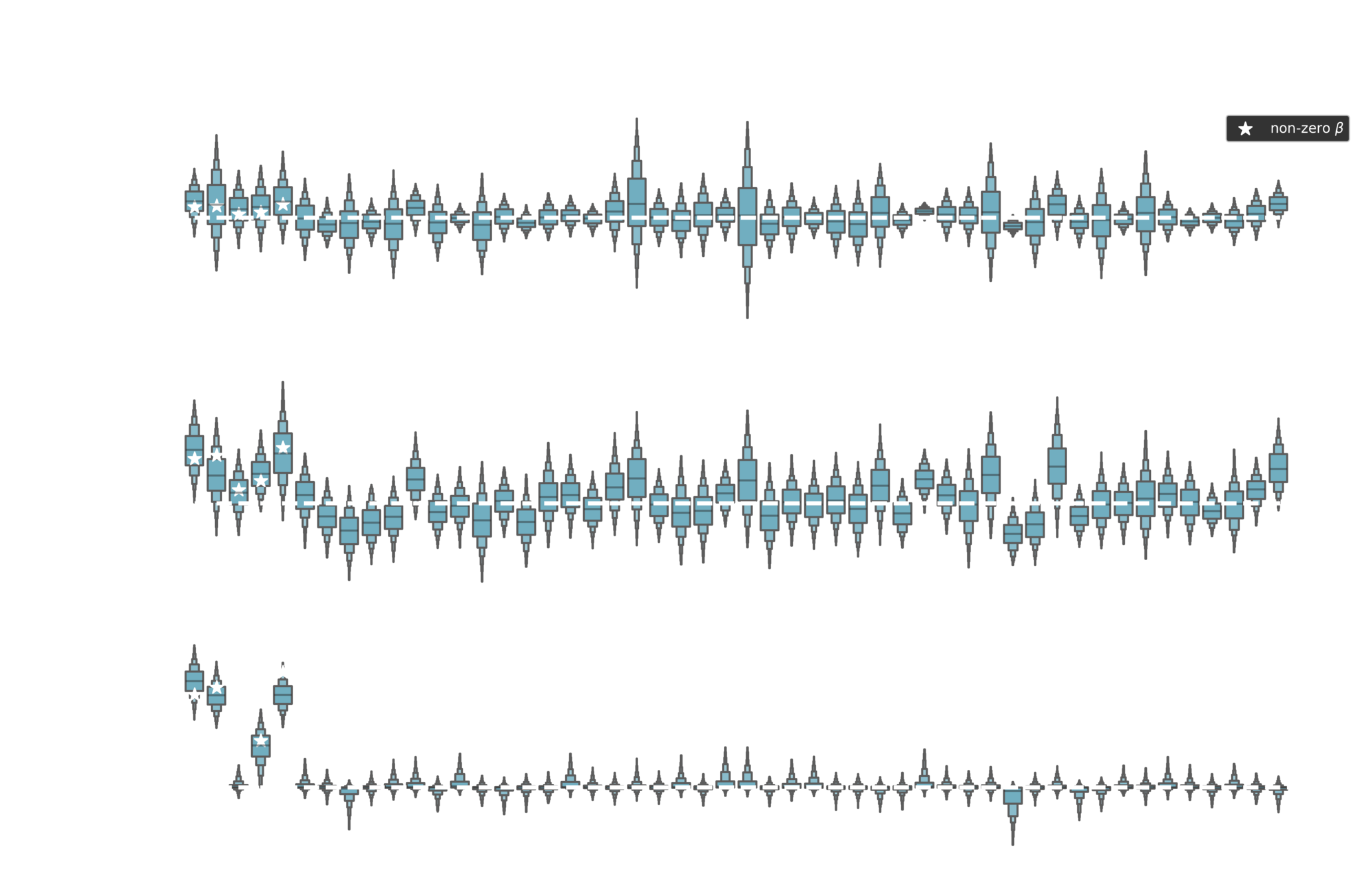

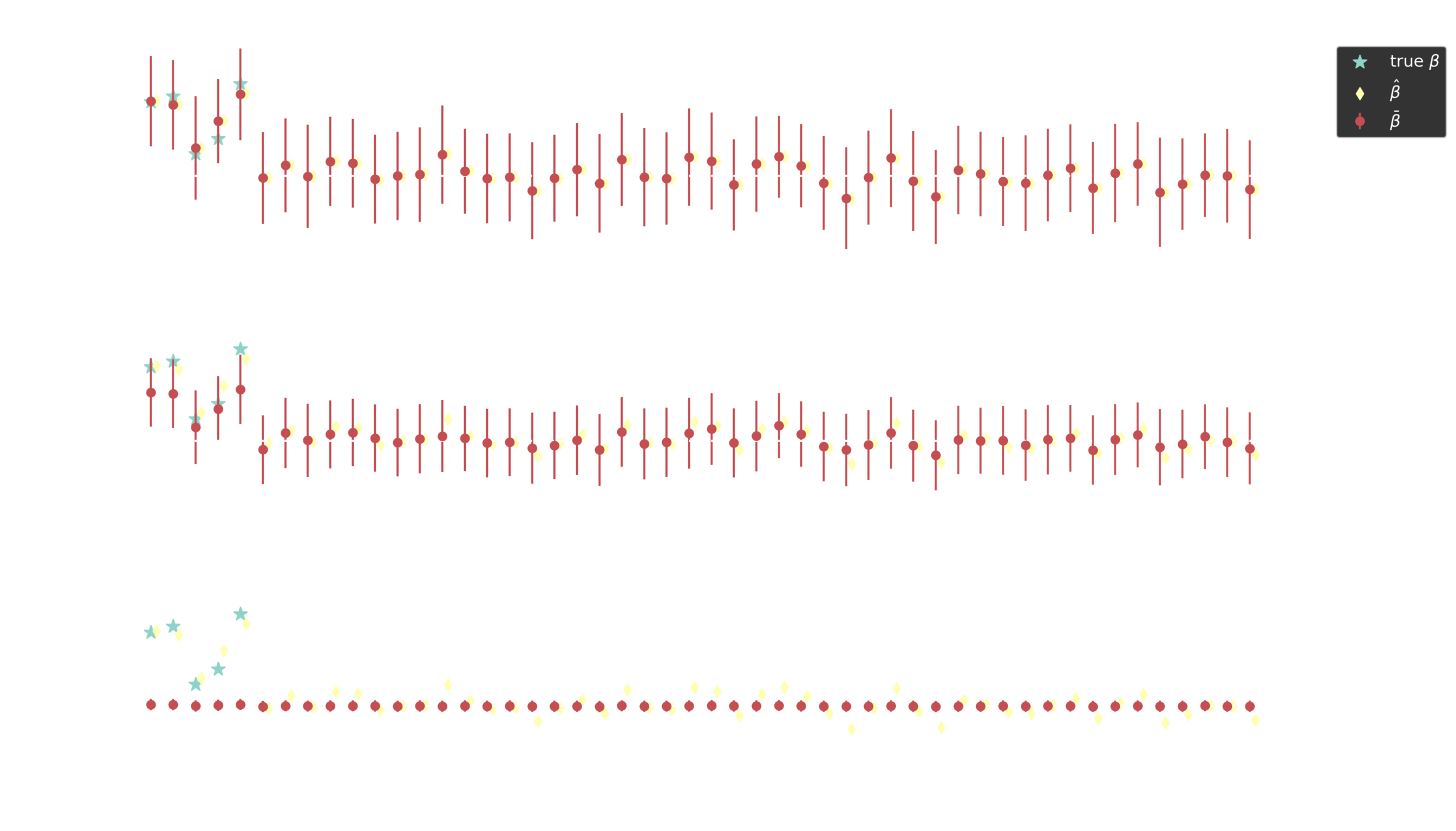

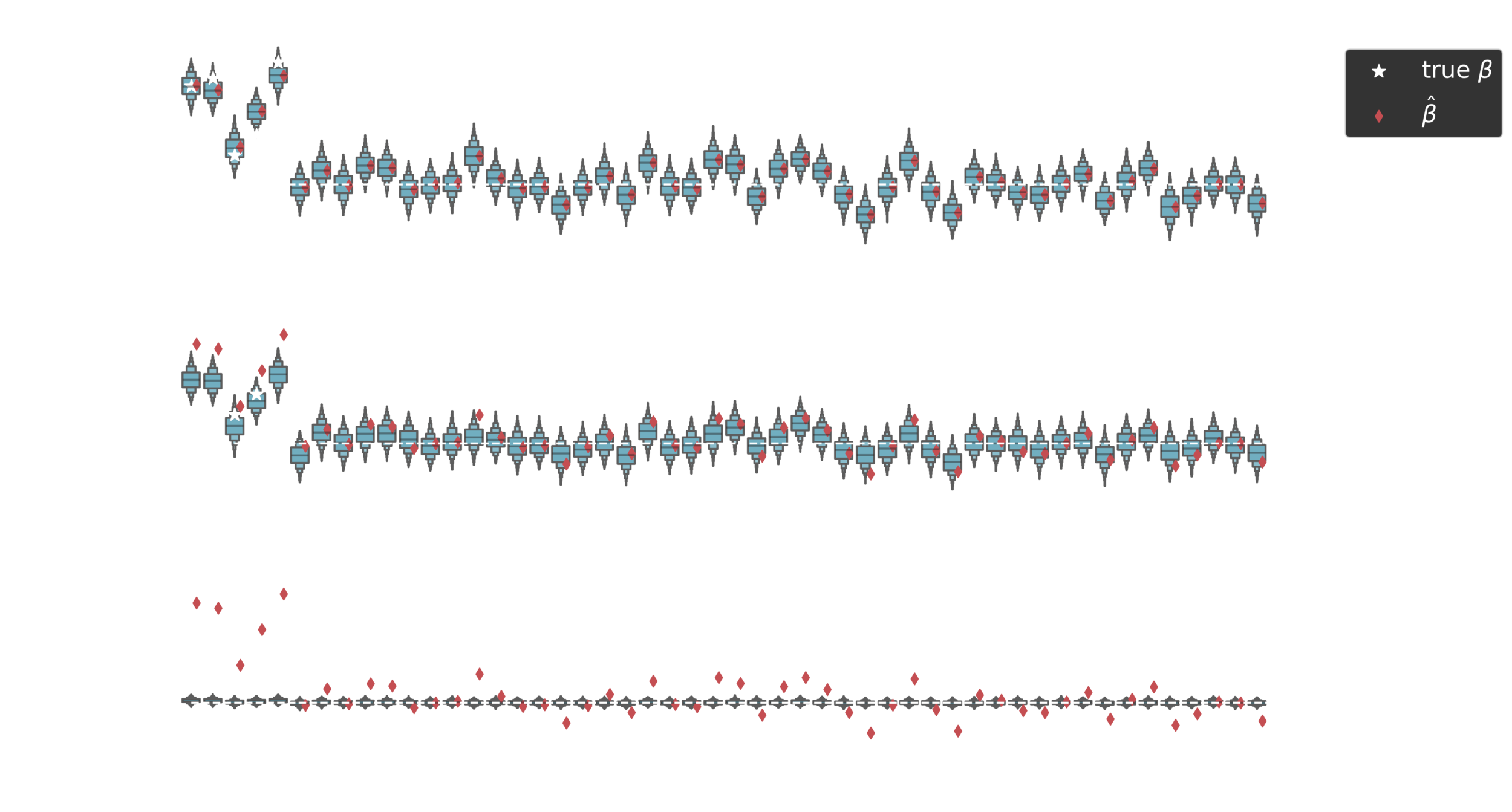

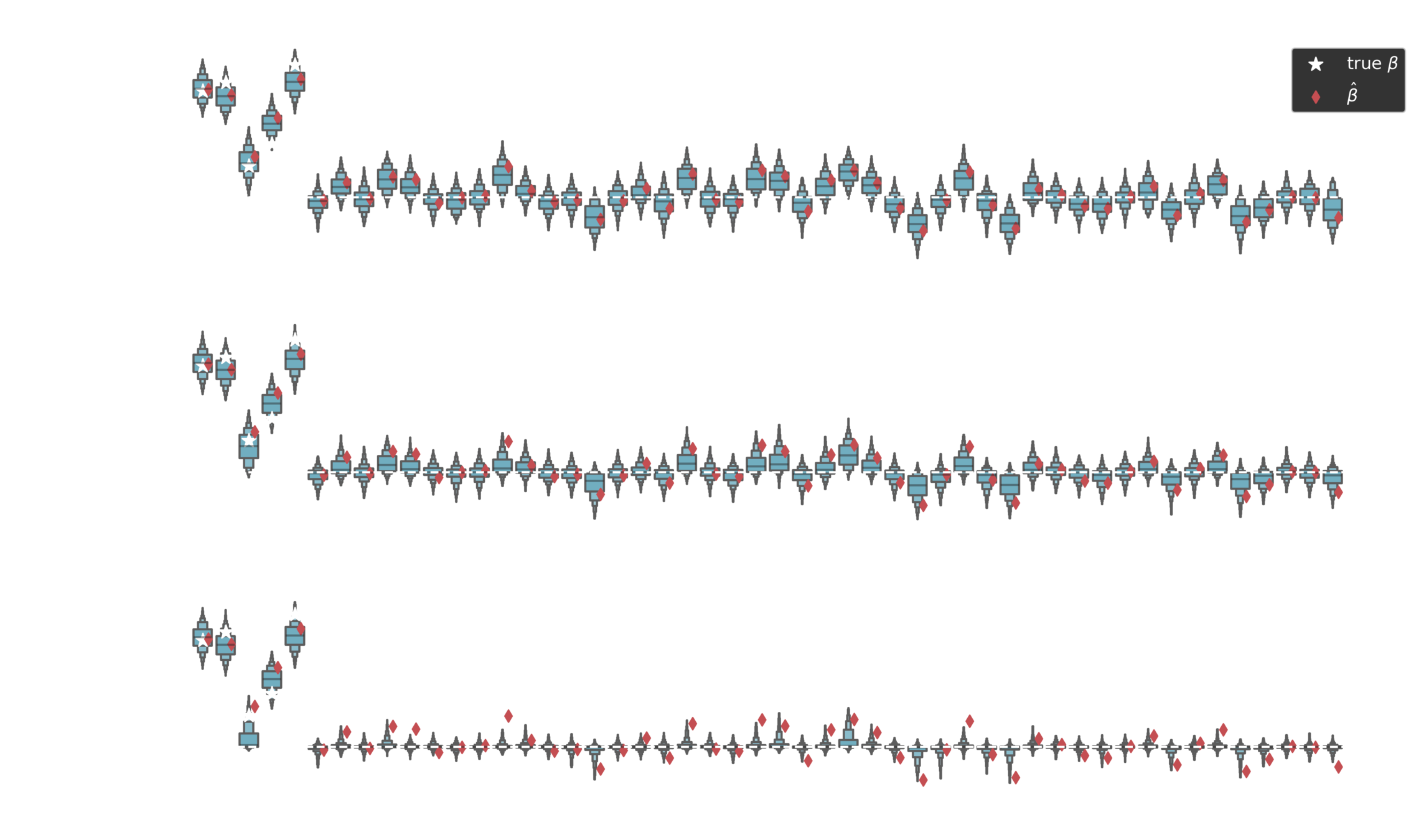

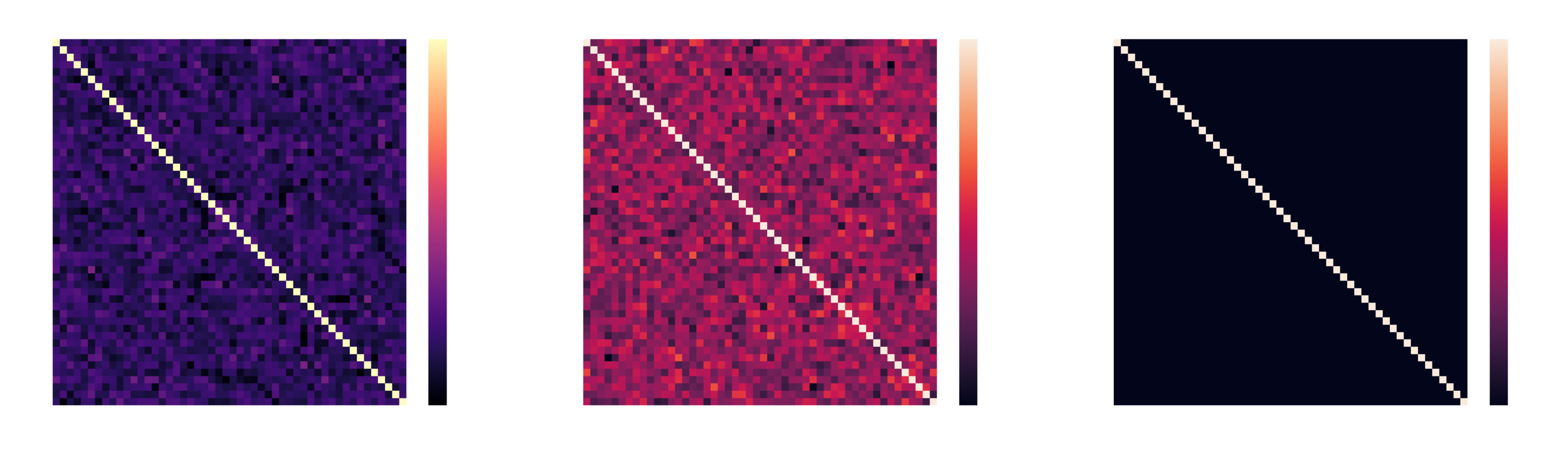

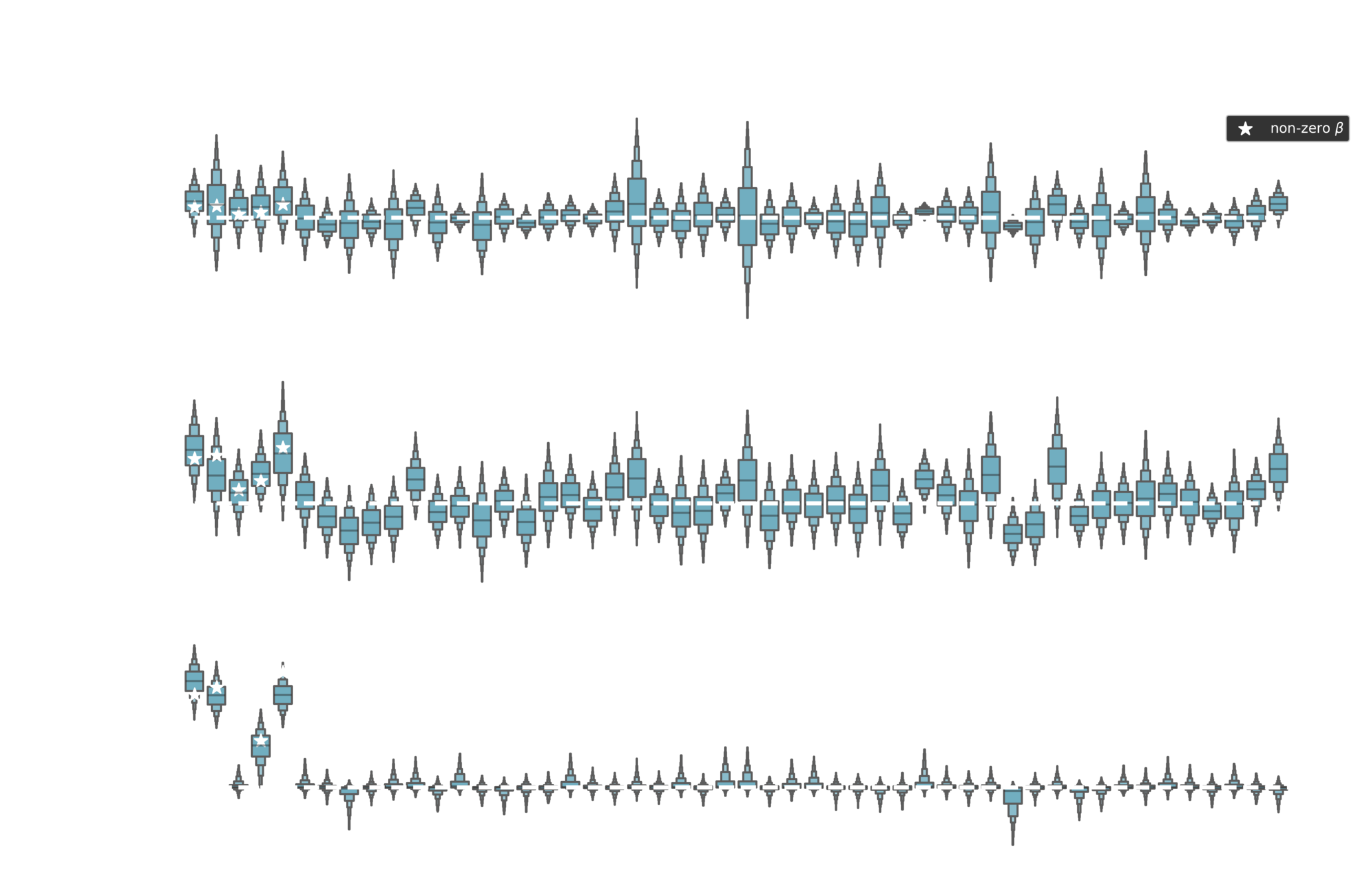
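A minimal sketch of the linear regression setup, assuming a Gaussian likelihood with known noise scale σ and an independent Gaussian prior β_d ~ N(0, τ²) on every coefficient. The exact priors on the slides are not recoverable from the text. Under these assumptions the posterior is Gaussian with precision XᵀX/σ² + I/τ² and mean equal to the inverse precision times Xᵀy/σ². The helper names (`simulate`, `solve`, `posterior_mean`) and all numbers are hypothetical.

```python
import random

def simulate(n, beta, sigma, seed=0):
    """Draw a design matrix with standard-normal entries and noisy responses."""
    rng = random.Random(seed)
    X = [[rng.gauss(0.0, 1.0) for _ in beta] for _ in range(n)]
    y = [sum(b * x for b, x in zip(beta, row)) + rng.gauss(0.0, sigma) for row in X]
    return X, y

def solve(A, b):
    """Solve A z = b by Gaussian elimination with partial pivoting."""
    d = len(b)
    M = [row[:] + [rhs] for row, rhs in zip(A, b)]  # augmented matrix
    for col in range(d):
        piv = max(range(col, d), key=lambda r: abs(M[r][col]))
        M[col], M[piv] = M[piv], M[col]
        for r in range(col + 1, d):
            f = M[r][col] / M[col][col]
            for c in range(col, d + 1):
                M[r][c] -= f * M[col][c]
    z = [0.0] * d
    for r in range(d - 1, -1, -1):  # back substitution
        z[r] = (M[r][d] - sum(M[r][c] * z[c] for c in range(r + 1, d))) / M[r][r]
    return z

def posterior_mean(X, y, sigma, tau):
    """Posterior mean of beta under y ~ N(X beta, sigma^2 I), beta ~ N(0, tau^2 I)."""
    d = len(X[0])
    # Posterior precision: X^T X / sigma^2 + I / tau^2
    P = [[sum(row[i] * row[j] for row in X) / sigma**2 + (1.0 / tau**2 if i == j else 0.0)
          for j in range(d)] for i in range(d)]
    # Right-hand side: X^T y / sigma^2
    rhs = [sum(row[i] * yi for row, yi in zip(X, y)) / sigma**2 for i in range(d)]
    return solve(P, rhs)

beta_true = [2.0, 0.0, 0.0, -1.5, 0.0]  # sparse: only two non-zero coefficients
X, y = simulate(n=200, beta=beta_true, sigma=1.0)
print([round(b, 2) for b in posterior_mean(X, y, sigma=1.0, tau=1.0)])
```

The zero coefficients come out close to, but not exactly, zero, and the single global τ shrinks every coefficient by the same amount regardless of its size.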

Posterior estimates

Shrinkage factor

Piironen, Juho, and Aki Vehtari. "Sparsity information and regularization in the horseshoe and other shrinkage priors." Electronic Journal of Statistics 11.2 (2017): 5018-5051.
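In the cited paper, for uncorrelated regressors with zero mean and variances s_j², and a prior β_j ~ N(0, τ²λ_j²), the posterior mean is β̄_j = (1 − κ_j) β̂_j, where β̂_j is the maximum-likelihood estimate and κ_j = 1/(1 + n σ⁻² τ² s_j² λ_j²) is the shrinkage factor. A minimal sketch of that formula; the function names and parameter values are hypothetical.

```python
def shrinkage_factor(n, sigma, tau, lam, s=1.0):
    """kappa_j = 1 / (1 + n * sigma^-2 * tau^2 * s_j^2 * lambda_j^2)."""
    return 1.0 / (1.0 + n * tau**2 * s**2 * lam**2 / sigma**2)

def posterior_mean(beta_ml, n, sigma, tau, lam, s=1.0):
    """Posterior mean (1 - kappa_j) * beta_ml, valid for uncorrelated regressors."""
    return (1.0 - shrinkage_factor(n, sigma, tau, lam, s)) * beta_ml

for lam in (0.01, 0.1, 1.0, 10.0):
    k = shrinkage_factor(n=100, sigma=1.0, tau=0.1, lam=lam)
    m = posterior_mean(1.0, 100, 1.0, 0.1, lam)
    print(f"lambda={lam:>5}: kappa={k:.3f}, posterior mean={m:.3f}")
```

With these illustrative values n σ⁻² τ² = 1, so κ_j = 1/(1 + λ_j²): λ_j = 1 halves the estimate, while λ_j = 10 leaves it almost unshrunk. κ_j → 1 means full shrinkage to zero, κ_j → 0 means none.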

Generative model

Posterior estimates

Horseshoe prior

Piironen, Juho, and Aki Vehtari. "Sparsity information and regularization in the horseshoe and other shrinkage priors." Electronic Journal of Statistics 11.2 (2017): 5018-5051.

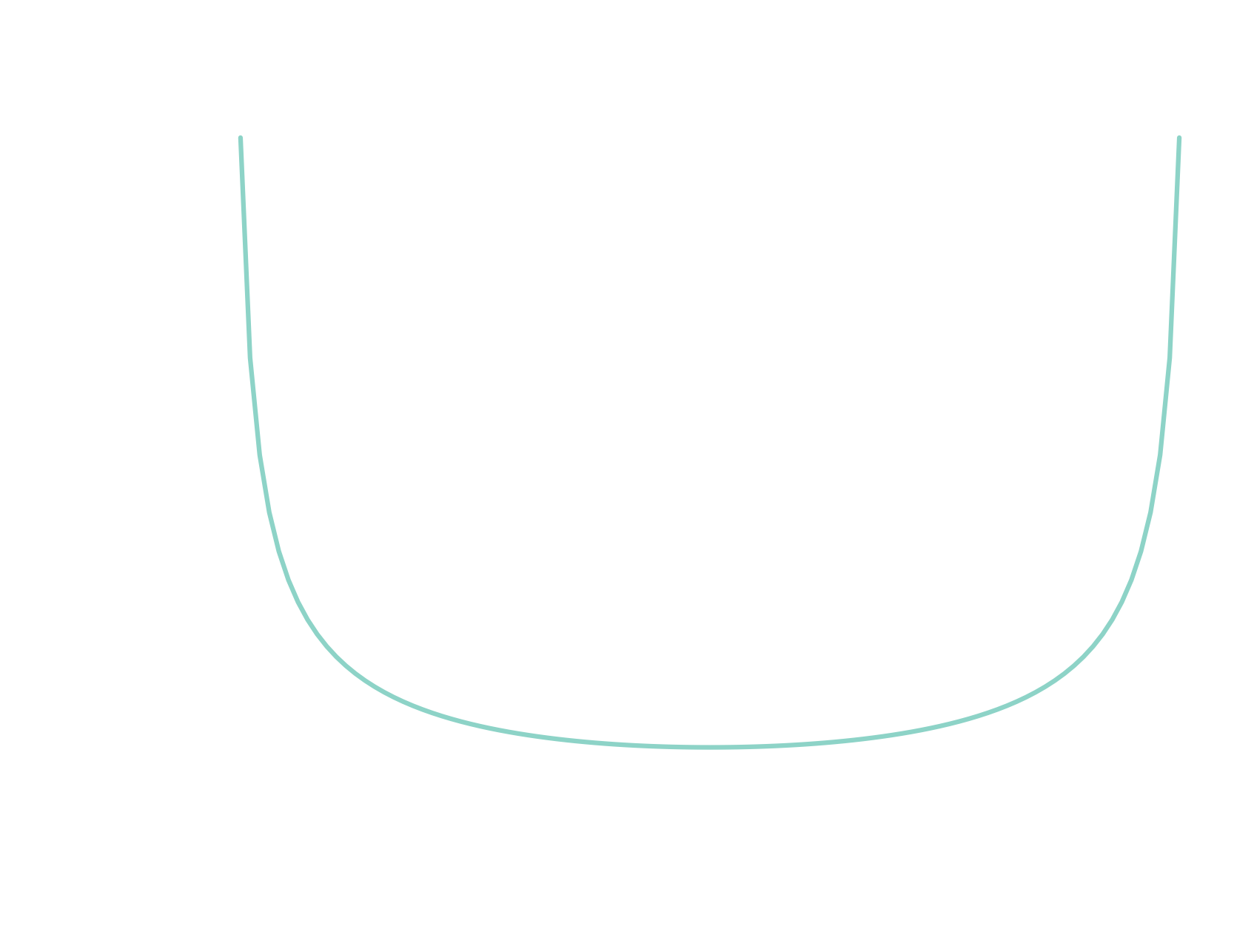

Half-Cauchy distribution
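The horseshoe places a half-Cauchy prior C⁺(0, 1) on the local scales λ_j. A sketch, using inverse-CDF sampling in pure Python, of where the name comes from: when n σ⁻² τ² s_j² = 1, the shrinkage factor κ_j = 1/(1 + λ_j²) follows a Beta(1/2, 1/2) distribution, whose density piles up near 0 (no shrinkage) and near 1 (full shrinkage). Sample sizes and seeds are arbitrary.

```python
import math
import random

def sample_half_cauchy(scale, rng):
    """Inverse-CDF draw from C+(0, scale): scale * tan(pi * U / 2), U ~ Uniform(0, 1)."""
    return scale * math.tan(math.pi * rng.random() / 2.0)

def beta_half_half_cdf(x):
    """CDF of Beta(1/2, 1/2): (2 / pi) * arcsin(sqrt(x))."""
    return 2.0 / math.pi * math.asin(math.sqrt(x))

rng = random.Random(1)
kappas = [1.0 / (1.0 + sample_half_cauchy(1.0, rng) ** 2) for _ in range(100_000)]

# Probability mass near 0 (little shrinkage) and near 1 (strong shrinkage)
low = sum(k < 0.1 for k in kappas) / len(kappas)
high = sum(k > 0.9 for k in kappas) / len(kappas)
print(f"P(kappa < 0.1) ~ {low:.3f}, P(kappa > 0.9) ~ {high:.3f}, "
      f"Beta(1/2, 1/2) tail: {beta_half_half_cdf(0.1):.3f}")
```

Both tails carry the same mass, which gives the U-shaped ("horseshoe") density of κ_j.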

Hierarchical generative model
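For reference, the standard horseshoe hierarchy from the cited paper, written with the symbols used in the shrinkage-factor formula (n observations, D regressors). The hyperpriors shown on the slide are not recoverable from the text, so the half-Cauchy prior on the global scale τ with scale τ_0 is an assumption here:

```latex
\begin{aligned}
y_i \mid \boldsymbol{\beta}, \sigma &\sim \mathcal{N}\!\left(\textstyle\sum_{j=1}^{D} x_{ij}\beta_j,\ \sigma^2\right), & i &= 1,\dots,n\\
\beta_j \mid \lambda_j, \tau &\sim \mathcal{N}\!\left(0,\ \tau^2\lambda_j^2\right), & j &= 1,\dots,D\\
\lambda_j &\sim \mathcal{C}^{+}(0, 1)\\
\tau &\sim \mathcal{C}^{+}(0, \tau_0)
\end{aligned}
```

The global scale τ pulls all coefficients towards zero, while the heavy-tailed local scales λ_j let individual coefficients escape the shrinkage.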

Posterior estimates

Non-zero coefficients

Piironen, Juho, and Aki Vehtari. "Sparsity information and regularization in the horseshoe and other shrinkage priors." Electronic Journal of Statistics 11.2 (2017): 5018-5051.

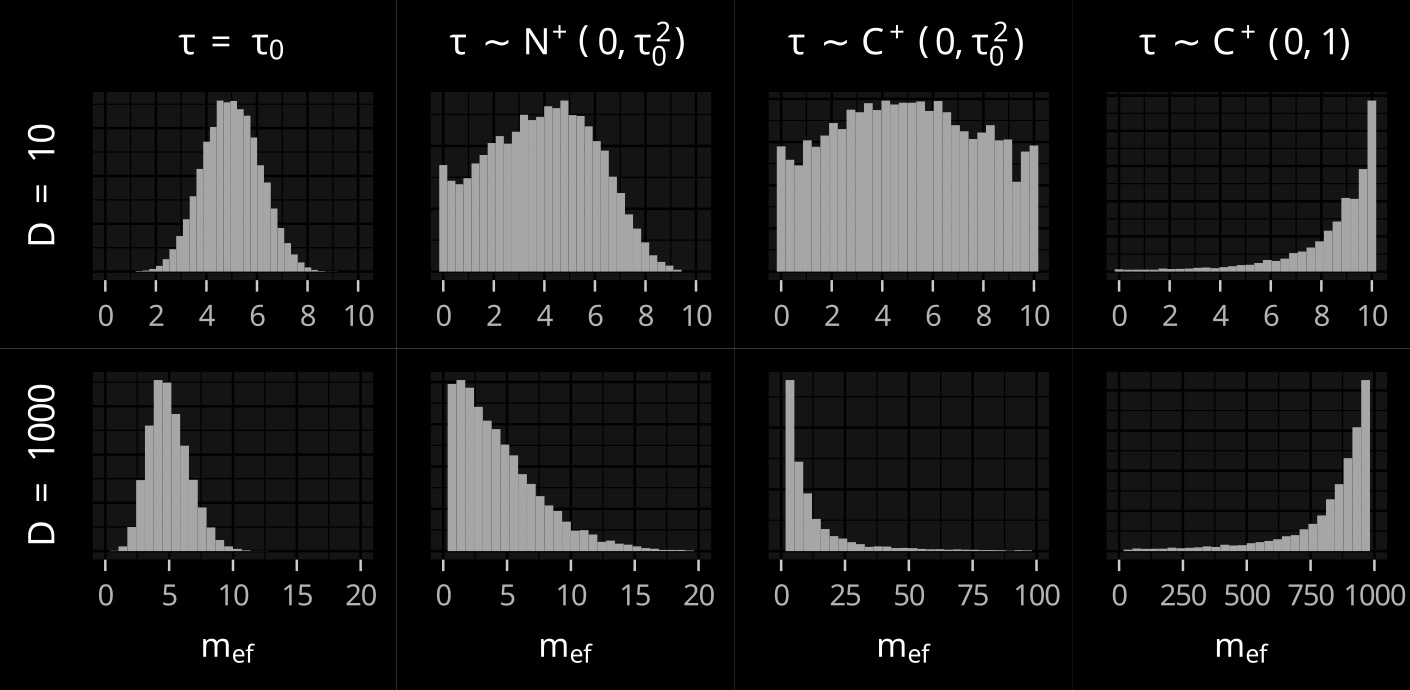

Effective number of non-zero coefficients

Expected effective number

Effective number

Piironen, Juho, and Aki Vehtari. "Sparsity information and regularization in the horseshoe and other shrinkage priors." Electronic Journal of Statistics 11.2 (2017): 5018-5051.
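The cited paper defines the effective number of non-zero coefficients as m_eff = Σ_j (1 − κ_j). For the horseshoe with λ_j ~ C⁺(0, 1) and s_j = 1, its prior expectation given τ and σ is E[m_eff | τ, σ] = D · a/(1 + a) with a = τ σ⁻¹ √n. The sketch below checks that closed form by Monte Carlo; D, n, σ and τ are illustrative values, not the settings used on the slides.

```python
import math
import random

def expected_m_eff(D, n, sigma, tau):
    """Closed form E[m_eff | tau, sigma] = D * a / (1 + a), with a = tau * sqrt(n) / sigma."""
    a = tau * math.sqrt(n) / sigma
    return D * a / (1.0 + a)

def sample_m_eff(D, n, sigma, tau, rng):
    """One prior draw of m_eff = sum_j (1 - kappa_j) with lambda_j ~ C+(0, 1)."""
    total = 0.0
    for _ in range(D):
        lam = math.tan(math.pi * rng.random() / 2.0)  # half-Cauchy(0, 1) draw
        kappa = 1.0 / (1.0 + n * tau**2 * lam**2 / sigma**2)
        total += 1.0 - kappa
    return total

rng = random.Random(0)
D, n, sigma, tau = 100, 50, 1.0, 0.02
draws = [sample_m_eff(D, n, sigma, tau, rng) for _ in range(5_000)]
print(f"Monte Carlo: {sum(draws) / len(draws):.2f}, "
      f"closed form: {expected_m_eff(D, n, sigma, tau):.2f}")
```

Because E[m_eff] depends on τ, σ and n jointly, the global scale τ effectively encodes a prior belief about how many coefficients are non-zero.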

Hierarchical shrinkage

Piironen, Juho, and Aki Vehtari. "Sparsity information and regularization in the horseshoe and other shrinkage priors." Electronic Journal of Statistics 11.2 (2017): 5018-5051.

Prior constraint on the number of non-zero coefficients →

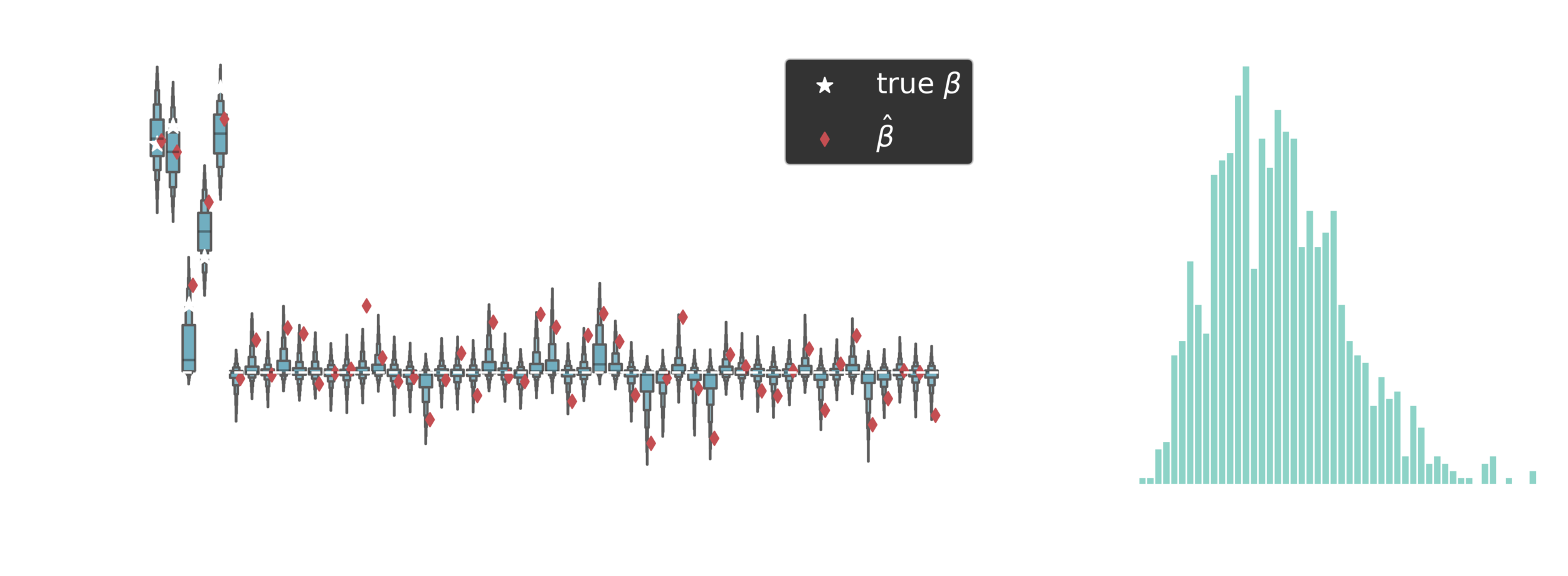

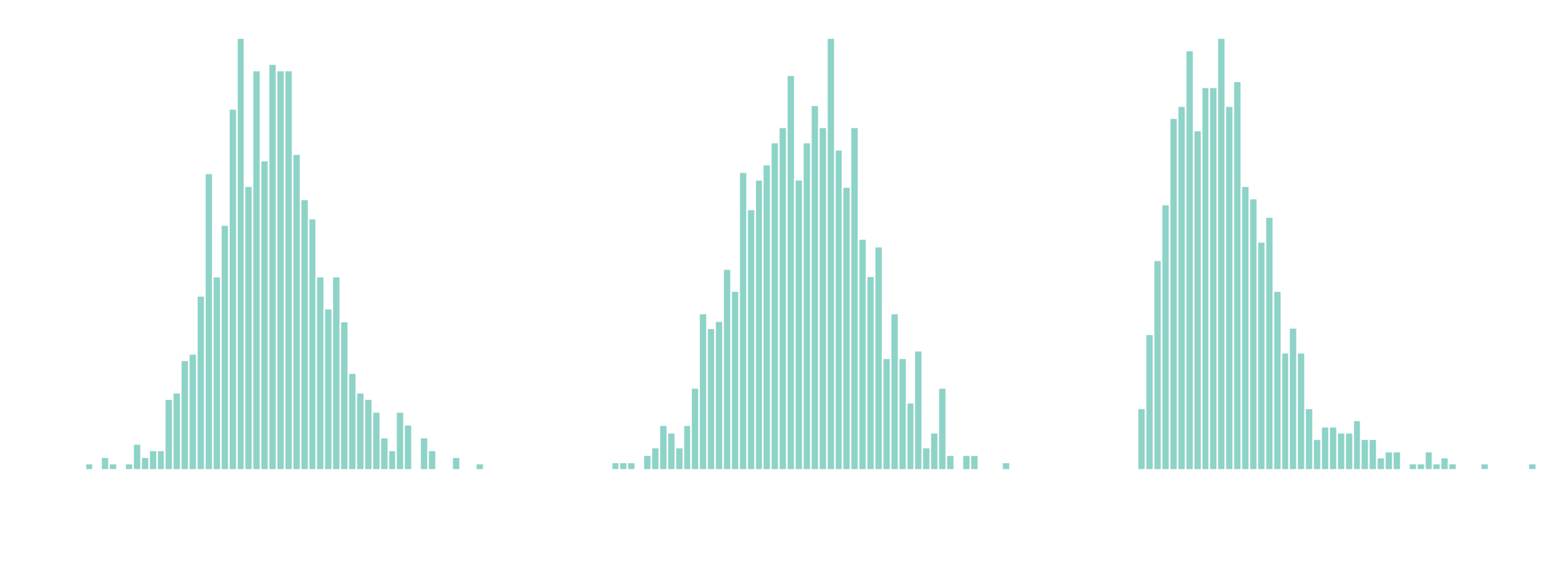
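The cited paper turns a prior guess p_0 for the number of non-zero coefficients into a global scale τ_0 = p_0/(D − p_0) · σ/√n, which makes the horseshoe's prior expectation of m_eff = Σ_j (1 − κ_j) equal to p_0. The same paper proposes the regularized horseshoe, which replaces λ_j by λ̃_j with λ̃_j² = c²λ_j²/(c² + τ²λ_j²), so that even large coefficients are regularized by a slab of width c. Whether the slides use exactly this parameterization is an assumption; function names and numbers below are hypothetical.

```python
import math

def tau0_from_p0(p0, D, n, sigma):
    """Global scale implied by a prior guess p0 of the number of non-zero coefficients."""
    return p0 / (D - p0) * sigma / math.sqrt(n)

def expected_m_eff(D, n, sigma, tau):
    """Horseshoe prior expectation of m_eff given tau and sigma (s_j = 1)."""
    a = tau * math.sqrt(n) / sigma
    return D * a / (1.0 + a)

def regularized_scale(lam, tau, c):
    """Prior sd of beta_j under the regularized horseshoe: tau * lambda_tilde_j."""
    lam_tilde = math.sqrt(c**2 * lam**2 / (c**2 + tau**2 * lam**2))
    return tau * lam_tilde

D, n, sigma, p0 = 200, 100, 1.0, 10
tau0 = tau0_from_p0(p0, D, n, sigma)
print(f"tau0 = {tau0:.5f}, implied E[m_eff] = {expected_m_eff(D, n, sigma, tau0):.2f}")

# Small lambda: behaves like the horseshoe (tau * lambda); large lambda: capped near c
for lam in (0.1, 10.0, 1e6):
    print(lam, regularized_scale(lam, tau0, c=2.0), tau0 * lam)
```

For small λ_j the regularized scale matches the plain horseshoe, while for very large λ_j it saturates at c instead of growing without bound.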

Posterior estimates

QR decomposition

Simulated data

Posterior estimates
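A common way to use the QR decomposition in Bayesian regression (described, for example, in the Stan User's Guide) is to write X = QR, fit the model on the orthonormal columns of Q with coefficients θ = Rβ, and map back with β = R⁻¹θ. Working with orthogonal columns tends to reduce the posterior correlations induced by correlated regressors. The sketch below uses modified Gram-Schmidt in pure Python and recovers β from noise-free data by least squares; it omits the √(n − 1) rescaling often applied to Q and R, and all names and data are illustrative.

```python
import math

def qr_gram_schmidt(X):
    """Thin QR of an n x d matrix (list of rows) via modified Gram-Schmidt."""
    n, d = len(X), len(X[0])
    cols = [[X[i][j] for i in range(n)] for j in range(d)]  # work column-wise
    Q = []  # orthonormal columns q_1, ..., q_d
    R = [[0.0] * d for _ in range(d)]
    for j in range(d):
        v = cols[j][:]
        for k, q in enumerate(Q):
            # Project the current residual onto q_k (modified Gram-Schmidt)
            R[k][j] = sum(qi * vi for qi, vi in zip(q, v))
            v = [vi - R[k][j] * qi for vi, qi in zip(v, q)]
        R[j][j] = math.sqrt(sum(vi * vi for vi in v))
        Q.append([vi / R[j][j] for vi in v])
    return Q, R

def back_substitute(R, b):
    """Solve the upper-triangular system R beta = b."""
    d = len(b)
    beta = [0.0] * d
    for i in range(d - 1, -1, -1):
        beta[i] = (b[i] - sum(R[i][k] * beta[k] for k in range(i + 1, d))) / R[i][i]
    return beta

# An intercept column plus two strongly correlated regressors (illustrative data)
ts = [i / 10 for i in range(20)]
X = [[1.0, t, t + 0.01 * (-1) ** i] for i, t in enumerate(ts)]
beta_true = [0.5, 2.0, -1.0]
y = [sum(b * x for b, x in zip(beta_true, row)) for row in X]

Q, R = qr_gram_schmidt(X)
theta = [sum(qi * yi for qi, yi in zip(q, y)) for q in Q]  # least squares in Q-space
print([round(b, 6) for b in back_substitute(R, theta)])
```

Because Q has orthonormal columns, θ = Qᵀy is the least-squares estimate in the rotated space, and a single triangular solve maps it back to β.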

Pairwise Interactions

Agrawal, Raj, et al. "The kernel interaction trick: Fast bayesian discovery of pairwise interactions in high dimensions." International Conference on Machine Learning. PMLR, 2019.

linear regression?
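With pairwise interactions the model becomes y_i = Σ_j β_j x_ij + Σ_{j<k} θ_jk x_ij x_ik + ε_i. It is still linear in the parameters (β, θ), so it can be fit as an ordinary linear regression on an expanded design matrix, but the number of coefficients grows as D + D(D − 1)/2. A sketch of the expansion; the helper names are hypothetical.

```python
from itertools import combinations

def expand_pairwise(row):
    """Append all products x_j * x_k (j < k) to a row of D regressors."""
    return list(row) + [row[j] * row[k] for j, k in combinations(range(len(row)), 2)]

def n_coefficients(D):
    """Number of main effects plus pairwise interaction coefficients."""
    return D + D * (D - 1) // 2

print(expand_pairwise([1.0, 2.0, 3.0]))  # [1.0, 2.0, 3.0, 2.0, 3.0, 6.0]
for D in (10, 100, 1000):
    print(D, n_coefficients(D))
```

For D = 1000 regressors this already gives 500500 coefficients, which is what makes the naive approach expensive in high dimensions.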

Structural prior

Agrawal, Raj, et al. "The kernel interaction trick: Fast bayesian discovery of pairwise interactions in high dimensions." International Conference on Machine Learning. PMLR, 2019.
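One way to write a structural prior of this kind, assumed here for illustration and modelled on the hierarchy used by Agrawal et al. (their exact parameterization may differ), is to tie the scale of each interaction θ_jk to the local scales of the two main effects it involves, so that θ_jk can only be large when both β_j and β_k are:

```latex
\begin{aligned}
\beta_j \mid \lambda_j &\sim \mathcal{N}\!\left(0,\ \eta_1^2\lambda_j^2\right), & j &= 1,\dots,D\\
\theta_{jk} \mid \lambda_j, \lambda_k &\sim \mathcal{N}\!\left(0,\ \eta_2^2\lambda_j^2\lambda_k^2\right), & j &< k
\end{aligned}
```

Here λ_j are local scales (for example with horseshoe-type priors) and η_1, η_2 are global scales for main effects and interactions.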

The kernel interaction trick

Agrawal et al. demonstrate how the linear regression problem with pairwise interactions can be expressed as a Gaussian process model with a specific kernel structure.

Gaussian process models scale better with the number of regressors D, but exact inference costs O(N³) in the number of data points N, so further approximations are needed for large N.
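A sketch of the idea, derived here for a simplified, hypothetical prior rather than taken from Agrawal et al.: assume fixed scales with β_j ~ N(0, η_1²λ_j²) and θ_jk ~ N(0, η_2²λ_j²λ_k²), all independent. Then f(x) = Σ_j β_j x_j + Σ_{j<k} θ_jk x_j x_k is a zero-mean Gaussian process whose covariance can be evaluated in O(D) instead of O(D²): with x̃_j = λ_j x_j, k(x, x') = η_1² x̃·x̃' + (η_2²/2)[(x̃·x̃')² − Σ_j x̃_j² x̃'_j²]. The code checks this identity against the brute-force sum over pairs.

```python
from itertools import combinations

def kernel_fast(x, xp, lam, eta1, eta2):
    """O(D) covariance of f(x), f(x') under the assumed linear + pairwise prior."""
    xt = [l * v for l, v in zip(lam, x)]
    xpt = [l * v for l, v in zip(lam, xp)]
    dot = sum(a * b for a, b in zip(xt, xpt))
    sq = sum((a * b) ** 2 for a, b in zip(xt, xpt))
    # sum_{j<k} u_j u_k = ((sum u)^2 - sum u^2) / 2 with u_j = xt_j * xpt_j
    return eta1**2 * dot + 0.5 * eta2**2 * (dot**2 - sq)

def kernel_brute(x, xp, lam, eta1, eta2):
    """O(D^2) reference: add up the prior variance of every feature product."""
    main = sum(eta1**2 * l**2 * a * b for l, a, b in zip(lam, x, xp))
    pairs = sum(eta2**2 * lam[j]**2 * lam[k]**2 * x[j] * x[k] * xp[j] * xp[k]
                for j, k in combinations(range(len(x)), 2))
    return main + pairs

x, xp = [0.5, -1.0, 2.0, 0.3], [1.5, 0.2, -0.7, 1.0]
lam, eta1, eta2 = [1.0, 0.1, 2.0, 0.5], 1.0, 0.5
print(kernel_fast(x, xp, lam, eta1, eta2), kernel_brute(x, xp, lam, eta1, eta2))
```

Inference then works with the N × N kernel matrix instead of the D + D(D − 1)/2 coefficients, which is why the computational bottleneck moves from D to N.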

It is unclear to me whether the approach can be applied to nonlinear GLMs, such as logistic or Poisson regression.

Discussion

Hierarchical shrinkage priors are essential for separating signal from noise in the presence of correlated regressors.

Structural shrinkage priors help separate linear and pairwise contributions to the "responses".

https://github.com/dimarkov/pybefit

Generalises to logistic regression, Poisson regression, etc.
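Moving from linear to logistic or Poisson regression changes only the observation model: the linear predictor η_i = Σ_j x_ij β_j and the shrinkage prior on β stay the same, while the likelihood and link function change. A minimal sketch of the three per-observation log-likelihoods; the function name is hypothetical and this is not the pybefit API.

```python
import math

def log_likelihood(y, eta, family, sigma=1.0):
    """Log-likelihood of one observation y given the linear predictor eta."""
    if family == "gaussian":  # identity link
        return -0.5 * math.log(2 * math.pi * sigma**2) - (y - eta) ** 2 / (2 * sigma**2)
    if family == "bernoulli":  # logit link, y in {0, 1}
        # log p(y) = y * eta - log(1 + exp(eta)), with a numerically stable softplus
        return y * eta - (max(eta, 0.0) + math.log1p(math.exp(-abs(eta))))
    if family == "poisson":  # log link, y a non-negative integer
        return y * eta - math.exp(eta) - math.lgamma(y + 1)
    raise ValueError(f"unknown family: {family}")

for fam, y in (("gaussian", 0.3), ("bernoulli", 1), ("poisson", 2)):
    print(fam, round(log_likelihood(y, 0.5, fam), 4))
```

Swapping the likelihood while keeping the prior on β unchanged is what allows the same shrinkage machinery to carry over, although posterior inference is no longer available in closed form.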
