Generative vs discriminative probabilistic models

Discriminative classification

Given a training set \( \{\pmb{x}_n, \pmb{c}_n \}\) consisting of features \( \pmb{x}_n \) (predictors) and target vectors \( \pmb{c}_n \) solve:

Generative classification

can exploit unlabelled data in addition to labelled data.

requires potentially expensive data labeling.

better generalization performance.

Bernardo, J. M., et al. "Generative or discriminative? getting the best of both worlds." Bayesian statistics 8.3 (2007): 3-24.

Mackowiak, Radek, et al. "Generative classifiers as a basis for trustworthy image classification." Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 2021.

Wu, Ying Nian, et al. "A tale of three probabilistic families: Discriminative, descriptive, and generative models." Quarterly of Applied Mathematics 77.2 (2019): 423-465.

Ho, Nhat, et al. "Neural rendering model: Joint generation and prediction for semi-supervised learning." arXiv preprint arXiv:1811.02657 (2018).

Hsu, Anne, and Thomas Griffiths. "Effects of generative and discriminative learning on use of category variability." Proceedings of the Annual Meeting of the Cognitive Science Society. Vol. 32. No. 32. 2010.

Mixture models

Variational free energy

Exponential family

Mixture models

Iterative updating

Mixture models

A link to multinomial regression

Classification with MM

1. Given a training set \( \{\pmb{x}_n, c_n \}\) map labels \( c_n \) to one hot encoded vectors \( \pmb{e}[c_n] \)

2. Update parameters

3. Given a test set \( \{\pmb{x}^*_n \}\) predict labels as

Discriminative classification

1. Given a training set \( \{\pmb{x}_n, c_n \}\) learn model parameters using (approximate) inference

2. Given a test set \( \{\pmb{x}^*_n \}\) predict labels as

Wojnowicz, Michael T., et al. "Easy Variational Inference for Categorical Models via an Independent Binary Approximation." International Conference on Machine Learning. PMLR, 2022.

Semi-supervised learning

1. Given fully labeled \( \{\pmb{x}_n, c_n \}\) and unlabeled \(\{x_l^*\}\) datasets learn model parameters

2. Predict labels for the unlabeled dataset as

Gaussian mixture model

Normal-Inverse-Wishart prior

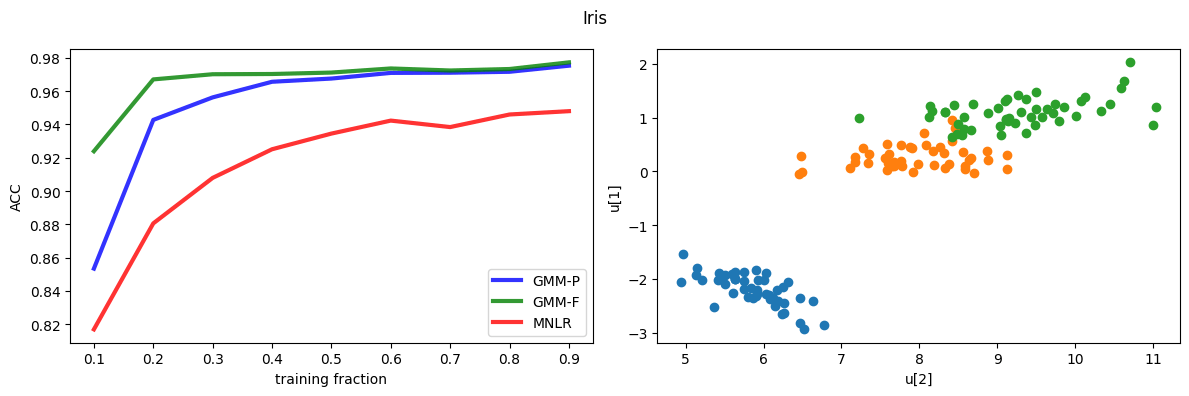

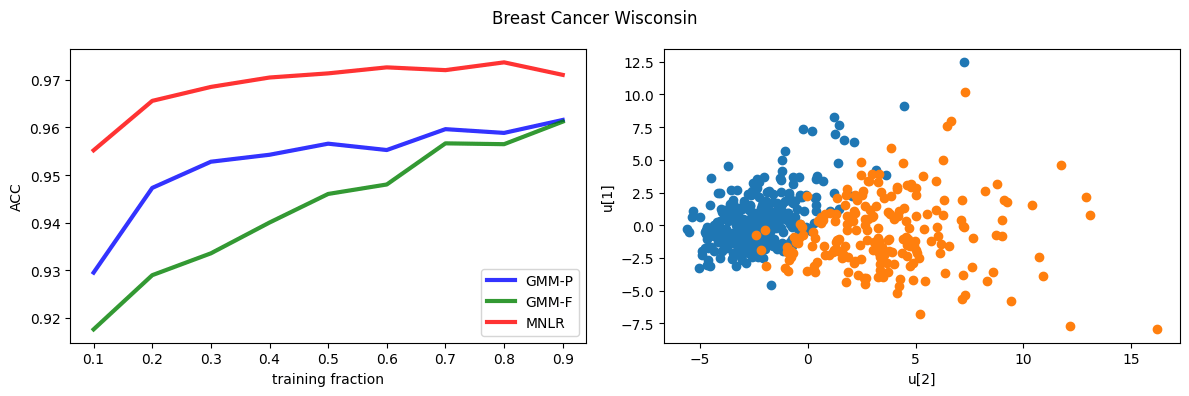

Comparison

Comparison

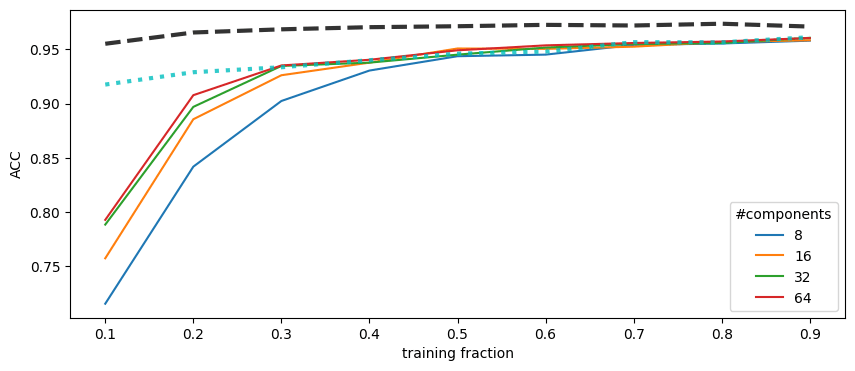

Mixture of mixtures

Prediction

parameters become a function of datapoints

Example

can we improve GM approach with sparse structural priors?

NNs as hierarchical MMs

Generative model

rsLDS

Linderman, Scott, et al. "Bayesian learning and inference in recurrent switching linear dynamical systems." Artificial intelligence and statistics. PMLR, 2017.

Generative vs discriminative probabilistic models

By dimarkov

Generative vs discriminative probabilistic models

- 172