Aim 3: Posterior Sampling and Uncertainty

October 18, 2022

Score SDE (refresher)

0. Ito process

1. [Anderson, 82] Reverse-time SDE

\(\implies\)

2. [Song, 21] SDE Score Network

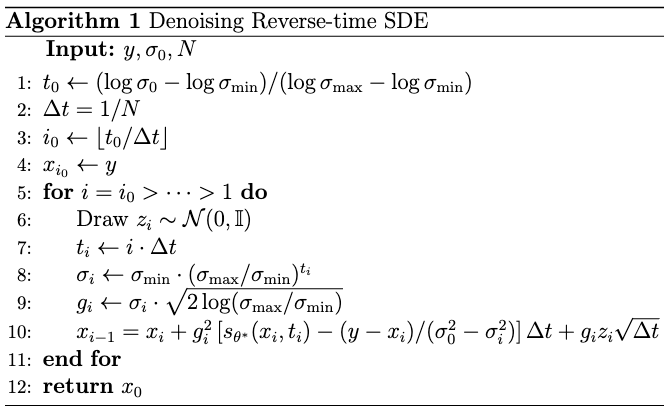

Denoising Reverse-time SDE (no drift)

Forward-time SDE

We use

\(x(0)\)

\(\sigma_{\min} = 0.01\)

\(x(T=1)\)

\(\sigma_{\max} = 1\)

Denoising reverse-time SDE (Euler)

We have

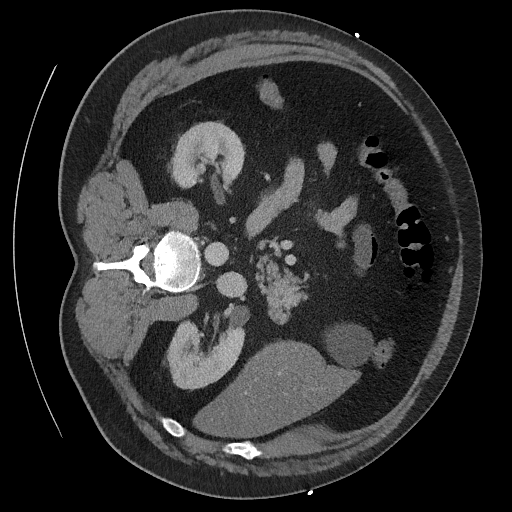

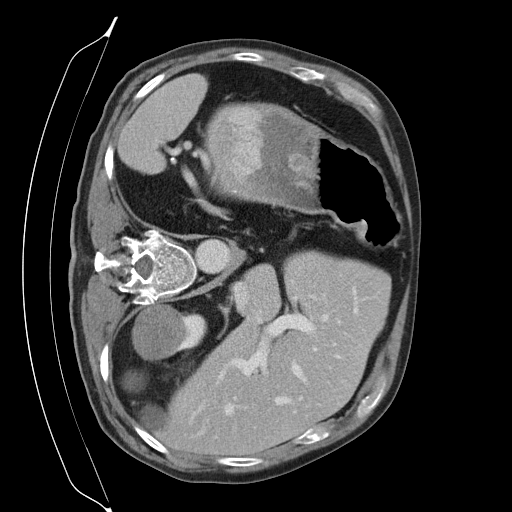

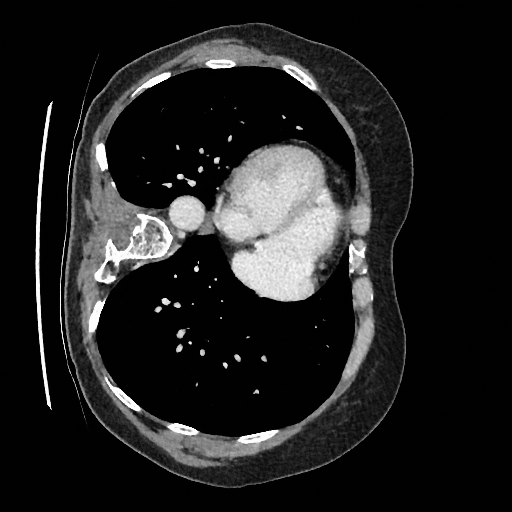

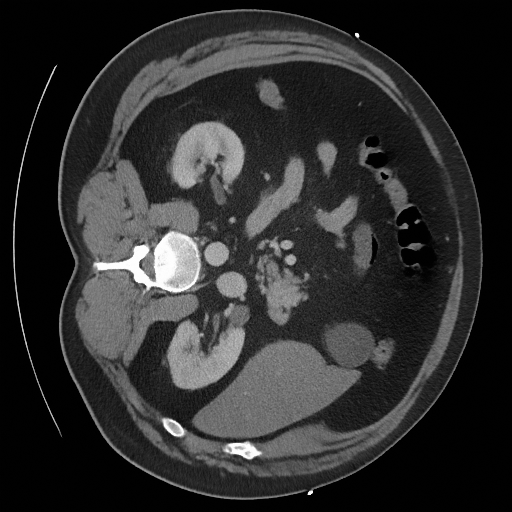

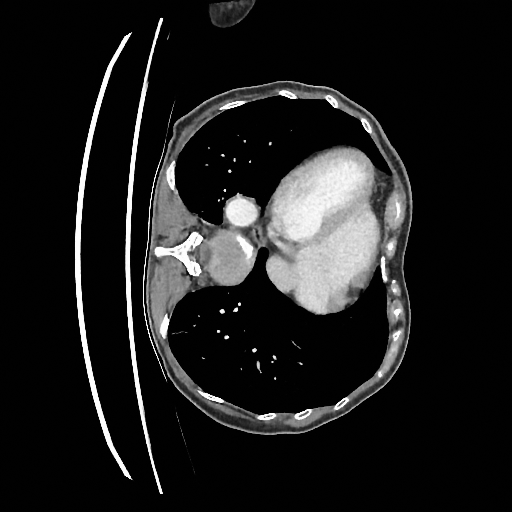

Training a continuous-time score network on Abdomen CT

Hardware: 8 NVIDIA RTX A5000 (24 GB of RAM each)

Sampling results

Original

Original

Perturbed

Samples

Sampling results

Original

Original

Perturbed

Sampled

Sampling results

Some notions of uncertainty

Mean

Standard Deviation

Quantile

Some notions of uncertainty

Mean

Standard Deviation

Quantile

Next steps

How to grant these notions of uncertainty with guarantees? For example

. "How far is the true image from a new sample?''

. "How likely is it to observe an unrealistic sample?''

. "How do we know if the computed empirical distribution contains the ground truth?''

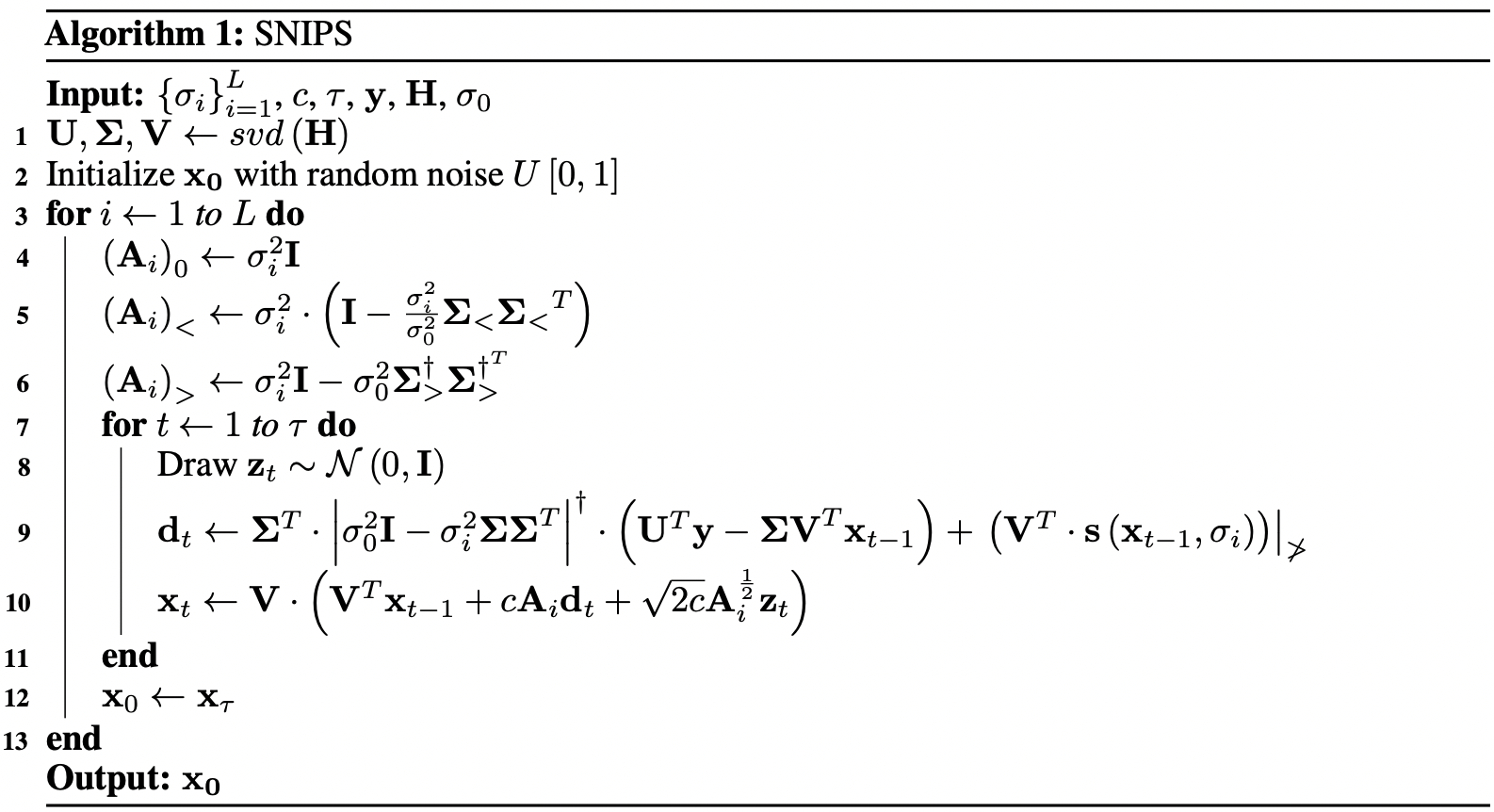

Solving general linear inverse problems:

SNIPS [Kawar, 21]

Main idea: Sample in the SVD space of \(H\)

Solving general linear inverse problems:

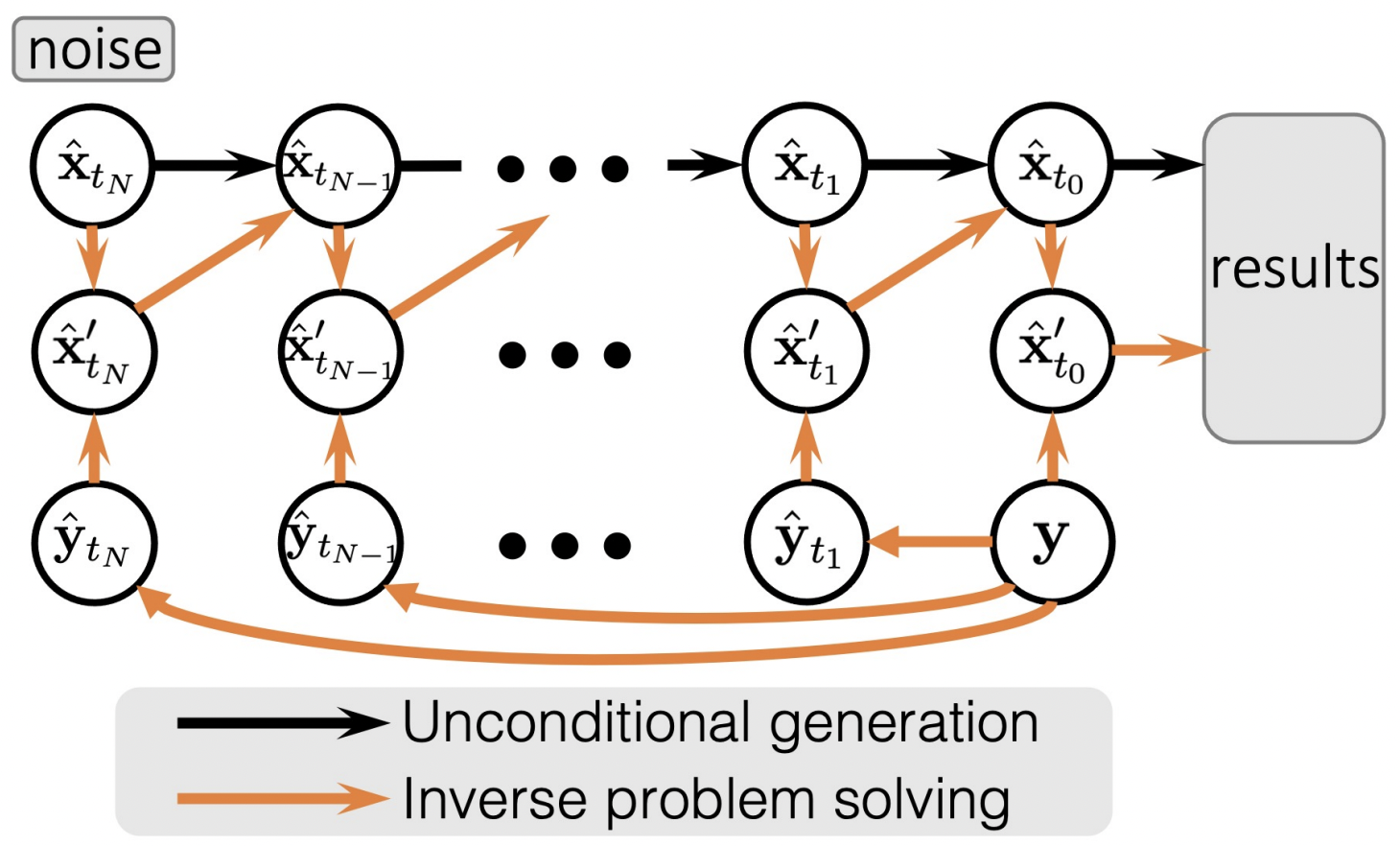

Solving Inverse Problems in Medical Imaging [Song, 22]

Main idea: Avoid the SVD decomposition by constructing \(\{\hat{y}_{t_i}\}_{i=0}^N\)

[10/18/22] Aim 3: Posterior Sampling and Uncertainty

By Jacopo Teneggi

[10/18/22] Aim 3: Posterior Sampling and Uncertainty

- 163