Learning Bayes-optimal

dendritic opinion pooling

Jakob Jordan,

João Sacramento, Willem Wybo,

Mihai A. Petrovici* & Walter Senn*

Department of Physiology, University of Bern, Switzerland

Kirchhoff-Institute for Physics, Heidelberg University, Germany

Institute of Neuroinformatics, UZH / ETH Zurich, Switzerland

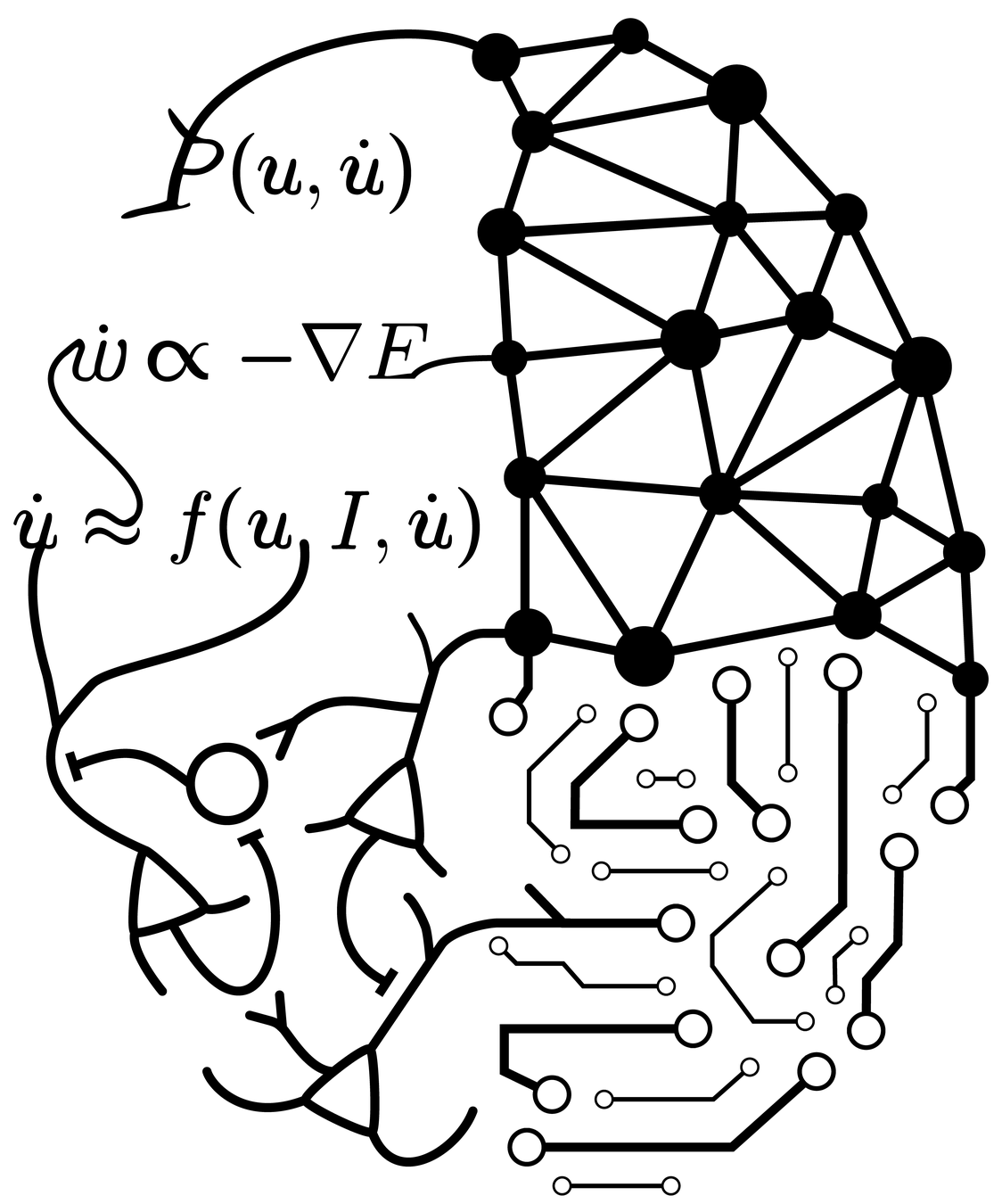

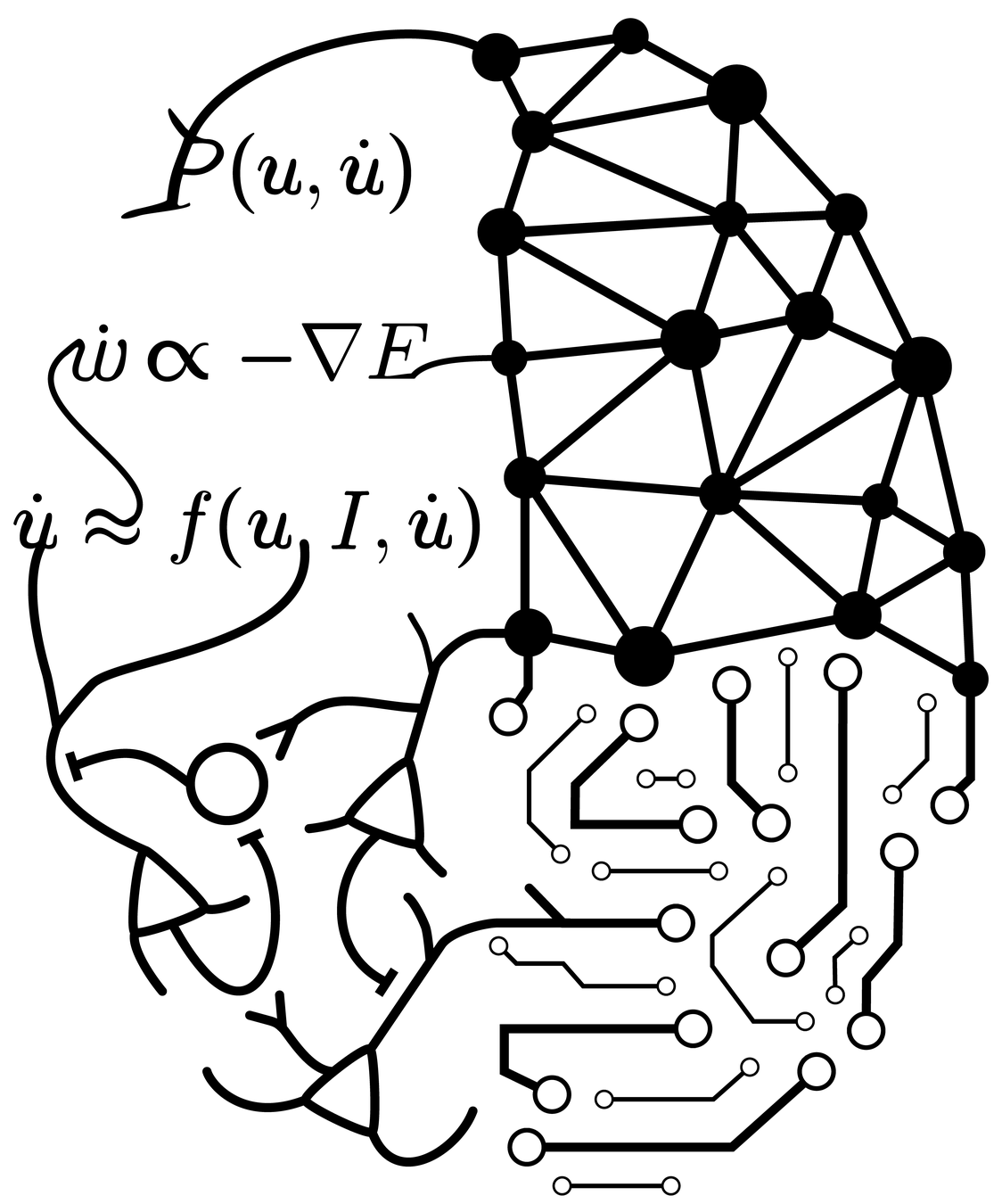

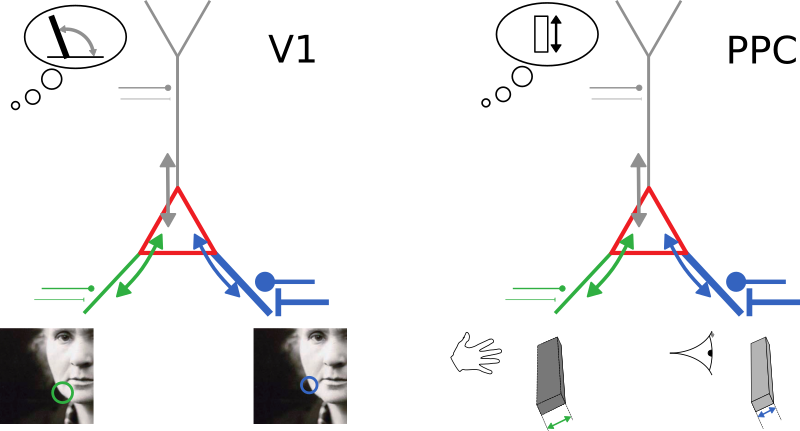

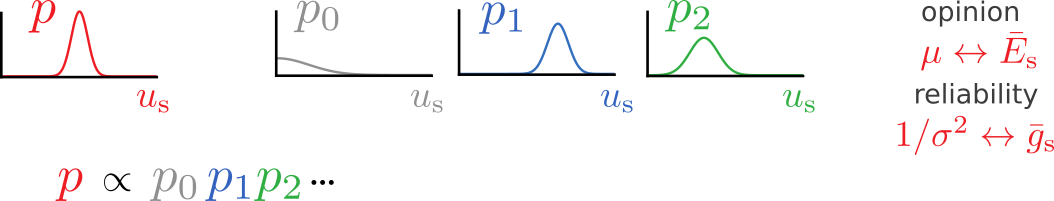

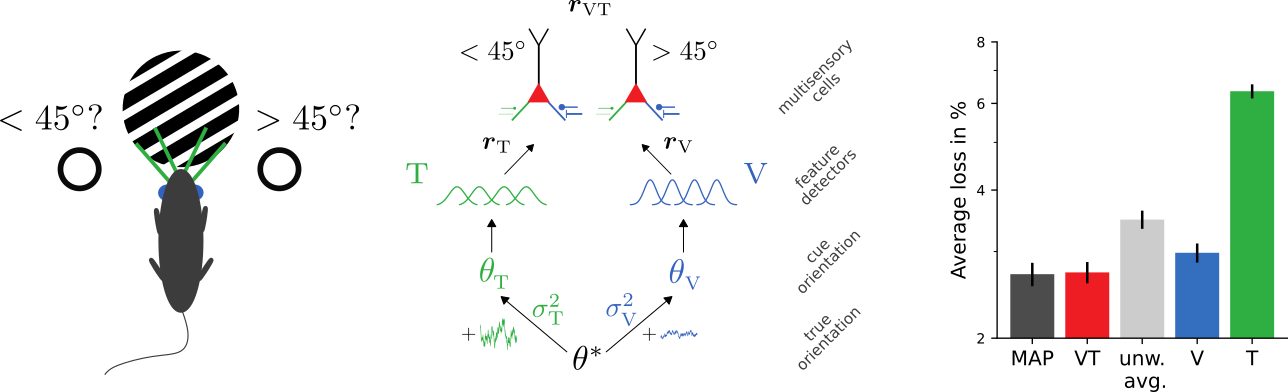

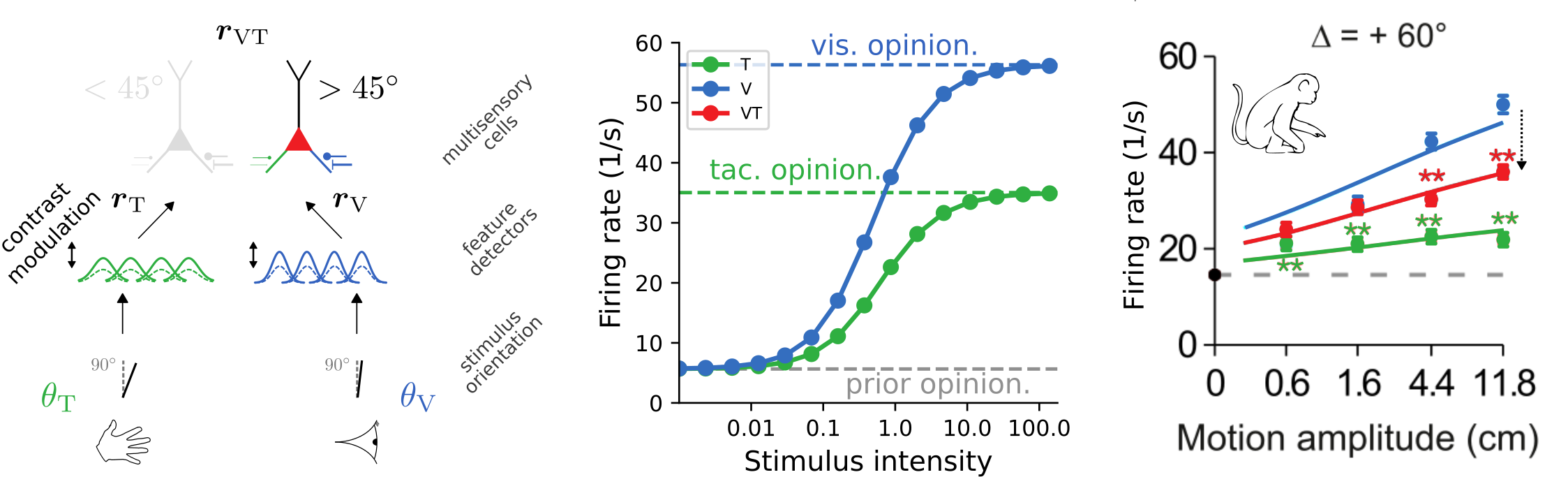

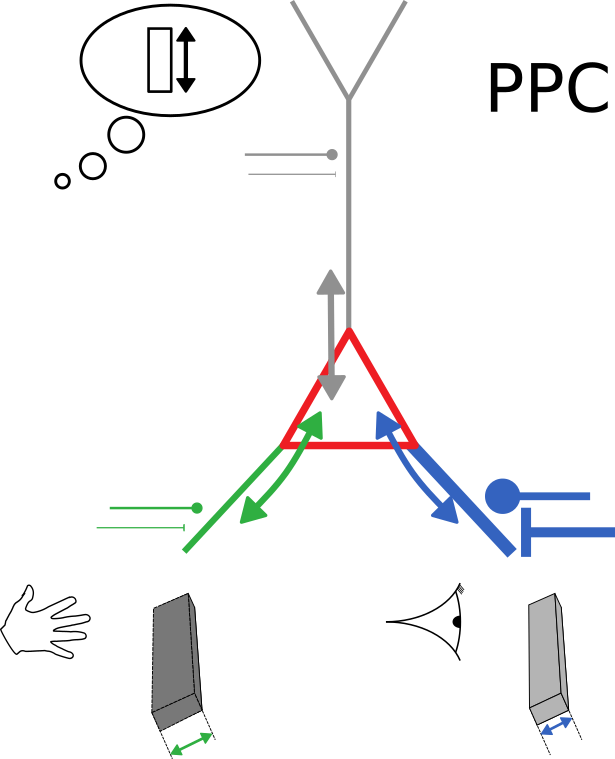

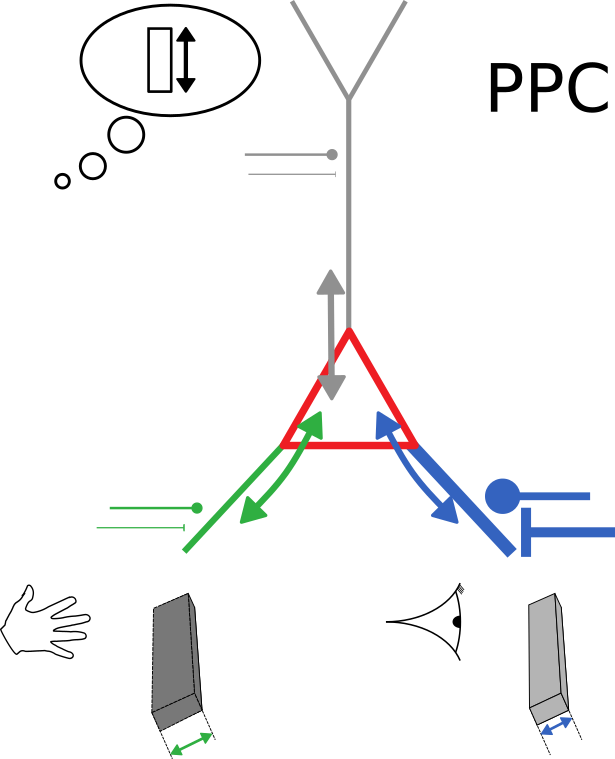

Cue integration is a fundamental computational principle of cortex

Neurons with conductance-based synapses

naturally implement probabilistic cue integration

An observation

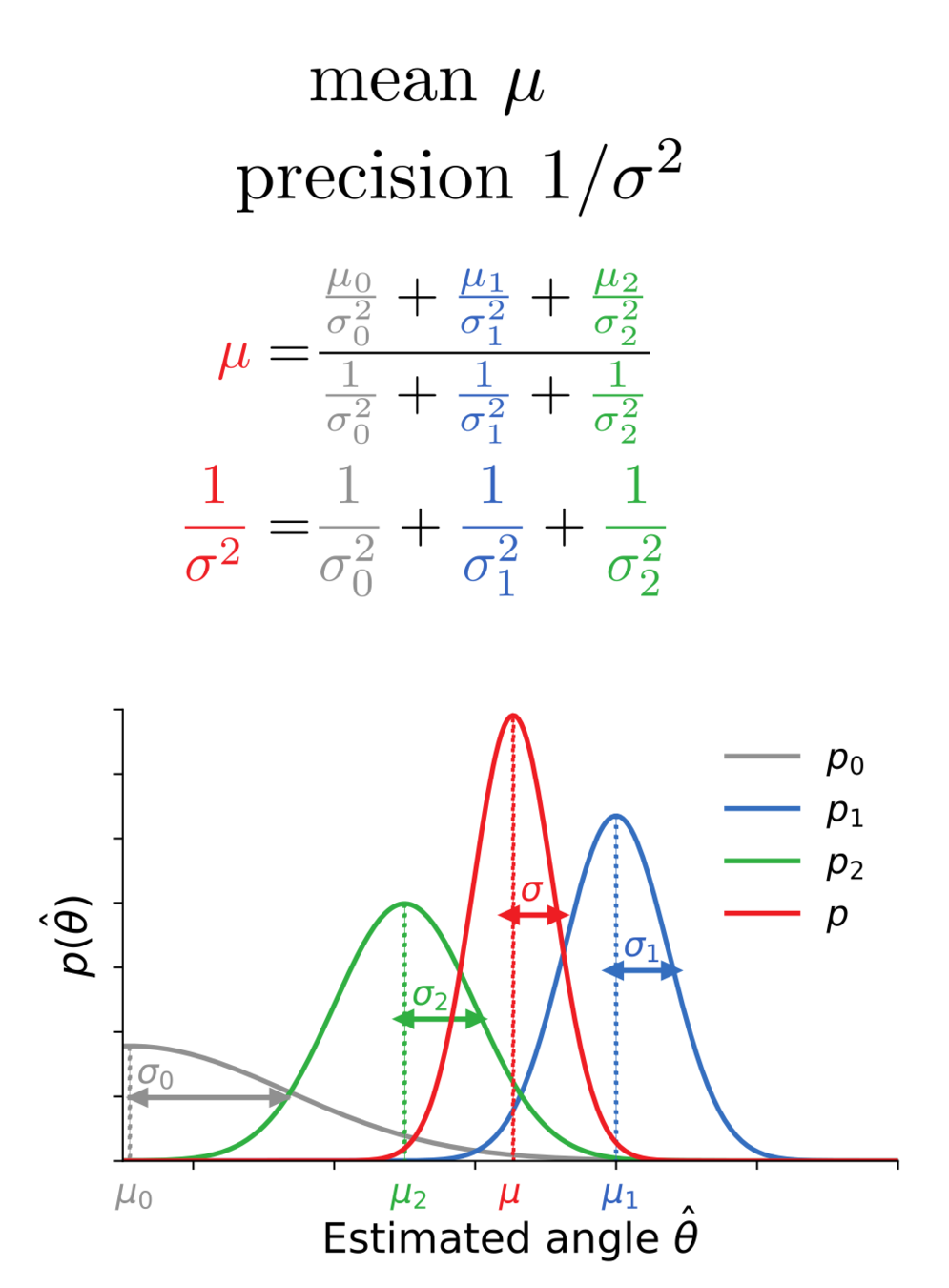

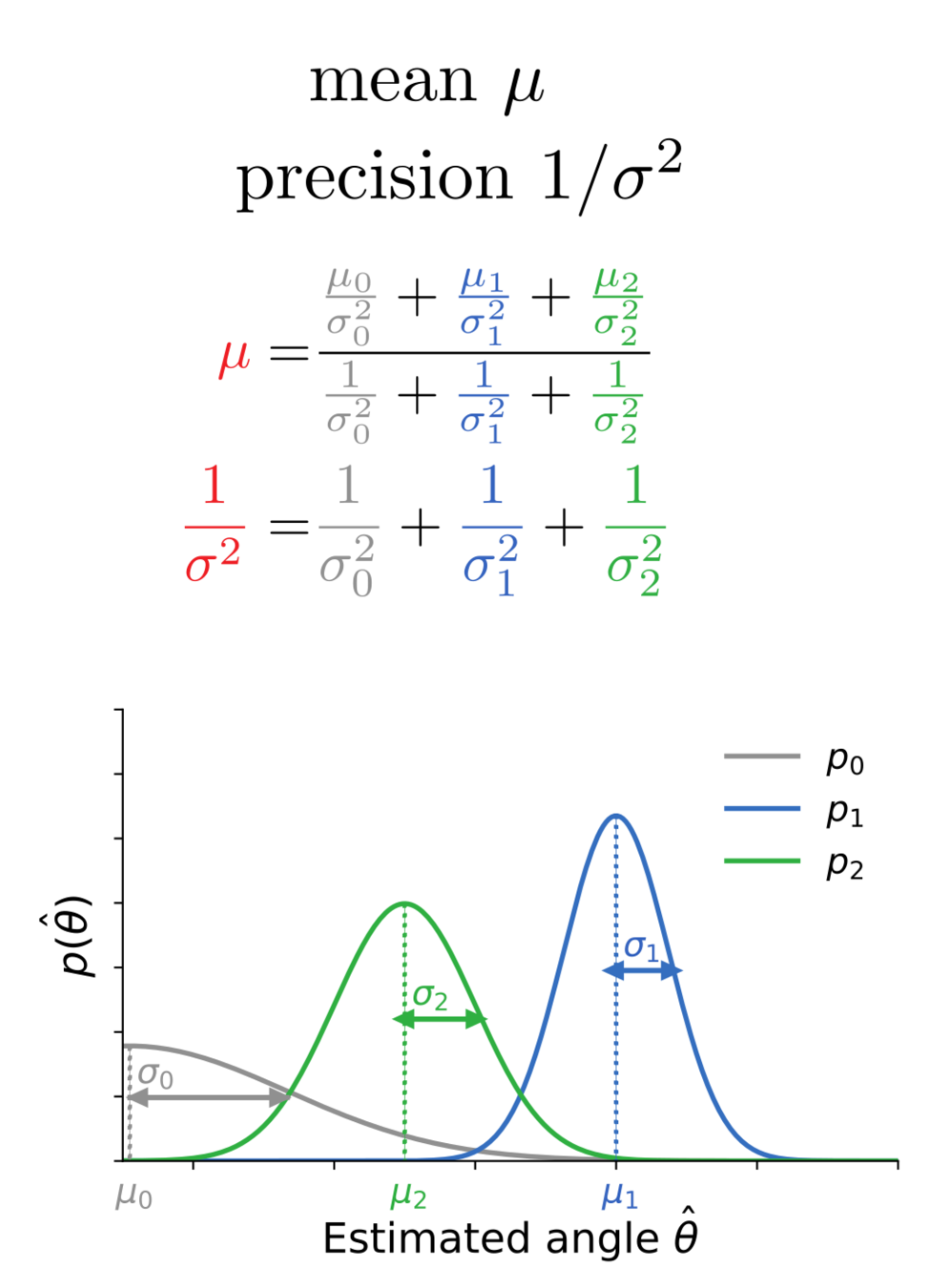

Bayes-optimal inference

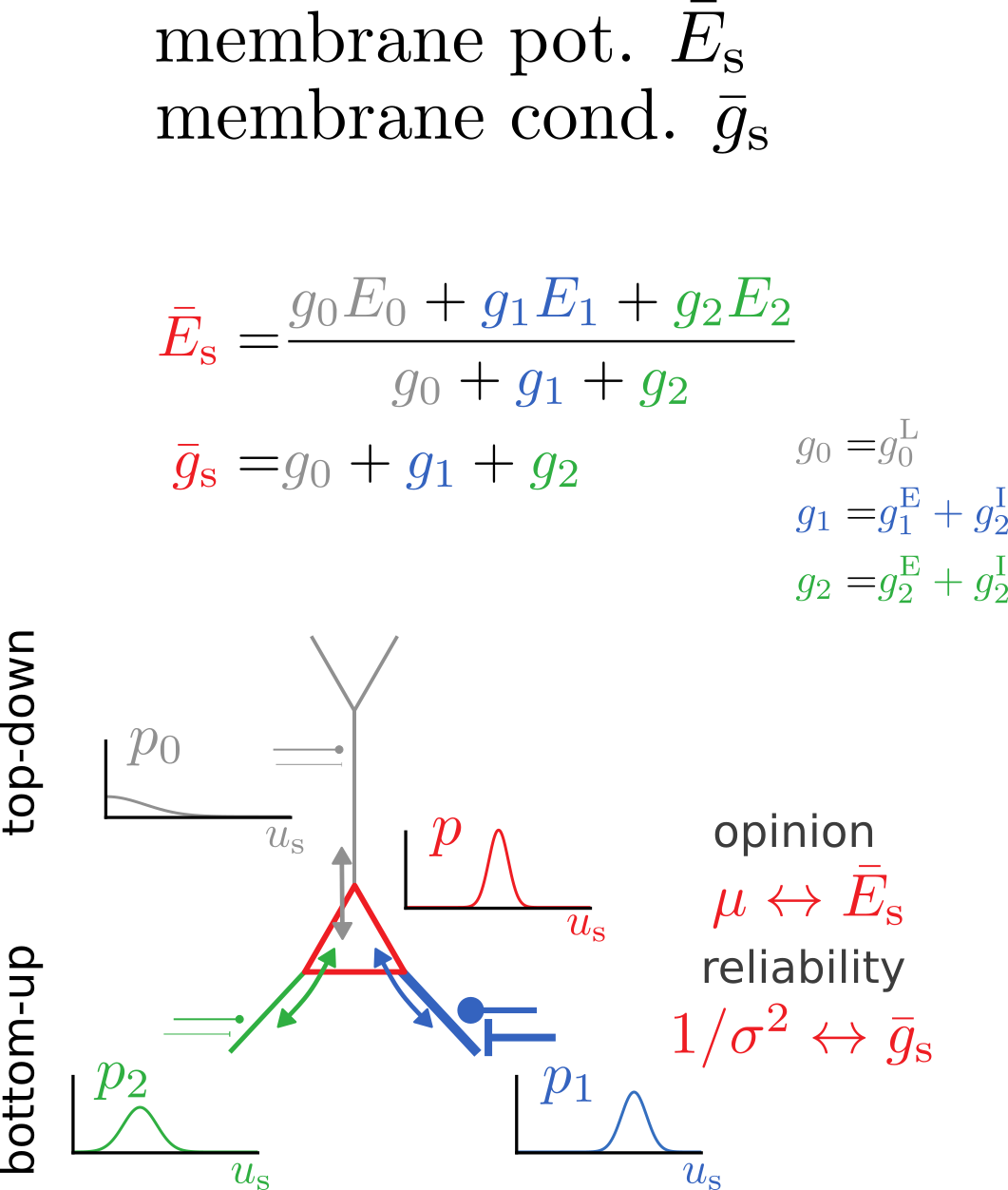

Bidirectional voltage dynamics

Membrane potential dynamics from noisy gradient ascent

Average membrane potentials

= reliability-weighted opinions

Membrane potential variance

= 1/total reliability
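A minimal sketch of the two identities above, assuming each dendritic "opinion" is a Gaussian with a mean and a reliability (inverse variance). The function name `pool_opinions` and the numbers are hypothetical, chosen for illustration; this is the textbook product-of-Gaussians rule, not necessarily the exact neuron model from the talk.

```python
import numpy as np

def pool_opinions(means, reliabilities):
    """Combine Gaussian opinions by reliability weighting.

    Returns the pooled mean (reliability-weighted average of the
    individual means) and the pooled variance (1 / total reliability).
    """
    means = np.asarray(means, dtype=float)
    reliabilities = np.asarray(reliabilities, dtype=float)
    total_reliability = reliabilities.sum()
    pooled_mean = (reliabilities * means).sum() / total_reliability
    pooled_var = 1.0 / total_reliability
    return pooled_mean, pooled_var

# Two illustrative opinions (arbitrary units); the second is three times
# more reliable, so the pooled mean lies closer to it.
mean, var = pool_opinions(means=[-60.0, -50.0], reliabilities=[1.0, 3.0])
print(mean, var)
```

The pooled variance is smaller than that of either opinion alone, which is the sense in which combining cues increases certainty.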

Synaptic plasticity from stochastic gradient ascent

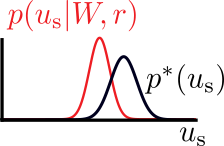

Synaptic plasticity modifies excitatory/inhibitory synapses

- in approx. opposite directions to match the mean

- in identical directions to match the variance
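The two bullets above can be illustrated with a steady-state conductance-based membrane. This is a sketch under stated assumptions: the reversal potentials, the leak conductance and the helper `steady_state` are hypothetical values and names, and the total conductance is used as a stand-in for reliability (variance = 1/total conductance).

```python
# Illustrative reversal potentials (mV) and leak conductance (arbitrary units).
E_EXC, E_INH, E_LEAK = 0.0, -80.0, -65.0
G_LEAK = 1.0

def steady_state(g_exc, g_inh):
    """Return (mean potential, total conductance) of a point neuron
    with excitatory, inhibitory and leak conductances."""
    g_total = g_exc + g_inh + G_LEAK
    u_mean = (g_exc * E_EXC + g_inh * E_INH + G_LEAK * E_LEAK) / g_total
    return u_mean, g_total

base_mean, base_total = steady_state(2.0, 2.0)

# Opposite directions: more excitation, less inhibition.
# The mean shifts upward while the total conductance is unchanged.
shift_mean, shift_total = steady_state(2.5, 1.5)

# Identical directions: more excitation and more inhibition.
# The total conductance grows, so the variance 1/g_total shrinks;
# the mean moves only slightly.
both_mean, both_total = steady_state(3.0, 3.0)

print(base_mean, base_total)
print(shift_mean, shift_total)
print(both_mean, both_total)
```

In this toy setting the identical-direction change still nudges the mean a little, which is consistent with the "approx." in the first bullet.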

\(u_\text{s}^*\): sample from target distribution \(p^*(u_\text{s})\)

target

actual

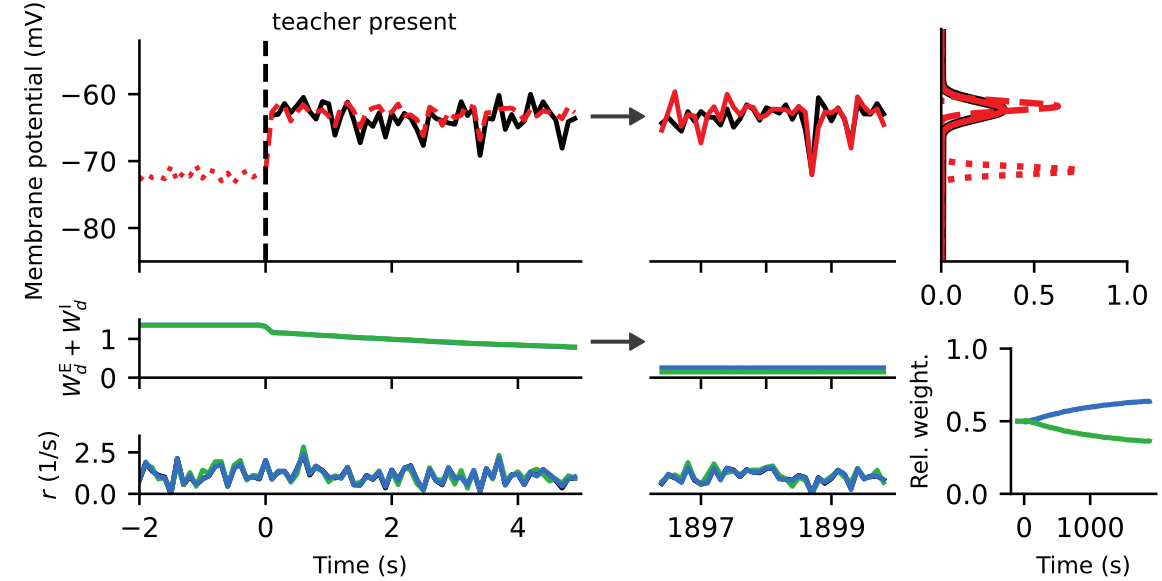

Synaptic plasticity performs

error-correction and reliability matching
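A generic sketch of what "error-correction and reliability matching" can look like: stochastic gradient ascent on the log-likelihood of a Gaussian with learnable mean and log-precision, driven by samples \(u_\text{s}^*\) from a target distribution. The parametrization, the learning rate and the function name `fit_gaussian` are assumptions made for illustration; this is not the synaptic plasticity rule from the talk.

```python
import numpy as np

def fit_gaussian(samples, eta=0.005, mu0=0.0, rho0=0.0):
    """Fit mean `mu` and log-precision `rho` of a Gaussian by
    stochastic gradient ascent on log p(u* | mu, rho)."""
    mu, rho = mu0, rho0
    for u_star in samples:
        precision = np.exp(rho)
        error = u_star - mu
        # Error correction: move the mean toward the sample,
        # scaled by the current reliability (precision).
        mu += eta * precision * error
        # Reliability matching: raise the precision if squared errors
        # are smaller than the current variance, lower it otherwise.
        rho += eta * 0.5 * (1.0 - precision * error**2)
    return mu, np.exp(-rho)  # learned mean and variance

rng = np.random.default_rng(0)
target_mean, target_std = 2.0, 0.5  # hypothetical target distribution
samples = rng.normal(target_mean, target_std, size=40_000)
mu, var = fit_gaussian(samples)
print(mu, var)
```

With a small learning rate the learned mean and variance settle near the target values and fluctuate around them, as expected for a constant-step stochastic update.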

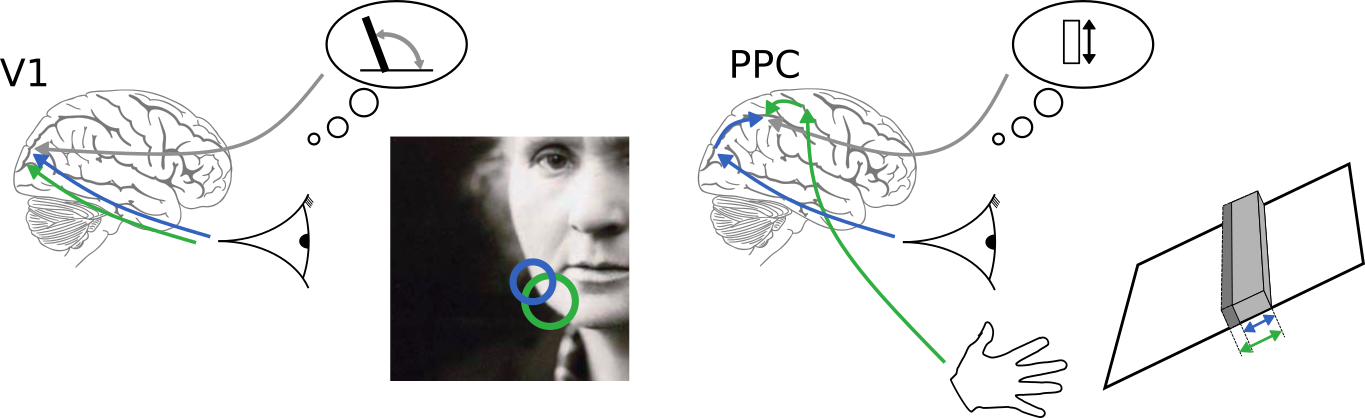

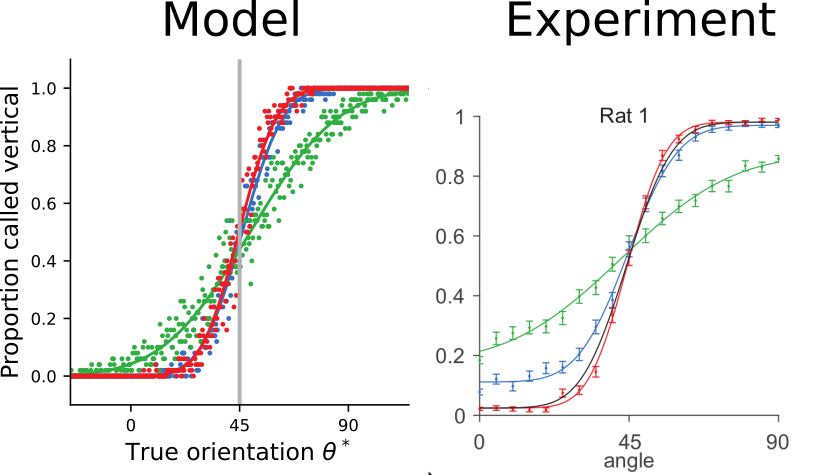

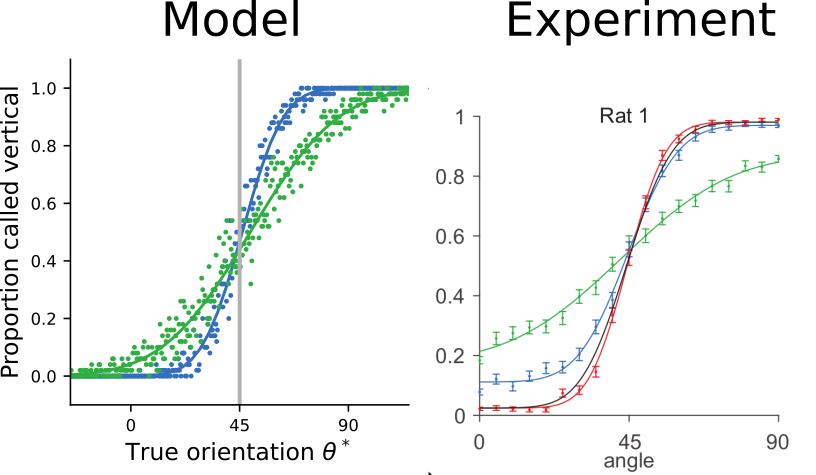

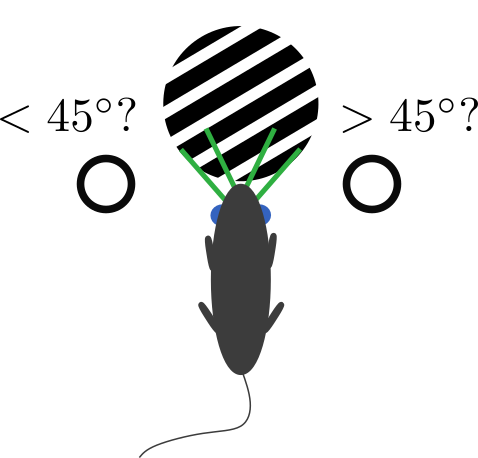

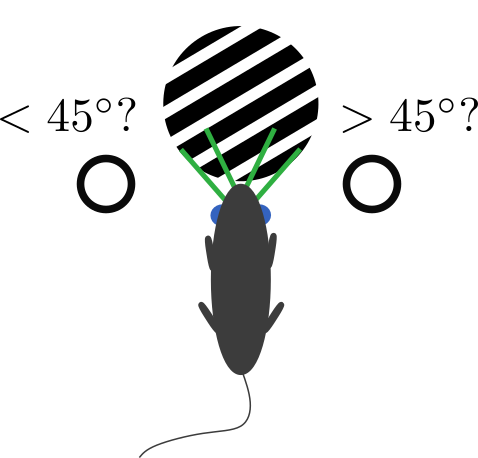

Learning Bayes-optimal inference of orientations from multimodal stimuli

The trained model approximates ideal observers

and reproduces psychophysical signatures of experimental data

[Nikbakht et al., 2018]

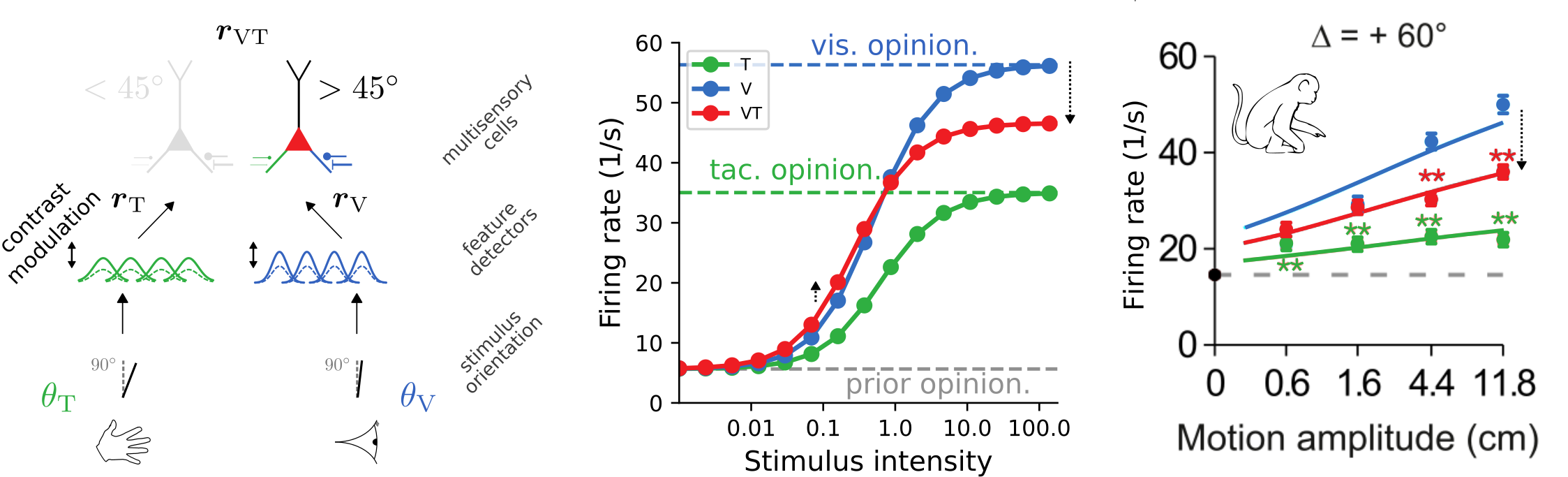

Cross-modal suppression as

reliability-weighted opinion pooling

The trained model exhibits cross-modal suppression:

- at low stimulus intensities, firing rate is larger in bimodal condition

- at high stimulus intensities, firing rate is smaller in bimodal condition

- example prediction for experiments: strength of suppression depends on relative reliabilities of the two modalities

[Ohshiro et al., 2017]
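A toy illustration of how reliability-weighted pooling alone can produce the pattern above. Assumptions, all hypothetical: a fixed leak acts as a prior, both modality conductances scale linearly with stimulus intensity `s`, the second modality holds a weaker opinion than the first, and firing rate increases monotonically with the pooled potential. The trained model from the talk is not reproduced here.

```python
E_LEAK, G_LEAK = 0.0, 1.0  # leak "prior" opinion and its reliability
E1, W1 = 10.0, 1.0         # modality 1: strong opinion, gain per unit intensity
E2, W2 = 4.0, 1.0          # modality 2: weaker opinion, same gain

def pooled_potential(s, bimodal):
    """Reliability-weighted mean potential at stimulus intensity s.

    In the unimodal condition only modality 1 is active.
    """
    g1 = W1 * s
    g2 = W2 * s if bimodal else 0.0
    return (G_LEAK * E_LEAK + g1 * E1 + g2 * E2) / (G_LEAK + g1 + g2)

for s in (0.1, 10.0):
    uni = pooled_potential(s, bimodal=False)
    bi = pooled_potential(s, bimodal=True)
    print(f"s={s}: unimodal={uni:.2f}, bimodal={bi:.2f}")
```

At low intensity the leak dominates, so any additional input above the leak potential raises the pooled potential. At high intensity the pooled potential is pulled from the first modality's opinion toward the weaker second opinion, which lowers it. Changing `W2` relative to `W1` changes the size of the effect, in line with the prediction that suppression strength depends on the relative reliabilities.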

Summary & Outlook

- Neuron models with conductance-based synapses naturally implement computations required for probabilistic cue integration

- Our plasticity rule matches the somatic potential distribution to a target distribution & weights pathways according to reliability

- A model trained on a multisensory cue integration task reproduces behavioral and neuronal experimental data

- The direct connection between normative and mechanistic descriptions allows for predictions at the systems as well as the cellular level

- Next: work out (new) detailed pre-/"post"dictions for specific experimental setups

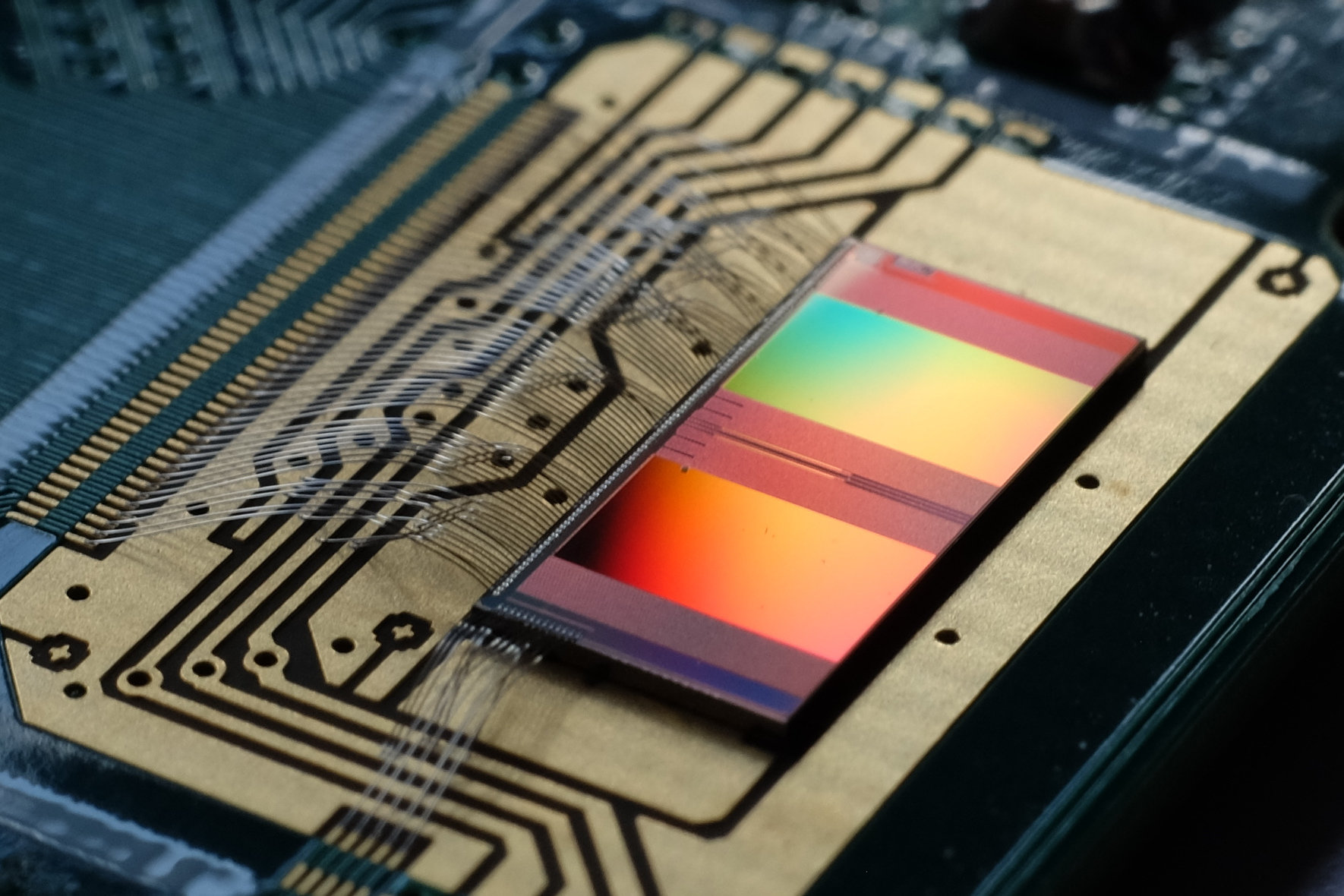

- Analog neuromorphic systems present a fitting substrate: the non-linear differential equations are tricky to integrate numerically

[blog-thebrain.org]

[Billaudelle et al., 2020]

NICE 2021

By jakobj
