Machine Learning

Nicholas Browning

Support Vector Machines (SVM)

Kernel Methods

Kernel Ridge Regression

Bayesian Probability Theory

Bayesian Networks

Naive Bayes

Perceptrons

Neural Networks

Principal Component Analysis

Dimensionality Reduction

Learning Theory

RBF Networks

Collaborative Filtering

Regression

GA-QMML: Prediction of Molecular Properties (QM), through Genetic Algorithm (GA) Optimisation and Non-Linear Kernel Ridge Regression (ML)

Topics of Seminar Series

Ridge Regression

- Machine Learning introduction
  - Types of Learning
    - Supervised, Semisupervised, Unsupervised, Reinforcement
  - ML Applications
    - Classification, Regression, Clustering, Recommender Systems, Embedding
- Theoretical Introduction: Regression
  - Bias-Variance trade-off, Overfitting, Regularization

Today

What is Machine Learning?

“A computer program is said to learn from experience E with respect to some task T and some performance measure P, if its performance on T, as measured by P, improves with experience E.” -- Tom Mitchell, Carnegie Mellon University

Supervised

Unsupervised

Semisupervised

Reinforcement Learning

Types of Machine Learning

ML Applications

From data to discrete classes

Classification

Spam Filtering

Object Detection

Weather Prediction

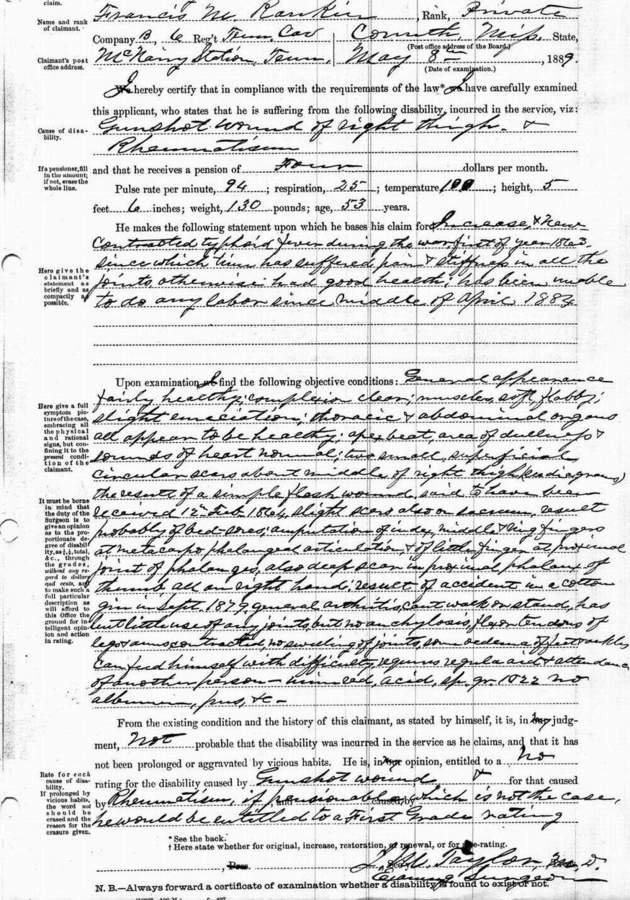

Medical Diagnosis


Predicting a numeric value

Regression

Stock Markets

Weather Prediction

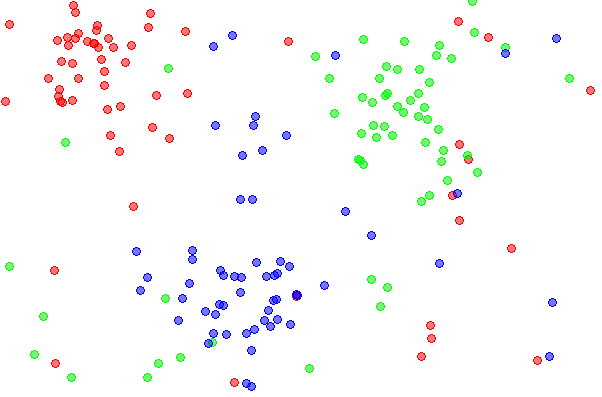

Discovering structure in data

Clustering

Natural document clusters of bio-medical research

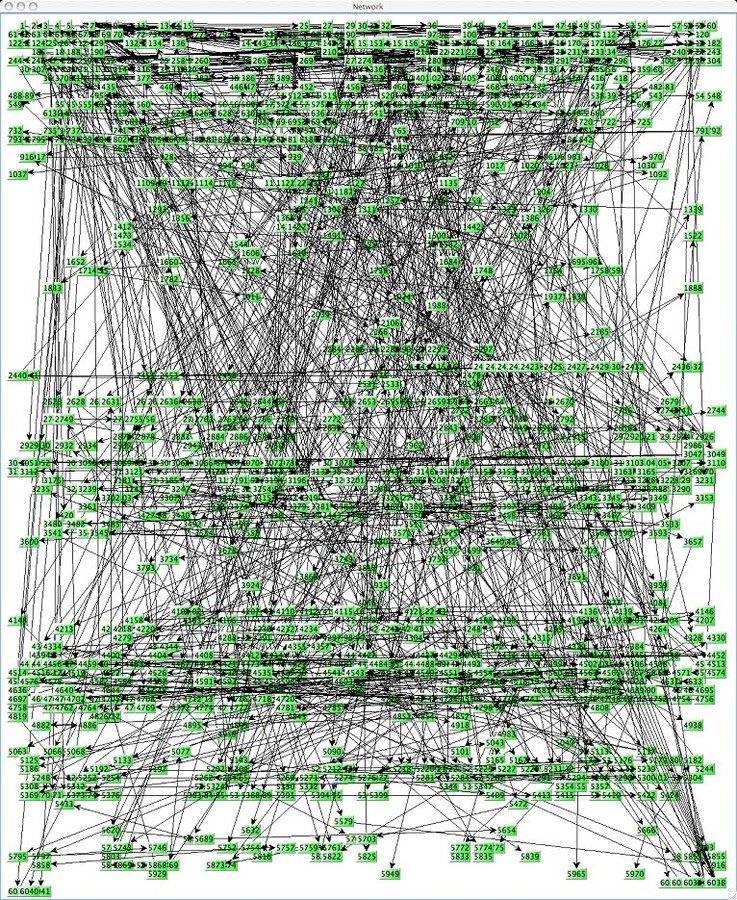

Finding what a user might want

Recommender Systems

Visualising data

Embedding

Article classification via t-Distributed Stochastic Neighbor Embedding (t-SNE)

Word classification via t-SNE

Picture classification via t-SNE

LinkedIn social graph. Blue: cloud computing, Green: big data, dark orange: co-workers, light orange: law school, purple: former employer

Machine Learning Algorithms

Decision trees

K-nearest Neighbour

Linear Regression

Naive Bayes and Bayesian Networks

"Naive" conditional independence assumption

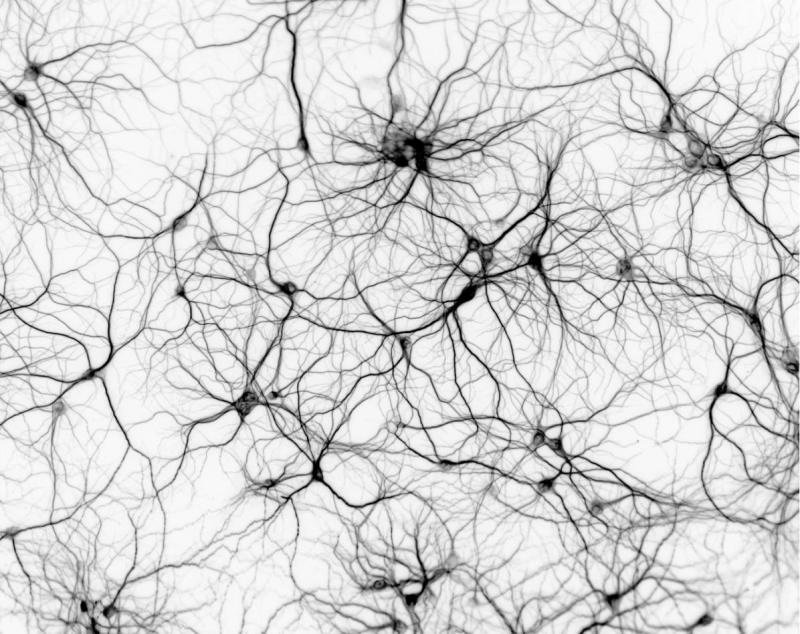
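The "naive" assumption is that the features are conditionally independent of one another given the class. As a sketch in standard notation (the symbols $y$ for the class and $x_1, \dots, x_n$ for the features are assumptions, not notation taken from the seminar), the likelihood factorises and the posterior follows from Bayes' rule:

```latex
P(x_1, \dots, x_n \mid y) = \prod_{i=1}^{n} P(x_i \mid y)
\quad\Longrightarrow\quad
P(y \mid x_1, \dots, x_n) \;\propto\; P(y) \prod_{i=1}^{n} P(x_i \mid y)
```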

Perceptrons and Neural Networks

Support Vector Machines (SVM)

Supervised Learning:

Goal

An Example

Linear Regression

- Measures how well you can expect to represent the "true" solution

- Decreases as model complexity increases

Learning Bias

- Measures how sensitive a given learner is to the specific dataset it was trained on

- Decreases as the model becomes simpler

Learning Variance

Simple models may not fit the data

Complex models may not generalise to new, as yet unseen data

The choice of hypothesis class introduces learning bias

A more complex class gives less bias but more variance

Bias-Variance Tradeoff

Error can be decomposed:
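One common way to write this decomposition, assuming squared-error loss, is sketched here. The notation is an assumption rather than the seminar's own: data are generated as $y = f(x) + \varepsilon$ with zero-mean noise of variance $\sigma^2$, and $\hat{h}_D$ is the hypothesis learned from a randomly drawn training set $D$.

```latex
\mathbb{E}_{D,\varepsilon}\!\left[\big(y - \hat{h}_D(x)\big)^2\right]
= \underbrace{\big(\mathbb{E}_D[\hat{h}_D(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}_D\!\left[\big(\hat{h}_D(x) - \mathbb{E}_D[\hat{h}_D(x)]\big)^2\right]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
```

The first term shrinks as the hypothesis class becomes richer, the second grows, and the third cannot be reduced by any choice of model.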

Choice of hypothesis class introduces learning bias

Bias-Variance Tradeoff

Overfitting

Training Set Error:

Overfitting

Prediction Error:

Why doesn't training error approximate prediction error?

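The training error is a good estimate of the prediction error for a single, fixed w. But w was optimised with respect to the training data, so it was selected precisely because it does well on this particular set of samples, and the training error is therefore optimistically biased.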
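The gap between training error and prediction error can be illustrated with a small experiment. The following is a minimal sketch under stated assumptions: the ground truth `y = 2x + 1`, the noise level, and all function names are hypothetical, and a Lagrange interpolating polynomial stands in for "a very complex model" because it passes through every training point by construction.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a*x + b (closed form, one feature)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    a = sxy / sxx
    b = mean_y - a * mean_x
    return lambda x: a * x + b

def fit_interpolant(xs, ys):
    """Lagrange polynomial of degree n-1 through every training point."""
    def predict(x):
        total = 0.0
        for i, (xi, yi) in enumerate(zip(xs, ys)):
            basis = 1.0
            for j, xj in enumerate(xs):
                if j != i:
                    basis *= (x - xj) / (xi - xj)
            total += yi * basis
        return total
    return predict

def mse(model, xs, ys):
    """Mean squared error of a model on the given points."""
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

def make_targets(xs, rng):
    # Hypothetical ground truth: y = 2x + 1 plus Gaussian noise.
    return [2.0 * x + 1.0 + rng.gauss(0.0, 0.5) for x in xs]

rng = random.Random(0)
train_x = [i / 7 for i in range(8)]          # 8 evenly spaced points in [0, 1]
test_x = [(i + 0.5) / 7 for i in range(7)]   # unseen points between them
train_y = make_targets(train_x, rng)
test_y = make_targets(test_x, rng)

line = fit_line(train_x, train_y)
poly = fit_interpolant(train_x, train_y)

for name, model in [("line", line), ("interpolant", poly)]:
    print(name,
          "train MSE:", mse(model, train_x, train_y),
          "test MSE:", mse(model, test_x, test_y))
```

The interpolant reaches zero training error because it reproduces the noise in the training targets, which tells us nothing about how it behaves on the unseen points.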

Generalisation

Test Set Error

Given dataset D, randomly split into two parts:

- Training data

- Test data

Use Training Data to optimise w

For the final output w', evaluate error once using:
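The procedure above can be sketched in a few lines. This is an illustration under assumptions, not the seminar's implementation: the helper names `train_test_split`, `fit_line` and `mean_squared_error`, the 25% test fraction, and the synthetic data are all hypothetical, and w is taken to be a (slope, intercept) pair for a single feature.

```python
import random

def train_test_split(pairs, test_fraction=0.25, seed=0):
    """Randomly split a list of (x, y) pairs into (training, test) parts."""
    shuffled = list(pairs)                    # copy, leave the input untouched
    random.Random(seed).shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]

def fit_line(pairs):
    """Least-squares fit; returns w = (slope, intercept)."""
    n = len(pairs)
    mean_x = sum(x for x, _ in pairs) / n
    mean_y = sum(y for _, y in pairs) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in pairs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in pairs)
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def mean_squared_error(w, pairs):
    """Average squared residual of the line w on the given pairs."""
    slope, intercept = w
    return sum((slope * x + intercept - y) ** 2 for x, y in pairs) / len(pairs)

# Synthetic data set D (made up for illustration): y = 3x - 1 plus noise.
rng = random.Random(1)
data = [(i / 10, 3.0 * (i / 10) - 1.0 + rng.gauss(0.0, 0.2)) for i in range(40)]

train, test = train_test_split(data)
w = fit_line(train)                           # optimise w on training data only
test_error = mean_squared_error(w, test)      # evaluated once, on held-out data
print("train error:", mean_squared_error(w, train))
print("test error: ", test_error)
```

Because the test points played no part in choosing w, the test error is a fairer estimate of the prediction error than the training error, provided it is computed only once.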

Generalisation

A learning algorithm overfits the training data if it outputs a solution w' when there exists another solution w'' such that:
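A standard way to state the two conditions is sketched below. The symbols $\mathrm{error}_{\text{train}}$ and $\mathrm{error}_{\text{true}}$ are assumed notation for the training set error and the prediction error respectively.

```latex
\mathrm{error}_{\text{train}}(w') < \mathrm{error}_{\text{train}}(w'')
\qquad\text{and}\qquad
\mathrm{error}_{\text{true}}(w') > \mathrm{error}_{\text{true}}(w'')
```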

Overfitting typically leads to very large parameter values

Regularised regression aims to impose a complexity restriction by penalising large weights

Generalisation

better on training data

worse on testing data

Ridge Regression

Lasso Regression
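In their usual textbook forms, both methods add a penalty on the weights to the least-squares objective. The notation here is an assumption and may differ from the seminar's: $x_i$ and $y_i$ are the training inputs and targets, $w$ the weight vector, and $\lambda \ge 0$ the regularisation parameter.

```latex
\hat{w}_{\text{ridge}} = \arg\min_{w} \sum_{i=1}^{N} \big(y_i - w^{\top} x_i\big)^2 + \lambda \lVert w \rVert_2^2
\qquad
\hat{w}_{\text{lasso}} = \arg\min_{w} \sum_{i=1}^{N} \big(y_i - w^{\top} x_i\big)^2 + \lambda \lVert w \rVert_1
```

Ridge penalises the squared magnitude of the weights and shrinks them smoothly; lasso penalises their absolute values and can drive some weights exactly to zero.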

Regularisation

Larger regularisation parameter: more penalty, smoother function, more bias

Smaller regularisation parameter: more flexible, more variance
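The effect of the regularisation parameter on the learned weight can be seen in the simplest possible case. This sketch assumes a single feature, no intercept, and an L2 (ridge) penalty; the name `ridge_weight`, the parameter `lam`, and the toy data are hypothetical.

```python
def ridge_weight(xs, ys, lam):
    """Closed-form ridge solution for y ~ w*x (one feature, no intercept).

    Minimising sum((y - w*x)**2) + lam * w**2 over w gives
    w = sum(x*y) / (sum(x*x) + lam).
    """
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

# Made-up toy data, roughly y = 2x.
xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.1, 3.9, 6.2, 7.8]

for lam in [0.0, 1.0, 10.0, 100.0]:
    print(f"lam = {lam:6.1f}  ->  w = {ridge_weight(xs, ys, lam):.3f}")
```

With `lam = 0` this reduces to ordinary least squares; as `lam` grows the weight is pulled towards zero, trading variance for bias.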

Regularisation

Randomly divide data into k equal parts

For each part, learn a classifier using the data not in that part

Estimate the error of that classifier on the held-out part (the validation set)

k-Fold Cross Validation
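A minimal sketch of k-fold cross validation follows, averaging the k validation errors into a single estimate. Several things here are assumptions: the slides speak of a classifier, whereas this example uses a one-feature ridge regressor as a stand-in learner, and the names `k_fold_indices`, `fit_ridge_1d`, `cross_validation_error` and the synthetic data are hypothetical.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle range(n) and deal the indices into k folds of near-equal size."""
    indices = list(range(n))
    random.Random(seed).shuffle(indices)
    return [indices[i::k] for i in range(k)]

def fit_ridge_1d(xs, ys, lam):
    """Stand-in learner: y ~ w*x with an L2 penalty lam * w**2."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def cross_validation_error(xs, ys, k, lam):
    """Average validation mean squared error over k folds."""
    fold_errors = []
    for held_out in k_fold_indices(len(xs), k):
        held = set(held_out)
        train_idx = [i for i in range(len(xs)) if i not in held]
        # Learn using only the data not in the held-out fold.
        w = fit_ridge_1d([xs[i] for i in train_idx],
                         [ys[i] for i in train_idx], lam)
        # Estimate the error on the held-out fold.
        err = sum((w * xs[i] - ys[i]) ** 2 for i in held_out) / len(held_out)
        fold_errors.append(err)
    return sum(fold_errors) / k

# Synthetic data (made up for illustration): y = 2x plus Gaussian noise.
rng = random.Random(0)
xs = [i / 10 for i in range(1, 31)]
ys = [2.0 * x + rng.gauss(0.0, 0.3) for x in xs]

for lam in [0.0, 1.0, 10.0, 100.0]:
    cv = cross_validation_error(xs, ys, 5, lam)
    print(f"lam = {lam:6.1f}  ->  5-fold CV error = {cv:.4f}")
```

Comparing the cross-validation error across candidate values is one common way to choose the regularisation parameter without touching the test set.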

ML Pipeline

END PROGRAM.


An introductory seminar to machine learning (ML), with real-world examples, a brief discussion of ML algorithms, and a worked example (linear regression) highlighting the bias-variance tradeoff, generalisation, overfitting and the necessity for regularisation.
