Testing 101

Extremely opinionated,

use at your own risk,

don't trust anyone

talk by that white dude with beautiful voice

Grant me the serenity to omit tests for things that aren’t worth testing,

Courage to write tests for things that deserve test coverage,

And wisdom to know the difference.

- Dan Abramov, sort of famous dude dunno

A small prayer before a long road

Everyone says testing is great, I just don't know how to do it

Testing is daunting 😫

What is a good test?

Where do I start?

TDD?? BDD?? Acceptance??

Exploration??

How would I test my test?

Why Do We Want Tests?

- To verify that the things we created are working (to some extent)

- To catch bugs in the code before users do

- To guard our code from unintended use or malicious users

- To document how the application works

- To be able to refactor functionality with confidence

To have a good sleep at night

What To Test?

Test everything, Yo

Parts of Application

- Recent

- Expertise

- Core

- Problematic

- Risk

I'm in Trouble BINGO

Bob developed new core functionality. Sadly, he got sick, and the code is so complex that nobody can read it

Functionality

- Start with trivial happy path

- Try to think about interesting/sensitive input/output

- Test error states

- Test logic branches

- Take it a bit further with equivalence partitioning and boundary value analysis

Pro-tip: Machines are better at generating unexpected values, see the Hypothesis package
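Equivalence partitioning and boundary value analysis from the list above can be sketched with a small example. The `shipping_fee` function and its weight bands are hypothetical, made up purely for illustration. The idea is to pick one representative value per class of inputs, then probe the values sitting right on each edge.

```python
def shipping_fee(weight_kg: float) -> int:
    """Hypothetical fee table: (0, 1] -> 5, (1, 10] -> 9, (10, 30] -> 20."""
    if weight_kg <= 0 or weight_kg > 30:
        raise ValueError("weight must be in (0, 30] kg")
    if weight_kg <= 1:
        return 5
    if weight_kg <= 10:
        return 9
    return 20


# Equivalence partitioning: one representative value per valid class.
PARTITION_CASES = [(0.5, 5), (5, 9), (20, 20)]

# Boundary value analysis: values on and just past each edge.
BOUNDARY_CASES = [(0.01, 5), (1, 5), (1.01, 9), (10, 9), (10.01, 20), (30, 20)]

# Invalid classes: below the range, on the excluded lower edge, above the range.
INVALID_WEIGHTS = [-1, 0, 30.01]


def test_shipping_fee_valid_inputs():
    for weight, expected in PARTITION_CASES + BOUNDARY_CASES:
        assert shipping_fee(weight) == expected, weight


def test_shipping_fee_rejects_invalid_weights():
    for weight in INVALID_WEIGHTS:
        try:
            shipping_fee(weight)
        except ValueError:
            continue
        raise AssertionError(f"{weight} should have been rejected")
```

Off-by-one mistakes (`<` instead of `<=`) tend to show up only in the boundary cases, which is why they are listed separately from the partition representatives.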

How many tests do I need??

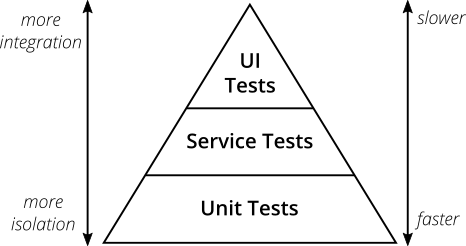

Mike Cohn Testing Pyramid

10% E2E

20% Func

70% Unit

10% End-to-End

This kind of test runs against the real application, with all its dependencies, emulating user flows.

20% Functional Tests

Also called integration tests. They test how different parts of the application work together in the context of a feature.
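A minimal sketch of such a test, assuming a hypothetical `users` table and two made-up helpers, `save_user` and `greeting_for`. It uses an in-memory SQLite database from the standard library so that the storage code and the code that reads from it are exercised together rather than in isolation.

```python
import sqlite3


def save_user(conn: sqlite3.Connection, name: str) -> int:
    """Hypothetical storage helper: insert a user and return its id."""
    cur = conn.execute("INSERT INTO users (name) VALUES (?)", (name,))
    return cur.lastrowid


def greeting_for(conn: sqlite3.Connection, user_id: int) -> str:
    """Hypothetical feature code that depends on what storage has saved."""
    row = conn.execute("SELECT name FROM users WHERE id = ?", (user_id,)).fetchone()
    return f"Hello, {row[0]}!" if row else "Hello, stranger!"


def test_saved_user_gets_personal_greeting():
    # GIVEN a real (in-memory) database with the schema applied
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT NOT NULL)")

    # WHEN a user is saved through the storage helper
    user_id = save_user(conn, "Bob")

    # THEN the greeting code finds that user, and falls back for unknown ids
    assert greeting_for(conn, user_id) == "Hello, Bob!"
    assert greeting_for(conn, user_id + 1) == "Hello, stranger!"
    conn.close()
```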

70% Unit Tests

The fastest type of test, as they should not make any database requests or write to disk. They test one function or one class in isolation.
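As a rough illustration of "in isolation", here is a sketch that replaces a collaborator with a stand-in from the standard library's `unittest.mock`. `WelcomeService` and its `mailer` dependency are hypothetical names invented for this example.

```python
from unittest.mock import Mock


class WelcomeService:
    """Hypothetical class under test; the mailer is injected so it can be replaced."""

    def __init__(self, mailer):
        self.mailer = mailer

    def welcome(self, email: str) -> str:
        subject = f"Welcome, {email.split('@')[0]}!"
        self.mailer.send(to=email, subject=subject)
        return subject


def test_welcome_sends_one_email():
    # GIVEN a service whose mailer is a stand-in that only records calls
    mailer = Mock()
    service = WelcomeService(mailer)

    # WHEN we welcome a user
    subject = service.welcome("bob@example.com")

    # THEN exactly one email is sent, with the expected subject
    assert subject == "Welcome, bob!"
    mailer.send.assert_called_once_with(to="bob@example.com", subject="Welcome, bob!")
```

No network, database, or disk is involved, which is what keeps this kind of test fast. It also says nothing about whether a real mailer would work.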

Unit Tests

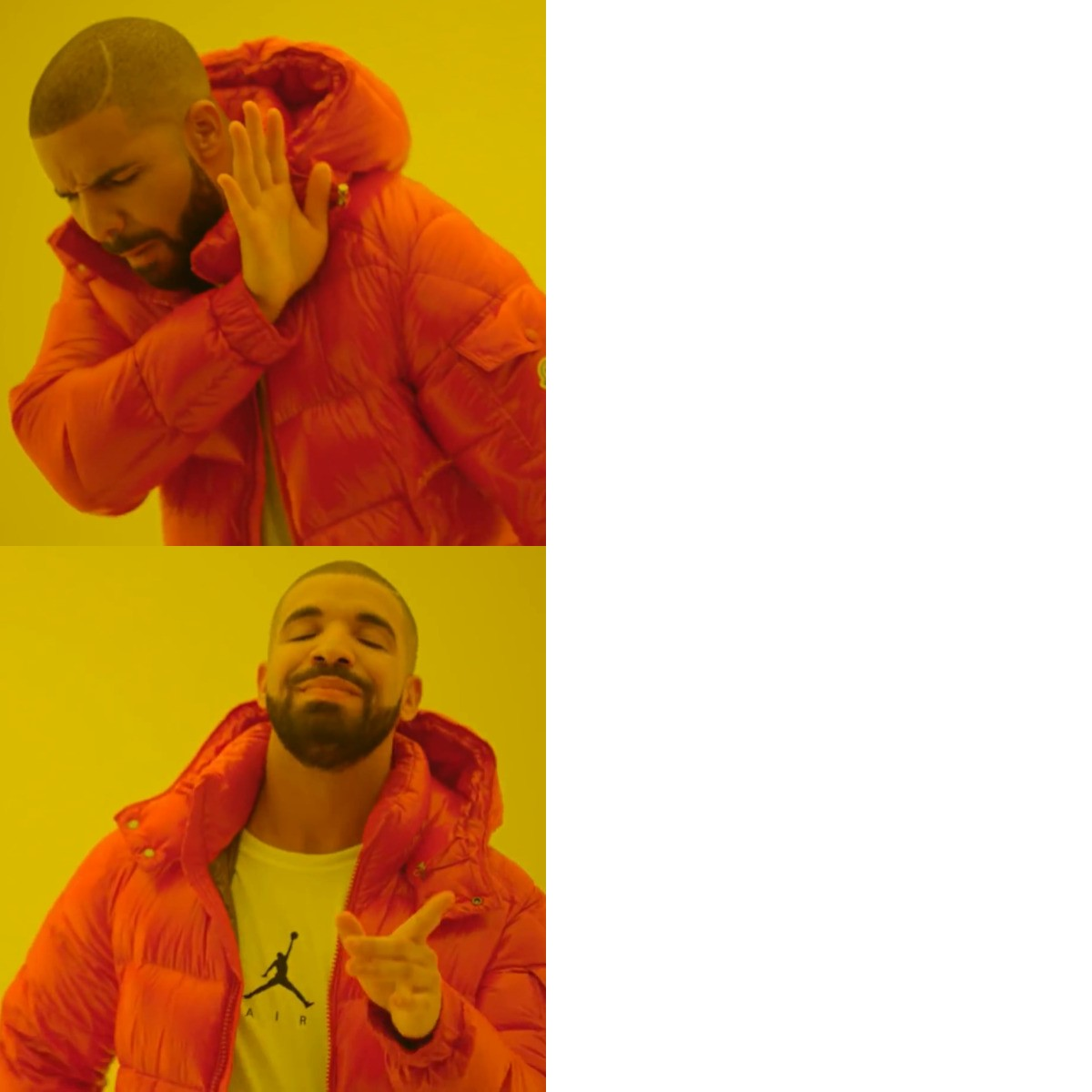

Content Warning: Subjective opinion

- Tests have to be blazing fast or they won't get run

- Unit tests should not touch the database or write to disk

- Jumping through hoops to make your tests faster just makes them harder to maintain and is a form of premature optimization

- You might complicate your system design just for the sake of unit testing

- You can have 10 passing unit tests and still nothing works for the end user

Service Tests

Integration Tests

Functional Tests

Neither Tests

You are free to use whatever, but it is not a user journey yet

EVIL TWIN!

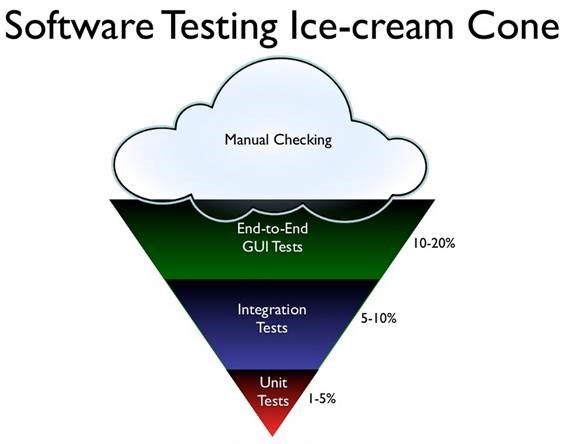

Testing Ice-Cream Cone

Anton's Reasoning

- Isolated tests are a LIE, no functionality exists without context

- The difference in speed between unit tests and functional tests is no longer huge on modern hardware

- I have written many unit tests, then booted the project and tested it manually. In most cases the functionality was not working even though the tests were passing

- It logically makes sense to me

- 70% E2E for UI user flows

- 20% Integration tests for management commands and celery tasks

- 10% Unit tests for algorithmic pure functions without dependencies

A Good Test

- Focused on one thing

- Partially Isolated

- Short

- Readable

Everything will be reversed for E2E tests, lol

Framework

methodology/approach

Given

Everything we need to set up to successfully reproduce a test in isolation

When

The part of the code under test

Then

This is the part where you confirm your expectations

    import pytest


    @pytest.mark.django_db
    def test_get_dataframe_for_buy_act_with_reports(
        buy_act, company, report_with_report_file
    ):
        # GIVEN an act of buying attached to a report with a report file,
        # and the report file contains 3 rows: 2 with unrelated
        # act rows and 1 with the attached buy act
        buy_act.seller = company
        buy_act.save()
        report_with_report_file.act_of_purchase.add(buy_act)

        # WHEN we create a dataframe for XLSX export
        df = buy_act.get_dataframe()

        # THEN it should contain only the report rows related to the act
        assert df.shape[0] == 1
        for row in df.itertuples(index=False, name="Row"):
            assert row.buyer == company.name

- Communicate the purpose of your test more clearly

- Focus your thinking while writing the test

- Make test writing faster

- Make it easier to re-use parts of your test

- Highlight the assumptions you are making about the test preconditions

- Highlight what outcomes you are expecting and testing against.

Who's testing the tests?

Especially crucial when adding tests for existing functionality

- Invert the logic in the code under test to make your test fail

- Make the feature code return a different result

- Invert the assertion in your test case so that it fails
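The first two bullets can be tried by hand. In this sketch `is_adult` is hypothetical feature code, and `is_adult_mutant` is the same function with the comparison deliberately broken. A test worth keeping passes on the real code and fails on the broken copy.

```python
def is_adult(age: int) -> bool:
    """Hypothetical feature code."""
    return age >= 18


def is_adult_mutant(age: int) -> bool:
    """The same function with the comparison deliberately broken."""
    return age > 18


def check_is_adult(func) -> bool:
    """Run the test's assertions against func; True means the test passes."""
    try:
        assert func(18) is True
        assert func(17) is False
        return True
    except AssertionError:
        return False


test_passes_on_real_code = check_is_adult(is_adult)
test_passes_on_mutant = check_is_adult(is_adult_mutant)
```

If `test_passes_on_mutant` came out `True`, the test would not be checking the boundary at 18 at all, and a passing run would tell you very little.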

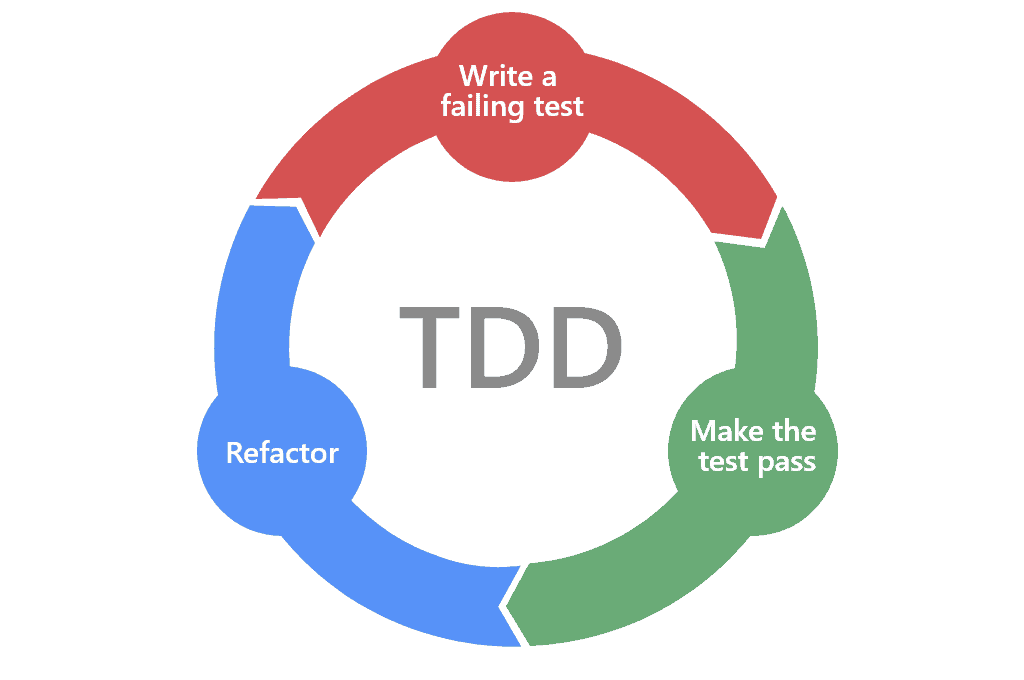

All cool kids do

Test-Driven-Development

Test First Development

- Write the specification

- Write the tests to the specification

- Write the code until all of the tests pass
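The three steps above, sketched on a toy problem. The `slugify` specification here is hypothetical and chosen only because it fits on a slide. The tests are written against the specification first, and the implementation is whatever makes them pass.

```python
import re

# Step 1: the specification (hypothetical).
#   slugify(title) lowercases the title, replaces every run of characters
#   other than a-z and 0-9 with a single hyphen, and strips hyphens
#   from both ends.


# Step 2: tests written against the specification, before the code exists.
def test_slugify_lowercases():
    assert slugify("Testing") == "testing"


def test_slugify_collapses_separators():
    assert slugify("Testing  101: Basics") == "testing-101-basics"


def test_slugify_strips_edges():
    assert slugify("  Hello!  ") == "hello"


# Step 3: the code, written until all of the tests pass.
def slugify(title: str) -> str:
    return re.sub(r"[^a-z0-9]+", "-", title.lower()).strip("-")
```

Until step 3 is written, every test fails with a `NameError`, which is the expected starting point for test-first work.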

Practical Example

    import json

    from rest_framework import authentication, permissions
    from rest_framework.generics import GenericAPIView
    from rest_framework.request import Request
    from rest_framework.response import Response

    # VerificationAttemptResultSerializer, VerificationType, VerificationRequest
    # and FINAL_STATES come from the project's own modules (not shown).


    class VerificationAttemptResultView(GenericAPIView):
        """Returns verification results of the request as they were sent to the Monolith."""

        authentication_classes = [authentication.TokenAuthentication]
        permission_classes = [permissions.IsAuthenticated]
        serializer_class = VerificationAttemptResultSerializer

        def post(self, request: Request) -> Response:
            verifier = self.get_serializer(data=request.data)
            if not verifier.is_valid():
                return Response(status=400)

            data = verifier.data
            if data["type"] == VerificationType.TYPE_PROOF_OF_ADDRESS:
                return Response(status=400)

            try:
                verification_request = VerificationRequest.objects.get(
                    user_id=data["user_id"],
                    verification_reference=data["verification_reference"],
                )
            except VerificationRequest.DoesNotExist:
                return Response(status=404)

            ...

            if no_history_created or attempt_is_missing:
                return Response(status=500)

            ...

            if last_verification_history_record.status not in FINAL_STATES:
                return Response(status=400)

            return Response(json.loads(last_verification_attempt.verification_results))

Why do we want to test it?

- ✅ RECENT

- ✅ CORE - this endpoint allows the client to re-fetch data if something is broken on their side

- ✅ RISK - this view has a lot of logic branches, hard to track execution

- ❌ Problematic - we don't know yet

- ❌ Expertise - code is mediocre, not too easy, not too hard to read

How will we test it?

- Test permissions

- Test invalid inputs

- Test logic branches

- Test happy path

1. Permissions check

    from django.urls import reverse


    def test_attempt_response_view_permissions(api_client):
        # GIVEN an unauthorized client
        # WHEN it makes a request to fetch results
        res = api_client.post(reverse("verification_attempt_result"), data={"foo": "bar"})

        # THEN permissions should be checked and the correct code returned
        assert res.status_code == 401

2. Validation Checks

    @pytest.mark.django_db
    def test_attempt_response_view_invalid_data(authenticated_api_client):
        # GIVEN an authenticated client and invalid data
        invalid_data = {"user_id": 123, "foo": "bar"}

        # WHEN the client makes a request to the results endpoint
        res = authenticated_api_client.post(
            reverse("verification_attempt_result"),
            data=invalid_data,
        )

        # THEN a validation error is returned with the correct error code
        assert res.status_code == 400
        response_body = res.json()
        assert response_body["error"]["message"] == "Validation Error"

    @pytest.mark.django_db
    def test_attempt_response_view_proof_of_address_not_implemented(authenticated_api_client):
        # GIVEN request data for functionality that is not implemented yet
        data = {
            "user_id": "test",
            "verification_reference": "ZZZ",
            "type": VerificationType.TYPE_PROOF_OF_ADDRESS,
        }

        # WHEN making a request to the result endpoint
        res = authenticated_api_client.post(
            reverse("verification_attempt_result"),
            data=data,
        )

        # THEN a "not implemented" message should be part of the response
        assert res.status_code == 400
        response_body = res.json()
        assert "not implemented" in response_body["error"]["message"]

3. Logic Branches

    @pytest.mark.django_db
    def test_attempt_response_view_missing_request(authenticated_api_client):
        # GIVEN a valid request body AND no verification request saved in the DB
        verification_attempt_request_data = {
            "user_id": "test",
            "verification_reference": "ZZZ",
        }

        # WHEN a request is made to the result endpoint
        res = authenticated_api_client.post(
            reverse("verification_attempt_result"),
            data=verification_attempt_request_data,
        )

        # THEN a "not found" code should be returned without a body
        assert res.status_code == 404
        assert res.content == b""

    @pytest.mark.django_db
    def test_attempt_response_view_missing_attempt(authenticated_api_client):
        # GIVEN a valid request body AND a verification request without an attempt
        verification_attempt_request_data = {
            "user_id": "test",
            "verification_reference": "ZZZ",
        }
        VerificationRequestF.create(
            user_id=verification_attempt_request_data["user_id"],
            verification_reference=verification_attempt_request_data[
                "verification_reference"
            ],
        )

        # WHEN a request is made to the result endpoint
        res = authenticated_api_client.post(
            reverse("verification_attempt_result"),
            data=verification_attempt_request_data,
        )

        # THEN 500 should be returned, as no request should exist without an attempt
        assert res.status_code == 500
        assert res.content == b""

    @pytest.mark.django_db
    def test_attempt_response_view_attempt_is_pending(authenticated_api_client):
        # GIVEN a valid request body
        # AND a verification request with an attempt
        # AND the attempt status is WAITING
        verification_attempt_request_data = {
            "user_id": "test",
            "verification_reference": "ZZZ",
        }
        VerificationAttemptF.create(
            status=VerificationAttemptStatus.WAITING,
            verification_request__user_id="test",
            verification_request__verification_reference="ZZZ",
        )

        # WHEN a request is made to the result endpoint
        res = authenticated_api_client.post(
            reverse("verification_attempt_result"),
            data=verification_attempt_request_data,
        )

        # THEN 400 should be returned with the specific "PENDING" reason
        assert res.status_code == 400
        response_body = res.json()
        assert response_body["error"]["message"] == "Verification attempt is pending"

4. Happy Path

    @pytest.mark.django_db
    def test_attempt_response_view_happy_path(authenticated_api_client):
        # GIVEN a valid request body
        # AND a verification request with an attempt
        # AND the attempt status is COMPLETED
        verification_attempt_request_data = {
            "user_id": "test",
            "verification_reference": "ZZZ",
        }
        verification_results = {"status": VerificationStatus.APPROVED.value}
        VerificationAttemptF.create(
            status=VerificationAttemptStatus.COMPLETED,
            verification_results=json.dumps(verification_results),
            verification_request__user_id=verification_attempt_request_data["user_id"],
            verification_request__verification_reference=verification_attempt_request_data[
                "verification_reference"
            ],
        )

        # WHEN a request is made to the result endpoint
        res = authenticated_api_client.post(
            reverse("verification_attempt_result"),
            data=verification_attempt_request_data,
        )

        # THEN the request is successful and we return the results
        assert res.status_code == 200
        assert res.json() == verification_results

How to start with testing?

- Analyze whether the functionality needs to be tested :)

- Think about how thoroughly it needs to be tested (maybe some edge cases are not important)

- Plan your test cases

  - Happy path

  - Sad paths

  - Error handling

  - Boundaries

- Write compact, separate cases using the Given-When-Then method

- Validate that your tests are working

THANK

YOU

Such empty

Resources

Podcasts

Articles

Testing 101

By Anton Alekseev
