Kubeflow

Data Science on Steroids

About Me

saschagrunert

mail@

.de

The Evolution of Machine Learning

1950

Stochastic Neural Analog Reinforcement Calculator (SNARC) Maze Solver

2000

Data Science

Workflow

Data Source Handling

fixed set of technologies

changing set of input data

Exploration

Regression?

Classification?

Supervised?

Unsupervised?

Baseline Modelling

Results

Trained Model

Results to share

Results

Deployment

Automation?

Infrastructure?

Yet Another Machine Learning Solution?

YES!

Cloud Native

Commercially available off-the-shelf (COTS) applications

The awareness of an application to run inside something like Kubernetes.

vs

Can we improve

the classic data scientist's workflow

by utilizing Kubernetes?

announced 2017

abstracting machine learning best practices

Deployment

and

Test Setup

SUSE CaaS Platform

github.com/SUSE/skuba

> skuba cluster init --control-plane 172.172.172.7 caasp-cluster
> cd caasp-cluster
> skuba node bootstrap --target 172.172.172.7 caasp-master
> skuba node join --role worker --target 172.172.172.8 caasp-node-1
> skuba node join --role worker --target 172.172.172.24 caasp-node-2
> skuba node join --role worker --target 172.172.172.16 caasp-node-3
> cp admin.conf ~/.kube/config
> kubectl get nodes -o wide
NAME           STATUS   ROLES    AGE   VERSION   INTERNAL-IP      EXTERNAL-IP   OS-IMAGE                              KERNEL-VERSION           CONTAINER-RUNTIME
caasp-master   Ready    master   2h    v1.15.2   172.172.172.7    <none>        SUSE Linux Enterprise Server 15 SP1   4.12.14-197.15-default   cri-o://1.15.0
caasp-node-1   Ready    <none>   2h    v1.15.2   172.172.172.8    <none>        SUSE Linux Enterprise Server 15 SP1   4.12.14-197.15-default   cri-o://1.15.0
caasp-node-2   Ready    <none>   2h    v1.15.2   172.172.172.24   <none>        SUSE Linux Enterprise Server 15 SP1   4.12.14-197.15-default   cri-o://1.15.0
caasp-node-3   Ready    <none>   2h    v1.15.2   172.172.172.16   <none>        SUSE Linux Enterprise Server 15 SP1   4.12.14-197.15-default   cri-o://1.15.0

Storage Provisioner

> helm install nfs-client-provisioner \
    -n kube-system \
    --set nfs.server=caasp-node-1 \
    --set nfs.path=/mnt/nfs \
    --set storageClass.name=nfs \
    --set storageClass.defaultClass=true \
    stable/nfs-client-provisioner
> kubectl -n kube-system get pods -l app=nfs-client-provisioner -o wide
NAME                                      READY   STATUS    RESTARTS   AGE   IP            NODE           NOMINATED NODE   READINESS GATES
nfs-client-provisioner-777997bc46-mls5w   1/1     Running   3          2h    10.244.0.91   caasp-node-1   <none>           <none>

Load Balancing

> kubectl apply -f https://raw.githubusercontent.com/google/metallb/v0.8.1/manifests/metallb.yaml

---
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: default
      protocol: layer2
      addresses:
      - 172.172.172.251-172.172.172.251

> kubectl apply -f metallb-config.yml

Deploying Kubeflow

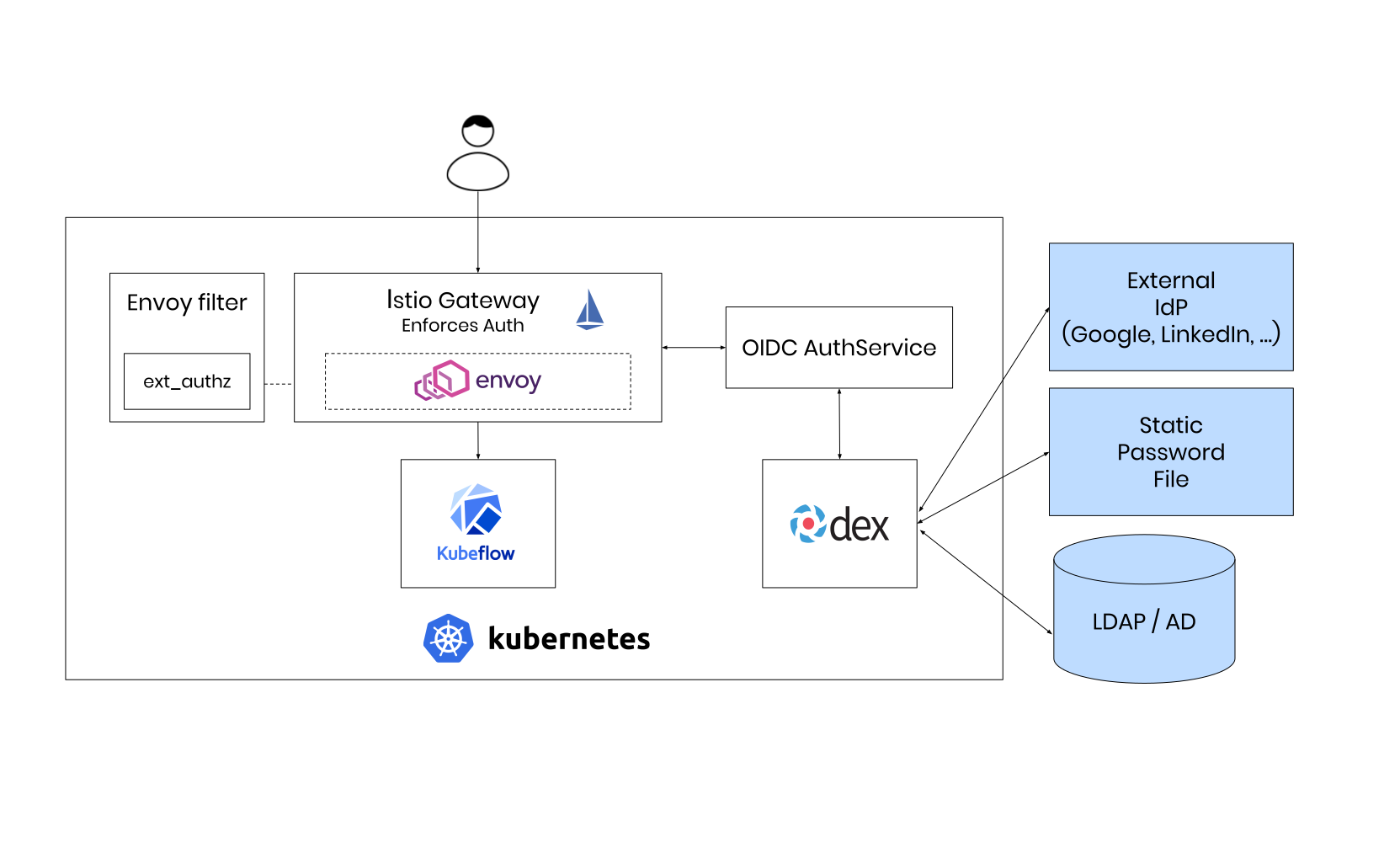

> wget -O kfctl_existing_arrikto.yaml \
    https://raw.githubusercontent.com/kubeflow/manifests/v0.7-branch/kfdef/kfctl_existing_arrikto.0.7.0.yaml
> kfctl apply -V -f kfctl_existing_arrikto.yaml

available command line tool called kfctl

Credentials for the default user are

admin@kubeflow.org:12341234

Improving the Data Scientist's Workflow

Machine Learning Pipelines

reusable end-to-end machine learning workflows via pipelines

separate Python SDK

pipeline steps are executed directly in Kubernetes

within its own pod

A first pipeline step

executes a given command within a container

input and output can be passed around

from kfp.dsl import ContainerOp

step1 = ContainerOp(name='step1',
                    image='alpine:latest',
                    command=['sh', '-c'],
                    arguments=['echo "Running step"'])

Execution order

Execution order can be made dependent on other steps

Arrange components using step2.after(step1)
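As a mental model only (this is not how the Kubeflow Pipelines SDK is implemented), each call to `after` can be read as adding an edge to a dependency graph, and a valid execution order is any topological ordering of that graph. The sketch below uses Python's standard `graphlib` module (Python 3.9 or newer). The `dependencies` mapping and the third step are hypothetical and exist only for the illustration.

```python
from graphlib import TopologicalSorter

# Hypothetical dependency map: each key lists the steps it must run after.
# "step2": {"step1"} mirrors step2.after(step1); "step3" is made up.
dependencies = {
    "step1": set(),
    "step2": {"step1"},
    "step3": {"step2"},
}


def execution_order(deps):
    """Return one valid order in which the steps could be executed."""
    return list(TopologicalSorter(deps).static_order())


order = execution_order(dependencies)
print(order)
```

Steps without an ordering constraint between them could run in any order, or in parallel, which is why only the declared dependencies are modelled here.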

step1 = ContainerOp(name='step1',
                    image='alpine:latest',
                    command=['sh', '-c'],
                    arguments=['echo "Running step"'])
step2 = ContainerOp(name='step2',
                    image='alpine:latest',
                    command=['sh', '-c'],
                    arguments=['echo "Running step"'])
step2.after(step1)

Creating a pipeline

Pipelines are created using the @pipeline decorator and compiled afterwards via compile()

from kfp.compiler import Compiler
from kfp.dsl import ContainerOp, pipeline

# The function is named my_pipeline so that it does not shadow the
# imported pipeline decorator.
@pipeline(name='My pipeline', description='')
def my_pipeline():
    step1 = ContainerOp(name='step1',
                        image='alpine:latest',
                        command=['sh', '-c'],
                        arguments=['echo "Running step"'])
    step2 = ContainerOp(name='step2',
                        image='alpine:latest',
                        command=['sh', '-c'],
                        arguments=['echo "Running step"'])
    step2.after(step1)

if __name__ == '__main__':
    # compile() also needs the output path of the pipeline package
    Compiler().compile(my_pipeline, __file__ + '.tar.gz')

When to use ContainerOp?

Useful for deployment tasks

Running complex training scenarios

(make use of training scripts)
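A training script that runs inside such a step usually receives its settings as command line arguments, which always arrive as plain strings. The following is a minimal sketch of such an entry point. The `--pretrained` flag, the `str_to_bool` helper and the placeholder `train` function are all hypothetical and not taken from the talk's repository.

```python
import argparse


def str_to_bool(value):
    """Convert command line strings such as 'True' or 'False' to a bool."""
    lowered = value.strip().lower()
    if lowered in ("true", "1", "yes"):
        return True
    if lowered in ("false", "0", "no"):
        return False
    raise argparse.ArgumentTypeError(f"not a boolean: {value!r}")


def parse_args(argv=None):
    """Parse the (hypothetical) options of the training script."""
    parser = argparse.ArgumentParser(description="Hypothetical training script")
    parser.add_argument("--pretrained", type=str_to_bool, default=False,
                        help="start from pretrained weights")
    return parser.parse_args(argv)


def train(pretrained):
    """Placeholder for the real training logic."""
    mode = "pretrained weights" if pretrained else "random initialisation"
    return f"training with {mode}"


if __name__ == "__main__":
    # Explicit argv for demonstration; a real script would call parse_args().
    args = parse_args(["--pretrained", "False"])
    print(train(args.pretrained))
```

Converting the string explicitly avoids the common pitfall that `bool('False')` is `True` in Python.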

Getting the pipeline to run

Pipelines are compiled via dsl-compile

> sudo pip install \
    https://storage.googleapis.com/ml-pipeline/release/latest/kfp.tar.gz
> dsl-compile --py pipeline.py --output pipeline.tar.gz

Running pipelines from Notebooks

- Create and run pipelines by importing the kfp package

- Can be useful to save notebook resources

- Enables developers to combine prototyping with creating an automated training workflow

from kfp.compiler import Compiler

# Set up the pipeline
pipeline_func = training_pipeline
pipeline_filename = pipeline_func.__name__ + '.pipeline.yaml'

# Compile it
Compiler().compile(pipeline_func, pipeline_filename)

Running pipelines from Notebooks

import kfp

client = kfp.Client()

try:
    experiment = client.create_experiment("Prototyping")
except Exception:
    experiment = client.get_experiment(experiment_name="Prototyping")

arguments = {'pretrained': 'False'}
run_name = pipeline_func.__name__ + ' test_run'
run_result = client.run_pipeline(
    experiment.id,
    run_name,
    pipeline_filename,
    arguments)

Continuous Integration

Kubeflow provides a REST API
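A CI job could use that API to start a pipeline run without the Python SDK. The sketch below only builds the HTTP request and does not send it. The host name is hypothetical, the path and body are assumed to follow the Kubeflow Pipelines `v1beta1` run API and should be checked against the API reference of the deployed version, and authentication is left out entirely.

```python
import json
import urllib.request

# Assumption: hypothetical address under which the pipelines API is reachable.
API_HOST = "http://kubeflow.example.com/pipeline"


def build_run_request(experiment_id, pipeline_id, run_name, parameters):
    """Build (but do not send) a POST request that would create a run."""
    # Assumption: body layout of the Kubeflow Pipelines v1beta1 run API.
    body = {
        "name": run_name,
        "pipeline_spec": {
            "pipeline_id": pipeline_id,
            "parameters": [
                {"name": key, "value": value}
                for key, value in parameters.items()
            ],
        },
        "resource_references": [{
            "key": {"id": experiment_id, "type": "EXPERIMENT"},
            "relationship": "OWNER",
        }],
    }
    return urllib.request.Request(
        url=f"{API_HOST}/apis/v1beta1/runs",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# The IDs are placeholders; real ones come from the Kubeflow installation.
request = build_run_request("<experiment-id>", "<pipeline-id>",
                            "ci-run", {"pretrained": "False"})
print(request.get_method(), request.full_url)
```

Passing the request to `urllib.request.urlopen` from the CI job would submit it.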

That’s it.

https://github.com/saschagrunert/kubeflow-data-science-on-steroids

https://slides.com/saschagrunert/kubeflow-containerday-2019

Kubeflow - Data Science on Steroids (ContainerDay.it 2019)

By Sascha Grunert
