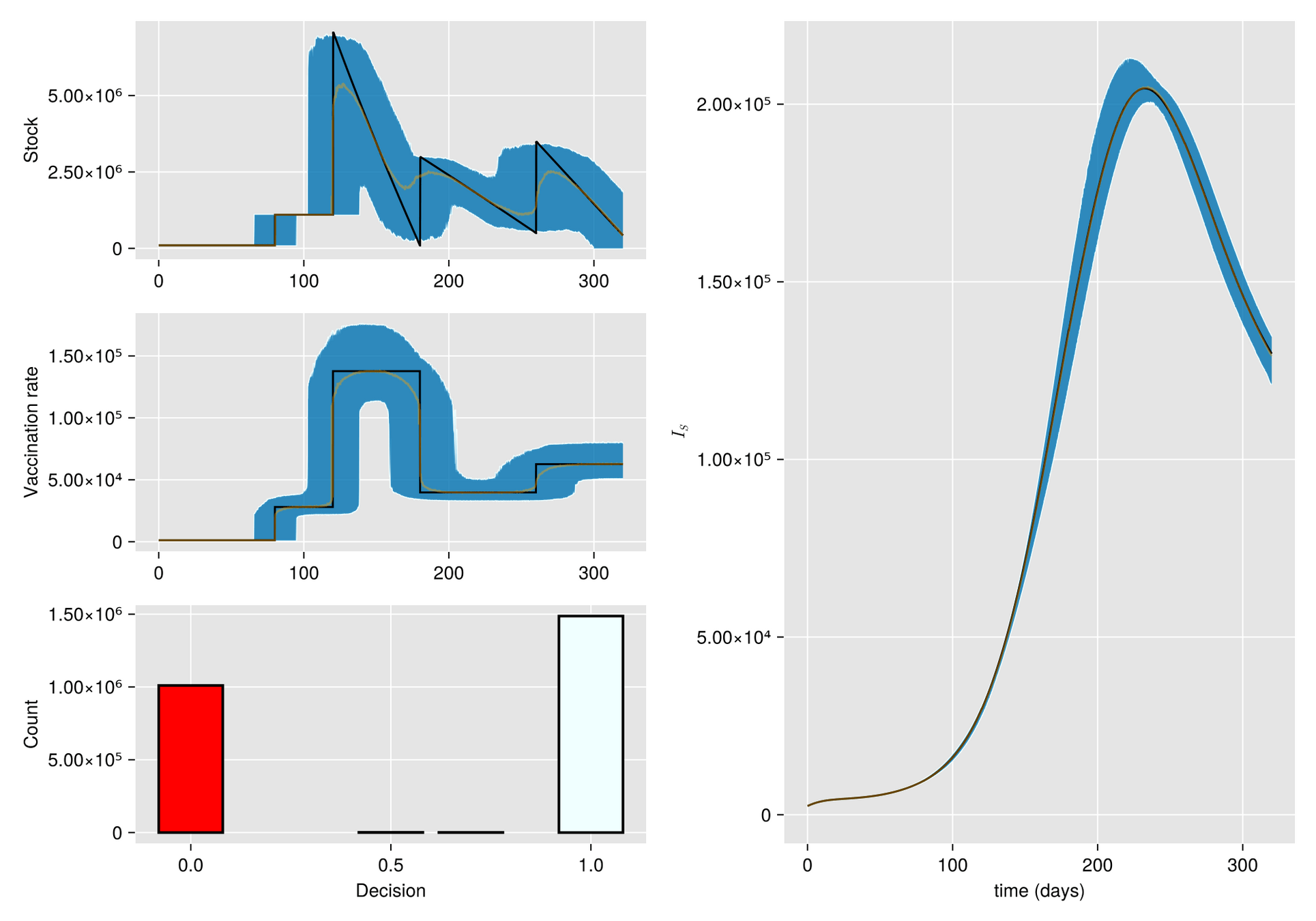

A model for optimizing vaccine usage through a sequence of decisions to manage stock uncertainty

Towards Reinforcement Learning

Modeling and Uncertainty Quantification (SMUQ), organized by the Sociedade Brasileira de Matemática Aplicada e Computacional (SBMAC) and the Instituto Universitario de Matemática Multidisciplinar (IMM), to be held in València (Spain) from July 8 to July 9, 2024.

Yofre H. Garcia

Saúl Diaz-Infante Velasco

Jesús Adolfo Minjárez Sosa

sauldiazinfante@gmail.com

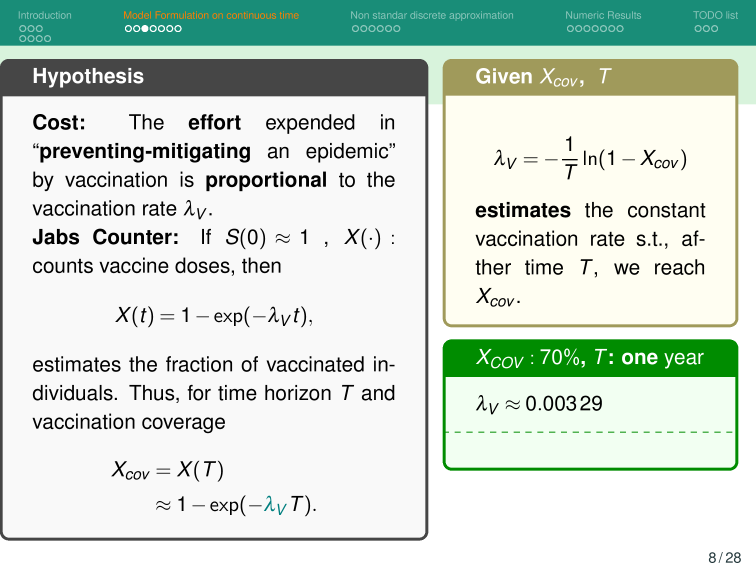

When a vaccine is in short supply, sometimes refraining from vaccination is the best response—at least for a while.

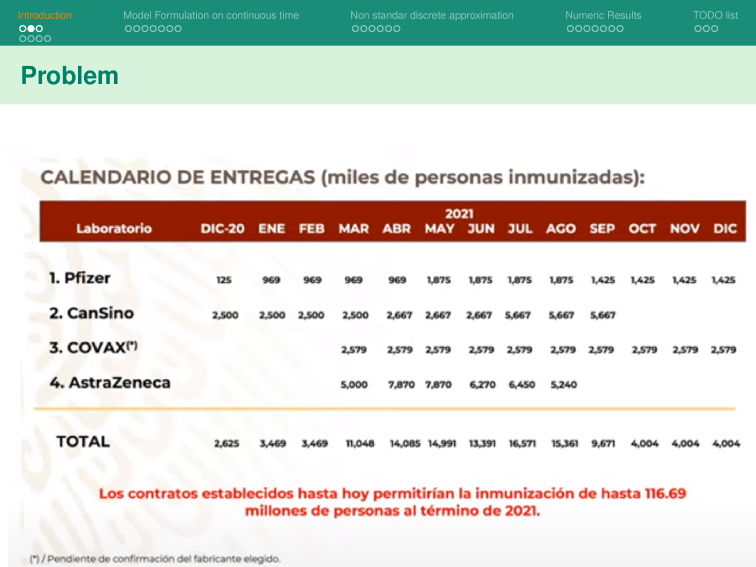

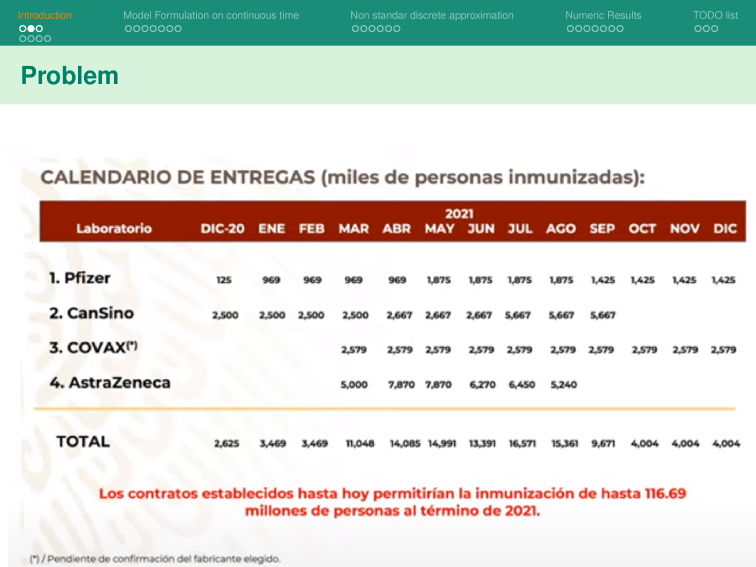

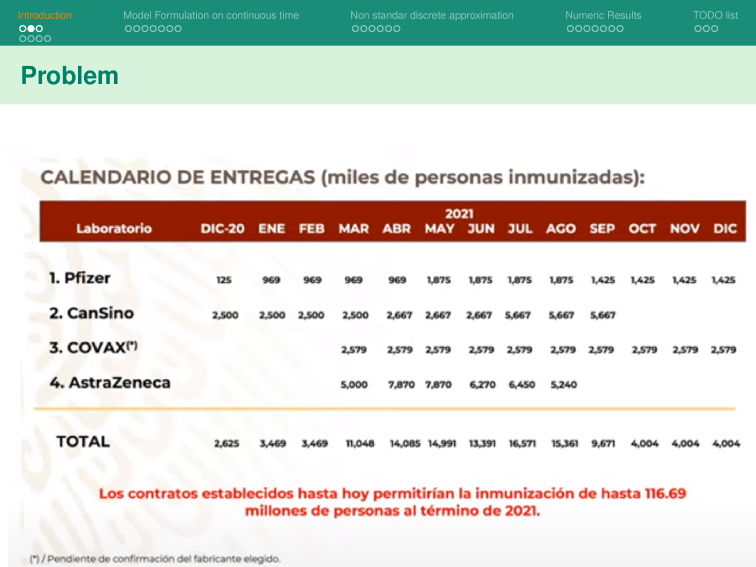

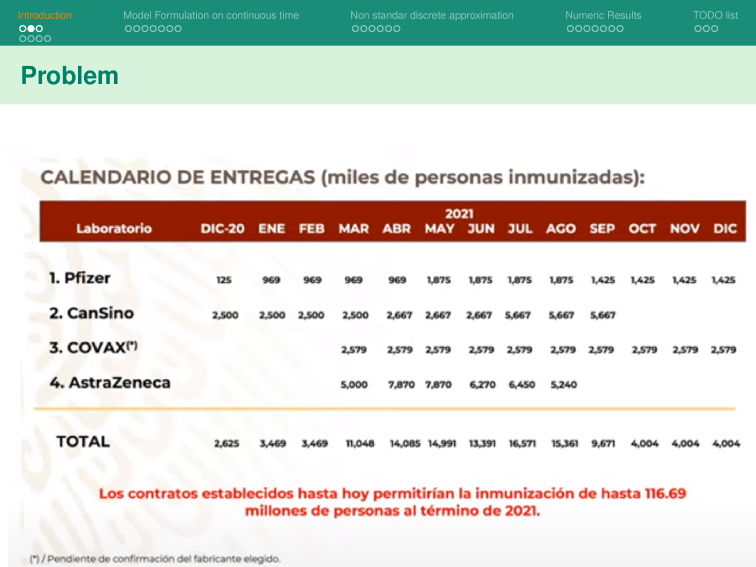

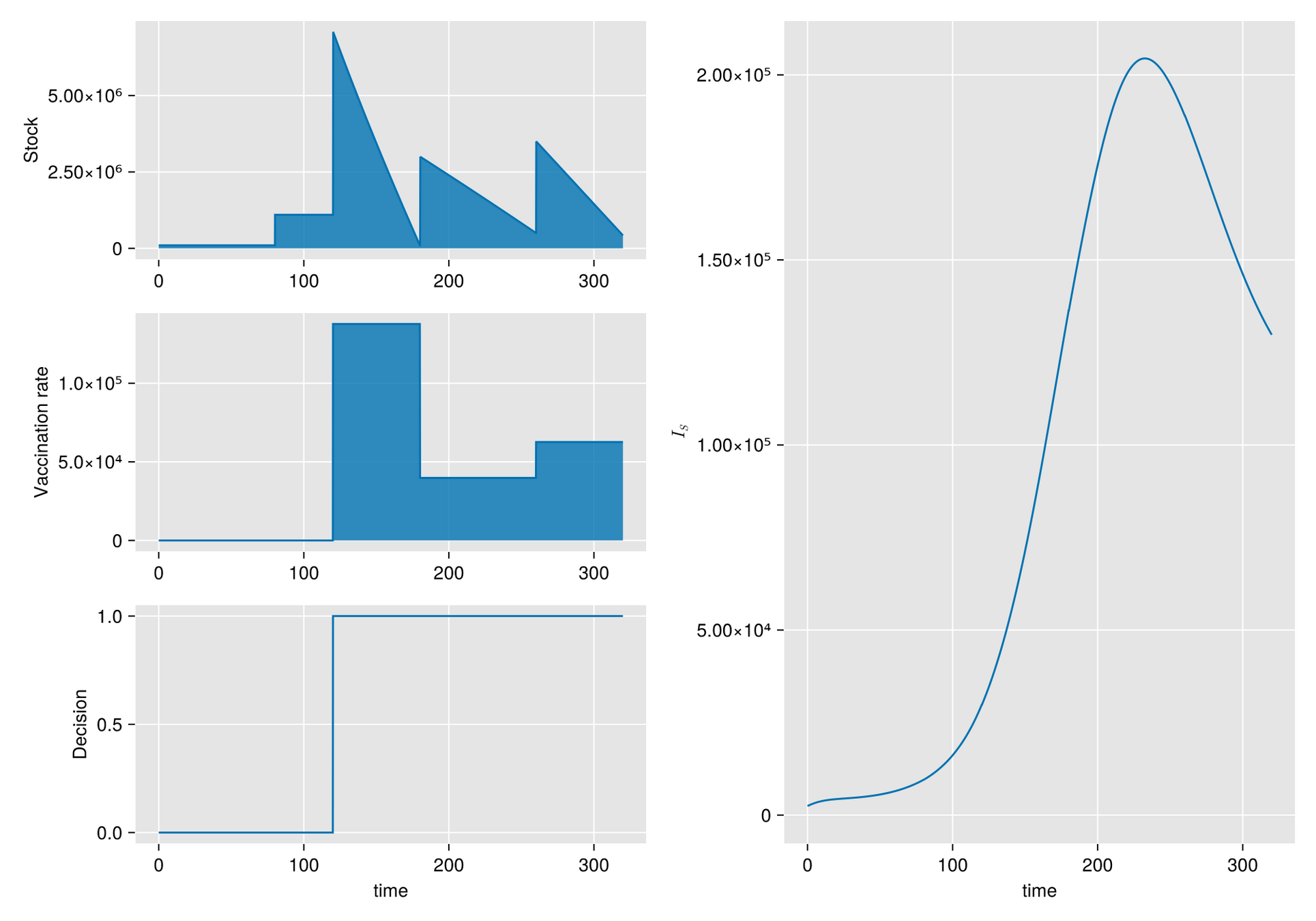

On December 02, 2020, the Mexican government announced a delivery plan for vaccines by Pfizer-BioNTech and other firms as part of the COVID-19 vaccination campaign.

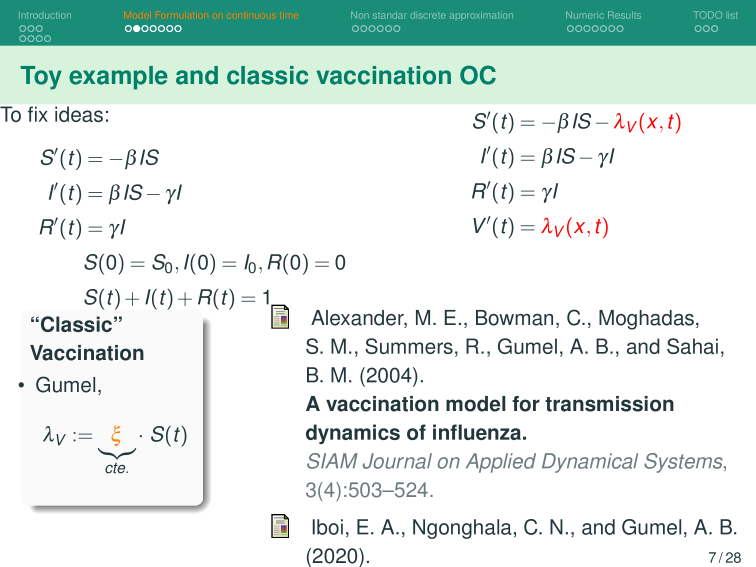

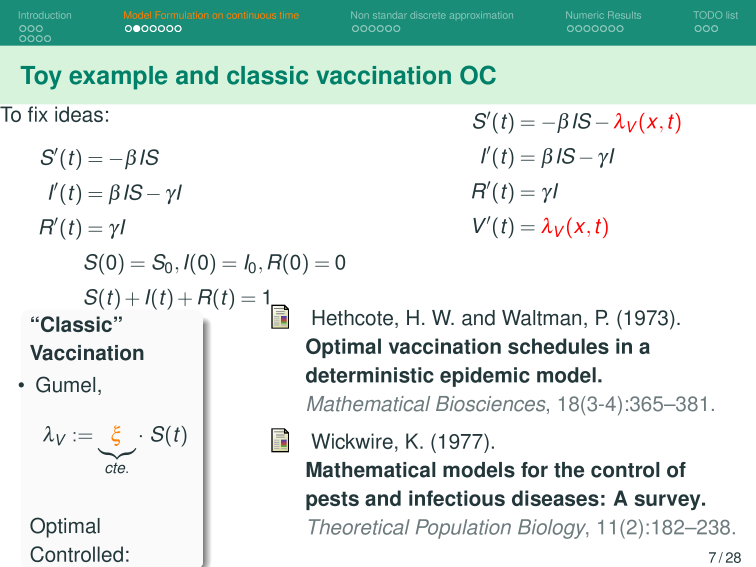

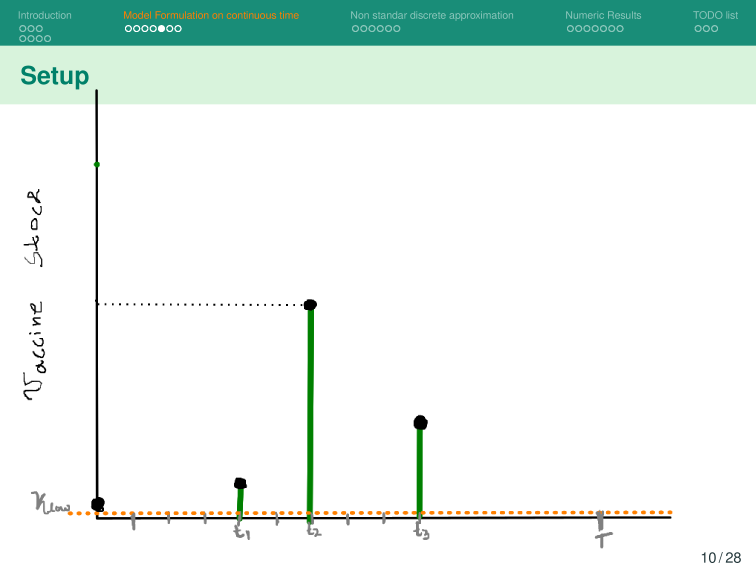

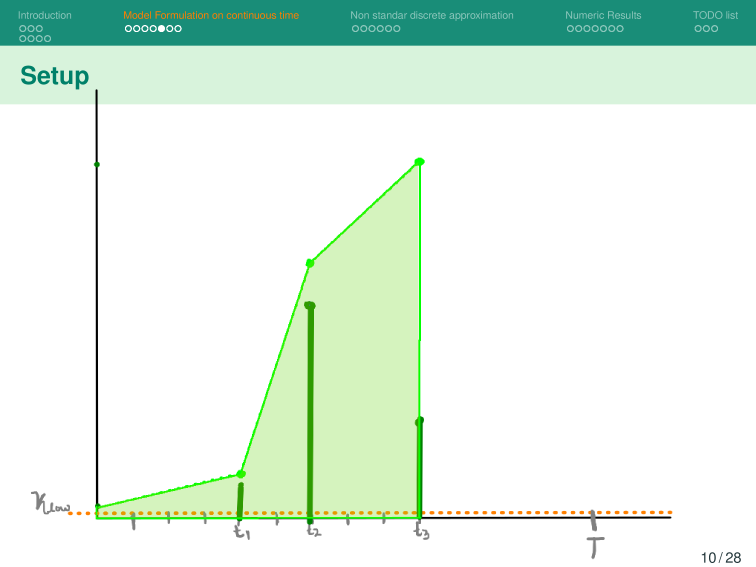

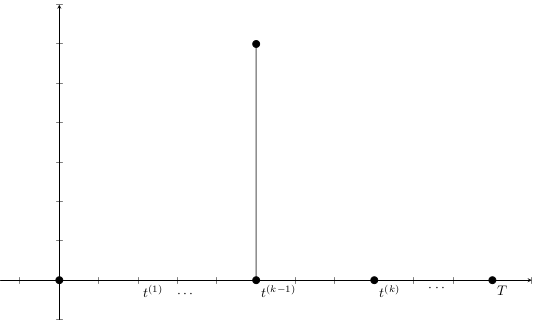

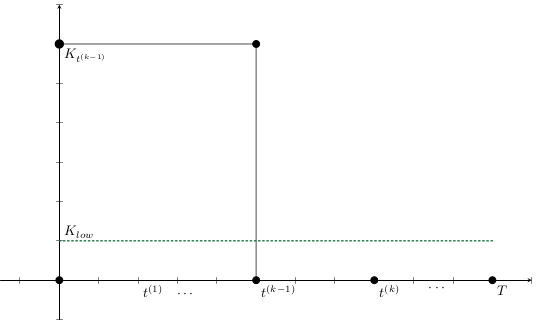

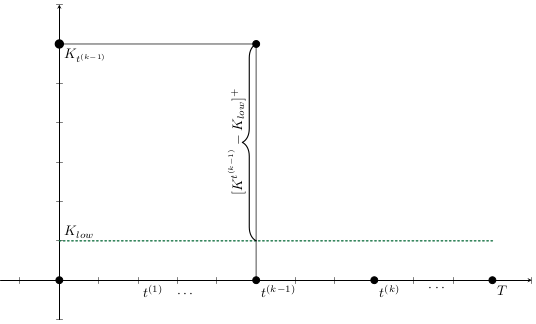

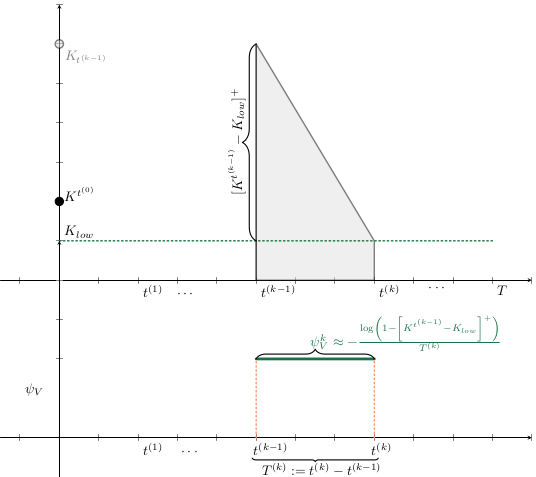

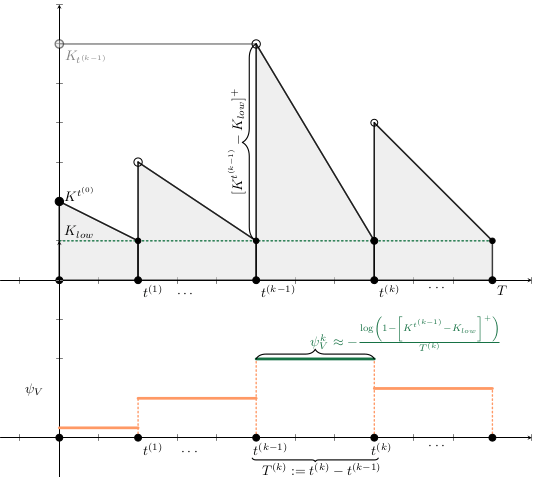

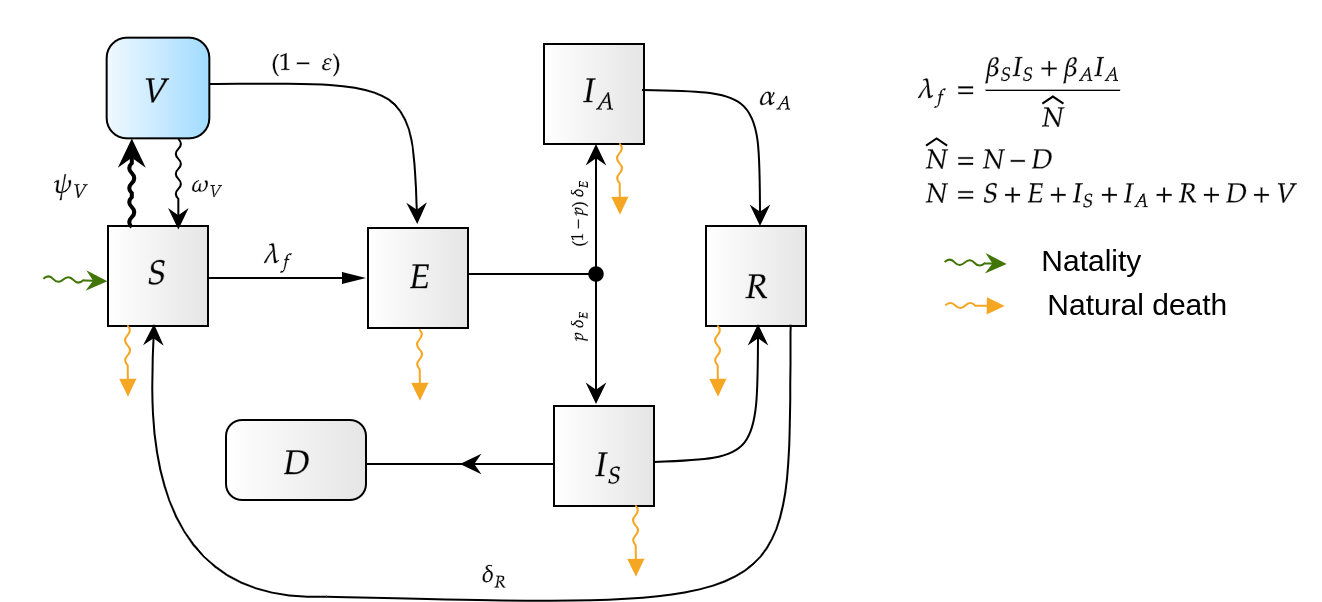

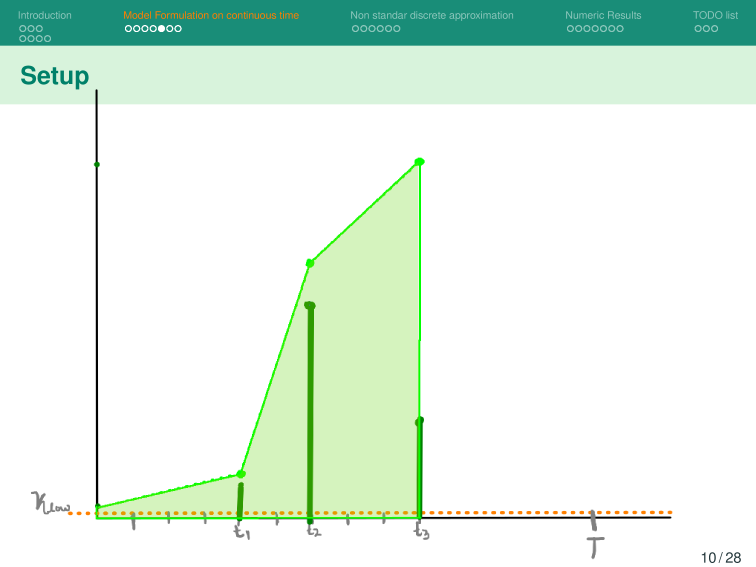

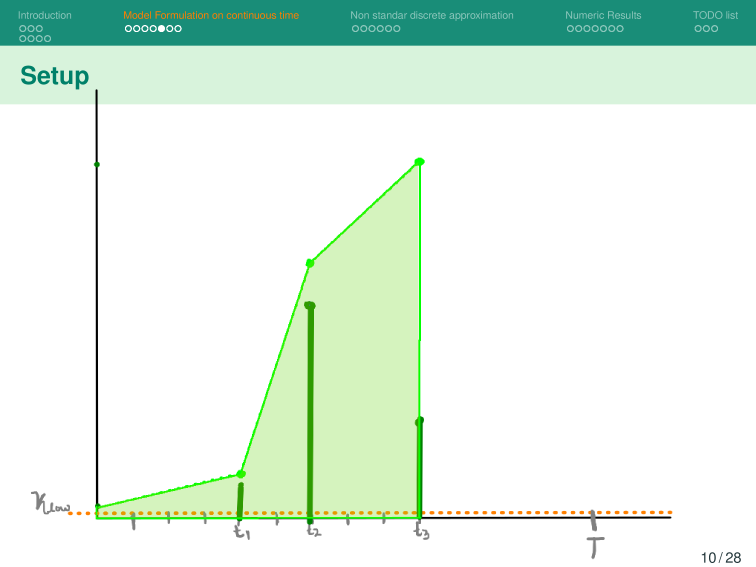

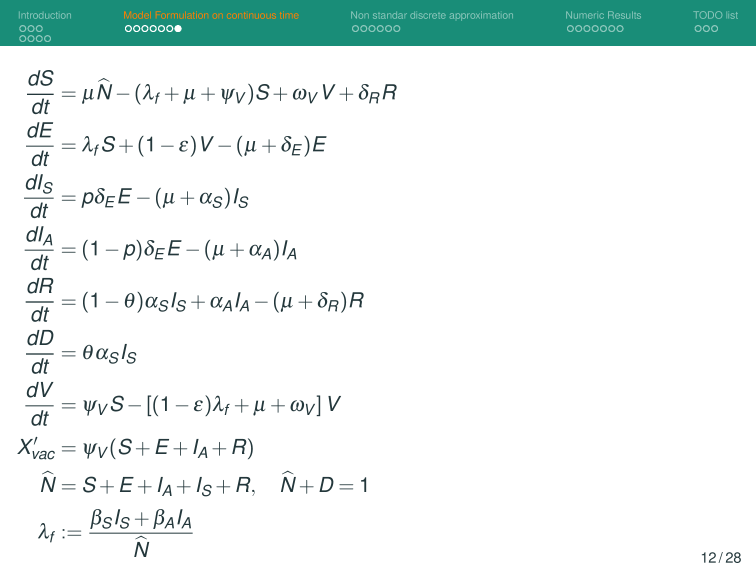

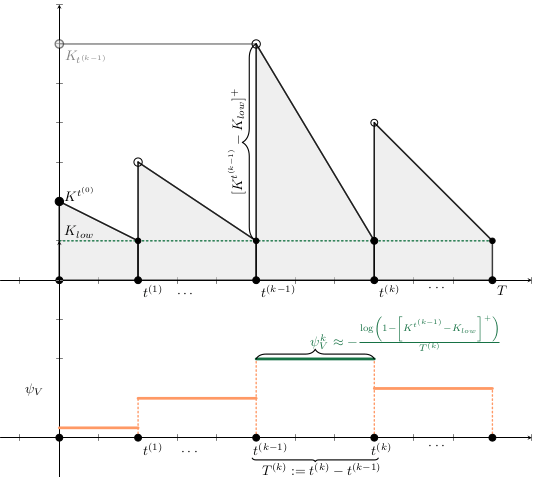

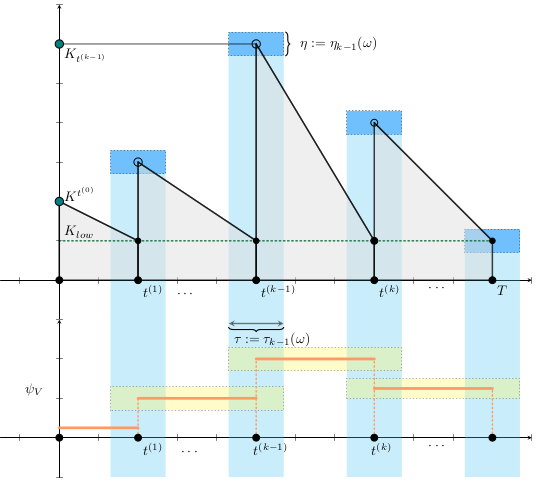

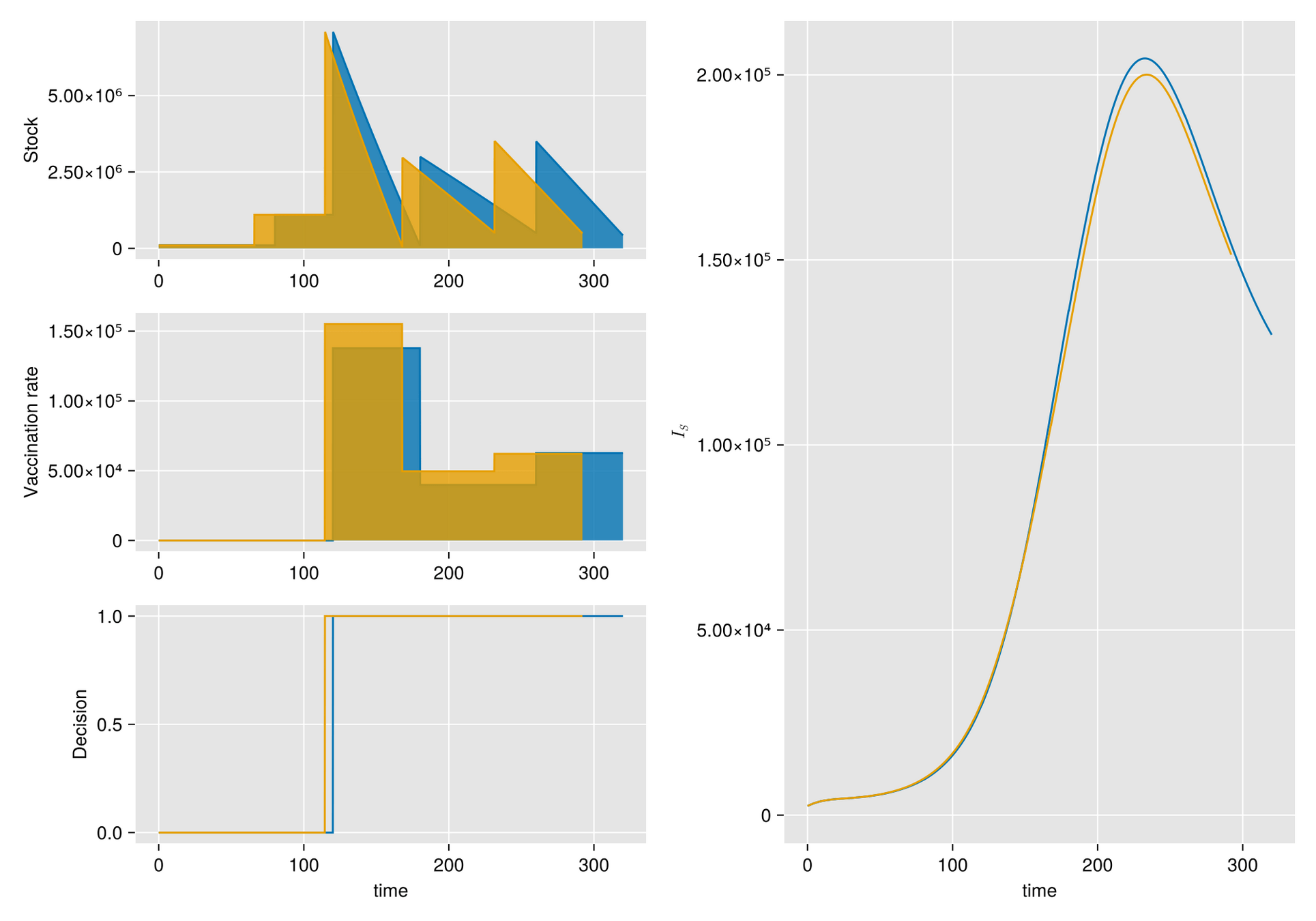

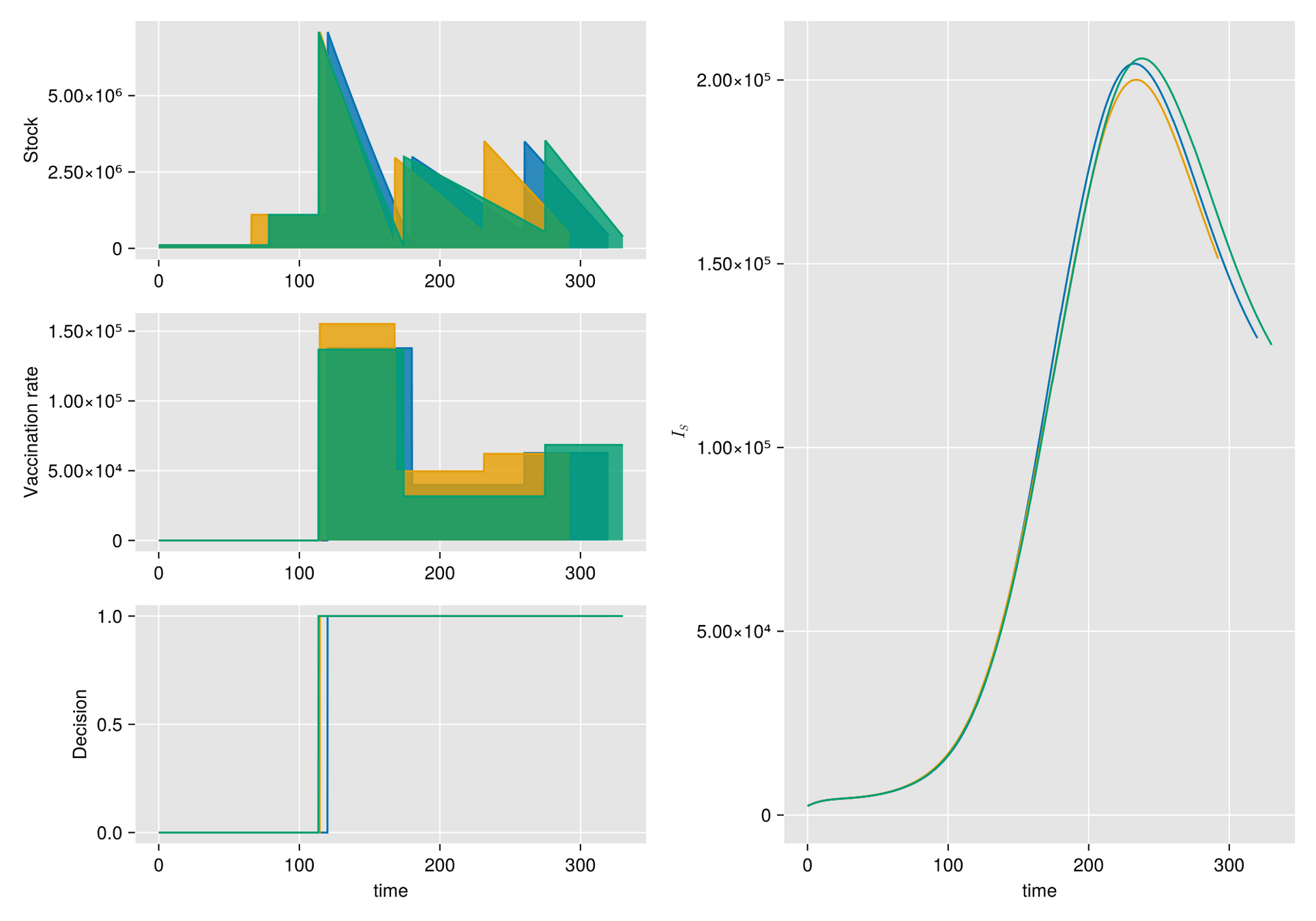

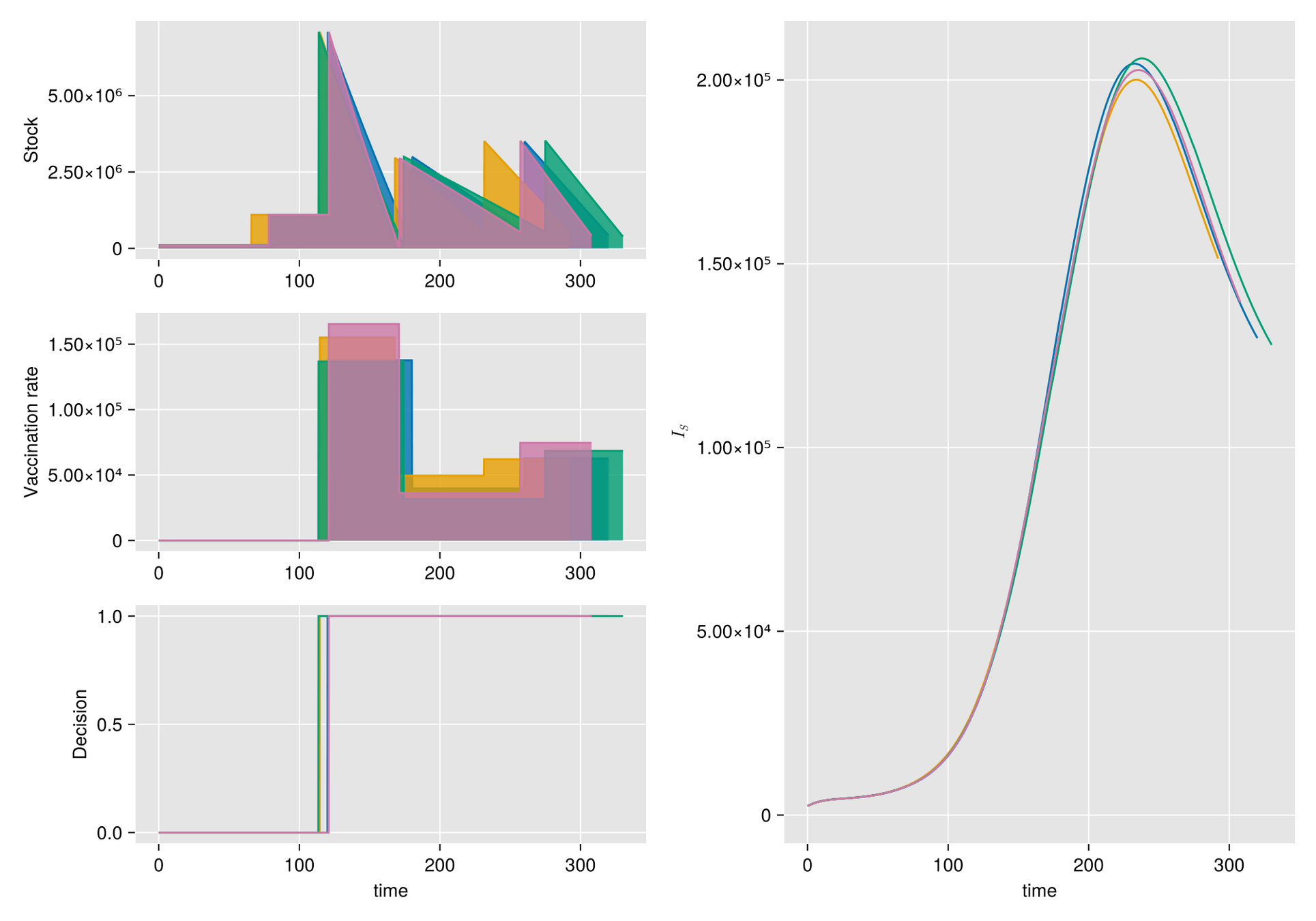

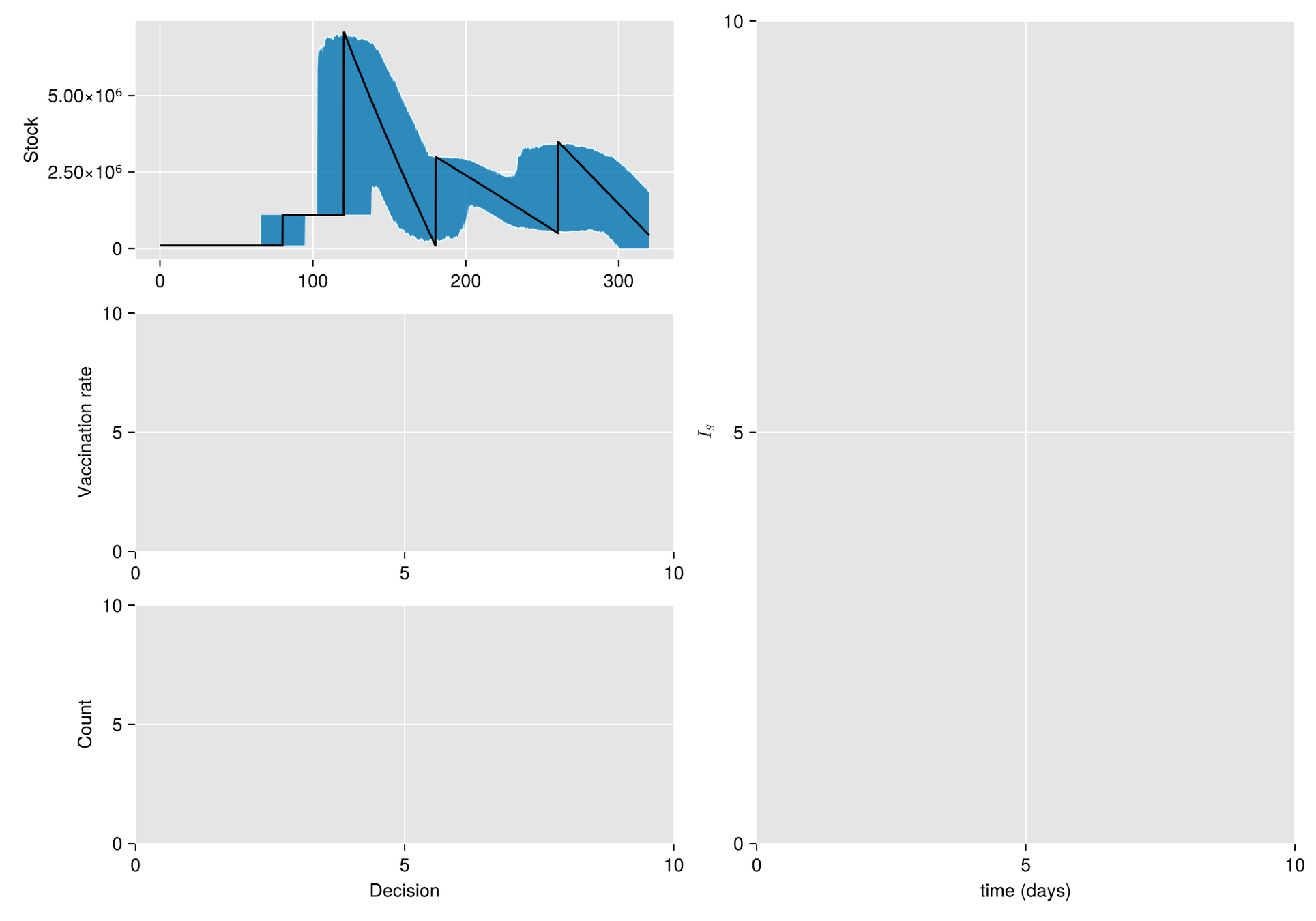

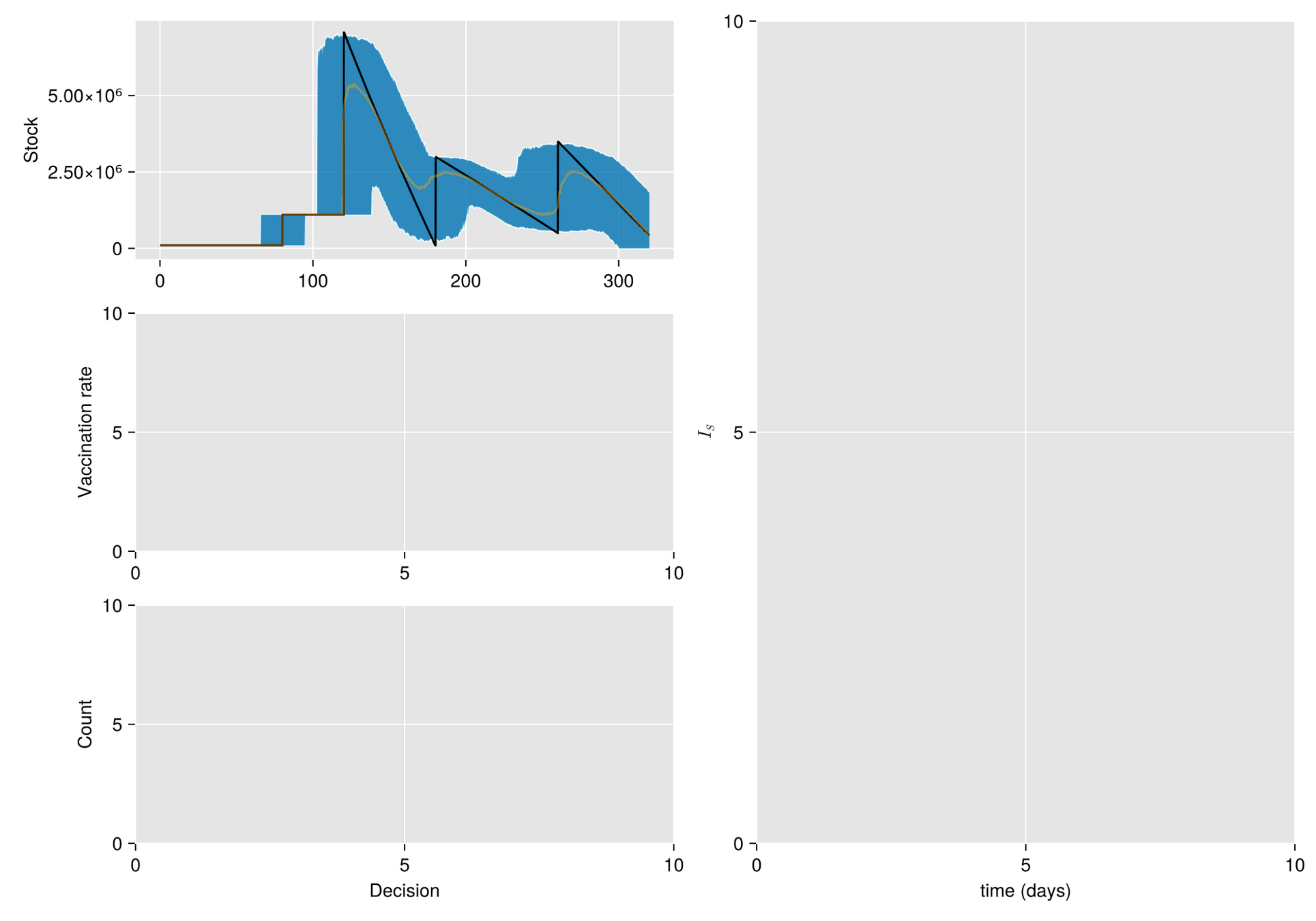

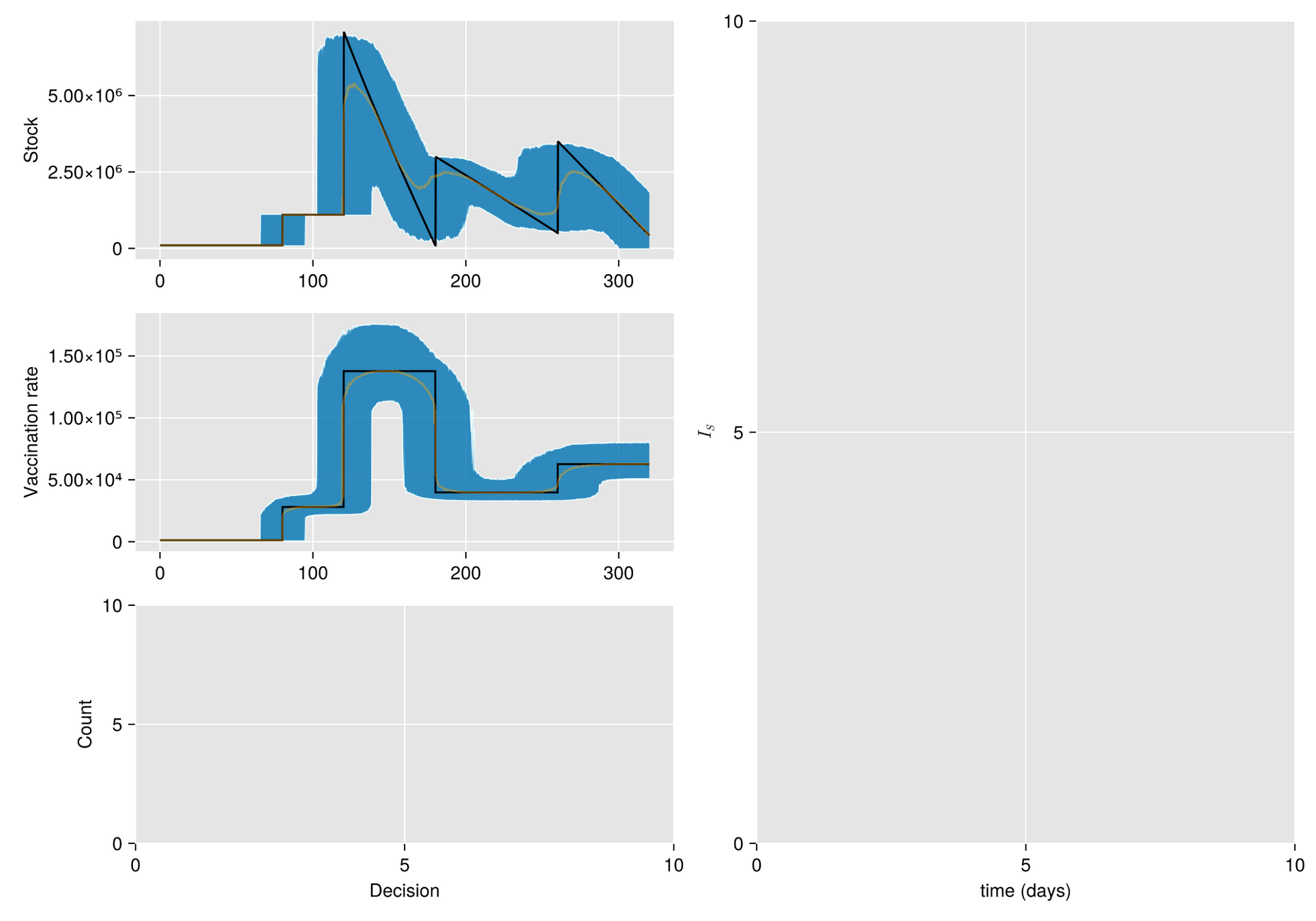

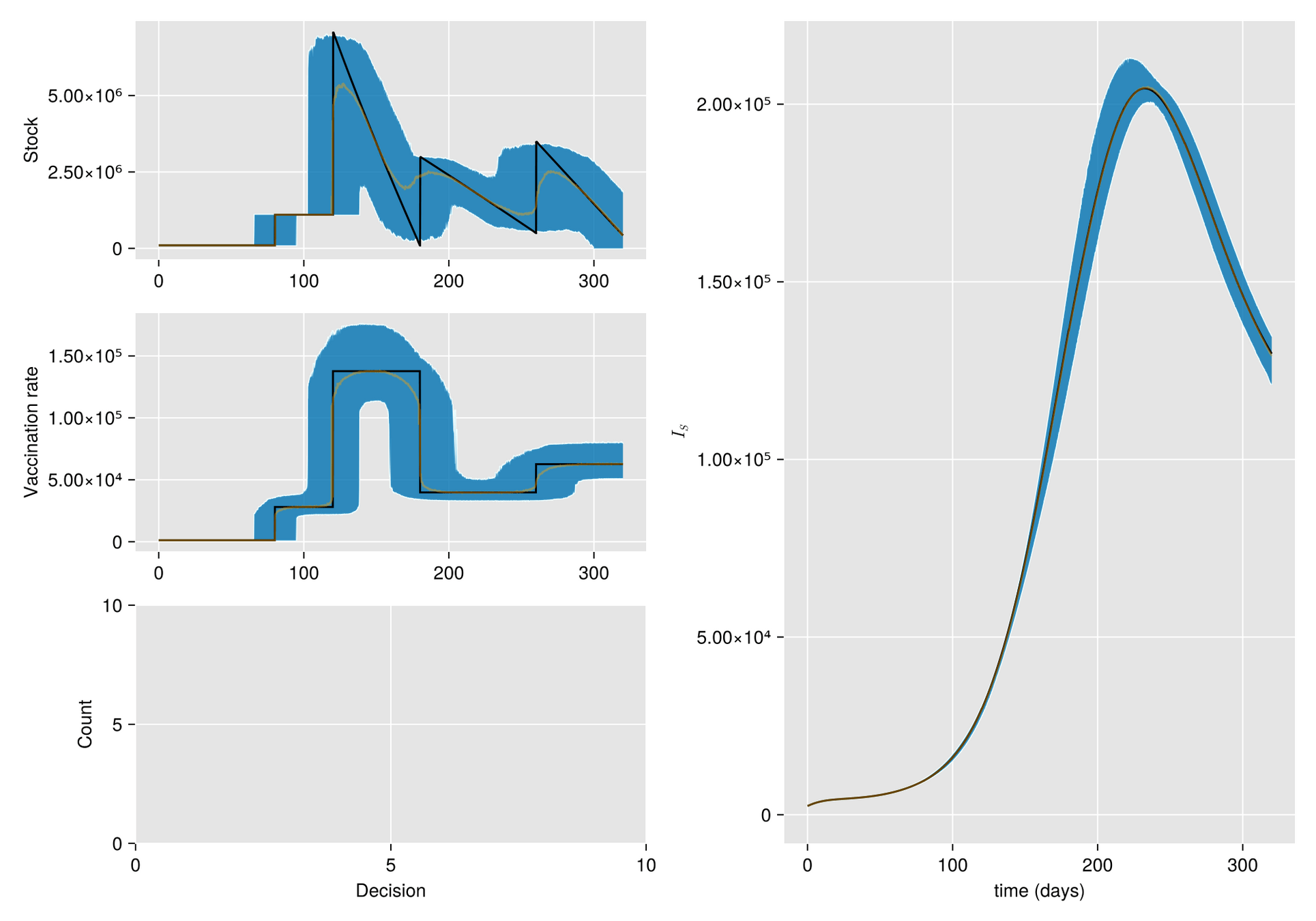

Given a vaccine shipment calendar, describe stock management with a backup protocol and quantify the random fluctuations due to schedule or quantity.
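As a rough illustration of the stock bookkeeping described above, the following Python sketch perturbs each planned delivery with a random delay and a random quantity factor, then tracks the daily stock. It is not the authors' model: the name `simulate_stock`, the uniform delay, the Gaussian quantity noise, and the reading of the backup protocol as a fixed reserve that is never dispensed are all assumptions made for this example.

```python
import random


def simulate_stock(calendar, horizon, daily_demand, reserve=0.0,
                   max_delay=0, rel_noise=0.0, seed=0):
    """Track daily vaccine stock under perturbed deliveries (illustrative only).

    calendar     -- dict: planned arrival day -> planned number of doses
    reserve      -- doses held back as a backup (assumed protocol)
    max_delay    -- each shipment is late by 0..max_delay days (uniform, assumed)
    rel_noise    -- relative std. dev. of the delivered quantity (Gaussian, assumed)
    """
    rng = random.Random(seed)

    # Perturb the planned calendar: random delay and random quantity.
    arrivals = {}
    for day, doses in calendar.items():
        delay = rng.randint(0, max_delay)
        factor = max(0.0, rng.gauss(1.0, rel_noise))
        arrivals[day + delay] = arrivals.get(day + delay, 0.0) + doses * factor

    stock, history = 0.0, []
    for day in range(horizon):
        stock += arrivals.get(day, 0.0)
        usable = max(0.0, stock - reserve)   # never dip into the reserve
        used = min(daily_demand, usable)
        stock -= used
        history.append((day, stock, used))
    return history


# Example: two planned shipments, a 10-dose reserve, no randomness.
history = simulate_stock({0: 100, 5: 100}, horizon=10,
                         daily_demand=30, reserve=10)
```

Running the function over many seeds with `max_delay` or `rel_noise` greater than zero gives an empirical picture of how schedule and quantity fluctuations spread the stock level.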

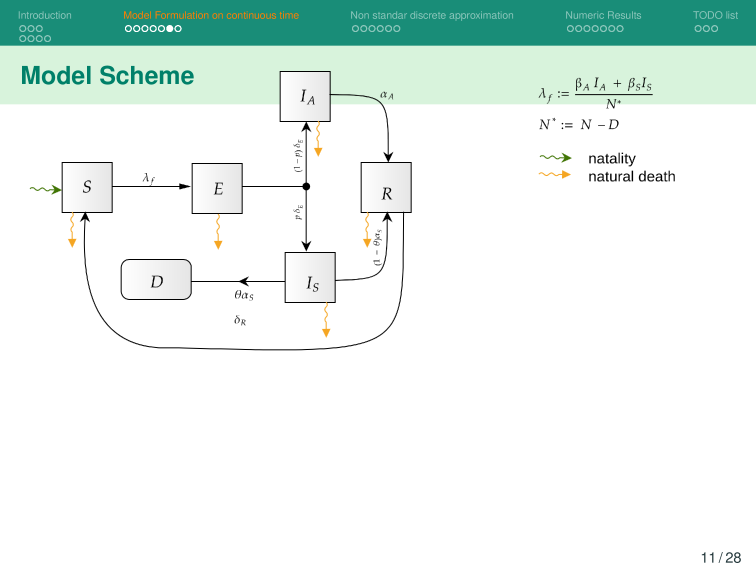

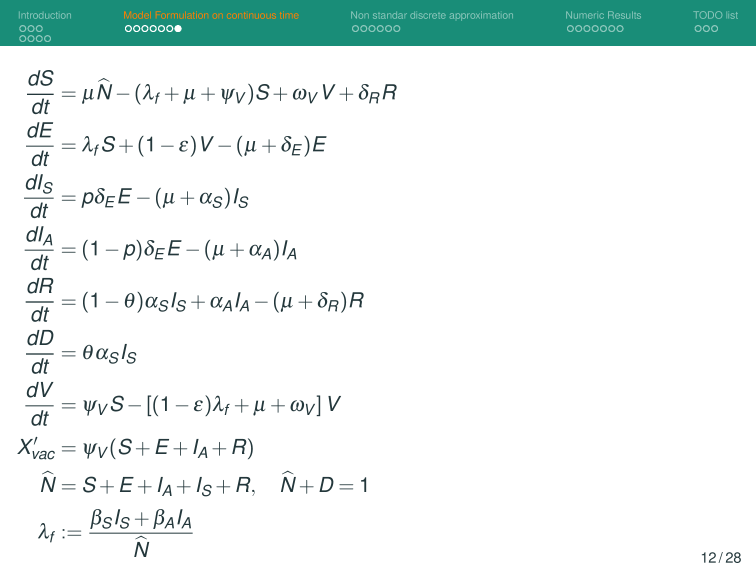

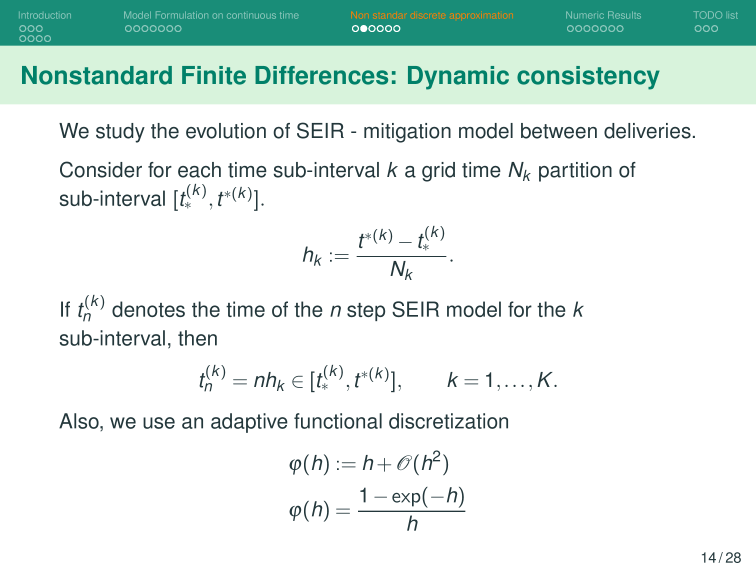

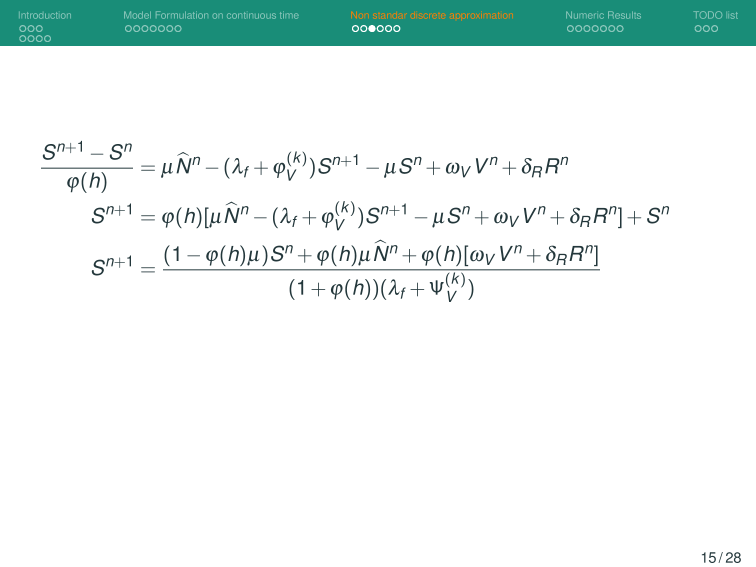

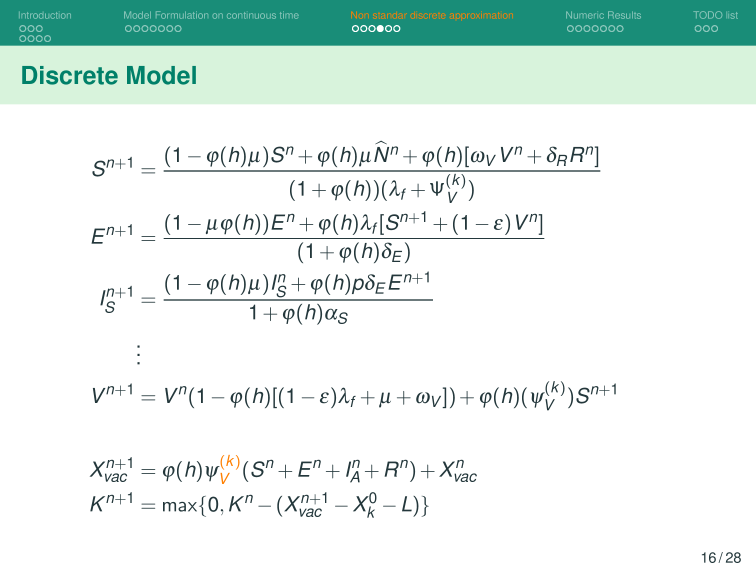

Then incorporate these dynamics into an ODE system that describes the disease, and evaluate its response accordingly.
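To make the coupling concrete, here is a minimal sketch that feeds a stock variable into a disease model. The slides do not specify the compartments, so this example assumes a plain SIR model with a vaccinated class, a forward-Euler integrator, and a vaccination flow capped by the doses in stock. The function name and all parameters are hypothetical.

```python
def simulate_sir_with_stock(beta, gamma, vacc_rate, deliveries, days,
                            dt=0.1, s0=0.99, i0=0.01):
    """Forward-Euler SIR model whose vaccination is limited by stock.

    deliveries -- dict: day -> doses delivered, as a fraction of the population
    vacc_rate  -- maximum per-day vaccination rate of susceptibles
    Returns a list of (day, S, I, R, V, stock) tuples, one per day.
    """
    s, i, r, v, stock = s0, i0, 0.0, 0.0, 0.0
    steps_per_day = round(1 / dt)
    trajectory = []
    for day in range(days):
        stock += deliveries.get(day, 0.0)      # shipment arrives
        for _ in range(steps_per_day):
            # Doses given in this step: limited by the rate and by the stock.
            doses = min(vacc_rate * s * dt, stock)
            new_infections = beta * s * i * dt
            recoveries = gamma * i * dt
            s -= new_infections + doses
            i += new_infections - recoveries
            r += recoveries
            v += doses
            stock -= doses
        trajectory.append((day + 1, s, i, r, v, stock))
    return trajectory


# Example: compare an outbreak with and without two deliveries.
no_vaccine = simulate_sir_with_stock(0.4, 0.1, 0.05, {}, days=100)
with_vaccine = simulate_sir_with_stock(0.4, 0.1, 0.05,
                                       {0: 0.3, 30: 0.3}, days=100)
```

Replacing the fixed `deliveries` dictionary with a randomly perturbed calendar is one way to study how delivery uncertainty propagates to the epidemic curve.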

Nonlinear control: HJB and DP

Given

Goal:

Design

to follow

s. t. optimize cost
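The formulas on this slide did not survive extraction, so the following is only a generic sketch of the dynamic programming (DP) idea, not the authors' formulation. It assumes a deterministic, finite-state, finite-horizon problem and applies the Bellman backward recursion, the discrete-time counterpart of the HJB equation. The toy tracking problem and every name in it are hypothetical.

```python
def backward_induction(states, actions, step, cost, terminal_cost, horizon):
    """Finite-horizon DP for a deterministic system (minimizes total cost).

    actions(x)       -- iterable of admissible actions in state x
    step(x, a)       -- next state
    cost(x, a)       -- running cost
    terminal_cost(x) -- cost at the final time
    Returns (value at time 0, list of per-time decision tables).
    """
    value = {x: terminal_cost(x) for x in states}
    policy = []
    for _ in range(horizon):                 # sweep backwards in time
        new_value, decision = {}, {}
        for x in states:
            best_a, best_q = None, float("inf")
            for a in actions(x):
                q = cost(x, a) + value[step(x, a)]   # Bellman recursion
                if q < best_q:
                    best_a, best_q = a, q
            new_value[x], decision[x] = best_q, best_a
        value = new_value
        policy.append(decision)
    policy.reverse()                         # policy[t][x] = action at time t
    return value, policy


# Toy tracking problem: steer an integer state towards the reference 3.
TARGET = 3
value, policy = backward_induction(
    states=range(5),
    actions=lambda x: [a for a in (-1, 0, 1) if 0 <= x + a <= 4],
    step=lambda x, a: x + a,
    cost=lambda x, a: (x - TARGET) ** 2 + 0.1 * abs(a),
    terminal_cost=lambda x: (x - TARGET) ** 2,
    horizon=4,
)
```

Here `policy[0][0]` is the first move from state 0 and `value[0]` is the optimal total cost from that state.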

Diagram labels: agent, action, state, reward.

Discounted return

Total return
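The slide formulas for these two quantities were lost, so the sketch below assumes the usual textbook definitions: the total return is the plain sum of rewards over an episode, and the discounted return weights the reward received k steps ahead by gamma**k, with 0 <= gamma <= 1. The function names are illustrative.

```python
def discounted_return(rewards, gamma):
    """Sum of gamma**k * rewards[k], computed backwards for stability."""
    g = 0.0
    for reward in reversed(rewards):
        g = reward + gamma * g
    return g


def total_return(rewards):
    """Undiscounted return: the special case gamma = 1."""
    return discounted_return(rewards, 1.0)


# Example: three unit rewards.
print(discounted_return([1, 1, 1], 0.5))  # 1 + 0.5 + 0.25 = 1.75
print(total_return([1, 1, 1]))            # 3.0
```

A discount factor below 1 keeps the return finite over long horizons and makes the agent favour rewards that arrive sooner.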

Dopamine Reward

Deterministic Control

https://slides.com/sauldiazinfantevelasco/code-6bf335/fullscreen

Thank you!!

SMUQ-2024

By Saul Diaz Infante Velasco
