Inventory management of scarce vaccine for epidemic control:

Yofre H. Garcia

Saúl Diaz-Infante Velasco

Jesús Adolfo Minjárez Sosa

sauldiazinfante@gmail.com

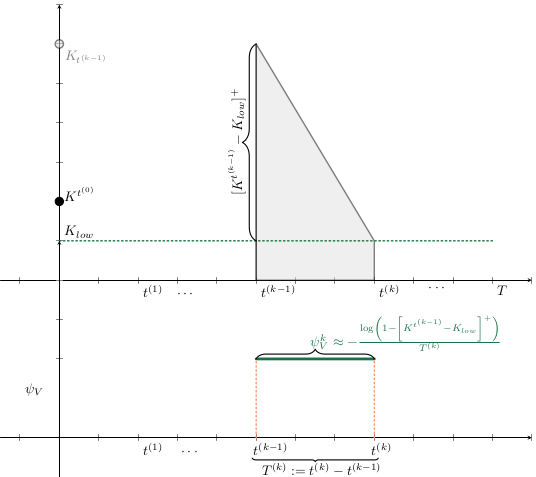

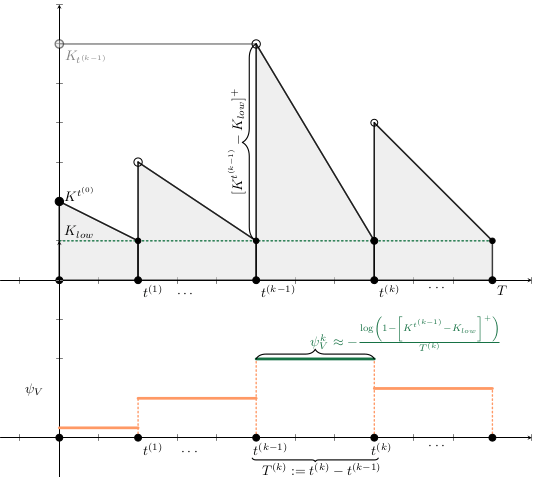

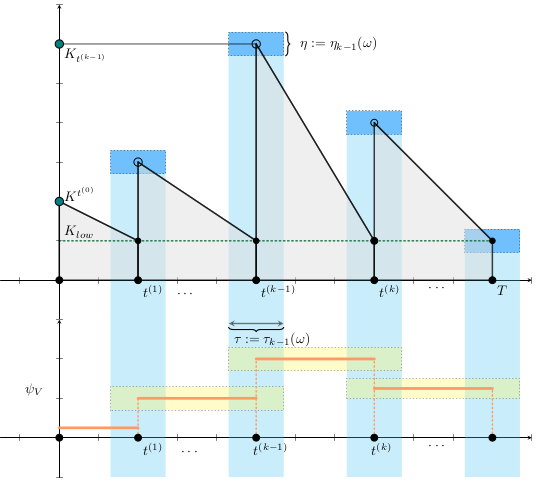

Uncertainty quantification of time for deliveries and order sizes based on a model of sequential decisions

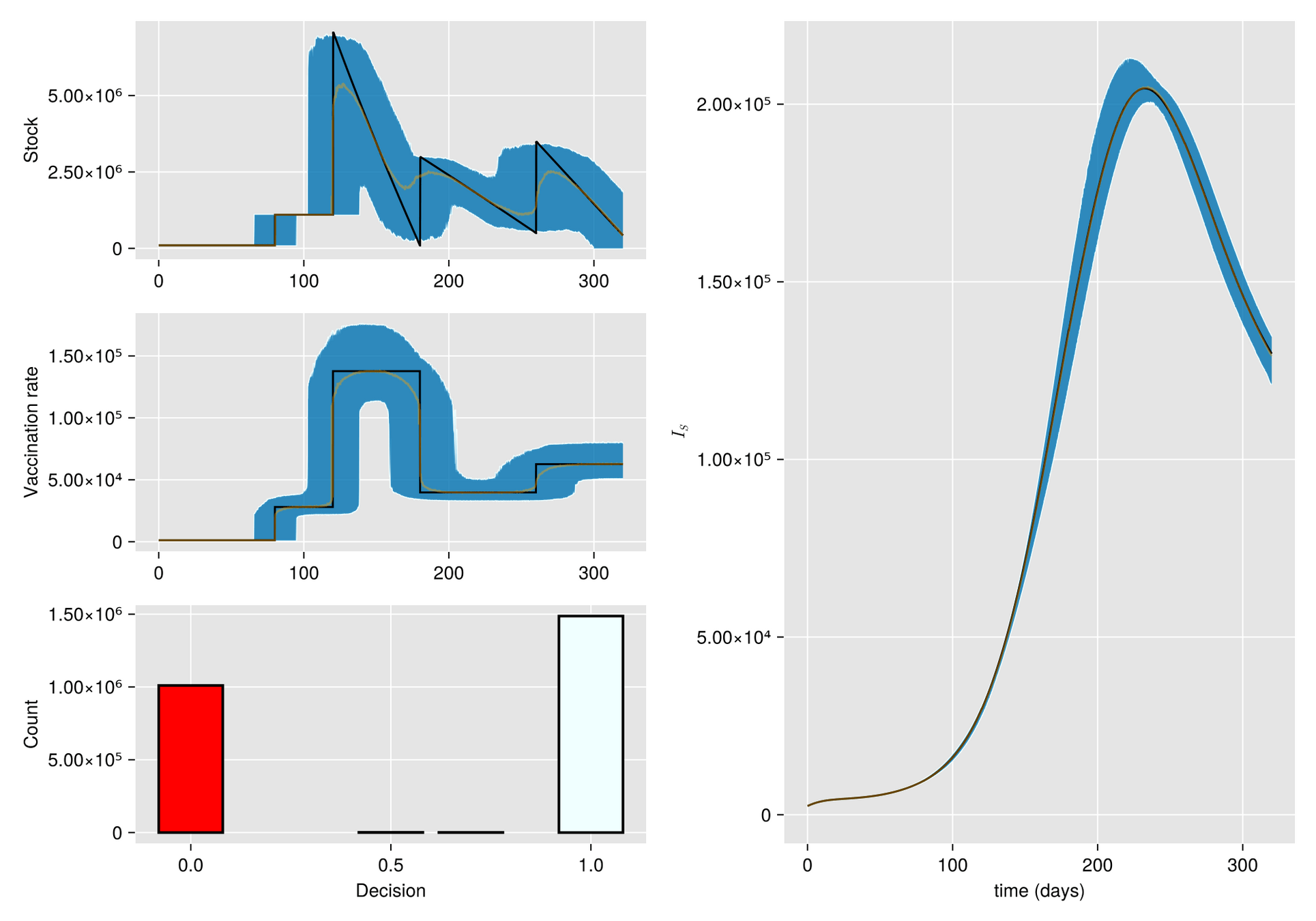

Argument. When there is a shortage of vaccines, sometimes the best response is to refrain from vaccination, at least for a while.

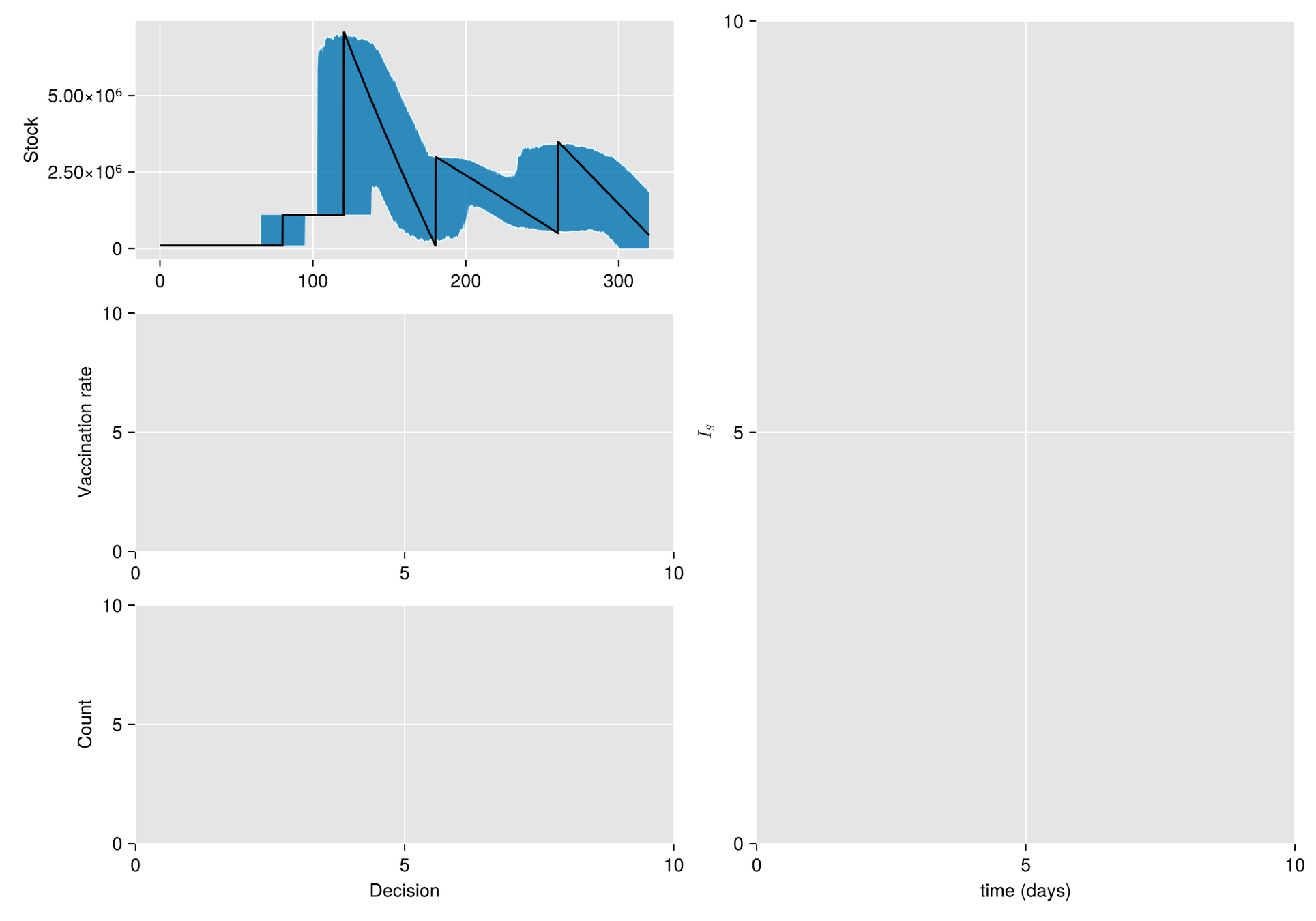

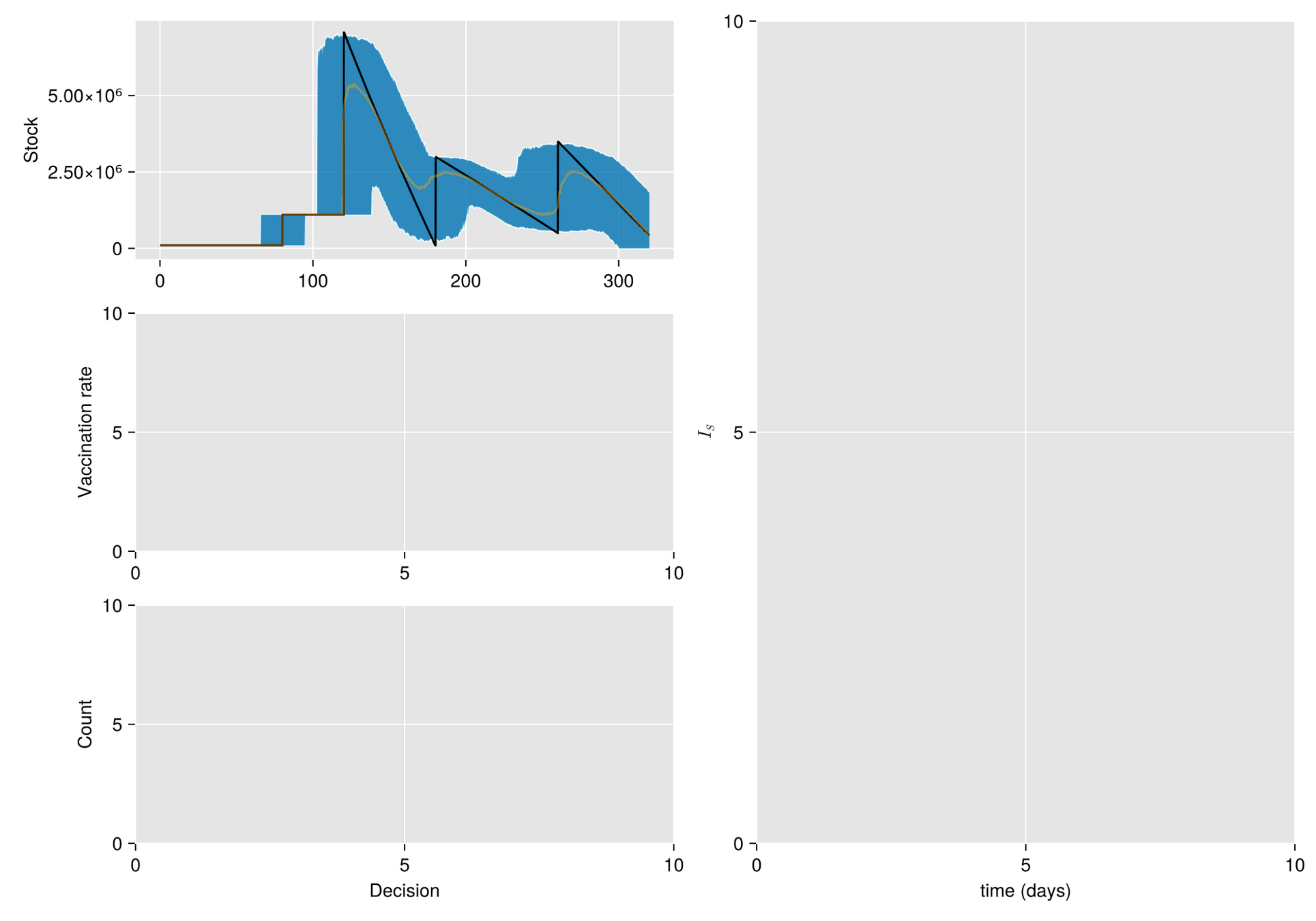

Hypothesis. Under these conditions, inventory management is subject to significant random fluctuations.

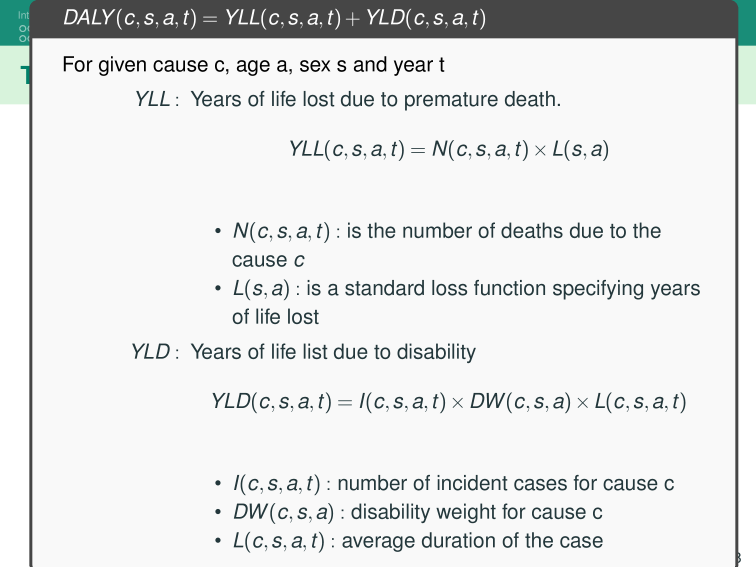

Objective. Optimize the management of vaccine inventory and assess its effect on a vaccination campaign.

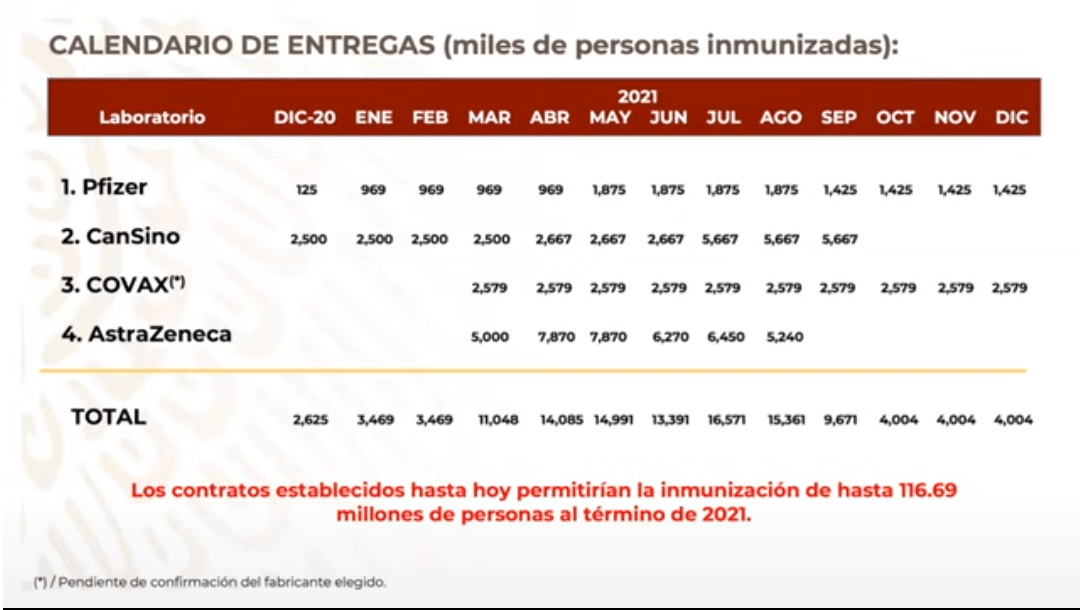

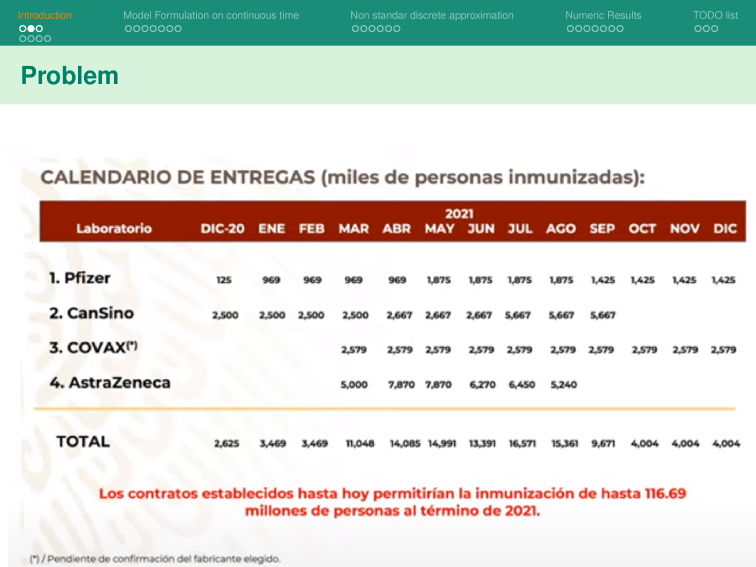

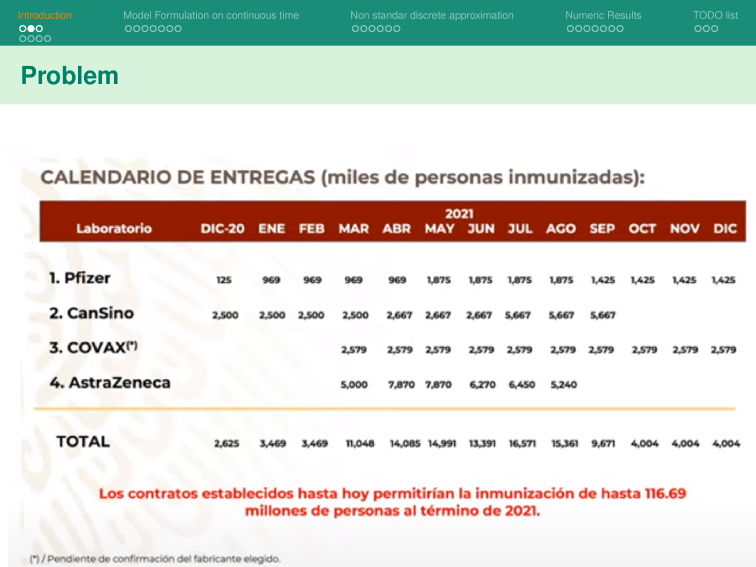

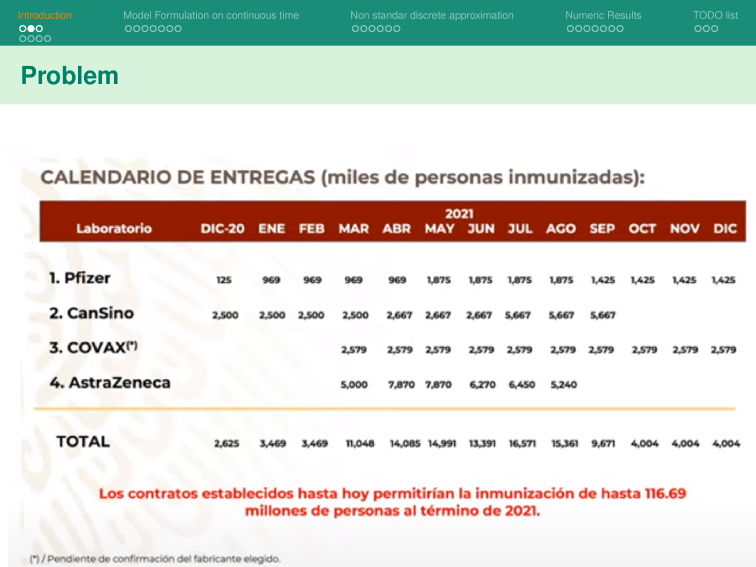

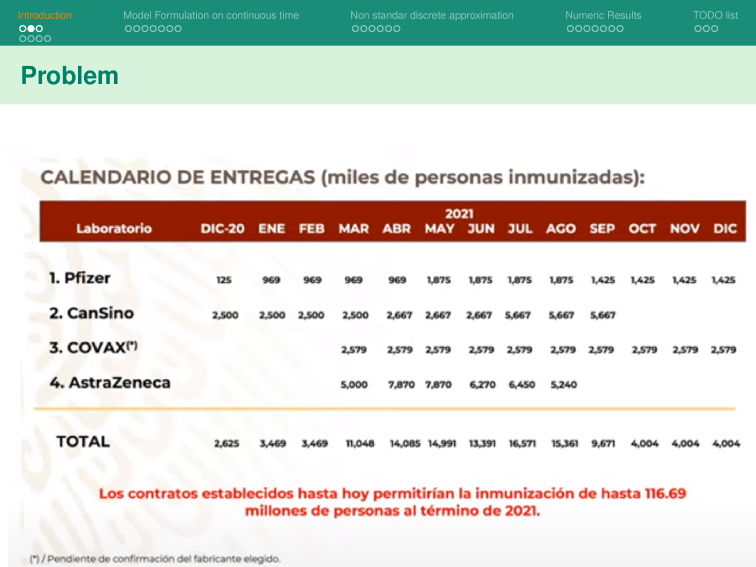

On October 13, 2020, the Mexican government announced a delivery plan for vaccines from Pfizer-BioNTech and other companies as part of the COVID-19 vaccination campaign.

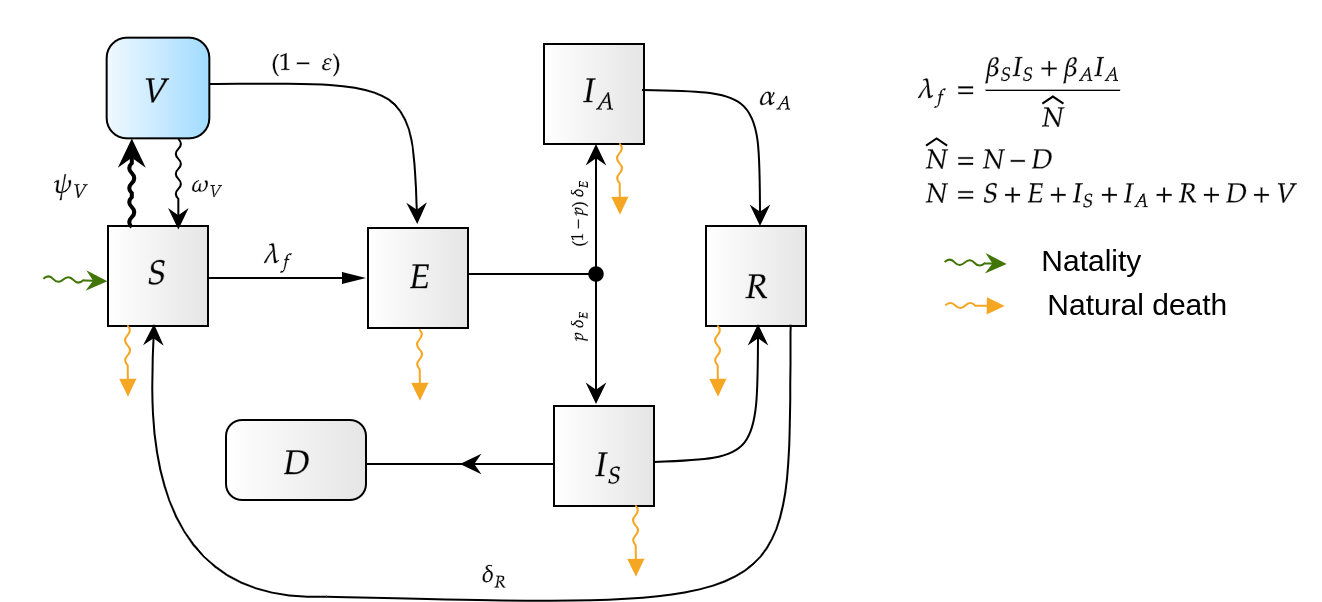

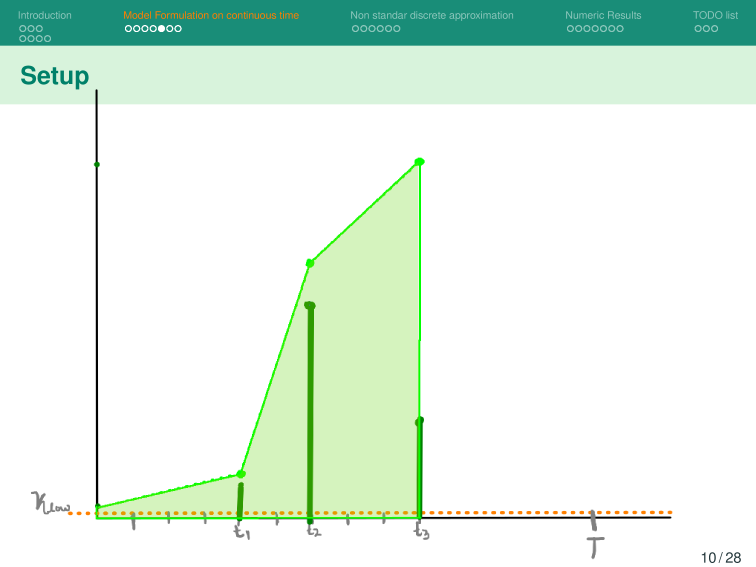

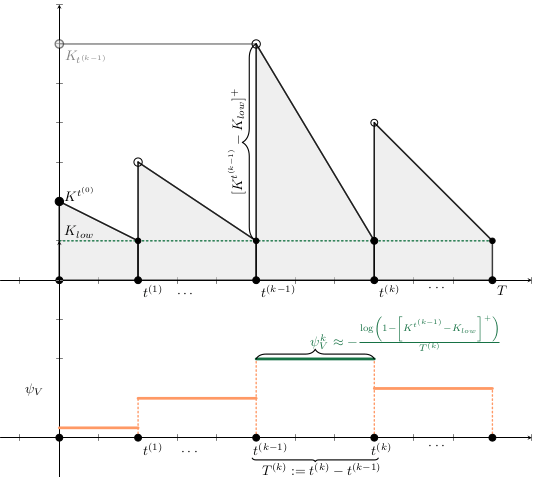

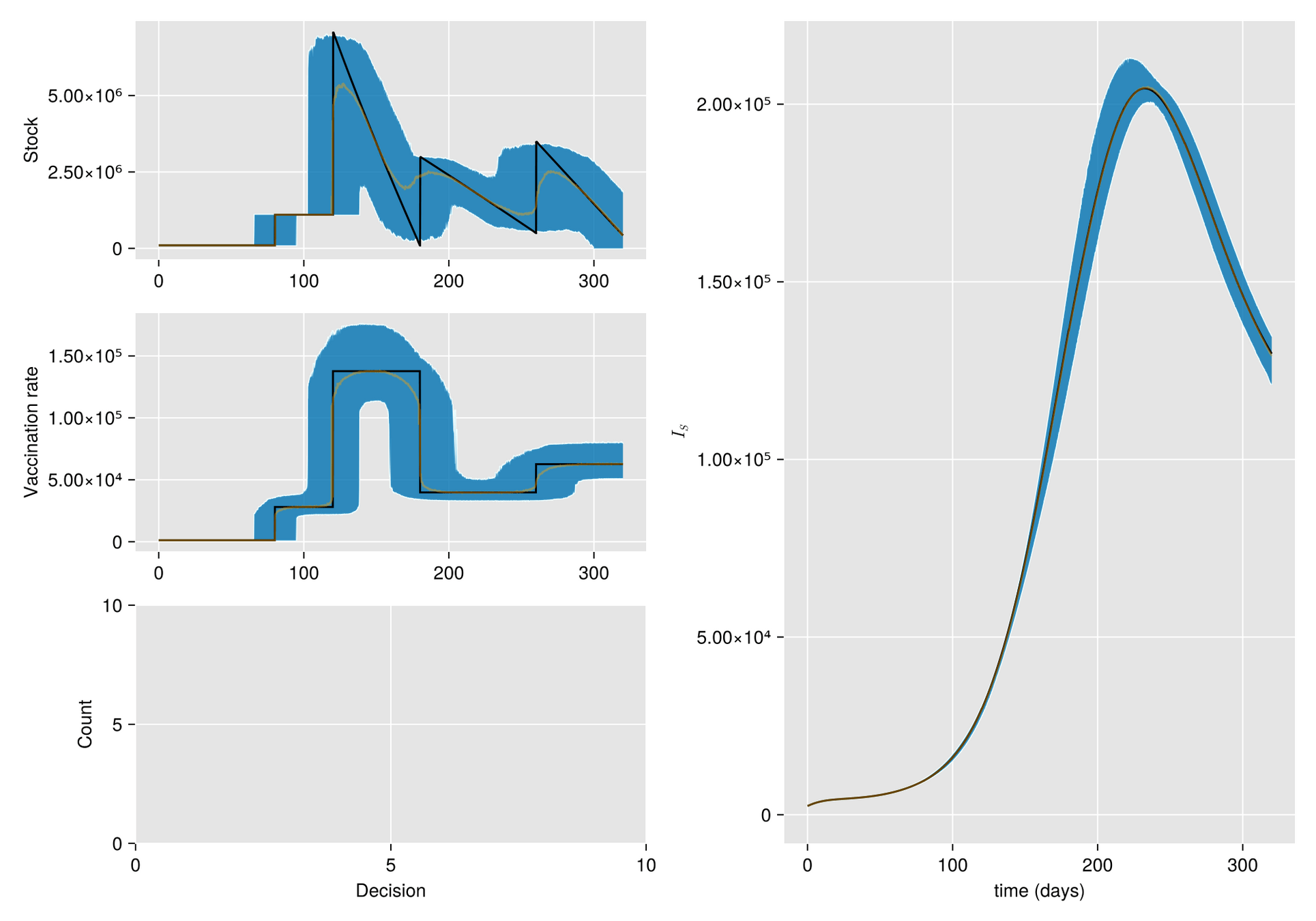

Methods. Given a vaccine shipping schedule, we describe stock management with a backup protocol and quantify the random fluctuations due to a program under high uncertainty.
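As a rough illustration of the idea in the Methods statement, the sketch below simulates a vaccine stock fed by a planned shipping schedule whose arrival days and sizes are randomly perturbed, and then summarizes the fluctuations with a 5%-95% band. Everything here is an assumption made for illustration: the function names `simulate_stock` and `stock_band`, the uniform delay, the Gaussian size noise, and the constant daily demand. The backup protocol is not modeled.

```python
import random


def simulate_stock(schedule, daily_demand, horizon, rng, delay_max=3, size_cv=0.2):
    """Simulate one stock trajectory under uncertain deliveries (illustrative only).

    schedule: list of (planned_day, planned_size) pairs.
    Each delivery arrives 0..delay_max days late (uniform, assumed) and its
    size is perturbed by Gaussian noise with coefficient of variation size_cv.
    """
    arrivals = {}
    for day, size in schedule:
        actual_day = day + rng.randint(0, delay_max)
        actual_size = max(0.0, rng.gauss(size, size_cv * size))
        arrivals[actual_day] = arrivals.get(actual_day, 0.0) + actual_size

    stock, path = 0.0, []
    for t in range(horizon):
        stock += arrivals.get(t, 0.0)
        used = min(stock, daily_demand)  # cannot administer more doses than are in stock
        stock -= used
        path.append(stock)
    return path


def stock_band(schedule, daily_demand, horizon, n_paths=500, seed=0):
    """Return the empirical 5% and 95% quantiles of the stock for each day."""
    rng = random.Random(seed)
    paths = [simulate_stock(schedule, daily_demand, horizon, rng) for _ in range(n_paths)]
    lower, upper = [], []
    for t in range(horizon):
        values = sorted(p[t] for p in paths)
        lower.append(values[int(0.05 * (n_paths - 1))])
        upper.append(values[int(0.95 * (n_paths - 1))])
    return lower, upper
```

Calling `stock_band([(0, 1000.0), (14, 1000.0)], 60.0, 30)` gives two lists of length 30; the gap between them is one simple way to visualize how delivery uncertainty propagates to the stock level.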

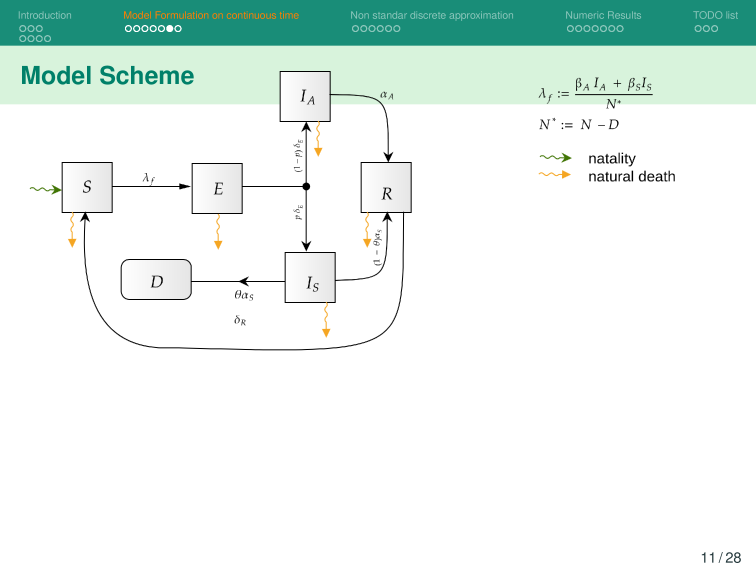

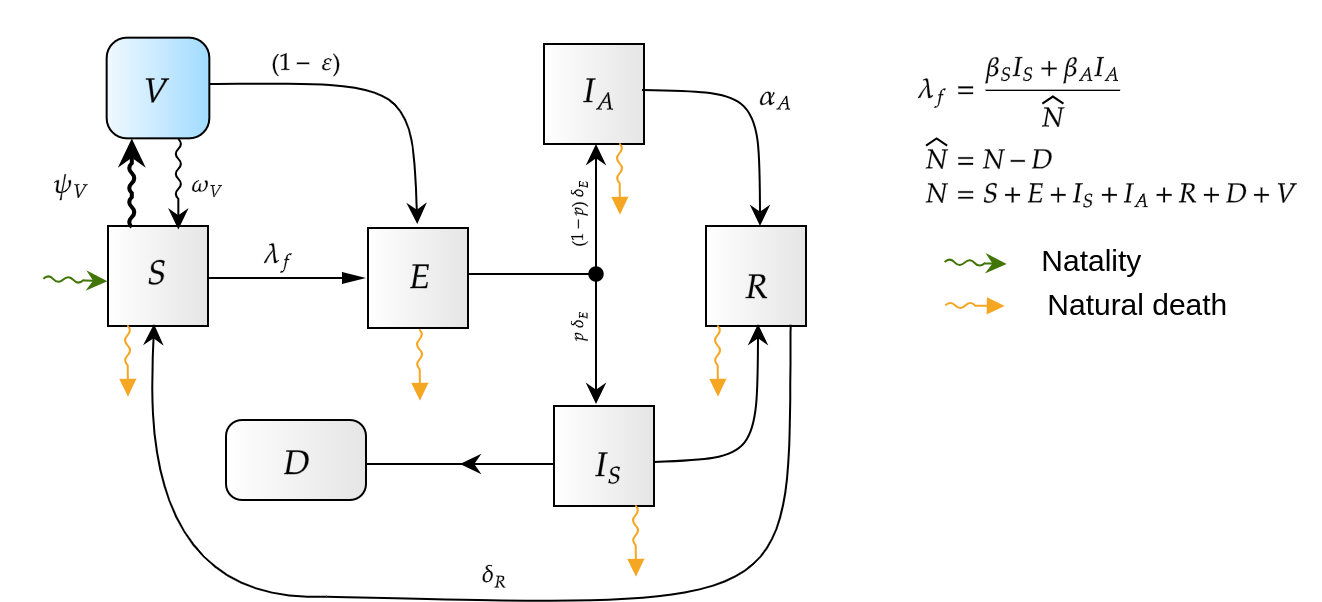

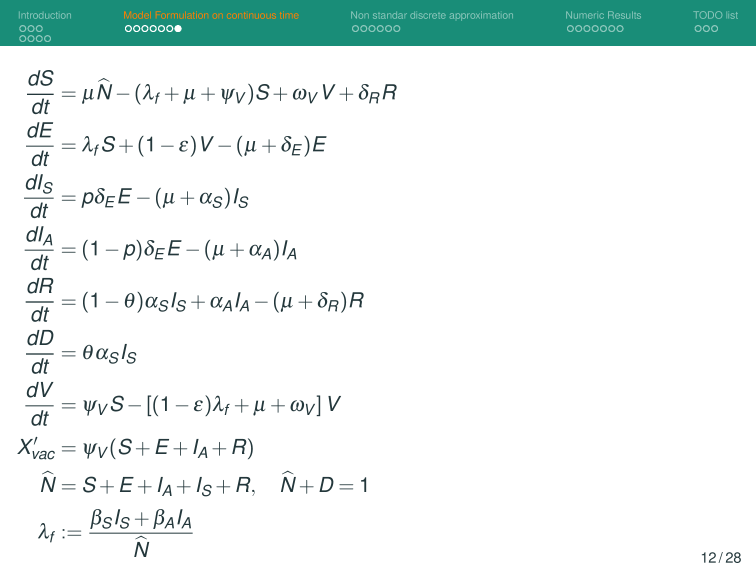

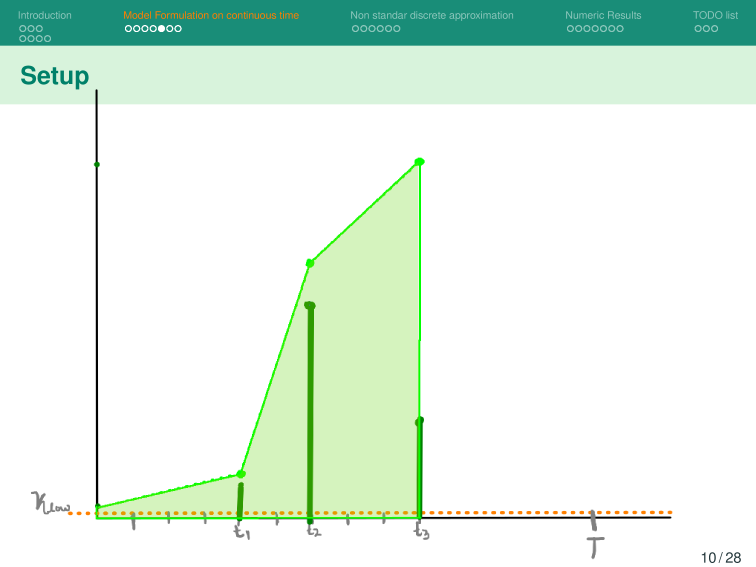

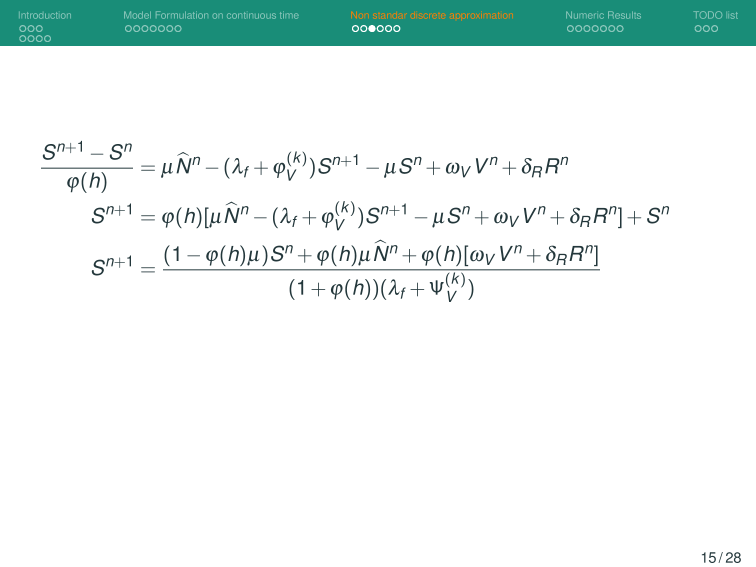

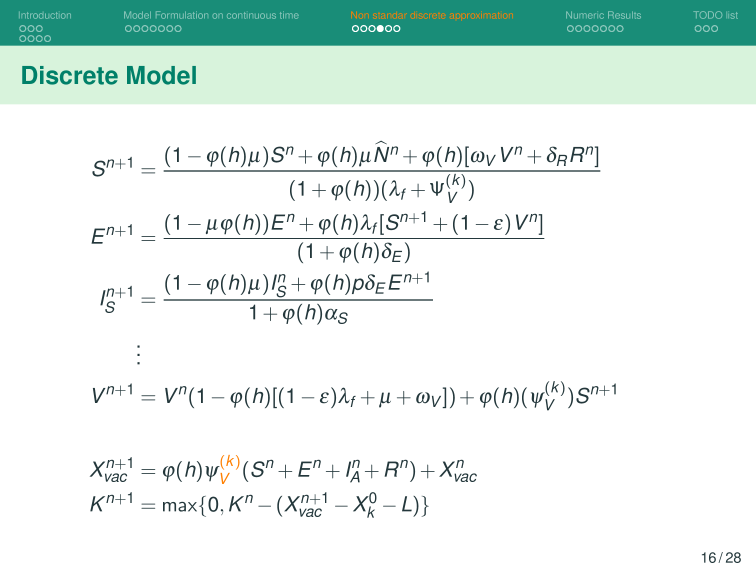

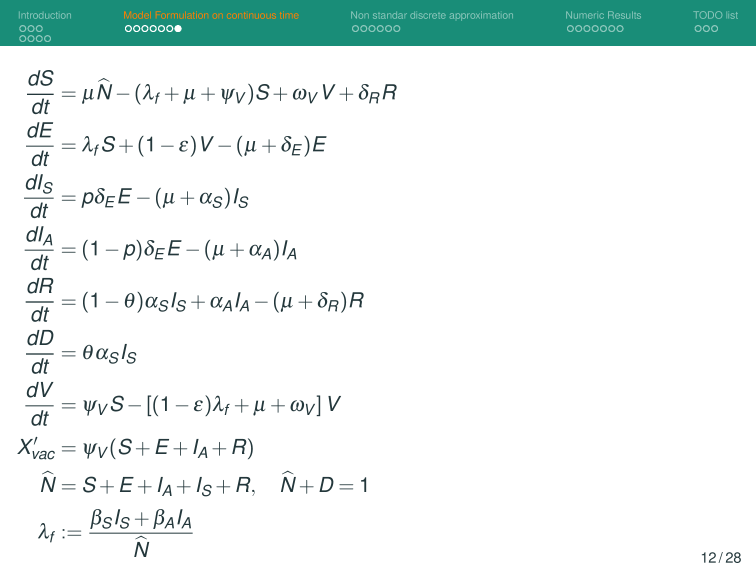

Then, we incorporate these dynamics into a system of ODEs that describes the disease and evaluate its response.
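To make the coupling concrete, here is a minimal sketch of an epidemic ODE system with a vaccination term, integrated with forward Euler. It is not the model from the talk: the compartments (S, I, R, V), the parameter names `beta`, `gamma`, `vaccination_rate`, and the constant vaccination rate are all assumptions. In a stock-aware version, the constant rate would be replaced by one that depends on the available inventory.

```python
def simulate_sirv(beta, gamma, vaccination_rate, days, dt=0.1, i0=1e-4):
    """Forward-Euler integration of a normalized SIR model with vaccination.

    Assumed equations (population fractions):
        S' = -beta*S*I - vaccination_rate*S
        I' =  beta*S*I - gamma*I
        R' =  gamma*I
        V' =  vaccination_rate*S
    Returns the final (S, I, R, V).
    """
    s, i, r, v = 1.0 - i0, i0, 0.0, 0.0
    steps = int(round(days / dt))
    for _ in range(steps):
        new_infections = beta * s * i
        ds = -new_infections - vaccination_rate * s
        di = new_infections - gamma * i
        dr = gamma * i
        dv = vaccination_rate * s
        s, i, r, v = s + dt * ds, i + dt * di, r + dt * dr, v + dt * dv
    return s, i, r, v


# Compare the outbreak with and without vaccination (illustrative parameters).
no_vaccine = simulate_sirv(beta=0.3, gamma=0.1, vaccination_rate=0.0, days=365)
with_vaccine = simulate_sirv(beta=0.3, gamma=0.1, vaccination_rate=0.0033, days=365)
```

Comparing `I + R` at the end of both runs gives the fraction of the population ever infected under each scenario.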

Nonlinear control: HJB and DP

Given

Goal:

Design

to follow

s. t. optimize cost

Agent

Nonlinear control: HJB and DP

Bellman optimality principle

Control Problem

s.t.

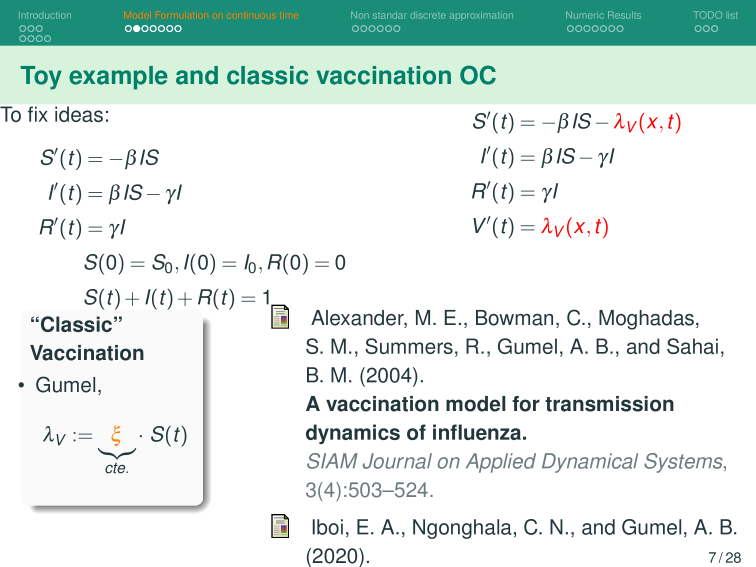

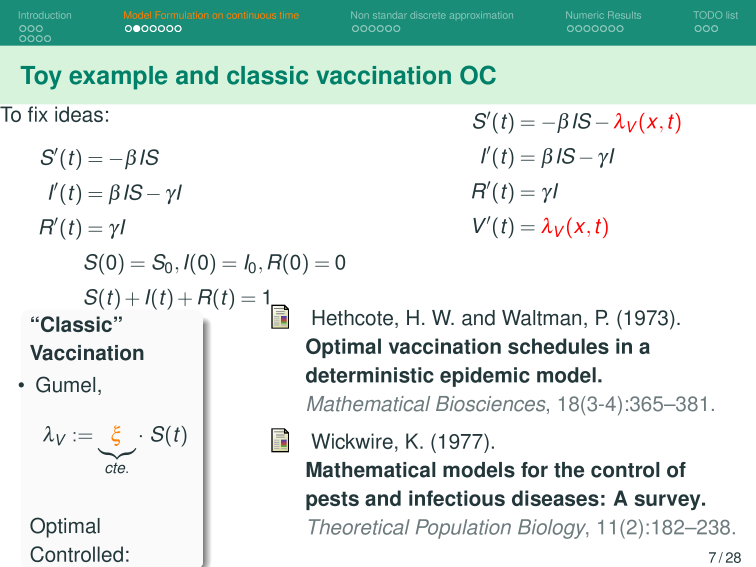

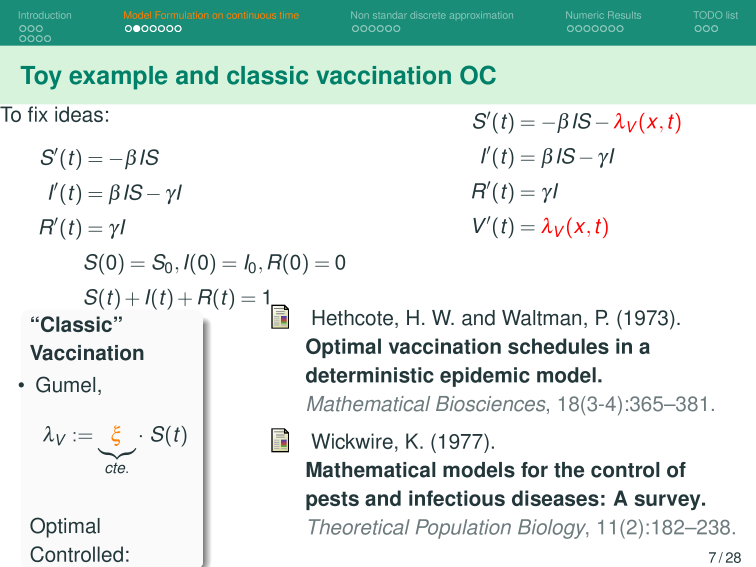

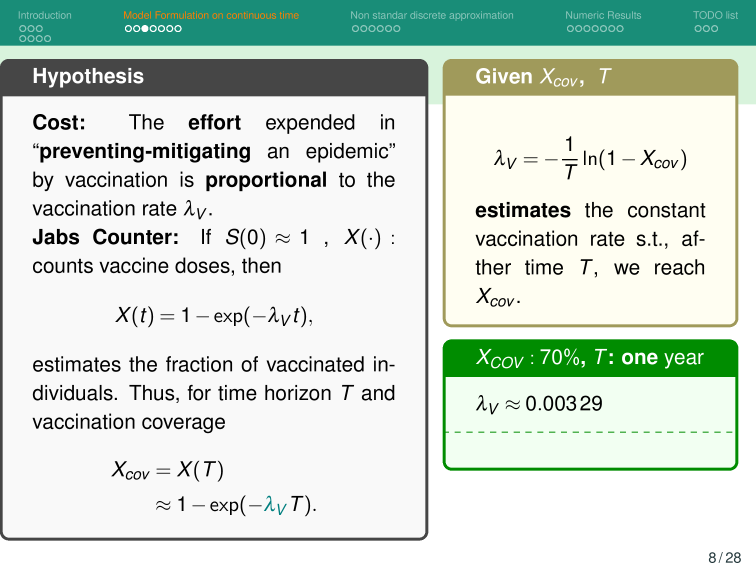
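Bellman's optimality principle can be illustrated on a toy finite-horizon stock problem solved by backward induction. The problem below is hypothetical and much simpler than the control problem in the talk: the stock is an integer, deliveries are known in advance, the benefit of administering `a` doses is `sqrt(a)` (an assumed diminishing return), and unused stock pays a linear holding cost. The function name `solve_dp` is also an assumption.

```python
import math


def solve_dp(deliveries, s_max, holding_cost=0.1):
    """Backward induction for a toy vaccine-stock problem (illustrative only).

    State:   integer stock s in 0..s_max.
    Action:  doses a in 0..s administered in the current period.
    Reward:  sqrt(a) - holding_cost * (s - a).
    Next:    s' = min(s - a + deliveries[t], s_max).
    Returns the value table V[t][s] and the greedy policy[t][s].
    """
    horizon = len(deliveries)
    value = [[0.0] * (s_max + 1) for _ in range(horizon + 1)]  # terminal value is zero
    policy = [[0] * (s_max + 1) for _ in range(horizon)]
    for t in range(horizon - 1, -1, -1):
        for s in range(s_max + 1):
            best_value, best_action = -math.inf, 0
            for a in range(s + 1):
                s_next = min(s - a + deliveries[t], s_max)
                # Bellman recursion: stage reward plus optimal value of the tail problem.
                candidate = math.sqrt(a) - holding_cost * (s - a) + value[t + 1][s_next]
                if candidate > best_value:
                    best_value, best_action = candidate, a
            value[t][s], policy[t][s] = best_value, best_action
    return value, policy
```

The table has `(horizon + 1) * (s_max + 1)` entries, and the inner loop adds a factor of up to `s_max + 1` actions. Adding state variables multiplies the table size, which is the curse of dimensionality that motivates approximate methods.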

The effort invested in preventing or mitigating an epidemic through vaccination is proportional to the vaccination rate.

Let us assume at the beginning of the outbreak:

Then we estimate the number of vaccines with

Then, for a vaccination campaign, let:

The estimated population of Hermosillo, Sonora, in 2024 is 930,000.

So to vaccinate 70% of this population in one year:

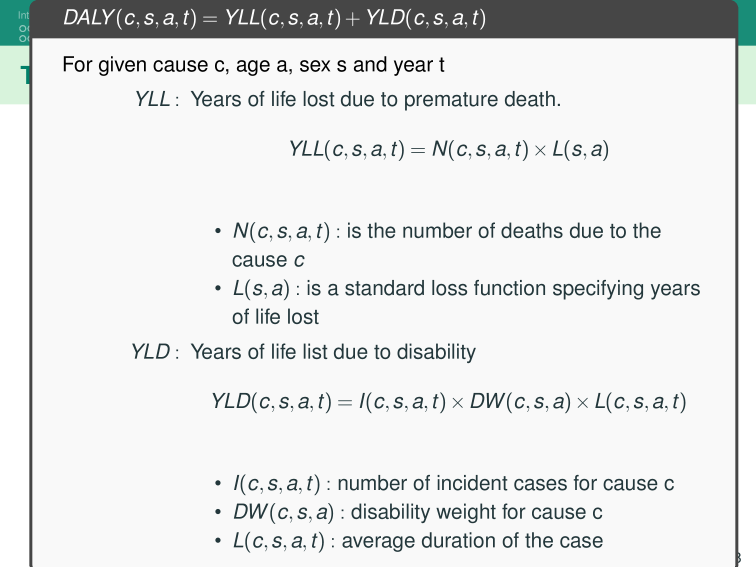
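The Hermosillo target can be worked out numerically. The sketch below assumes one dose per person and, for the rate, assumes that the unvaccinated fraction decays exponentially at a constant rate, so that coverage after T days is 1 - exp(-rate * T). That relation is an assumption made here for illustration; the function name `campaign_targets` is hypothetical.

```python
import math


def campaign_targets(population, coverage, horizon_days):
    """Return (total doses, doses per day, constant vaccination rate per day).

    Assumes one dose per person and exponential depletion of the
    unvaccinated population: coverage = 1 - exp(-rate * horizon_days).
    """
    doses = round(population * coverage)
    doses_per_day = doses / horizon_days
    rate = -math.log(1.0 - coverage) / horizon_days
    return doses, doses_per_day, rate


# Hermosillo, Sonora: 930,000 people, 70% coverage in one year.
doses, doses_per_day, rate = campaign_targets(930_000, 0.70, 365)
print(doses)                    # 651000
print(round(doses_per_day, 2))  # 1783.56
print(round(rate, 4))           # 0.0033
```

Under these assumptions, the campaign needs 651,000 doses, about 1,784 doses per day on average, or a constant rate of roughly 0.33% of the remaining unvaccinated population per day.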

Base Model

Nonlinear control: HJB and DP

Given

Agent

Objective:

Design

to follow

s. t. optimize cost

Diagram labels: Agent, action, state, reward (labeled "Dopamine reward" in a second panel).

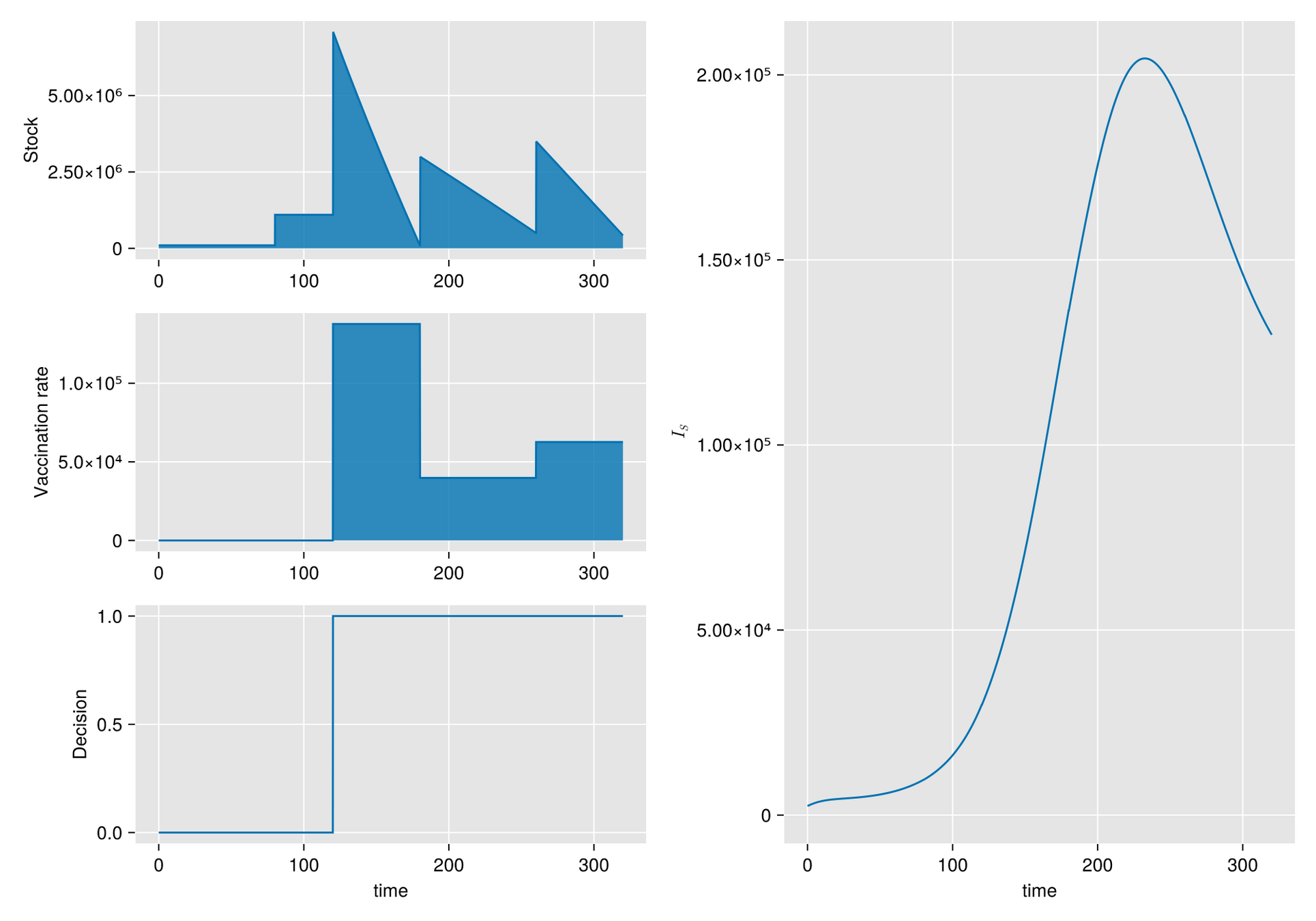

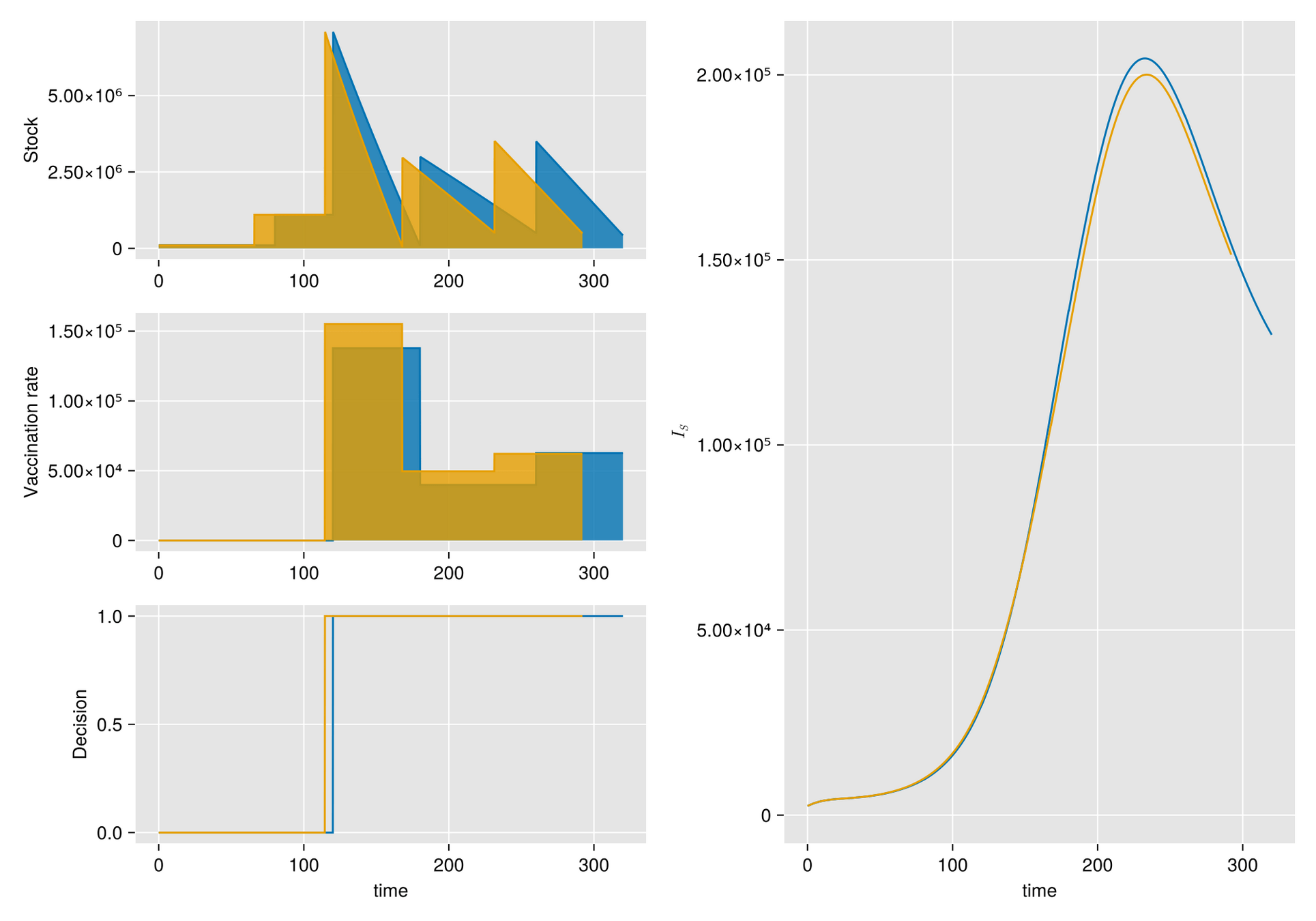

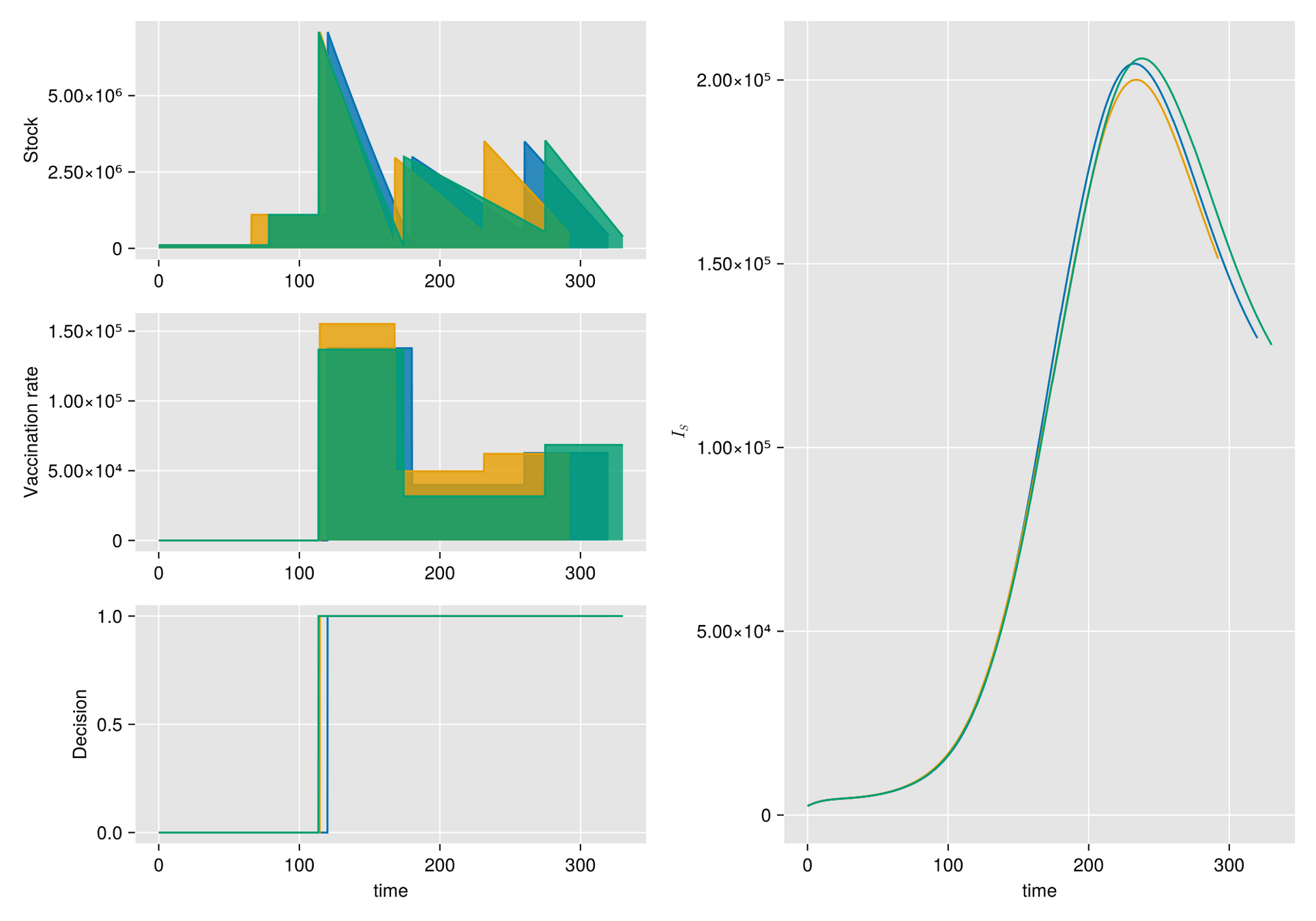

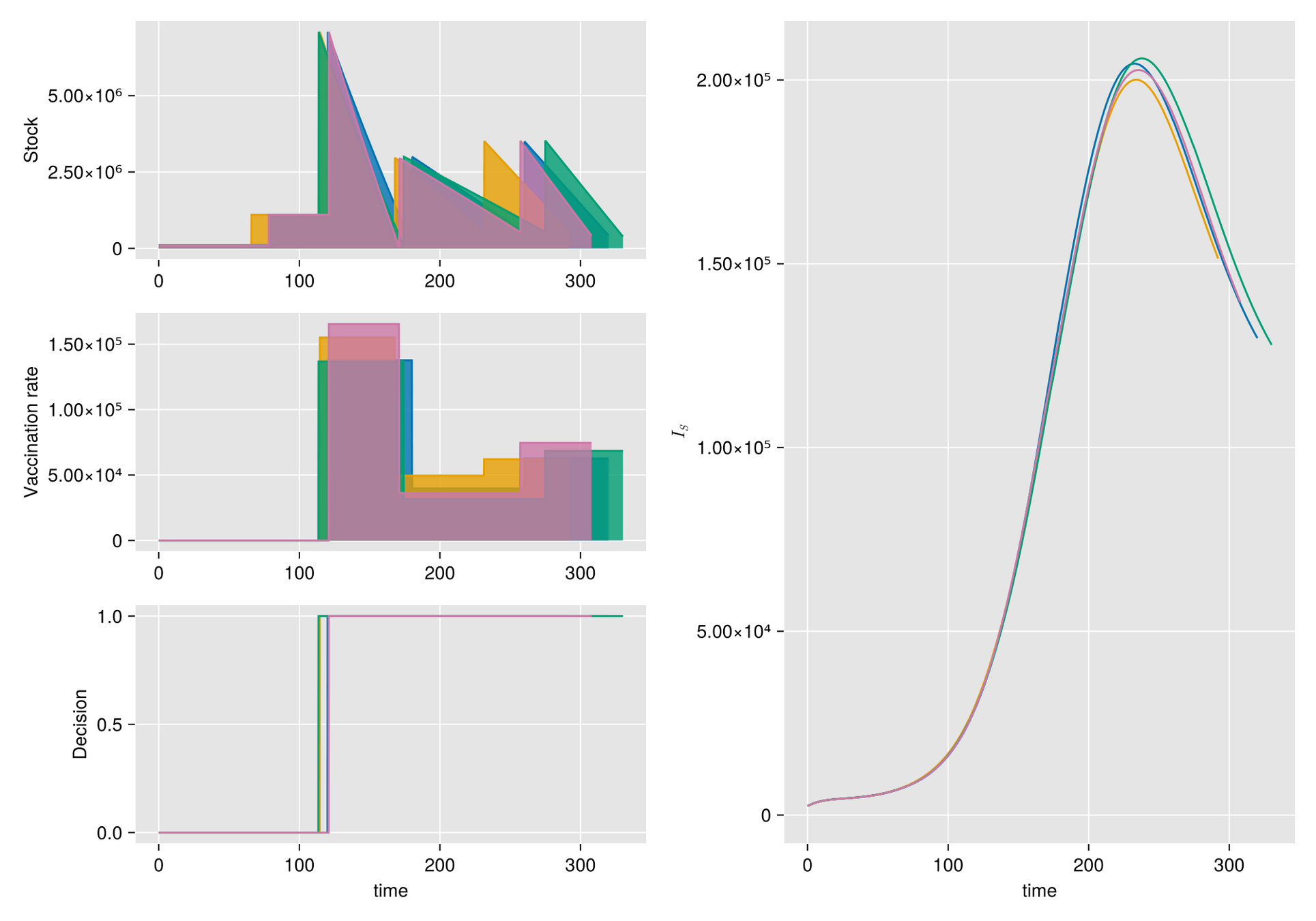

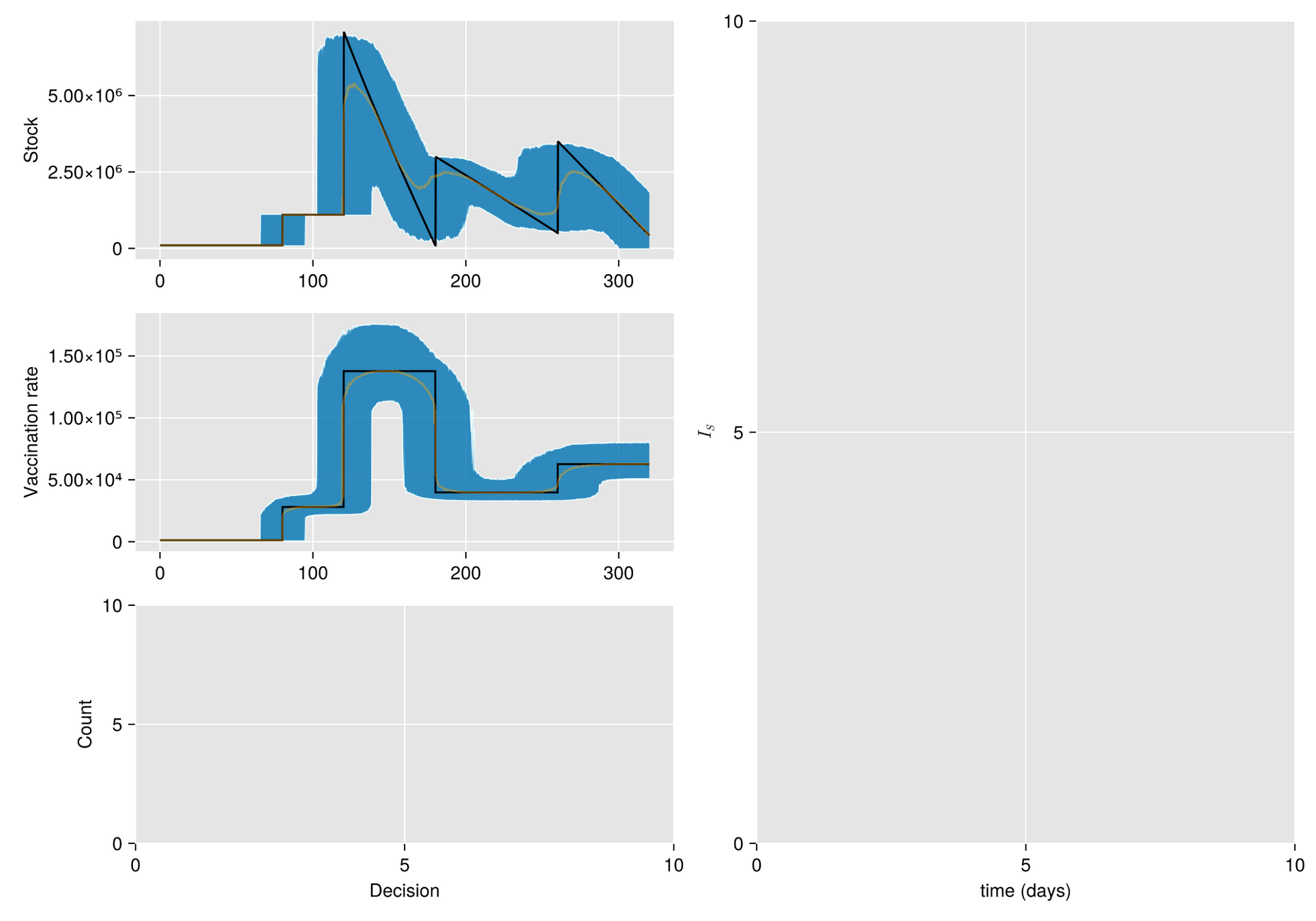

Deterministic Control

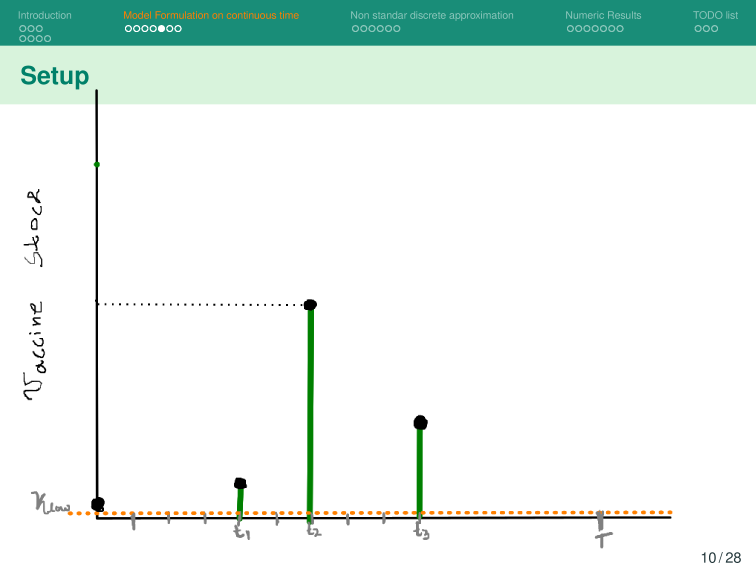

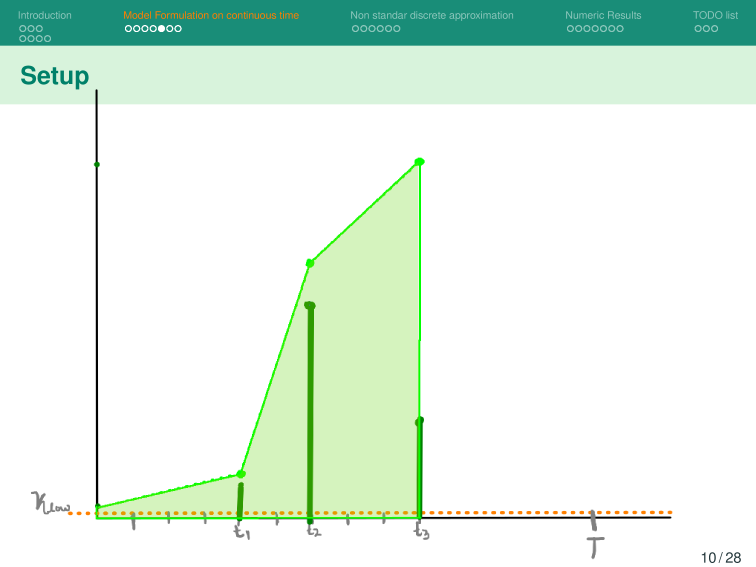

Stock

Vaccination rate

Decision

HJB (Dynamic Programming): curse of dimensionality

HJB (Neuro-Dynamic Programming)

Bertsekas, Dimitri P. Abstract Dynamic Programming. Athena Scientific, Belmont, MA, 2013. viii+248 pp. ISBN: 978-1-886529-42-7.

Bertsekas, Dimitri P. Rollout, Policy Iteration, and Distributed Reinforcement Learning. Revised and updated second printing. Athena Sci. Optim. Comput. Ser. Athena Scientific, Belmont, MA, 2020. xiii+483 pp. ISBN: 978-1-886529-07-6.

Bertsekas, Dimitri P. Reinforcement Learning and Optimal Control. Athena Sci. Optim. Comput. Ser. Athena Scientific, Belmont, MA, 2019. xiv+373 pp. ISBN: 978-1-886529-39-7.

Powell, Warren B. Reinforcement Learning and Stochastic Optimization: A Unified Framework for Sequential Decisions. Wiley, United Kingdom, 2022.

Thank you!

Questions?

MexSIAM-2024

By Saul Diaz Infante Velasco
