Convolutional Neural Networks

Shen Shen

March 22, 2022

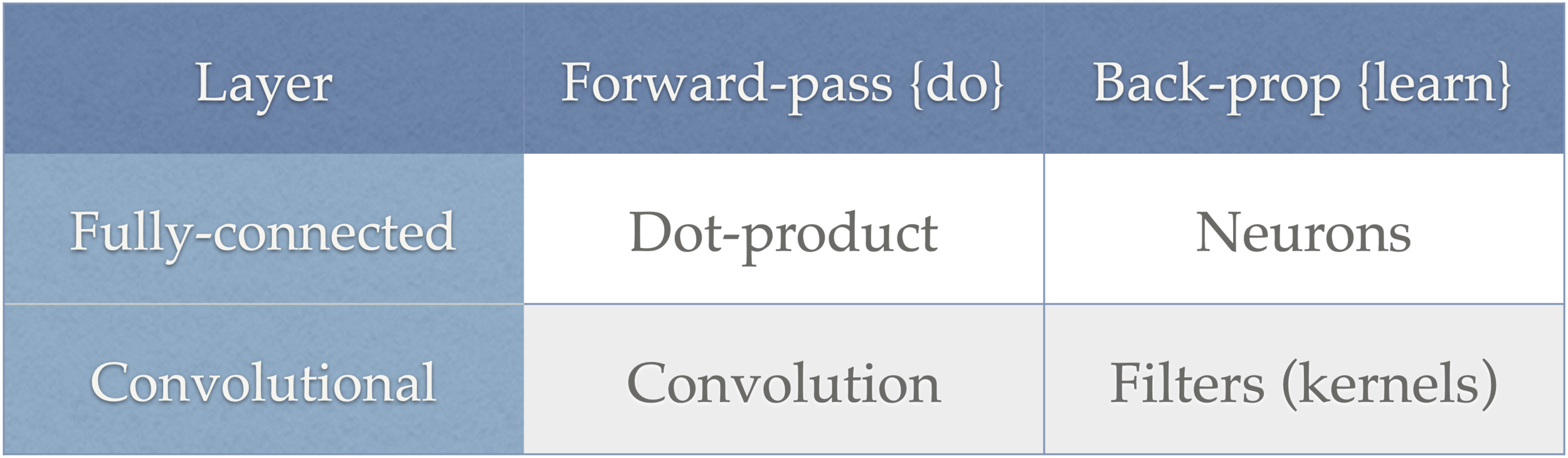

Recap: fully-connected networks

- layered structure

- fully-connected neurons

- dot-product

- nonlinear activation

- back-propagation

- gradient descent

- code one up in Python

[image credit: 3b1b]
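As a refresher, here is a minimal NumPy sketch of the forward pass of such a net. Each layer takes dot-products of its weights with the previous activations, adds a bias, and applies a nonlinearity. The sigmoid activation, the layer sizes, and the name `dense_forward` are illustrative assumptions. Back-propagation and gradient descent are omitted.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def dense_forward(x, layers):
    """Forward pass of a fully-connected net; `layers` is a list of (W, b) pairs."""
    a = x
    for W, b in layers:
        a = sigmoid(W @ a + b)  # one dot-product per neuron, then the nonlinearity
    return a

# Illustrative sizes (assumption): 28x28 input, two hidden layers of 16, 10 outputs
sizes = [28 * 28, 16, 16, 10]
rng = np.random.default_rng(0)
layers = [(rng.standard_normal((n_out, n_in)), np.zeros(n_out))
          for n_in, n_out in zip(sizes[:-1], sizes[1:])]
out = dense_forward(rng.random(28 * 28), layers)
print(out.shape)  # (10,)
```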

Convolutional neural networks

- Why do we need a special network for images?

- Why is CNN (the) special network for images?

Why do we need a specialized net for images?

[video credit: 3b1b]

Q: Why do we need a specialized network?

426-by-426 grayscale image: 426 × 426 = 181,476 input pixels

Use the same small network?

We would need to learn ~3M parameters

Imagine even higher-resolution images, or more complex tasks...

A: Vanilla fully-connected nets don't scale well to (interesting) images
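The ~3M figure can be checked by counting weights and biases layer by layer. A minimal sketch, assuming the "small network" is the 3b1b digit network with two hidden layers of 16 neurons and 10 outputs (those sizes are an assumption, not stated on this slide); `count_fc_params` is a hypothetical helper.

```python
def count_fc_params(layer_sizes):
    """Weights plus biases of a fully-connected net with the given layer widths."""
    total = 0
    for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]):
        total += n_in * n_out + n_out  # weight matrix + bias vector
    return total

# Assumed architecture: input -> 16 -> 16 -> 10
print(count_fc_params([28 * 28, 16, 16, 10]))    # 13002 for 28x28 digits
print(count_fc_params([426 * 426, 16, 16, 10]))  # 2904074, i.e. ~3M
```

Almost all of the growth comes from the first layer, whose weight count scales with the number of pixels.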

Why do we think this image is a 9?

- Visual hierarchy

- Spatial locality

- Translational invariance

CNN cleverly exploits visual hierarchy, spatial locality, and translational invariance to handle images efficiently via

- (deep) layers

- convolution

- pooling

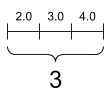

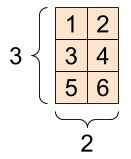

Let's move on to the board to see what a 1D convolution looks like ...

A convolutional layer might sound foreign, but...

(and there are even deeper 'similarities', but more on that later)

input image: 0 1 0 1 1

filter: -1 1

output image: 1 -1 1 0
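The board computation can be reproduced in a few lines of NumPy. As in the example, the filter is slid over the input without being flipped (signal processing calls this cross-correlation, while CNN libraries conventionally call it convolution), and only positions where the whole filter fits are kept. `conv1d` is a hypothetical helper name.

```python
import numpy as np

def conv1d(x, w):
    """'Valid' 1D convolution in the CNN sense: slide w over x (no flip), dot-product at each position."""
    x, w = np.asarray(x), np.asarray(w)
    k = len(w)
    return [int(np.dot(x[i:i + k], w)) for i in range(len(x) - k + 1)]

print(conv1d([0, 1, 0, 1, 1], [-1, 1]))  # [1, -1, 1, 0]
```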

Hyper-parameters

- Zero-padding

- Receptive field ('window' size of the filter)

- Stride
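A minimal sketch of how the three hyper-parameters change the 1D example, using a hypothetical `conv1d` helper (no filter flip, zeros added at both ends). The output-length formula, floor((n + 2·padding − k) / stride) + 1, is the standard one for this setup.

```python
import numpy as np

def conv1d(x, w, padding=0, stride=1):
    """1D CNN-style convolution with zero-padding and stride (hypothetical helper)."""
    x = np.pad(np.asarray(x), padding)  # `padding` zeros added at each end
    w = np.asarray(w)
    k = len(w)  # receptive field ('window') size
    return [int(np.dot(x[i:i + k], w)) for i in range(0, len(x) - k + 1, stride)]

def output_length(n, k, padding=0, stride=1):
    """Number of outputs for input length n and window size k."""
    return (n + 2 * padding - k) // stride + 1

x = [0, 1, 0, 1, 1]
print(conv1d(x, [-1, 1], padding=1))  # [0, 1, -1, 1, 0, -1]
print(conv1d(x, [-1, 0, 1]))          # [0, 0, 1]
print(conv1d(x, [-1, 1], stride=2))   # [1, 1]
print(output_length(5, 2, padding=1), output_length(5, 3), output_length(5, 2, stride=2))  # 6 3 2
```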

[figures: the 1D example redrawn to illustrate zero-padding, receptive-field size, and stride (e.g. of size 2)]


- 'look' locally

- parameter sharing
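To make 'look locally' and 'parameter sharing' concrete, here is a minimal sketch counting the weights (biases ignored) needed to map the 5-pixel input of the 1D example to its 4 outputs. A fully-connected layer gives every output its own weight for every input, while the convolutional layer reuses one 2-weight filter at every position. The helper names are hypothetical.

```python
def fc_weights(n_in, n_out):
    # every output neuron sees every input pixel, each with its own weight
    return n_in * n_out

def conv_weights(filter_size):
    # every output neuron sees only `filter_size` neighbouring pixels,
    # and all output neurons share the same filter weights
    return filter_size

n_in, k = 5, 2
n_out = n_in - k + 1  # 4 outputs, as in the 1D example
print(fc_weights(n_in, n_out), conv_weights(k))  # 20 2

# The gap grows with the input: a 426x426 image mapped to a same-sized output,
# versus a single 3x3 filter (9 weights)
print(fc_weights(426 * 426, 426 * 426), conv_weights(3 * 3))
```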

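$$
\begin{bmatrix} 0 & 1 & 0 & 1 & 1 \end{bmatrix} \ \text{convolve with} \ \begin{bmatrix} -1 & 1 \end{bmatrix} = \begin{bmatrix} 1 & -1 & 1 & 0 \end{bmatrix}
$$

or dot with

$$
\begin{bmatrix}
-1 & 1 & 0 & 0 & 0 \\
0 & -1 & 1 & 0 & 0 \\
0 & 0 & -1 & 1 & 0 \\
0 & 0 & 0 & -1 & 1
\end{bmatrix}
\begin{bmatrix} 0 \\ 1 \\ 0 \\ 1 \\ 1 \end{bmatrix}
=
\begin{bmatrix} 1 \\ -1 \\ 1 \\ 0 \end{bmatrix}
$$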
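The 'convolve with' / 'dot with' equivalence can be checked numerically: build the matrix whose rows are the filter shifted one step at a time, then multiply it with the input. The banded rows show 'looking locally', and the repeated filter values in every row show parameter sharing. `conv_as_matrix` is a hypothetical helper.

```python
import numpy as np

def conv_as_matrix(w, n):
    """(n - k + 1) x n matrix whose product with x equals the valid 1D convolution (no flip) of x with w."""
    k = len(w)
    W = np.zeros((n - k + 1, n), dtype=int)
    for i in range(n - k + 1):
        W[i, i:i + k] = w  # same filter, shifted one position per row
    return W

x = np.array([0, 1, 0, 1, 1])
W = conv_as_matrix([-1, 1], len(x))
print(W)
print((W @ x).tolist())  # [1, -1, 1, 0]
```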

For the input image 0 1 0 1 1:

convolve with ? =

dot-product with ? =


2D Convolution

[figure: input image and filter]

output image:

| 0 | 1 | -1 | 0 | 0 |

| 1 | 1 | 0 | 1 | -1 |

| 1 | 2 | 1 | 0 | 0 |

| 0 | 2 | 1 | 1 | 0 |

| 0 | 1 | 1 | 0 | 0 |
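The 2D case slides a 2D filter over both rows and columns and takes a dot-product at each position. A minimal sketch on a small made-up example (not the image pictured above), again without flipping the filter; `conv2d` is a hypothetical helper that also supports zero-padding and stride.

```python
import numpy as np

def conv2d(image, kernel, padding=0, stride=1):
    """2D CNN-style convolution (no kernel flip) of a single-channel image."""
    image = np.pad(np.asarray(image), padding)  # zero-pad all four sides
    kernel = np.asarray(kernel)
    kh, kw = kernel.shape
    out_h = (image.shape[0] - kh) // stride + 1
    out_w = (image.shape[1] - kw) // stride + 1
    out = np.zeros((out_h, out_w), dtype=np.result_type(image, kernel))
    for r in range(out_h):
        for c in range(out_w):
            patch = image[r * stride:r * stride + kh, c * stride:c * stride + kw]
            out[r, c] = np.sum(patch * kernel)  # dot-product of window and filter
    return out

image = [[0, 1, 0],
         [1, 1, 1],
         [0, 1, 0]]
kernel = [[1, 0],
          [0, -1]]
print(conv2d(image, kernel).tolist())          # [[-1, 0], [0, 1]]
print(conv2d(image, kernel, padding=1).shape)  # (4, 4)
```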

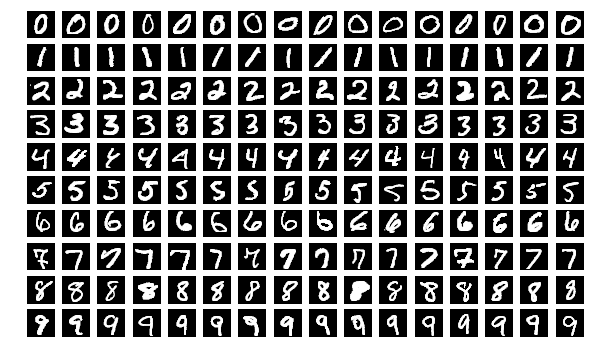

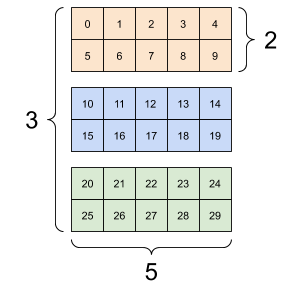

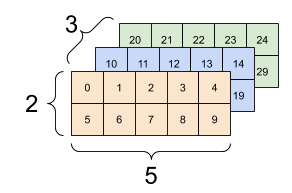

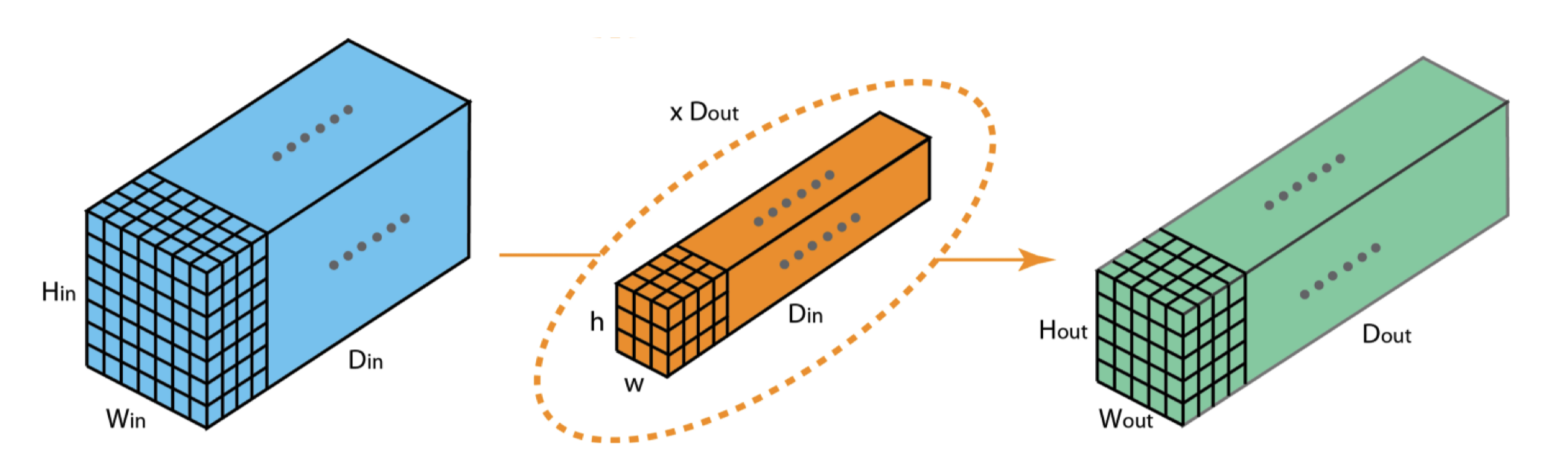

A tender intro to tensors:

[image credit: tensorflow]

An image as a tensor: image height × image width × image channels (red, green, blue)

input tensor, convolved with filters → output tensor

[image credit: medium]
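With multiple channels, each filter spans all input channels and produces one channel of the output tensor. A minimal sketch, assuming a channels-last (height × width × channels) layout like the picture; other libraries may order the axes differently. `conv_multichannel` is a hypothetical helper, and the random input and filters are placeholders.

```python
import numpy as np

def conv_multichannel(x, filters):
    """x: (H, W, C) input tensor; filters: (K, kh, kw, C). Returns (H - kh + 1, W - kw + 1, K)."""
    H, W, C = x.shape
    K, kh, kw, C2 = filters.shape
    assert C == C2, "each filter must span all input channels"
    out = np.zeros((H - kh + 1, W - kw + 1, K))
    for k in range(K):  # one output channel per filter
        for r in range(H - kh + 1):
            for c in range(W - kw + 1):
                out[r, c, k] = np.sum(x[r:r + kh, c:c + kw, :] * filters[k])
    return out

rng = np.random.default_rng(0)
x = rng.random((32, 32, 3))         # height x width x (red, green, blue)
filters = rng.random((8, 3, 3, 3))  # 8 filters, each 3x3 spanning all 3 channels
out = conv_multichannel(x, filters)
print(out.shape)     # (30, 30, 8)
print(filters.size)  # 216 weights, independent of the image size
```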


Parting thoughts

- Ongoing research

- Other network architectures

- Interpretation of features learned

- Adversarial attacks and robustness

| voter | sex | age | income | education | ... | voted |
|---|---|---|---|---|---|---|
| 1 | F | 30 | 70k | post-grad | ... | Bernie |
| 2 | M | 75 | 80k | college | ... | Biden |

...

[HW credit: Princeton ORF363]

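Don't abuse convolution: the columns of a table like this have no spatial ordering, so the spatial locality and translational invariance that convolution exploits simply aren't there.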
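A minimal sketch of why convolution is a poor fit for tabular data: a filter mixes neighbouring columns, but which columns are neighbours is an arbitrary choice, so reordering the columns of the same row changes the output. The numeric encoding of the voter row (F = 1, income in $k) and the `conv1d` helper are hypothetical.

```python
import numpy as np

def conv1d(x, w):
    """'Valid' 1D convolution in the CNN sense (no filter flip)."""
    k = len(w)
    return [int(np.dot(x[i:i + k], w)) for i in range(len(x) - k + 1)]

# Hypothetical numeric encoding of one voter row: sex (F = 1), age, income (in $k)
row = np.array([1, 30, 70])
w = np.array([-1, 1])  # a 'difference of neighbours' filter

print(conv1d(row, w))             # [29, 40]: 'age minus sex', 'income minus age'
print(conv1d(row[[2, 0, 1]], w))  # same voter, columns reordered: [-69, 29]
```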

Thank you.

Questions?
