Motion Sets for

Contact-Rich Manipulation

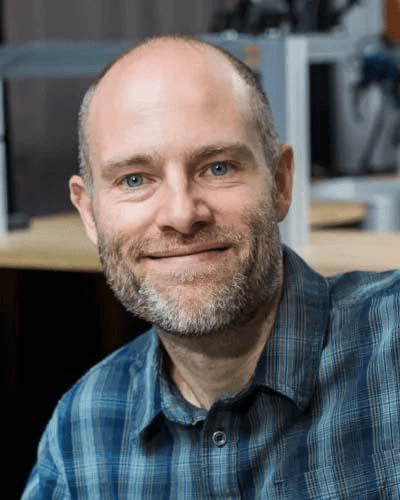

H.J. Terry Suh, MIT

RLG Group Meeting

Spring 2024

The Software Bottleneck in Robotics

A

B

Do not make contact

Make contact

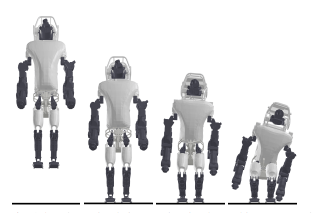

Today's robots are not fully leveraging their hardware capabilities

Larger objects = Larger Robots?

Contact-Rich Manipulation Enables Same Hardware to do More Things

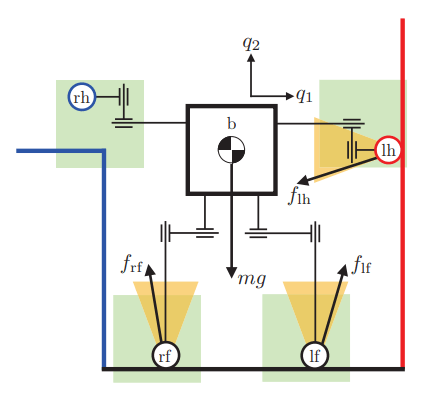

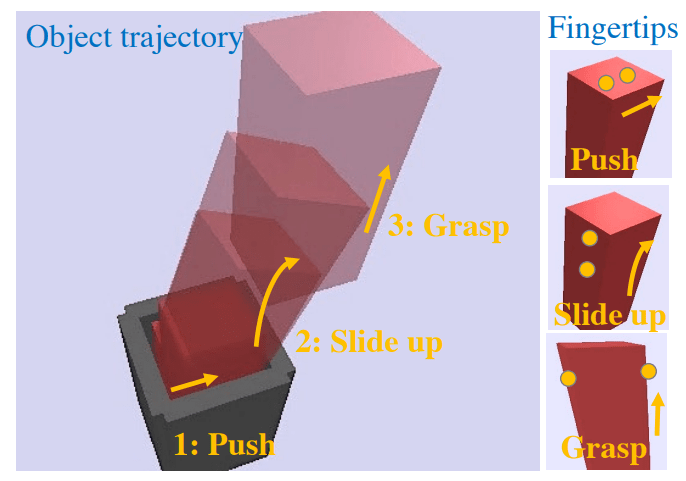

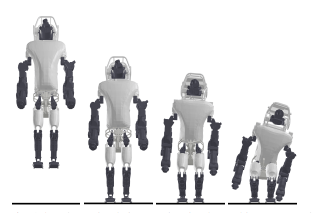

Case Study 1. Whole-body Manipulation

What is Contact-Rich Manipulation?

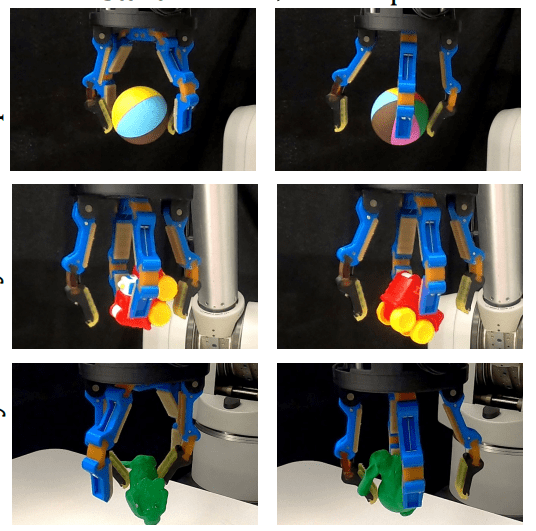

Morgan et al., RA-L 2022

Khandate et al., RSS 2023

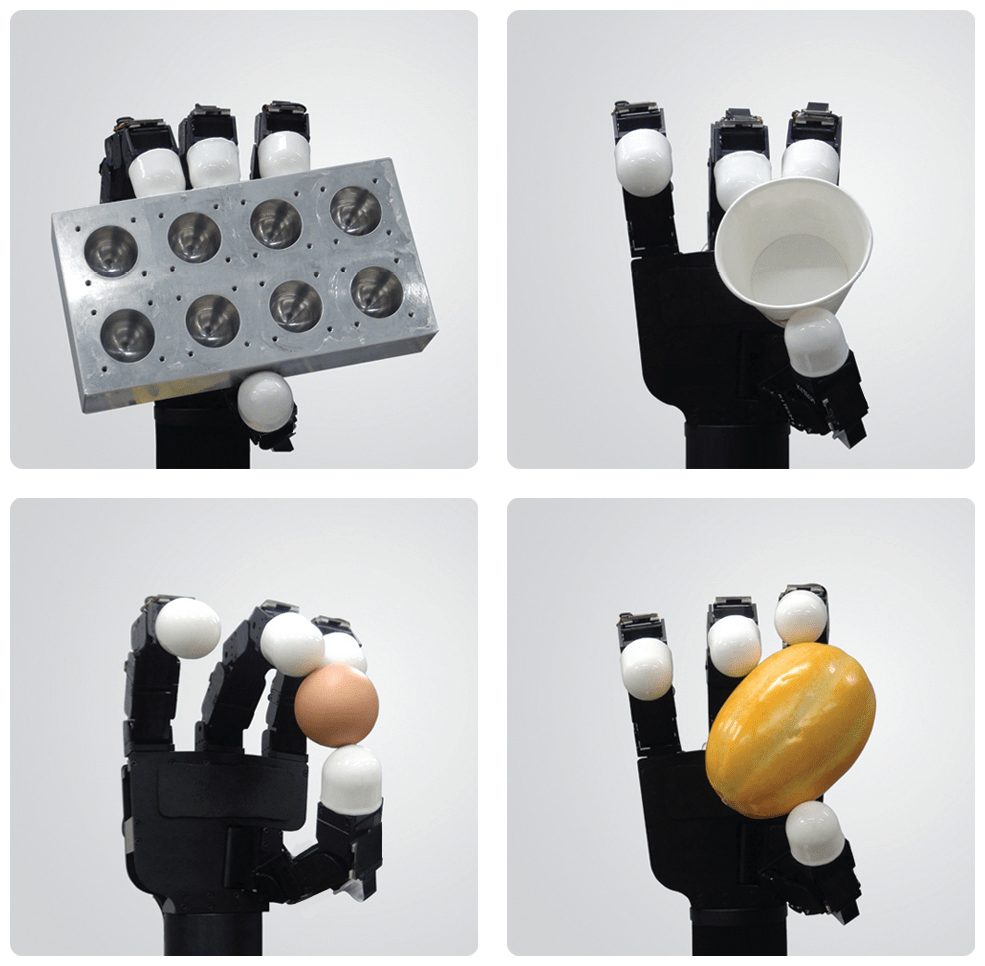

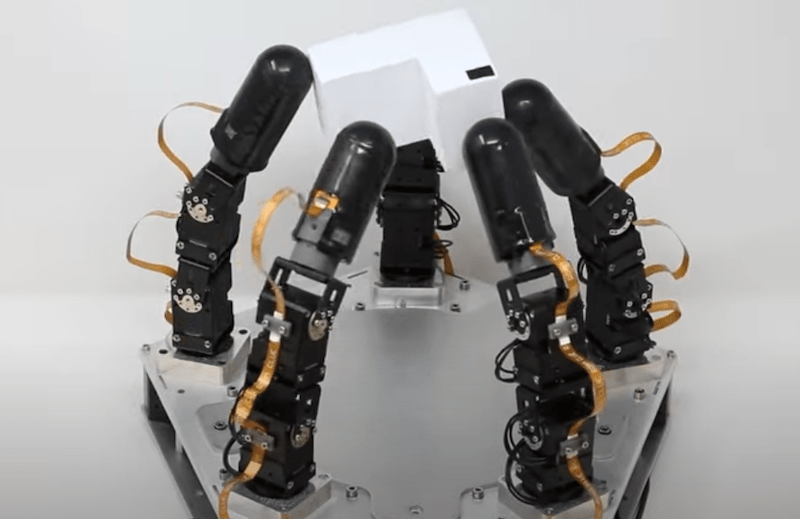

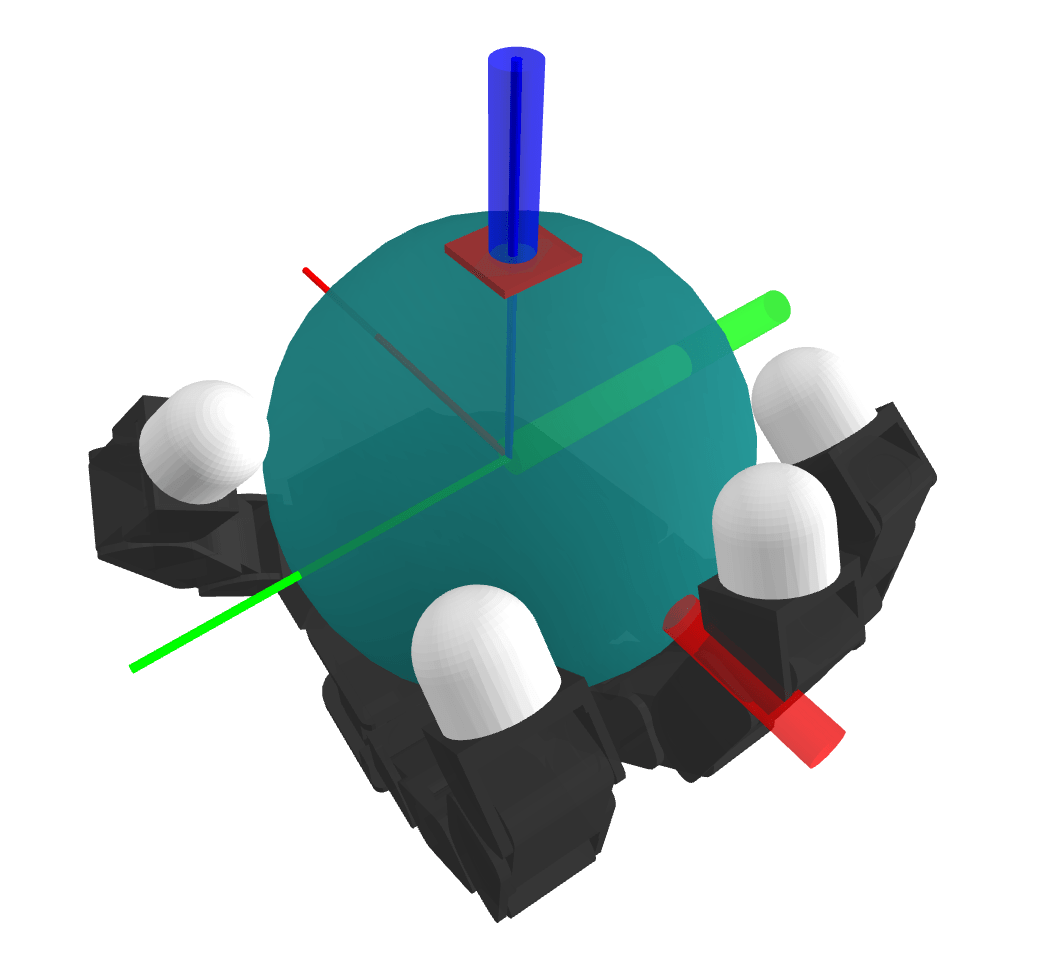

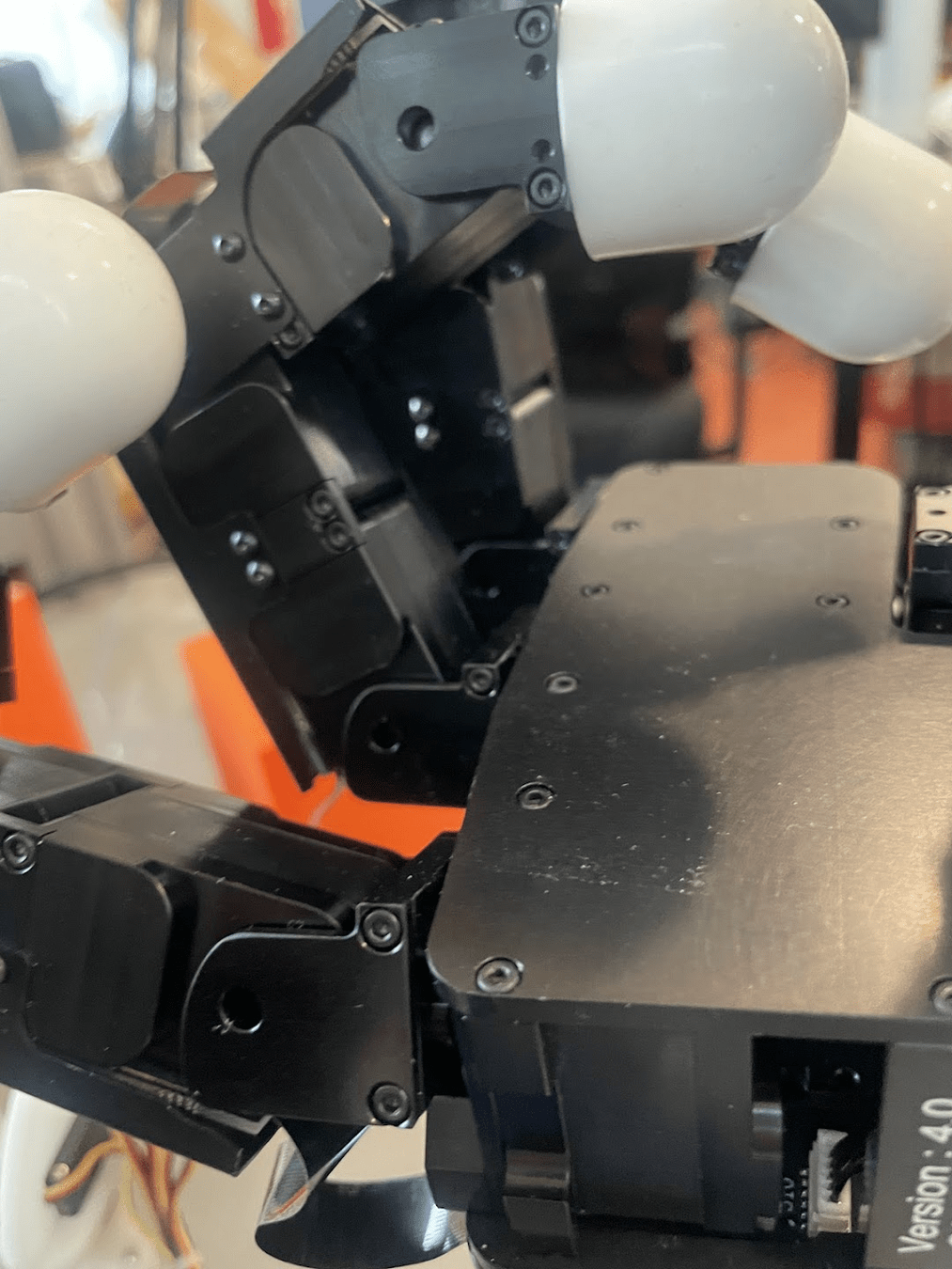

Wonik Allegro Hand

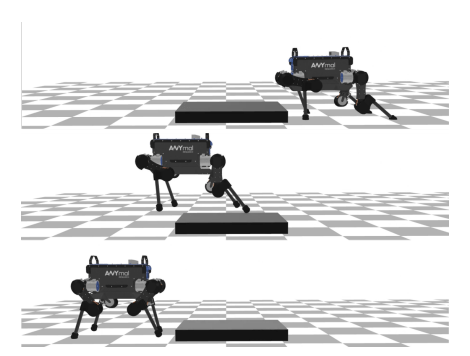

Case Study 2. Dexterous Hands

What is Contact-Rich Manipulation?

You should do hands Terry

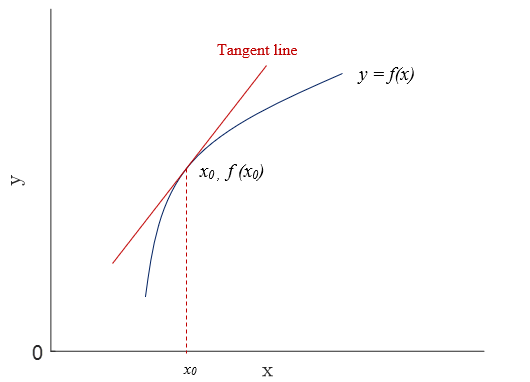

Using Gradients for Planning & Control

Optimization

Find minimum step subject to Taylor approximation

Leads to Gradient Descent
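
One way to make "minimum step subject to Taylor approximation" concrete, as a sketch: minimize the first-order model of the cost $f$ while penalizing the step size. The quadratic penalty and the step-size parameter $\eta$ are assumptions introduced here for illustration; the original equation on the slide is not recoverable from the text.

```latex
\begin{aligned}
\delta x^\star &= \arg\min_{\delta x}\; f(x) + \nabla f(x)^\top \delta x + \frac{1}{2\eta}\,\|\delta x\|^2 \\
0 &= \nabla f(x) + \frac{1}{\eta}\,\delta x^\star
\quad\Longrightarrow\quad
\delta x^\star = -\eta\,\nabla f(x)
\end{aligned}
```

Under these assumptions the minimizing step is exactly a gradient-descent step with step size $\eta$.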

Optimal Control (1-step)

Find minimum step subject to Taylor approximation

Linearization

Fundamental tool for analyzing control for smooth systems

What does it mean to linearize contact dynamics?

What is the "right" Taylor approximation for contact dynamics?

Version 1. The "mathematically correct" Taylor approximation

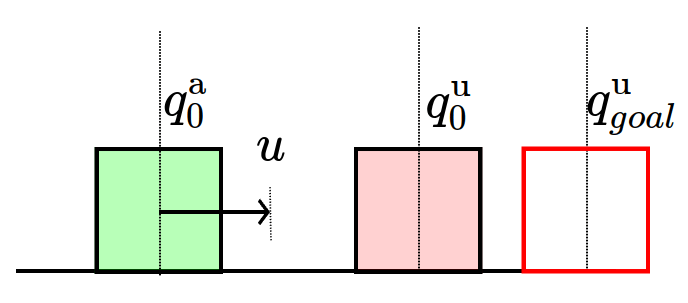

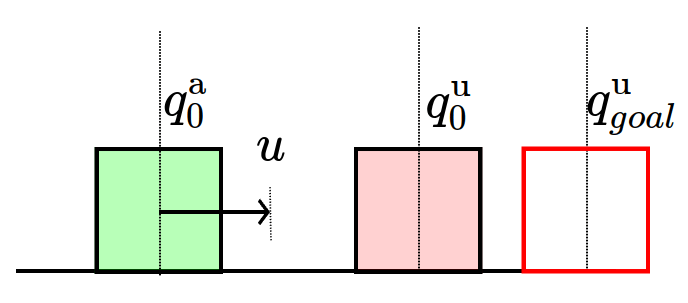

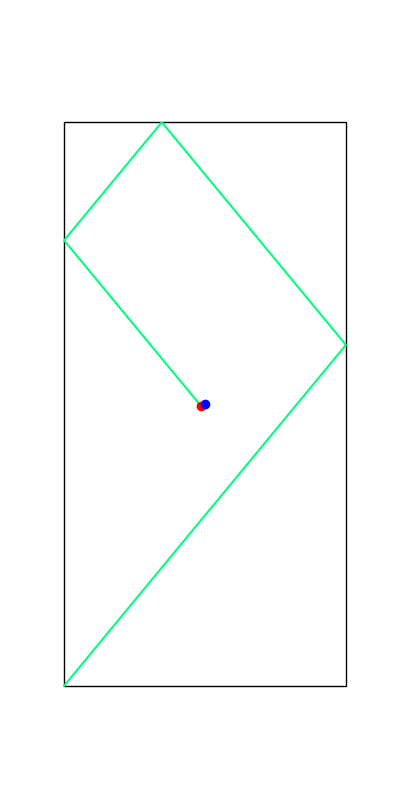

Toy Problem

Simplified Problem

Given an initial state and a goal,

which action minimizes the distance to the goal?

Toy Problem

Simplified Problem

Consider simple gradient descent,

Dynamics of the system

No Contact

Contact

The gradient is zero if there is no contact!
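
A minimal sketch of why the gradient vanishes, assuming a hypothetical 1D version of the toy problem: a pusher approaches a box from the left, the action `u` is the commanded pusher position, and the box only moves if the pusher reaches it. The function names and the numbers are illustrative, not taken from the slides.

```python
def box_next(x_box, u):
    """Next box position when the pusher is commanded to position u.

    Assumed toy model: the box stays put unless the pusher reaches it.
    """
    return max(x_box, u)


def cost(x_box, u, x_goal):
    """Half squared distance between the next box position and the goal."""
    return 0.5 * (box_next(x_box, u) - x_goal) ** 2


def cost_grad(x_box, u, x_goal, eps=1e-6):
    """Central finite-difference derivative of the cost w.r.t. the action u."""
    c_plus = cost(x_box, u + eps, x_goal)
    c_minus = cost(x_box, u - eps, x_goal)
    return (c_plus - c_minus) / (2 * eps)


x_box, x_goal = 1.0, 2.0
grad_no_contact = cost_grad(x_box, 0.5, x_goal)  # pusher stops short of the box
grad_contact = cost_grad(x_box, 1.5, x_goal)     # pusher moves the box
print("no contact:", grad_no_contact)
print("contact:   ", grad_contact)
```

In the no-contact case the cost does not depend on `u` at all, so gradient descent receives no signal telling it to move toward the box.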

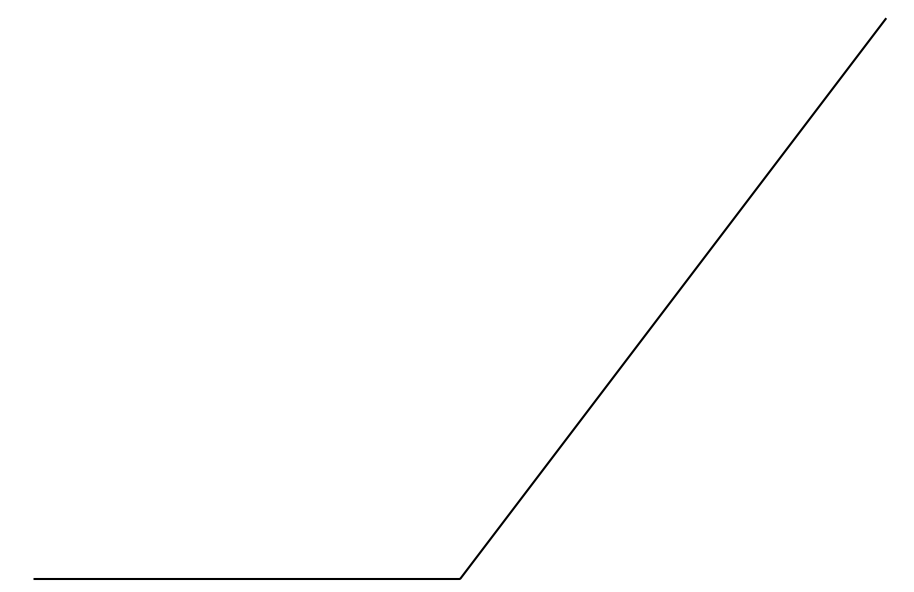

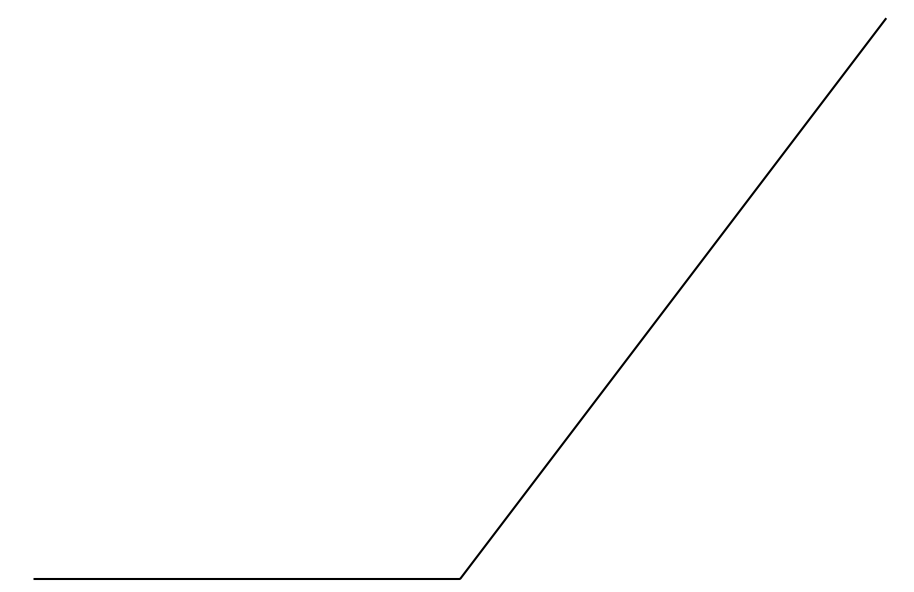

Previous Approaches to Tackling the Problem

Why don't we search more globally for each contact mode?

In no-contact, run gradient descent.

In contact, run gradient descent.

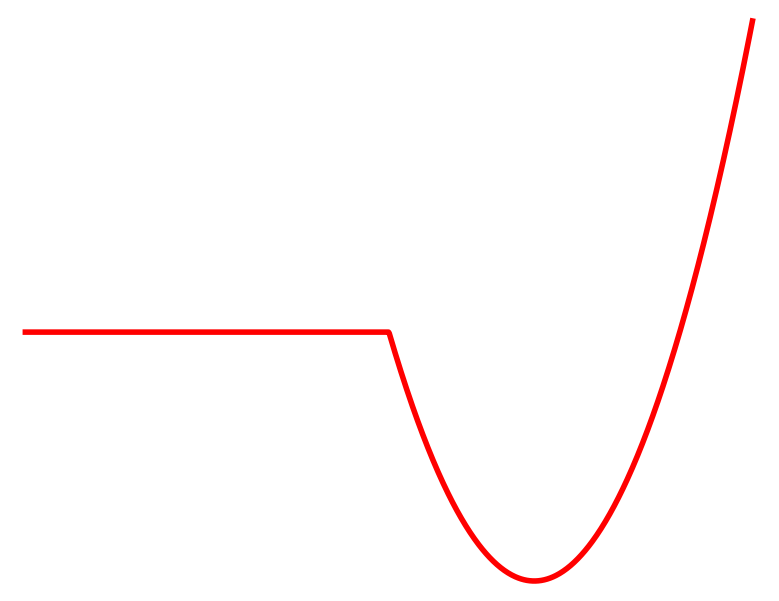

Contact

No Contact

Cost

Previous Approaches to Tackling the Problem

[MDGBT 2017]

[HR 2016]

[CHHM 2022]

[AP 2022]

Contact

No Contact

Cost

Mixed Integer Programming

Mode Enumeration

Active Set Approach

Why don't we search more globally for each contact mode?

In no-contact, run gradient descent.

In contact, run gradient descent.

Problems with Mode Enumeration

System

Number of Modes

The number of modes scales terribly with system complexity
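
To get a feel for the scaling, here is a small sketch that assumes each potential contact is independently in one of the three modes listed on the slide (no contact, sticking, sliding). That gives 3^n modes for n potential contacts; this is a simplification, since sliding in 3D has more than one direction and the true count can be larger.

```python
from itertools import product

# The three per-contact modes named on the slide.
MODES = ("no contact", "sticking", "sliding")


def enumerate_modes(num_contacts):
    """List every joint contact-mode assignment (only feasible for small n)."""
    return list(product(MODES, repeat=num_contacts))


def count_modes(num_contacts):
    """Number of joint modes under the independence assumption: 3^n."""
    return len(MODES) ** num_contacts


for n in (1, 2, 5, 10, 20):
    print(f"{n:2d} potential contacts -> {count_modes(n)} modes")
```

Running gradient descent once per mode quickly becomes impractical as the number of potential contacts grows.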

No Contact

Sticking Contact

Sliding Contact

Number of potential active contacts

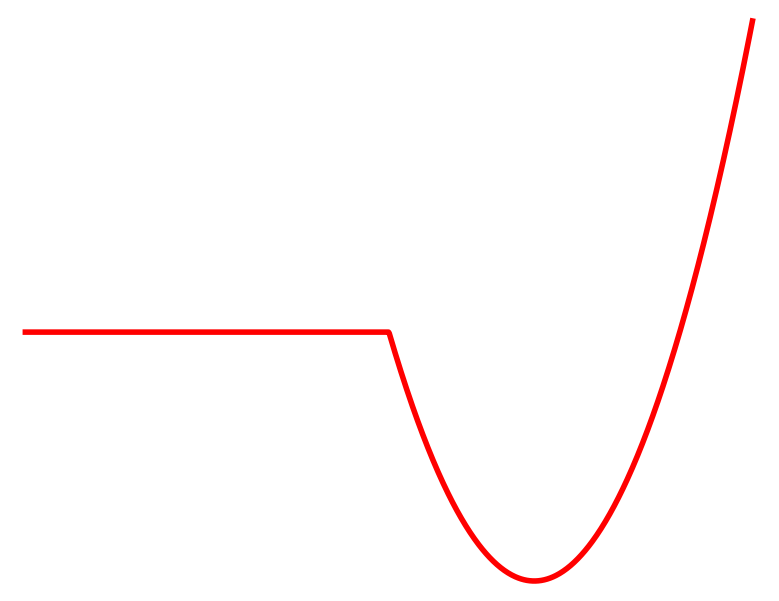

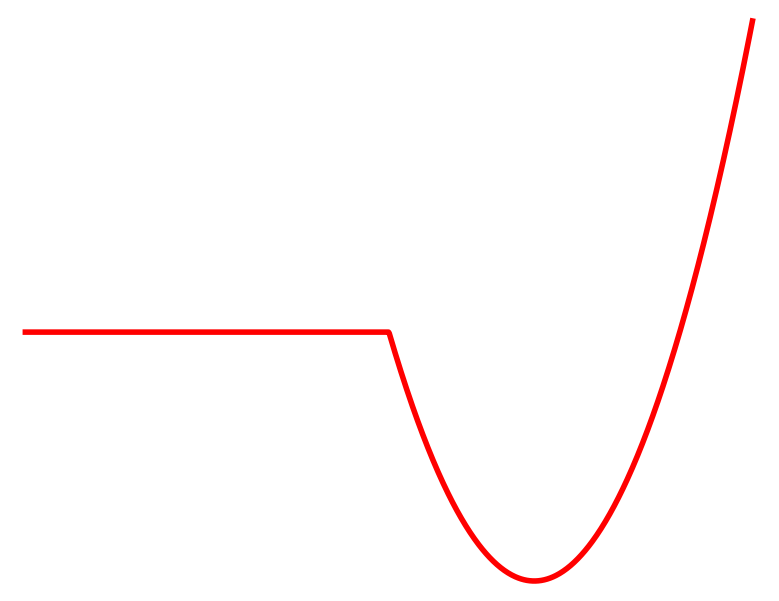

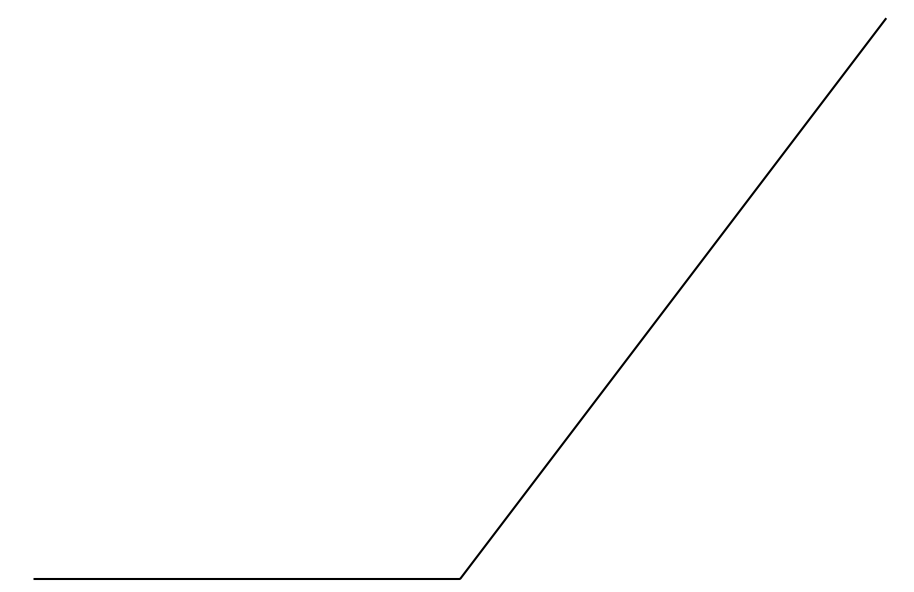

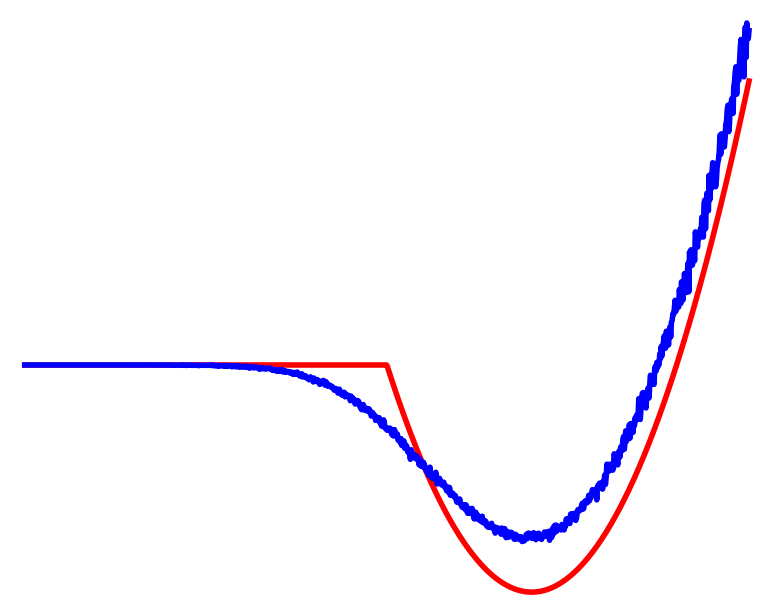

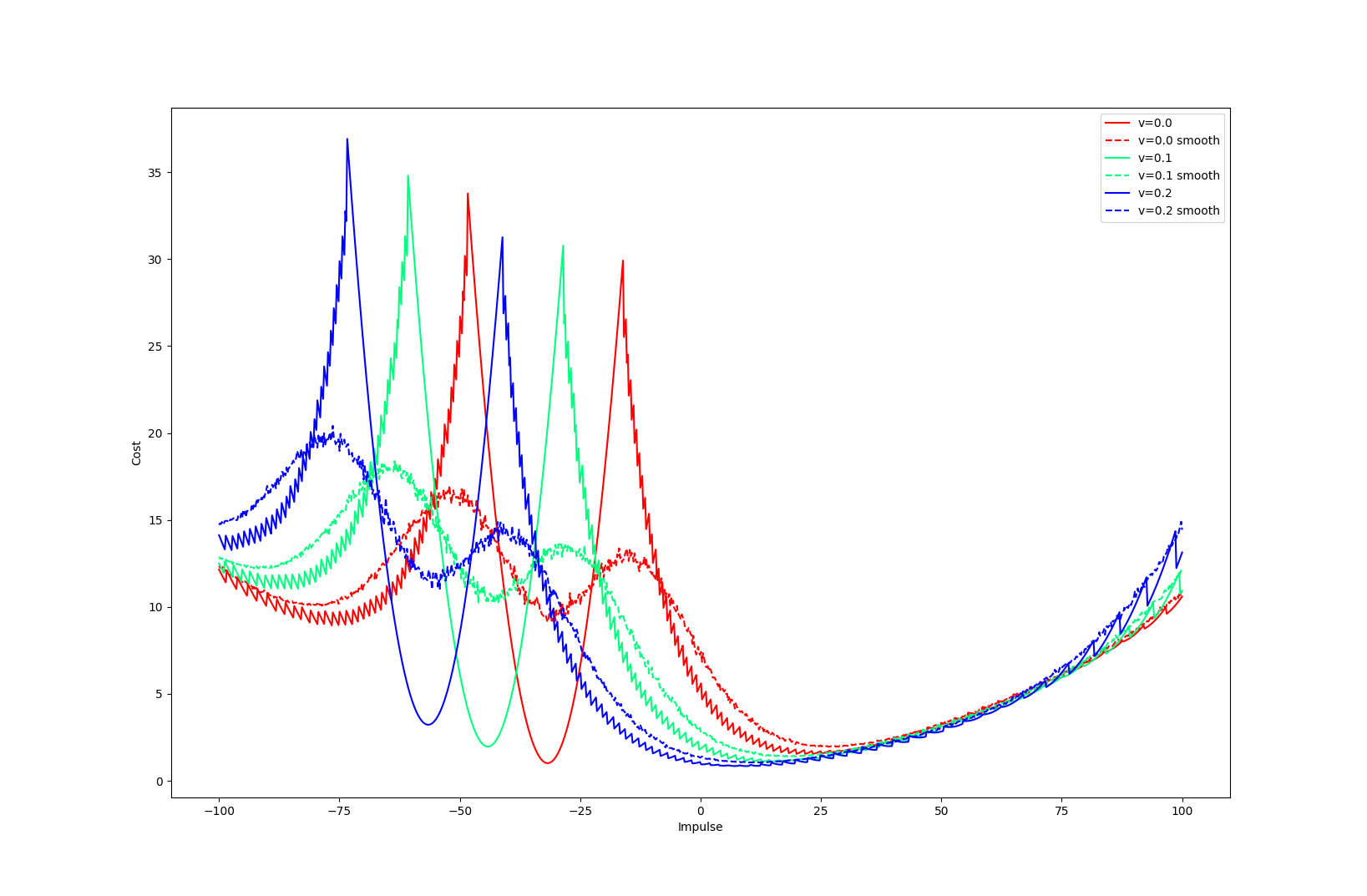

Non-smooth Contact Dynamics

Smooth Surrogate Dynamics

No Contact

Contact

Averaged

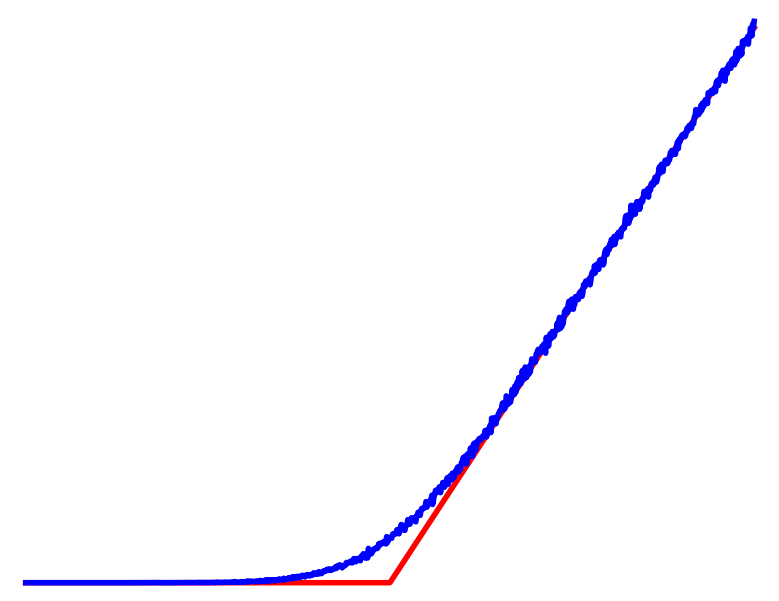

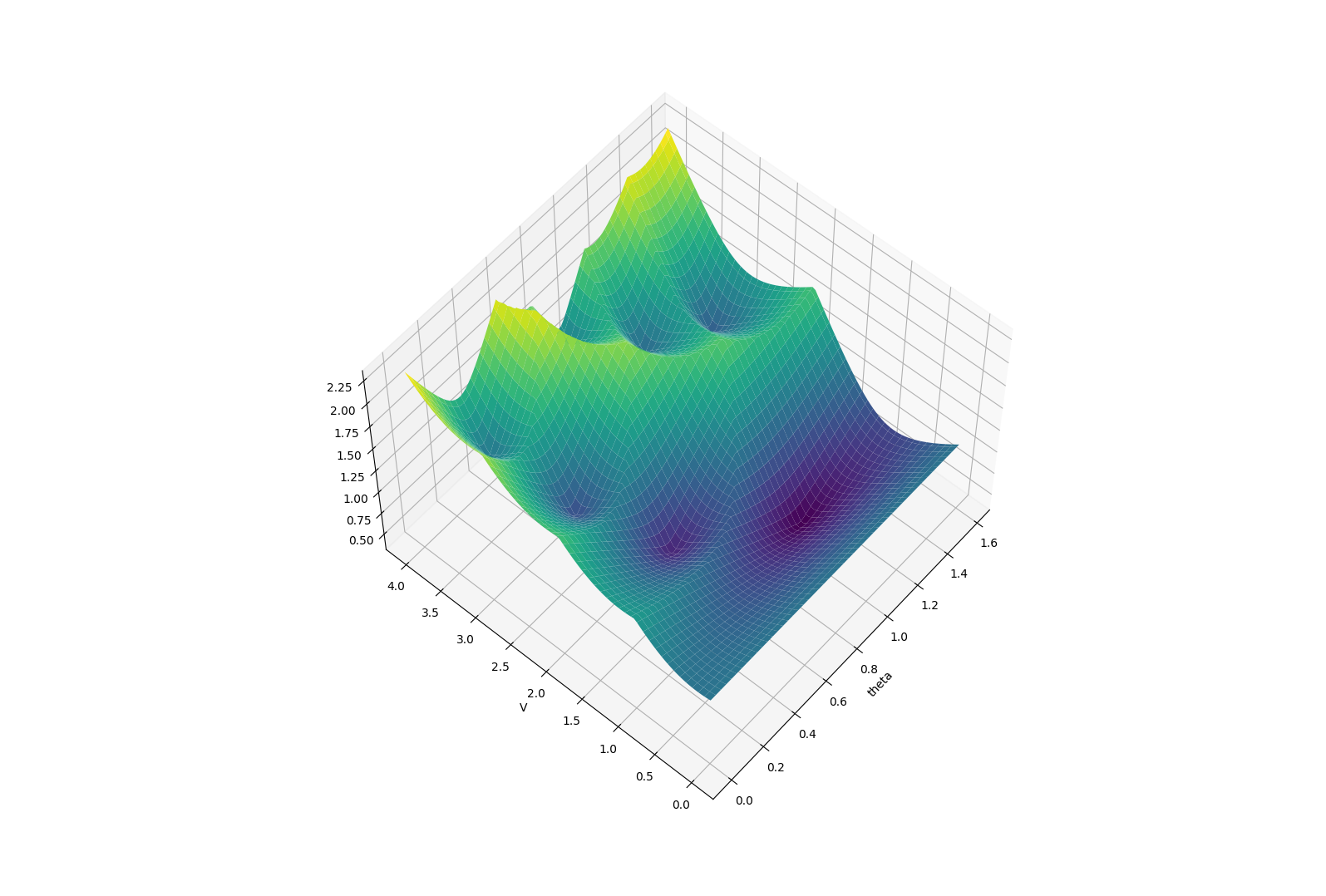

Dynamic Smoothing

What if we had smoothed dynamics for more-than-local effects?

Effects of Dynamic Smoothing

Reinforcement Learning

Cost

Contact

No Contact

Averaged

Dynamic Smoothing

Averaged

Contact

No Contact

No Contact

Can still claim benefits of averaging multiple modes leading to better landscapes

Importantly, we know structure for these dynamics!

Can often acquire smoothed dynamics & gradients without Monte-Carlo.
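
As an illustration of both points, the sketch below smooths a hypothetical 1D pusher-box model (the box moves only if the commanded pusher position `u` reaches it) by averaging over Gaussian noise on the action. The model, the noise level `sigma`, and the function names are assumptions for this example. The Monte-Carlo estimate is compared against the closed form that the known structure of the dynamics provides.

```python
import math

import numpy as np


def smoothed_grad_monte_carlo(x_box, u, sigma, num_samples, rng):
    """Estimate d/du E[max(x_box, u + w)] with w ~ N(0, sigma^2).

    The derivative of max(x_box, u + w) w.r.t. u is 1 for samples that
    make contact and 0 otherwise, so the estimate is the contact frequency.
    """
    w = rng.normal(0.0, sigma, size=num_samples)
    return float(np.mean((u + w) > x_box))


def smoothed_grad_analytic(x_box, u, sigma):
    """Closed form of the same quantity: the Gaussian CDF at (u - x_box) / sigma."""
    z = (u - x_box) / sigma
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


rng = np.random.default_rng(0)
x_box, u, sigma = 1.0, 0.8, 0.2  # nominal action does not reach the box
g_mc = smoothed_grad_monte_carlo(x_box, u, sigma, 200_000, rng)
g_exact = smoothed_grad_analytic(x_box, u, sigma)
print("Monte-Carlo:", g_mc)
print("analytic:   ", g_exact)
```

Even though the nominal action makes no contact, the averaged dynamics have a nonzero gradient, and in this toy case it is available without sampling.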

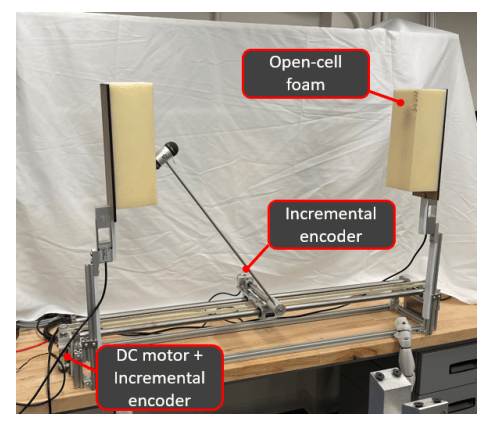

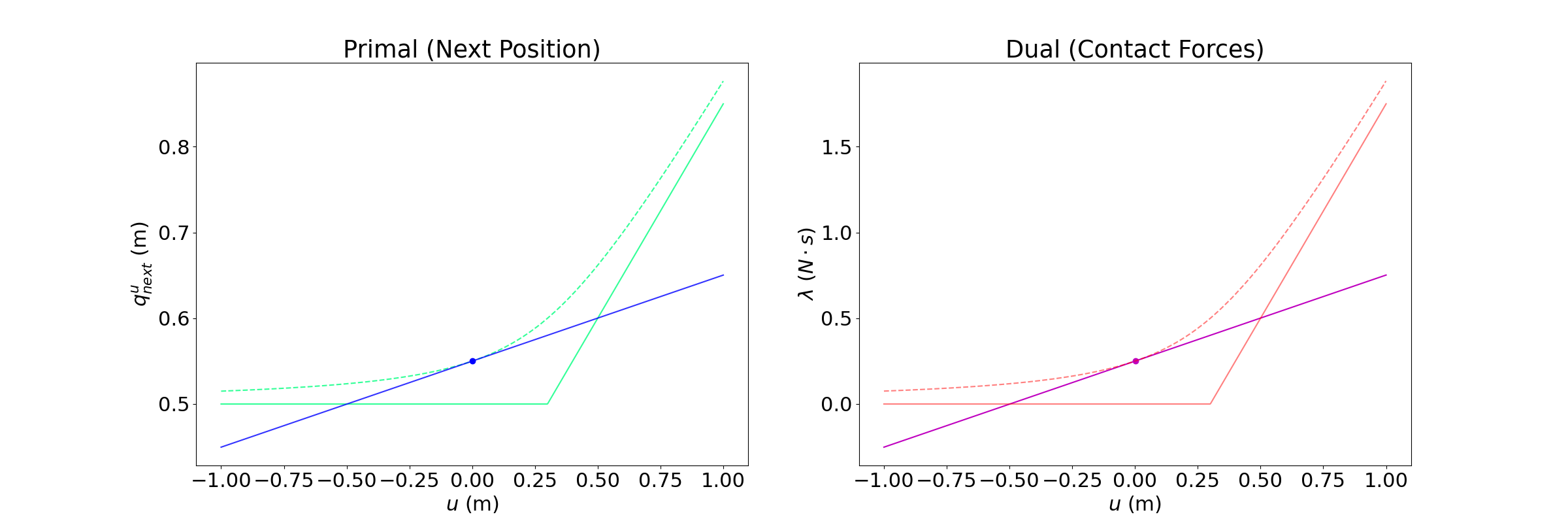

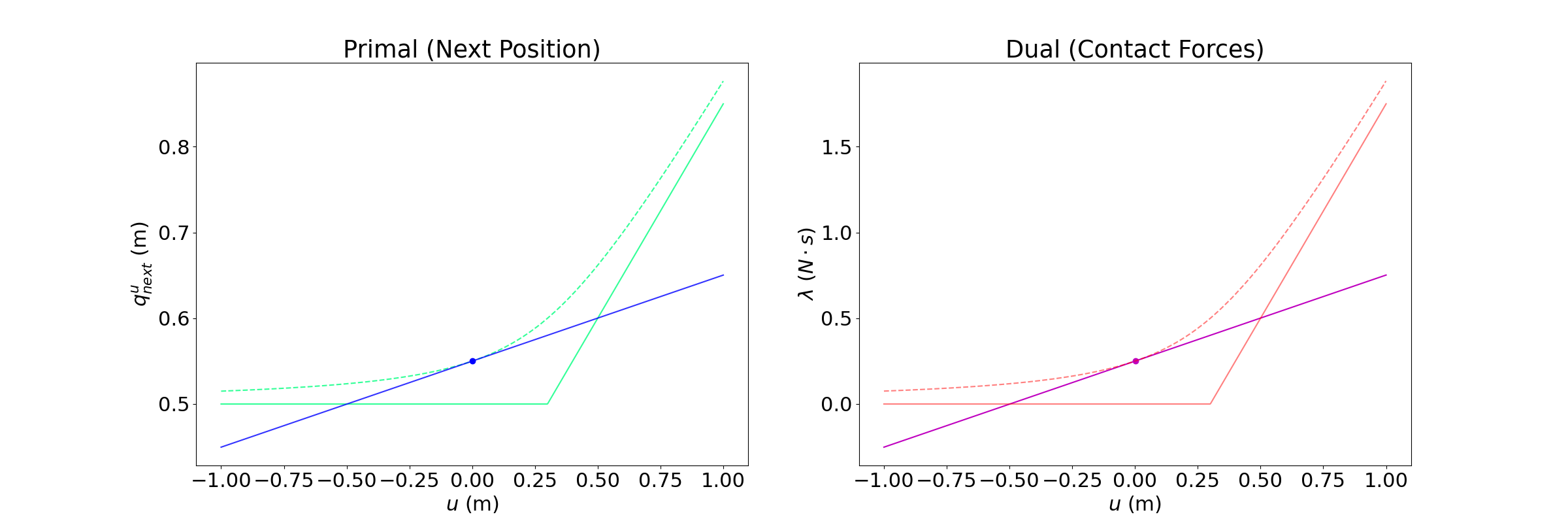

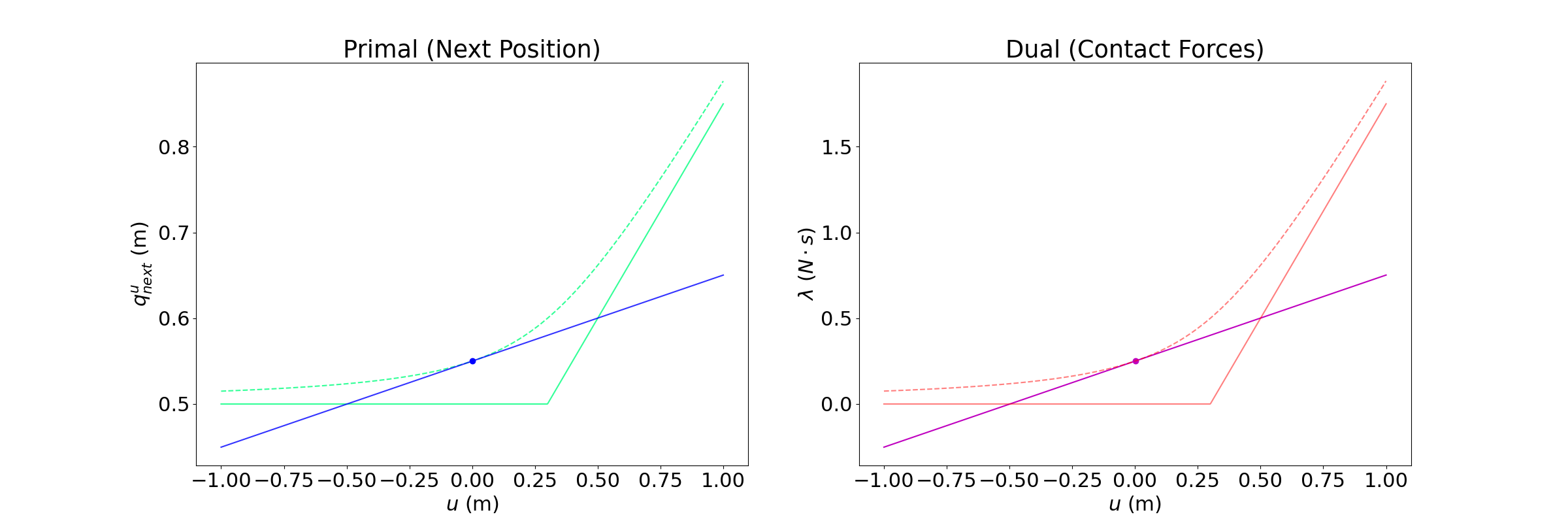

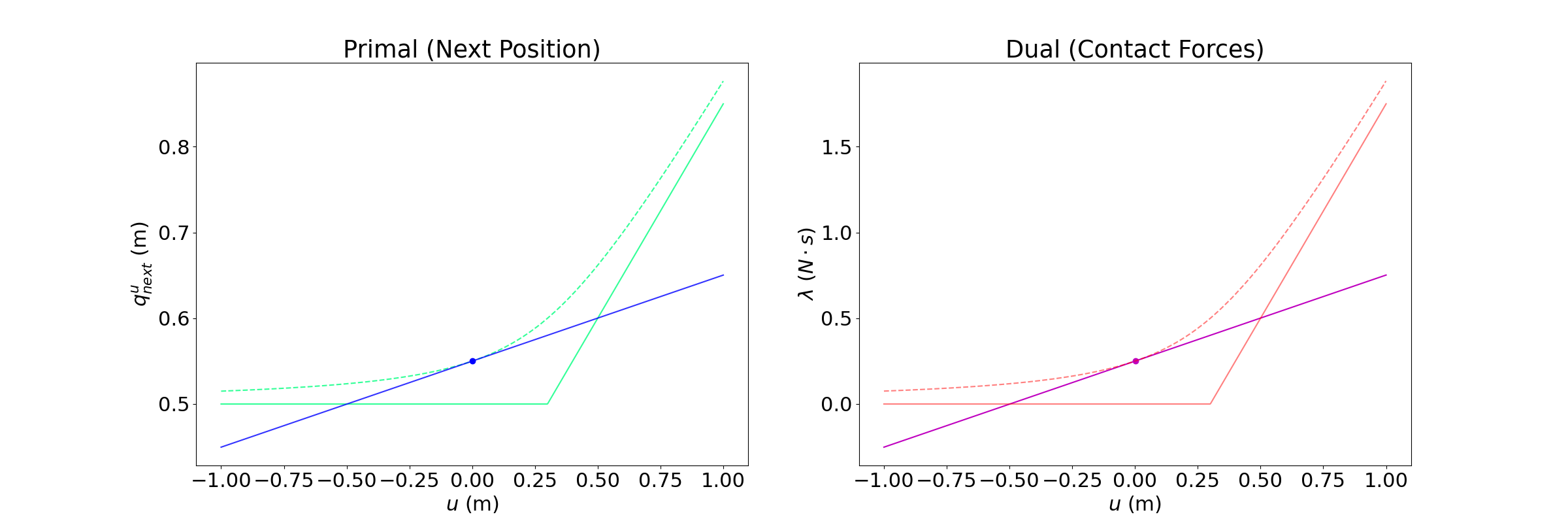

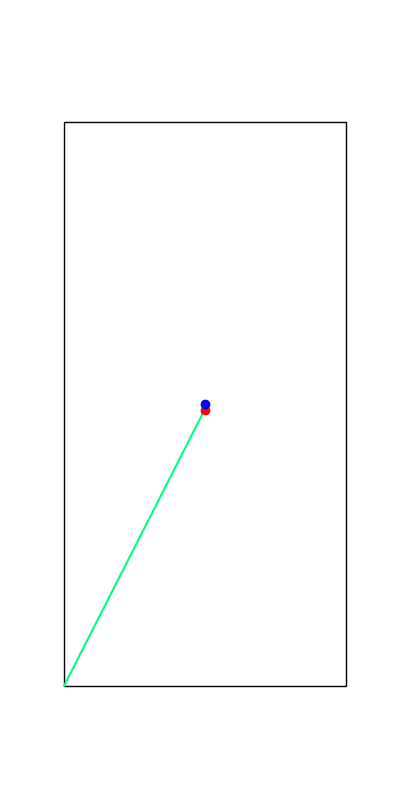

Example: Box vs. wall

Commanded next position

Actual next position

Cannot penetrate into the wall

Implicit Time-stepping simulation

No Contact

Contact

Structured Smoothing: An Example

Importantly, we know structure for these dynamics!

Can often acquire smoothed dynamics & gradients without Monte-Carlo.

Example: Box vs. wall

Implicit Time-stepping simulation

Commanded next position

Actual next position

Cannot penetrate into the wall

Log-Barrier Relaxation
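
A sketch of the box-vs-wall example under assumed notation: the box is commanded to position `u`, the wall sits at `x_wall` to its right, and the implicit time step is written as a small optimization problem. The unit-weight quadratic objective and the barrier weight `kappa` are assumptions for illustration; the slide's exact formulation is not recoverable from the text.

```python
import math


def box_next_exact(u, x_wall):
    """Implicit time-stepping: min 0.5*(x - u)^2  s.t.  x <= x_wall."""
    return min(u, x_wall)


def box_next_barrier(u, x_wall, kappa):
    """Log-barrier relaxation: min 0.5*(x - u)^2 - kappa*log(x_wall - x).

    With the gap d = x_wall - x > 0, stationarity reduces to
    d^2 - (x_wall - u)*d - kappa = 0, and we take the positive root.
    """
    gap_cmd = x_wall - u
    d = 0.5 * (gap_cmd + math.sqrt(gap_cmd ** 2 + 4.0 * kappa))
    return x_wall - d


x_wall = 1.0
for u in (0.0, 0.9, 1.5):
    exact = box_next_exact(u, x_wall)
    smooth = box_next_barrier(u, x_wall, kappa=1e-2)
    print(f"u={u:.1f}  exact={exact:.4f}  barrier={smooth:.4f}")
```

The relaxed step never reaches the wall and varies smoothly with `u`, and it approaches the exact step as `kappa` goes to zero.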

Barrier smoothing

Barrier vs. Randomized Smoothing

Differentiating with Sensitivity Analysis

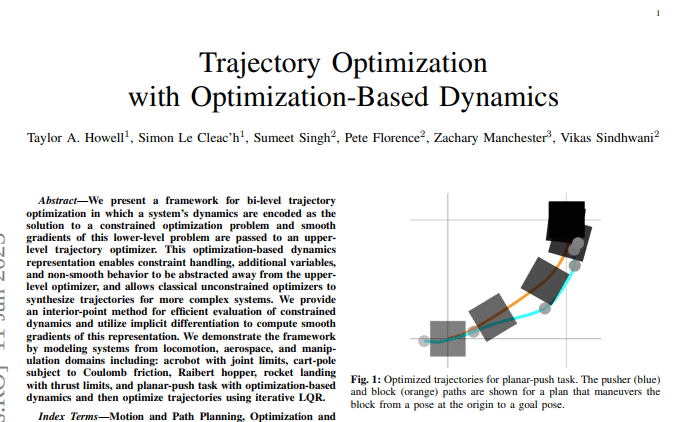

[HLBKSM 2023]

[MBMSHNCRVM 2020]

[PSYT 2023]

How do we obtain the gradients from an optimization problem?

Differentiate through the optimality conditions!

Stationarity Condition

Implicit Function Theorem

Differentiate by u
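
The three steps above can be sketched on a hypothetical barrier-smoothed box-vs-wall problem (box commanded to `u`, wall at `x_wall`, barrier weight `kappa`; all names and the objective are assumptions for this example). The gradient from the implicit function theorem is checked against finite differences.

```python
import math


def solve_barrier(u, x_wall, kappa):
    """Minimizer of 0.5*(x - u)^2 - kappa*log(x_wall - x), in closed form."""
    gap_cmd = x_wall - u
    d = 0.5 * (gap_cmd + math.sqrt(gap_cmd ** 2 + 4.0 * kappa))
    return x_wall - d


def grad_implicit(u, x_wall, kappa):
    """dx*/du via the implicit function theorem.

    Stationarity: g(x, u) = (x - u) + kappa / (x_wall - x) = 0
    dg/dx = 1 + kappa / (x_wall - x)^2,   dg/du = -1
    dx*/du = -(dg/dx)^{-1} * dg/du
    """
    x = solve_barrier(u, x_wall, kappa)
    dg_dx = 1.0 + kappa / (x_wall - x) ** 2
    dg_du = -1.0
    return -dg_du / dg_dx


def grad_finite_difference(u, x_wall, kappa, eps=1e-6):
    """Central finite-difference reference for dx*/du."""
    x_plus = solve_barrier(u + eps, x_wall, kappa)
    x_minus = solve_barrier(u - eps, x_wall, kappa)
    return (x_plus - x_minus) / (2 * eps)


x_wall, kappa = 1.0, 1e-2
for u in (0.0, 1.0, 1.5):
    print(u, grad_implicit(u, x_wall, kappa), grad_finite_difference(u, x_wall, kappa))
```

The gradient decays smoothly from about 1 (far from the wall) toward 0 (pressing into the wall) instead of switching abruptly between the two.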

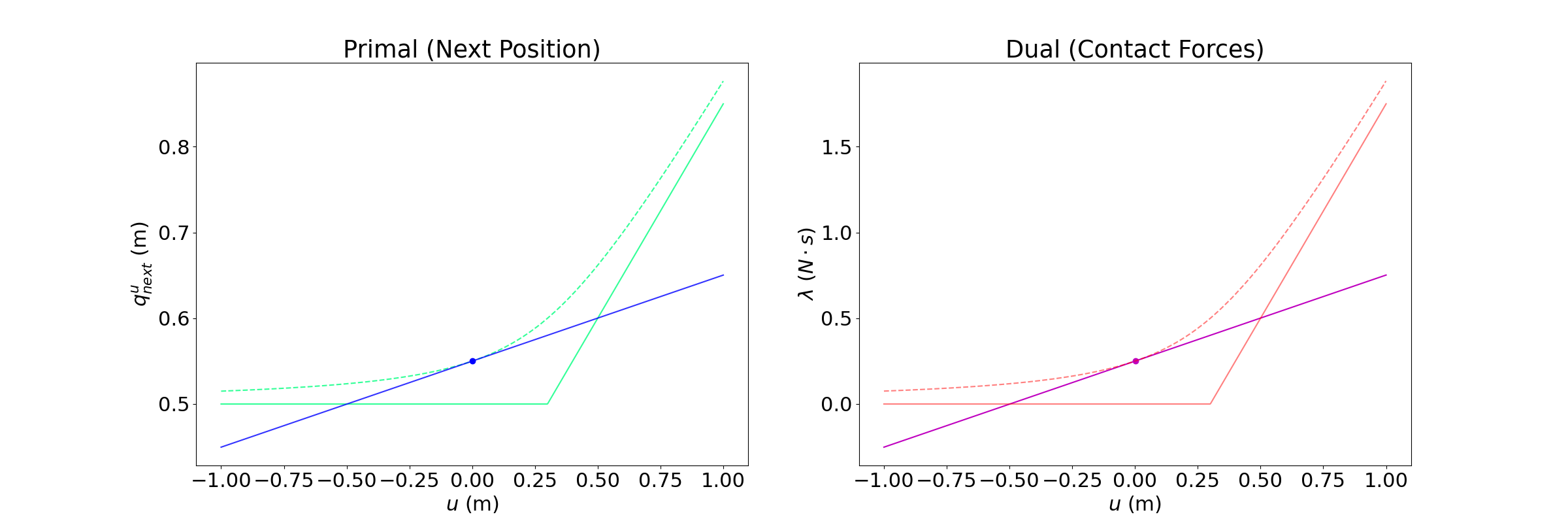

What does it mean to linearize contact dynamics?

What is the "right" Taylor approximation for contact dynamics?

Version 1. The "mathematically correct" Taylor approximation

Version 2. The "mathematically wrong but a bit more global" Taylor approximation

From Sensitivity Analysis of Convex Programs for Contact Dynamics

iLQR-like tools much more effective for contact dynamics

What does it mean to linearize contact dynamics?

Did we get it right this time?

Still missing something!

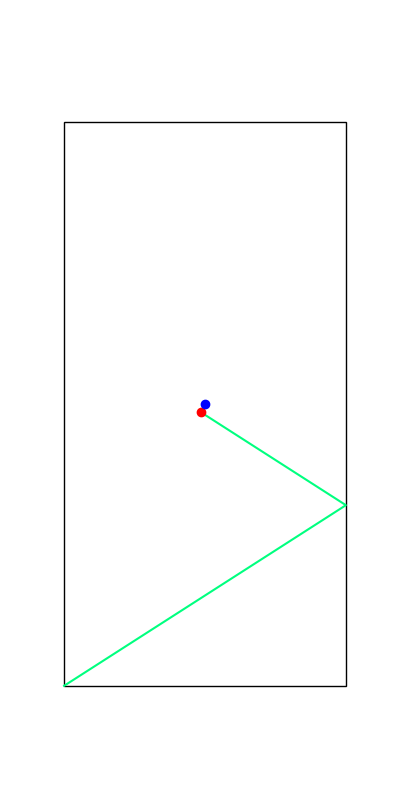

Flaws of Linearization

Goal

Linearization

Pushes in the right direction

Linearization thinks we can pull

How do we give the linearization some directional information?

What are we missing?

Dynamics Linearization

Contact Force Linearization

Contact Constraints

Local Approximations to Contact Dynamics have to take contact constraints into account!

*image taken from Stephane Caron's blog

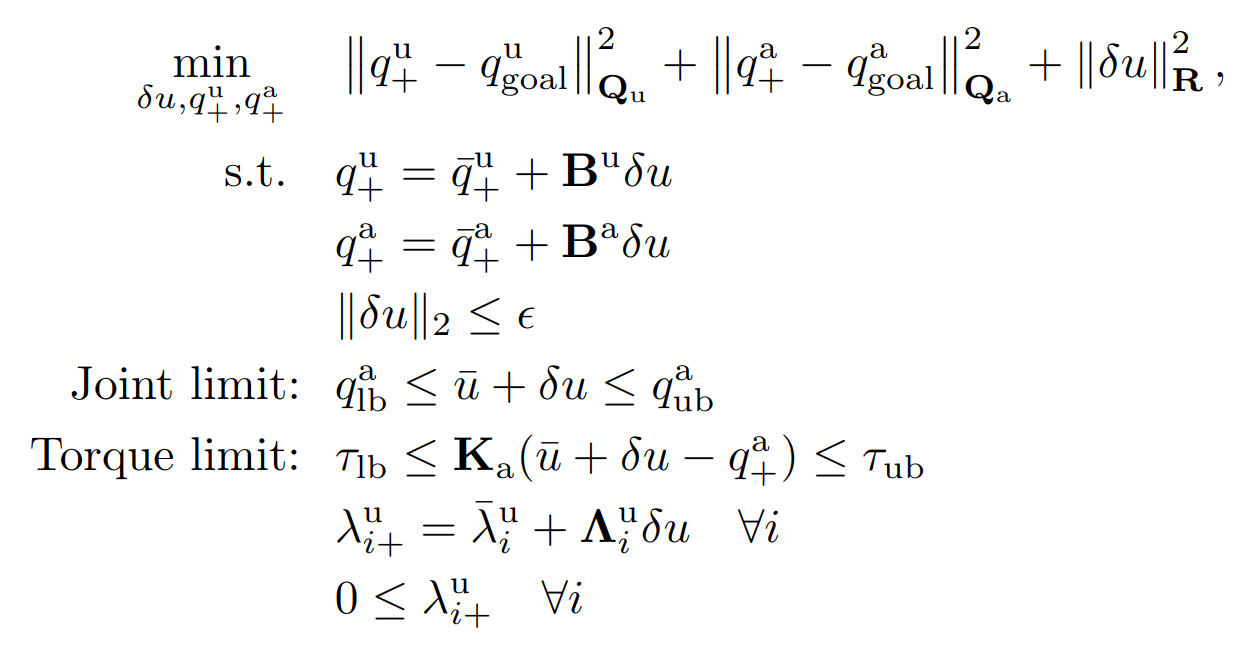

Taylor Approximation for Contact Dynamics

Dynamics Linearization

Contact Force Linearization

Contact Constraints

Input Limit

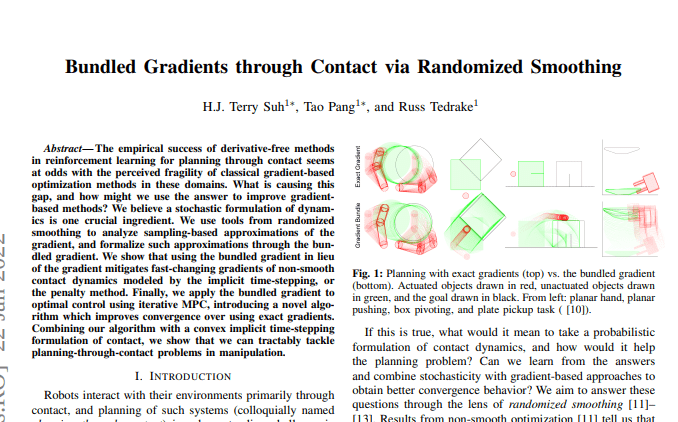

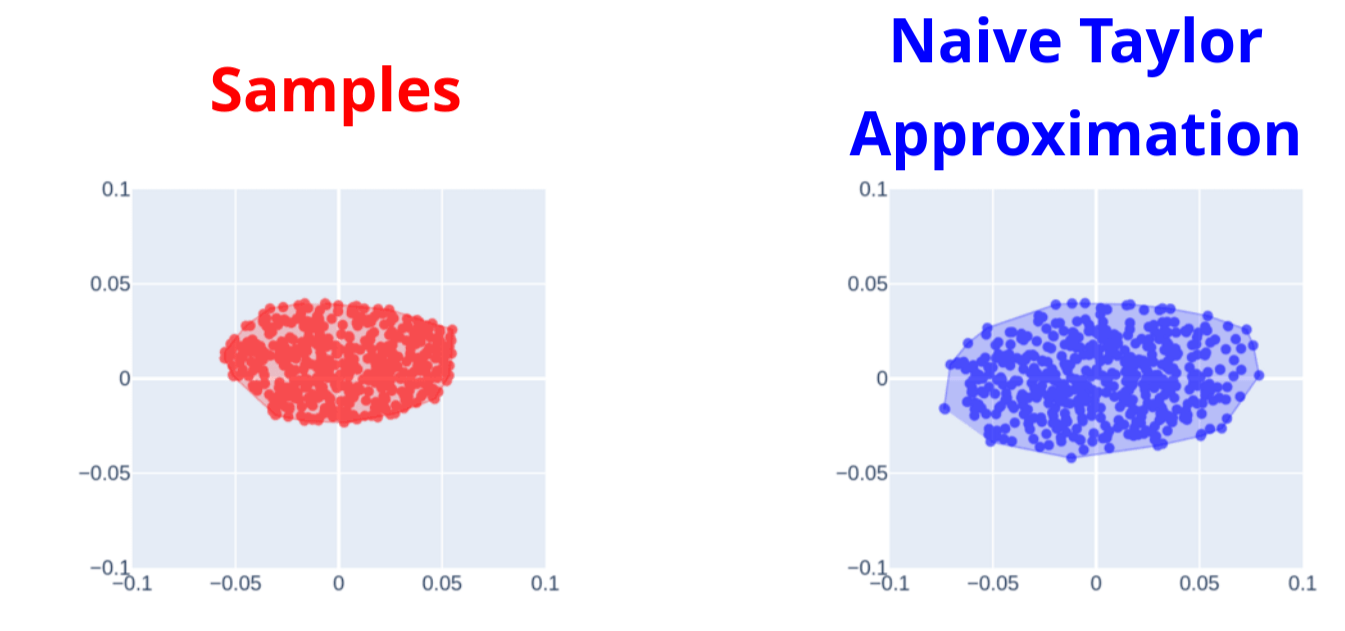

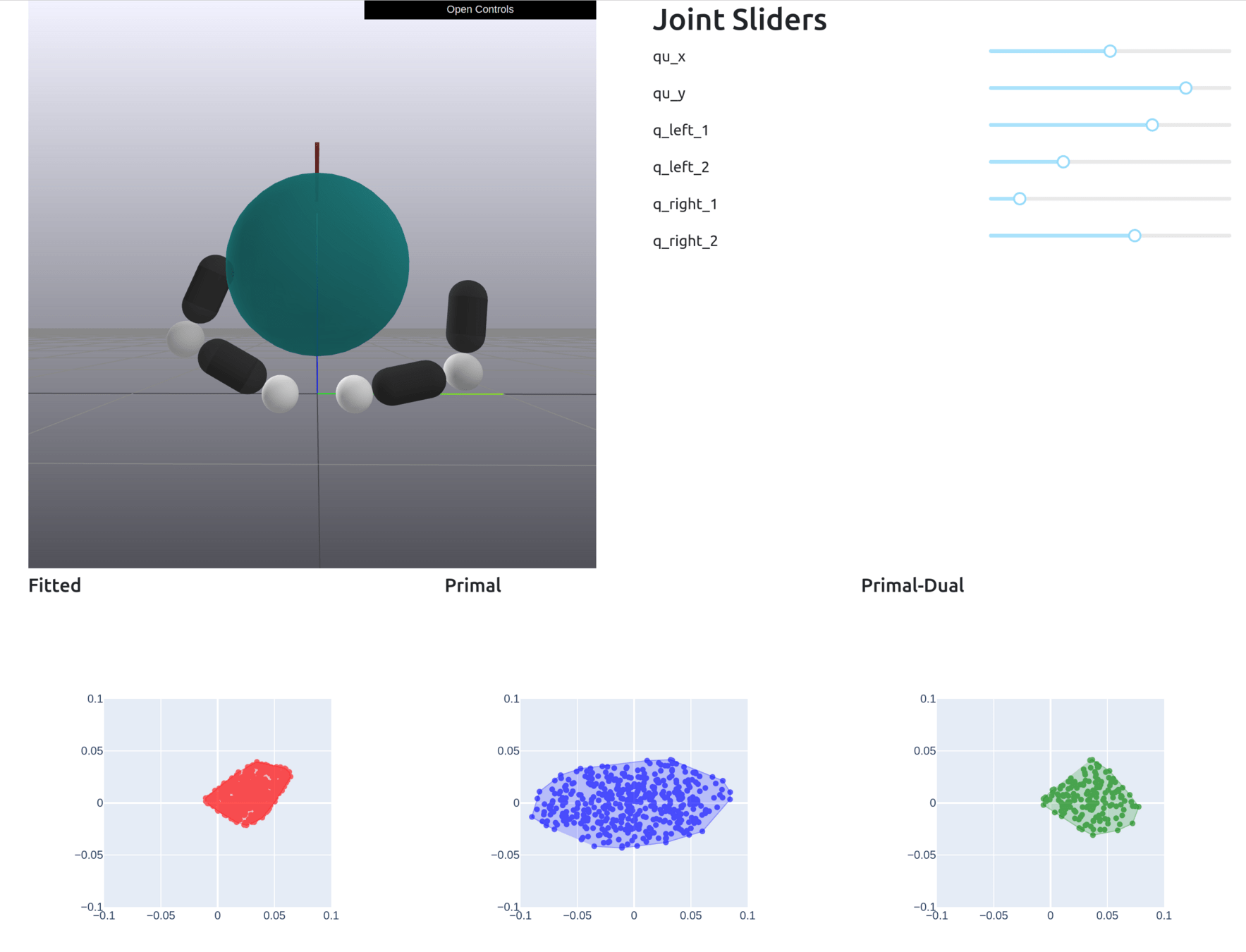

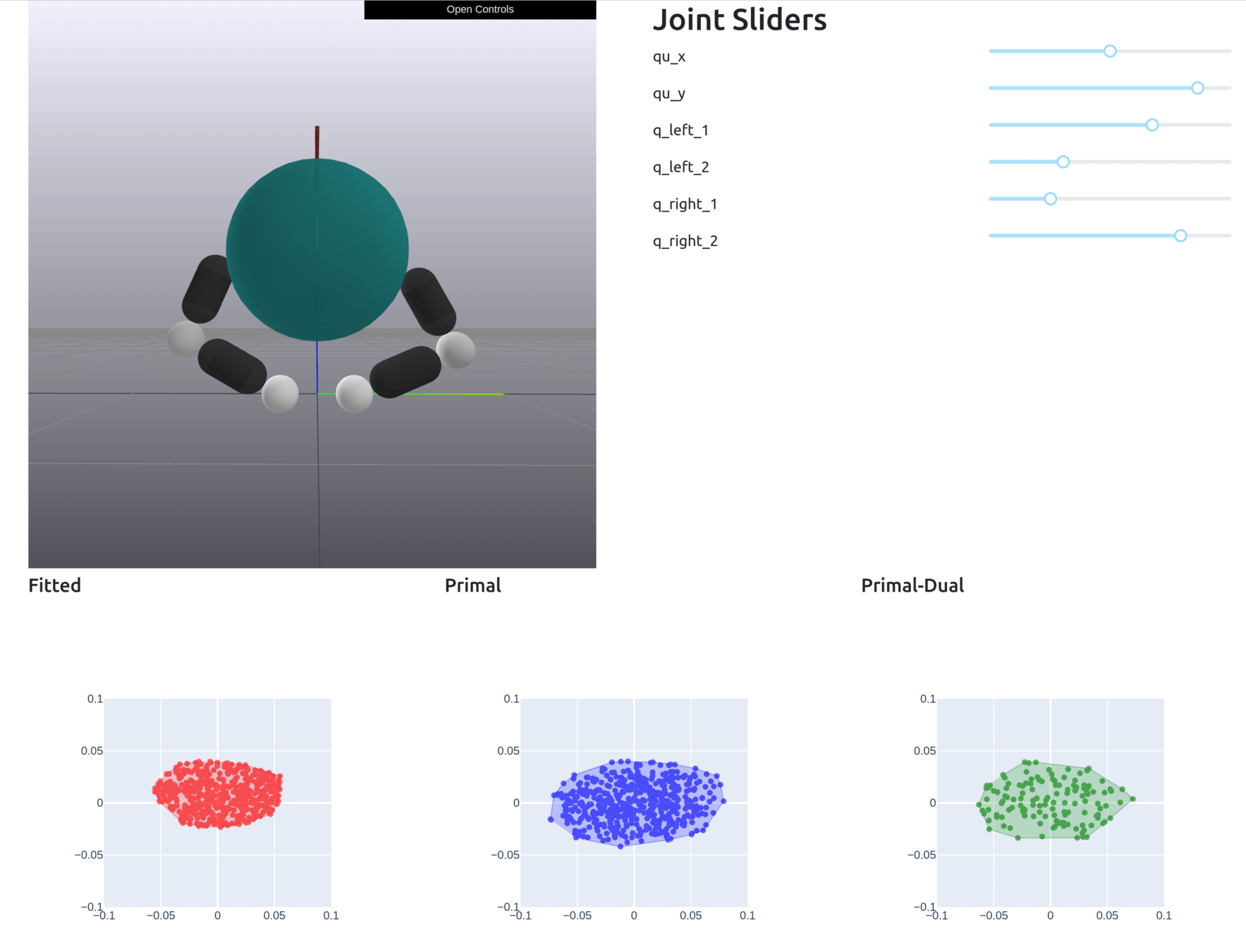

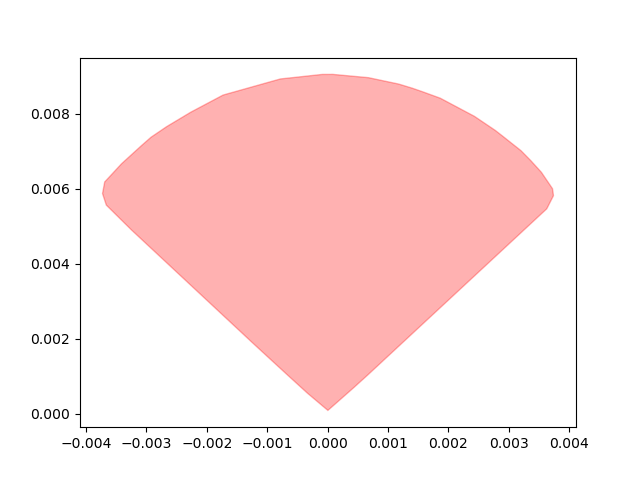

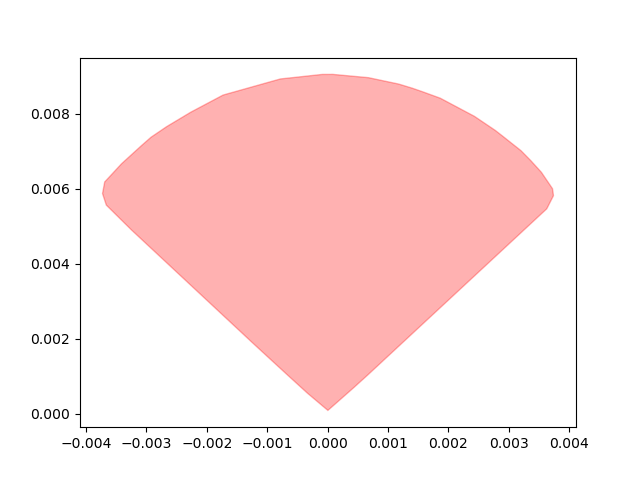

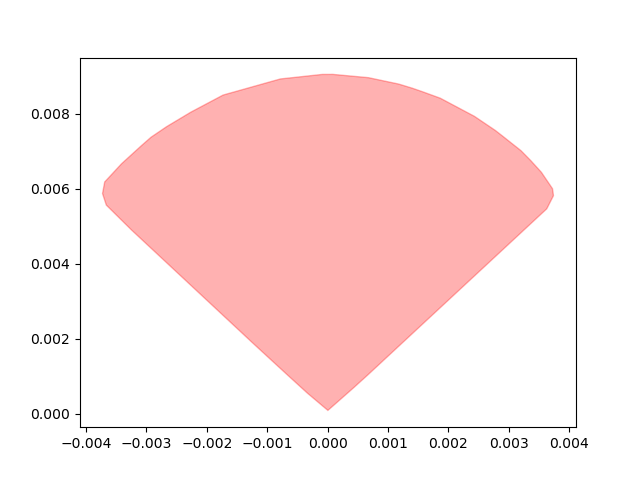

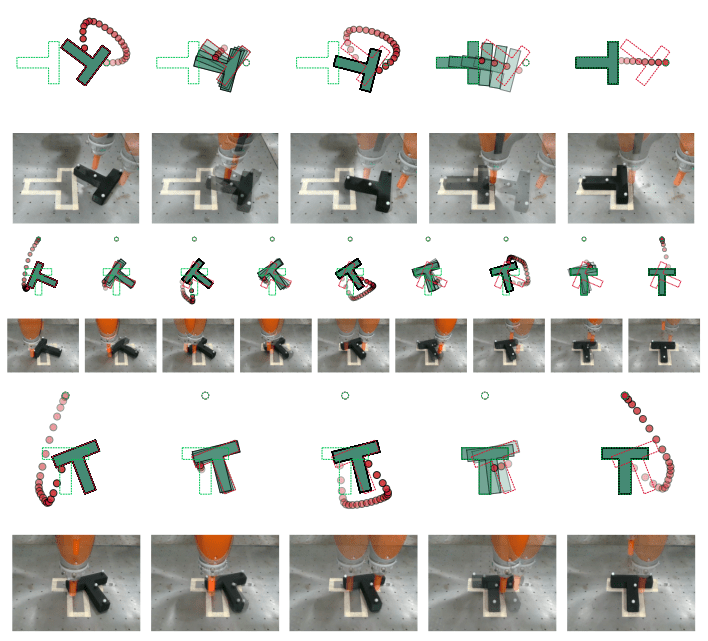

Motion Sets: Local Convex Approximation to Allowable Motion (1-step Reachable Set)
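
One plausible way to write this set down, assembling the four ingredients named on the preceding slide (dynamics linearization, contact force linearization, contact constraints, input limit). All notation here is an assumption for illustration: $f$ is the smoothed dynamics, $(\bar q, \bar u)$ the nominal state and input, $\bar\lambda_i$ the nominal contact forces, $\mathcal{K}_i$ the friction cone of contact $i$, and $\varepsilon$ a bound on the input perturbation. The slide's own equations are not recoverable from the text.

```latex
\mathcal{M}_\varepsilon(\bar q, \bar u) = \left\{ q^+ \;\middle|\;
\begin{aligned}
& q^+ = f(\bar q, \bar u) + \frac{\partial f}{\partial u}\,\delta u
  && \text{(dynamics linearization)} \\
& \lambda_i = \bar\lambda_i + \frac{\partial \lambda_i}{\partial u}\,\delta u
  && \text{(contact force linearization)} \\
& \lambda_i \in \mathcal{K}_i \quad \forall i
  && \text{(contact constraints)} \\
& \|\delta u\| \le \varepsilon
  && \text{(input limit)}
\end{aligned}
\right\}
```

Every condition is linear or conic in $\delta u$, so the set is convex, which is what makes it usable inside a small convex program.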

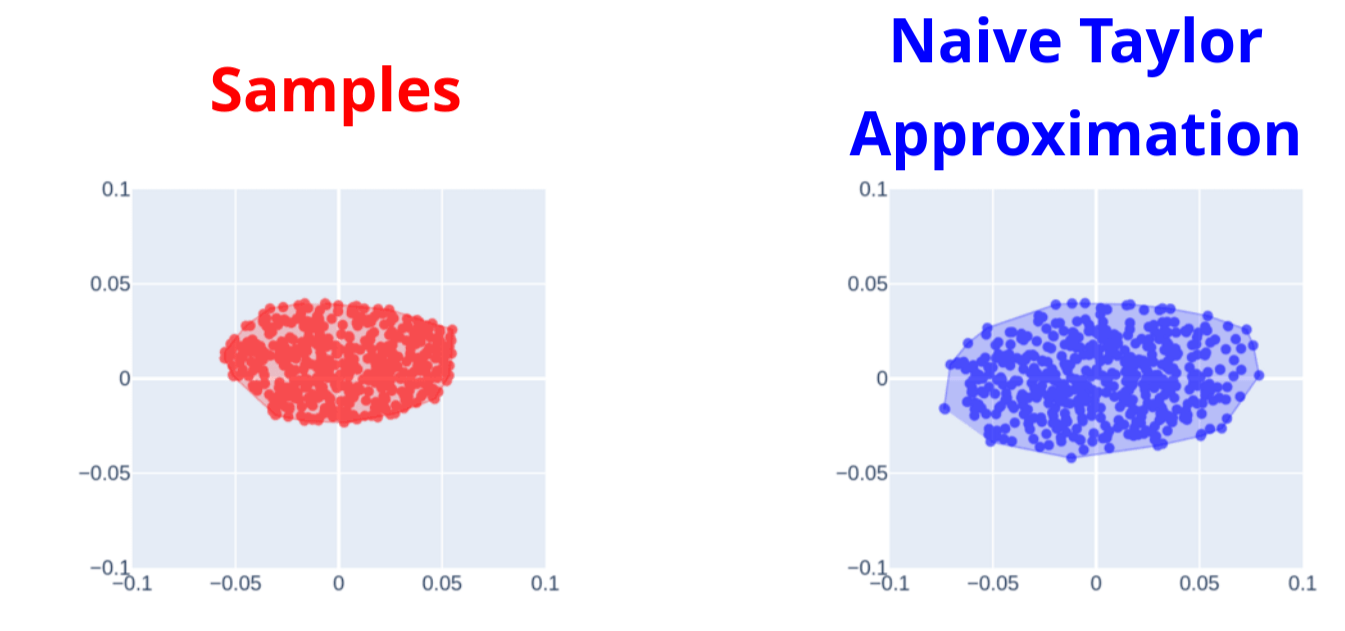

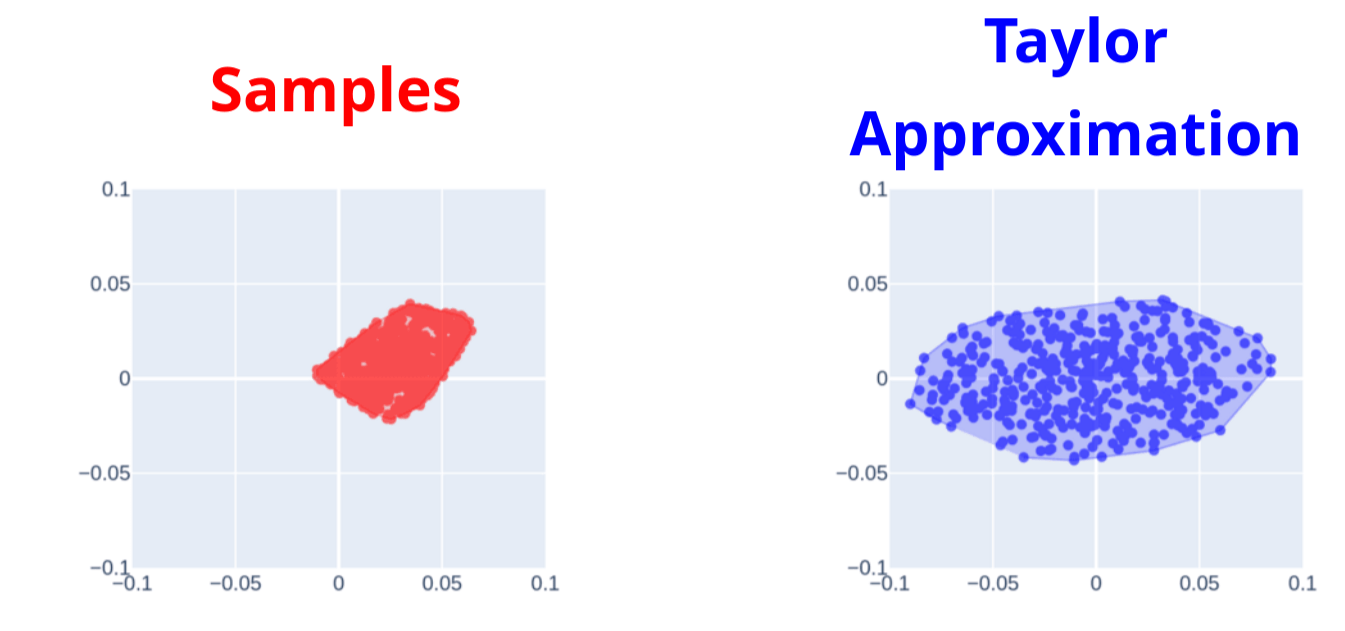

Contact-Aware Taylor Approximation

Naive Taylor Approximation

Samples

What does it mean to linearize contact dynamics?

What is the "right" Taylor approximation for contact dynamics?

Version 1. The "mathematically correct" Taylor approximation

Version 2. The "mathematically wrong but a bit more global" Taylor approximation

Version 3. Smooth Taylor approximation with contact constraints

- Connection to sensitivity analysis in convex optimization

- Connection to Linear Complementarity Systems (LCS)

- Connection to Wrench Sets from classical grasp theory

What is sensitivity analysis really?

Consider a simple QP; we want the gradient of the optimal solution w.r.t. the parameters,

and its KKT conditions

Differentiate the equalities w.r.t. q

Throw away inactive constraints and linsolve.

As we perturb q, the optimal solution changes to preserve the equality constraints in KKT.
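
A minimal sketch of "throw away inactive constraints and linsolve", assuming a hypothetical QP of the form min 0.5 x'Qx + q'x subject to a single inequality a'x <= b, with q as the parameter. The problem form, the data, and the function names are assumptions for this example. The KKT-based Jacobian is checked against finite differences.

```python
import numpy as np


def kkt_matrix(Q, a):
    """KKT matrix [[Q, a], [a', 0]] for one active constraint a'x = b."""
    n = Q.shape[0]
    return np.block([[Q, a.reshape(n, 1)], [a.reshape(1, n), np.zeros((1, 1))]])


def solve_qp(Q, q, a, b):
    """Solve min 0.5 x'Qx + q'x  s.t.  a'x <= b. Returns (x, lam, active)."""
    x = np.linalg.solve(Q, -q)  # unconstrained minimizer
    if a @ x <= b:
        return x, 0.0, False    # constraint inactive
    sol = np.linalg.solve(kkt_matrix(Q, a), np.concatenate([-q, [b]]))
    return sol[:-1], sol[-1], True


def dx_dq(Q, q, a, b):
    """Jacobian of x* w.r.t. q from the differentiated KKT equalities."""
    n = len(q)
    _, _, active = solve_qp(Q, q, a, b)
    if not active:
        return -np.linalg.inv(Q)  # inactive constraint is dropped entirely
    # Differentiating Qx + q + a*lam = 0 and a'x = b w.r.t. q gives
    # K [dx; dlam] = [-I; 0].
    rhs = np.vstack([-np.eye(n), np.zeros((1, n))])
    return np.linalg.solve(kkt_matrix(Q, a), rhs)[:n]


def dx_dq_finite_difference(Q, q, a, b, eps=1e-6):
    """Central finite-difference reference Jacobian."""
    n = len(q)
    J = np.zeros((n, n))
    for j in range(n):
        e = np.zeros(n)
        e[j] = eps
        J[:, j] = (solve_qp(Q, q + e, a, b)[0] - solve_qp(Q, q - e, a, b)[0]) / (2 * eps)
    return J


Q = np.array([[2.0, 0.0], [0.0, 1.0]])
a, b = np.array([1.0, 1.0]), 1.0
q_active = np.array([-4.0, -4.0])   # unconstrained minimizer violates a'x <= b
q_inactive = np.array([1.0, 1.0])   # unconstrained minimizer is feasible
print(dx_dq(Q, q_active, a, b))
print(dx_dq(Q, q_inactive, a, b))
```

In the active case the Jacobian satisfies a' (dx*/dq) = 0: perturbing q only moves the solution along the constraint, which is the sense in which the equality conditions are preserved.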

What is sensitivity analysis really?

Consider KKT conditions

and first-order Taylor approximations

Theorem: Equality Constraints are preserved up to first-order.

Limitations of Sensitivity Analysis

1. Throws away inactive constraints

2. Throws away feasibility constraints

Gradients are "correct" but too local!

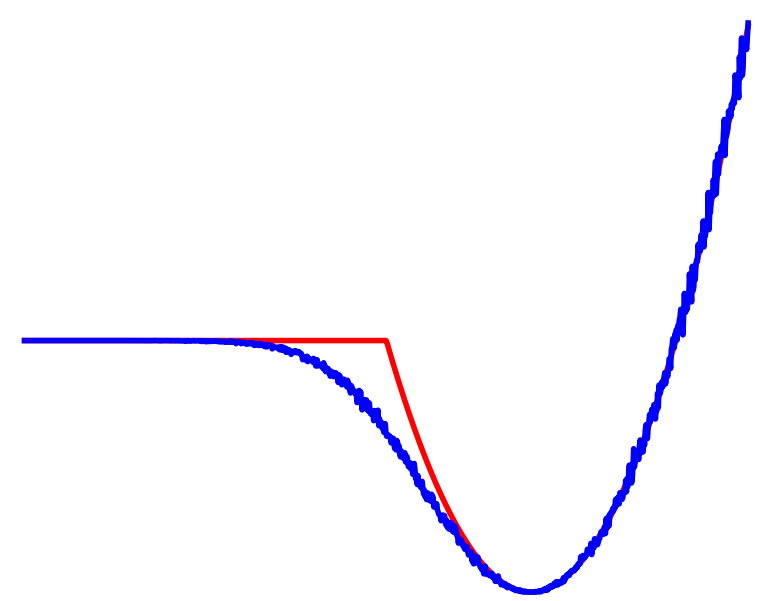

Remedy 1. Constraint Smoothing

By interior-point smoothing, you get slightly more information about inactive constraints.

Remedy 2. Handling Inequalities

Connection between gradients and "best linear model" still generalizes.

What is the best linear model that locally approximates x* as a function of q?

If we had no feasibility constraints, the answer would be the Taylor expansion

But not every direction of dq results in feasibility!

KKT Conditions

Remedy 2. Handling Inequalities

Linearize the Inequalities to limit directions dq.

KKT Conditions

Set of locally "admissible" dq.
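
A sketch of what this set could look like, assuming the QP has inequality constraints $Gx \le h$ with multipliers $\lambda \ge 0$ (the symbols $G$, $h$, and $\mathcal{A}$ are notation assumed here, not taken from the slides). The primal and dual solutions are replaced by their first-order models, and the KKT inequalities are imposed on those models.

```latex
\begin{aligned}
x^\star(q + \delta q) &\approx x^\star + \frac{\partial x^\star}{\partial q}\,\delta q,
\qquad
\lambda^\star(q + \delta q) \approx \lambda^\star + \frac{\partial \lambda^\star}{\partial q}\,\delta q, \\
\mathcal{A} &= \left\{ \delta q \;\middle|\;
G\left(x^\star + \frac{\partial x^\star}{\partial q}\,\delta q\right) \le h,
\;\;
\lambda^\star + \frac{\partial \lambda^\star}{\partial q}\,\delta q \ge 0 \right\}
\end{aligned}
```

Both conditions are linear in $\delta q$, so $\mathcal{A}$ is a polyhedron: the linear model is trusted only for perturbations that keep the linearized primal and dual variables feasible.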

Connection to LCS

Linear Complementarity System (LCS)

Michael's version of approximation for contact
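
For reference, a generic discrete-time LCS as it is commonly written in the literature; the matrix names are notation assumed here, and the slide's own equation is not recoverable from the text.

```latex
\begin{aligned}
x_{k+1} &= A x_k + B u_k + C \lambda_k + d, \\
0 \le \lambda_k &\perp D x_k + E u_k + F \lambda_k + c \ge 0
\end{aligned}
```

The complementarity condition states that each contact force $\lambda_k$ and its paired gap-like quantity are both nonnegative and that at least one of the two is zero.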

Perturbed LCS

Take the Equality Constraints, Linearize.

Throw away the second-order terms, and we're left with similar looking equations!

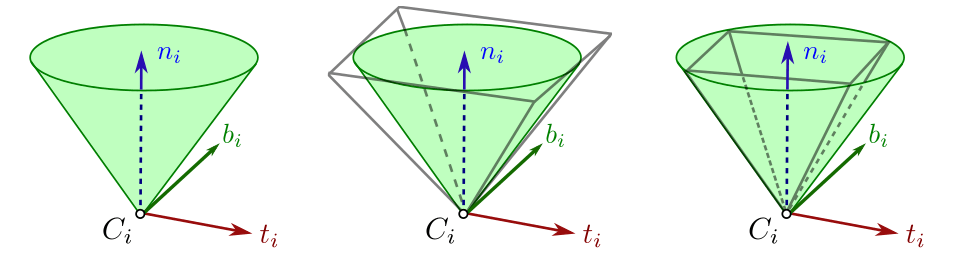

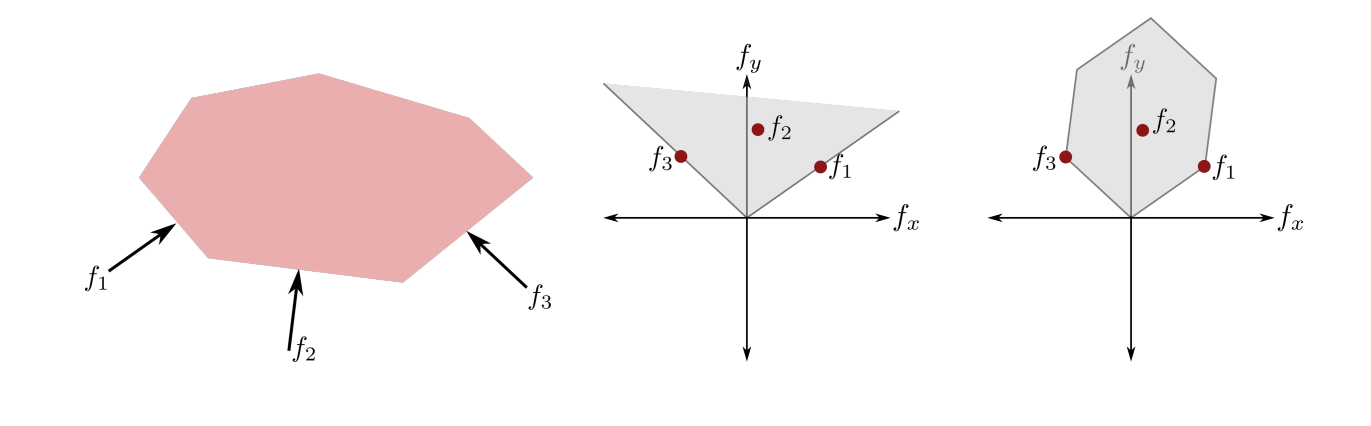

Wrench Set

Recall the "Wrench Set" from old grasping literature.

from Stanford CS237b

Wrench Set: Limitations

The Motion Set is a linear transform of the wrench set under quasistatic dynamics.

But the wrench set has limitations if we go beyond point fingers

1. Singular configurations of the manipulator

2. Self collisions within the manipulator

3. Joint Limits of the manipulator

4. Torque limits of the manipulator

The Achievable Wrench Set

Write down the forces as a function of the input instead

Recall the "Wrench Set" from old grasping literature.

Recall we also have linear models for these forces!

Let's compute the Jacobian sum

Transform it to generalized coordinates

To see the latter term, note that from sensitivity analysis,

Motion Sets as Wrench Sets

Motion Set

Wrench Set

Equivalent to Minkowski sum of rotated Lorentz cones for linearized contact forces

Can add other constraints as we see fit

Inverse Dynamics Control for Contact

Dynamics Linearization

Contact Force Linearization

Contact Constraints

Input Limit

Find best input within the motion set that minimizes distance to goal.
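
A toy sketch of this idea in 1D, assuming a hypothetical barrier-smoothed quasistatic model: a pusher at commanded position `u` sits to the left of a box at `x_box`, the box's next position minimizes 0.5*(x - x_box)^2 - kappa*log(x - u), and the contact force is `kappa / (x - u)`. The model, the parameter values, and the function names are assumptions for illustration, not the method from the slides. With a goal on the pusher's side of the box, the naive linearization predicts that the box can be pulled, while the contact-aware version keeps the linearized force nonnegative.

```python
import math


def smoothed_step(x_box, u, kappa):
    """Barrier-smoothed quasistatic step; returns next box position and force."""
    gap_cmd = x_box - u
    d = 0.5 * (gap_cmd + math.sqrt(gap_cmd ** 2 + 4.0 * kappa))  # gap x - u > 0
    return u + d, kappa / d


def linearize(x_box, u, kappa):
    """First-order model of position and force around the nominal input u."""
    x, lam = smoothed_step(x_box, u, kappa)
    s = kappa / (x - u) ** 2
    B = s / (1.0 + s)  # dx/du from the implicit function theorem
    D = B              # dlam/du, because lam = x - x_box in this model
    return x, lam, B, D


def greedy_input(x_box, u, x_goal, kappa, eps, contact_aware):
    """Minimize (x + B*du - x_goal)^2 over the allowed interval for du."""
    x, lam, B, D = linearize(x_box, u, kappa)
    du = (x_goal - x) / B          # unconstrained minimizer
    lo, hi = -eps, eps             # input limit |du| <= eps
    if contact_aware:
        lo = max(lo, -lam / D)     # contact constraint lam + D*du >= 0
    du = min(max(du, lo), hi)      # a 1D convex QP is solved by clipping
    return du, x + B * du


x_box, u, kappa, eps = 1.0, 0.9, 1e-2, 1.0
x_goal = 0.5  # goal is on the pusher's side: reaching it would require pulling
_, pred_naive = greedy_input(x_box, u, x_goal, kappa, eps, contact_aware=False)
_, pred_aware = greedy_input(x_box, u, x_goal, kappa, eps, contact_aware=True)
print("naive prediction:        ", pred_naive)
print("contact-aware prediction:", pred_aware)
```

The naive model predicts a box position left of `x_box`, which the pusher cannot physically produce; the contact-aware model stops at `x_box`, which is the pusher backing off until the force reaches zero.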

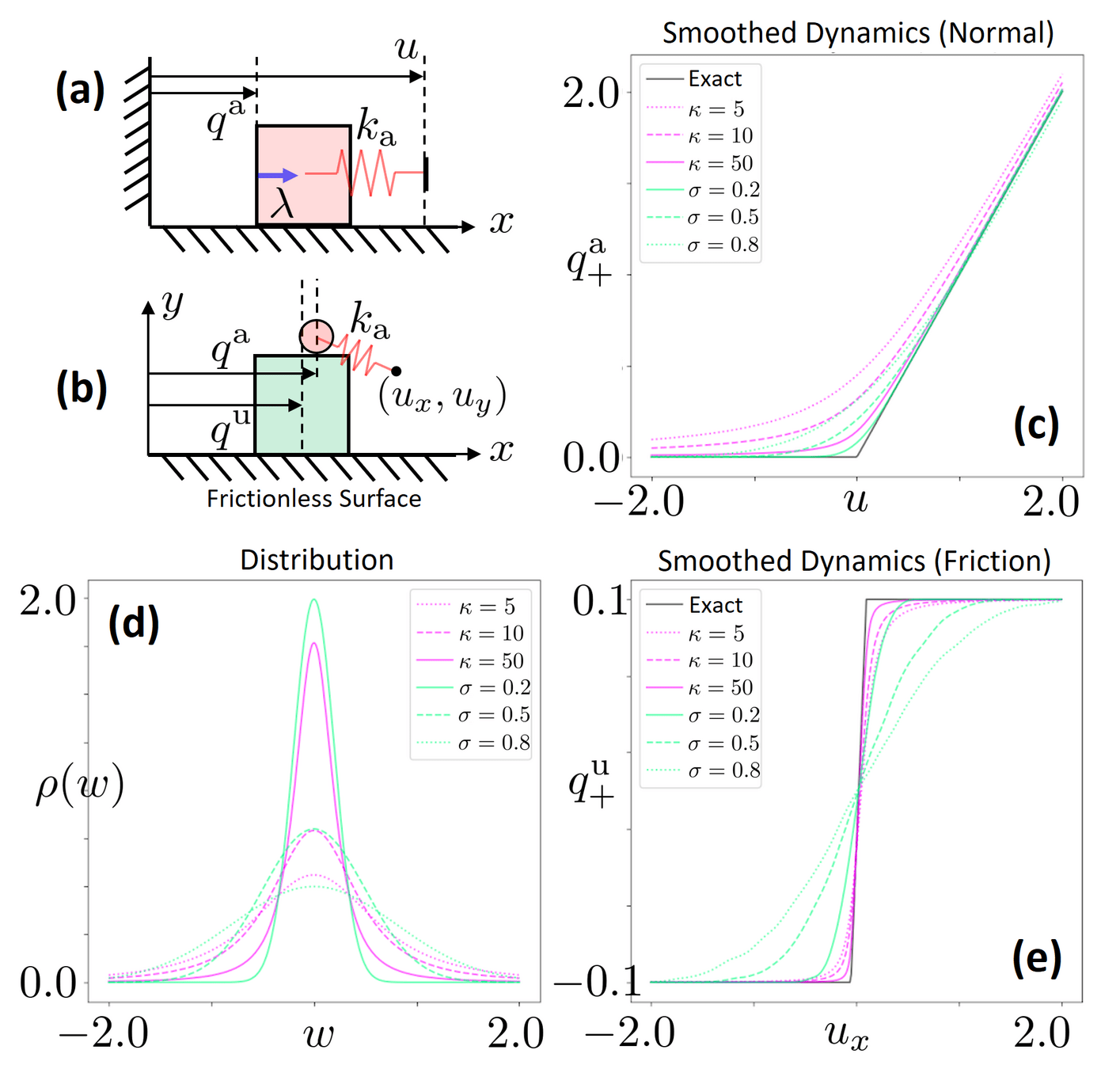

Gradient-Based Inverse Dynamics

Naive Linearization

Contact-Aware Linearization

Greedy Control Goes Surprisingly Far

Inverse Dynamics Performance

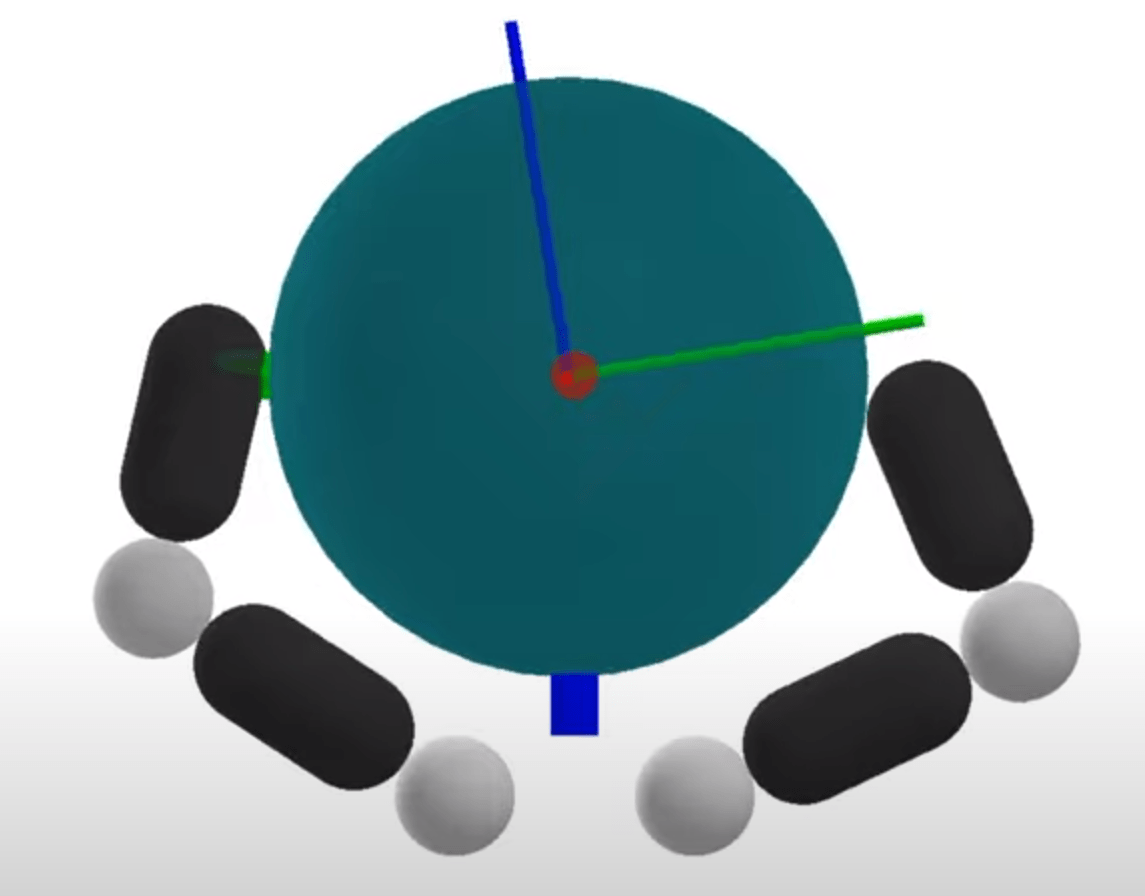

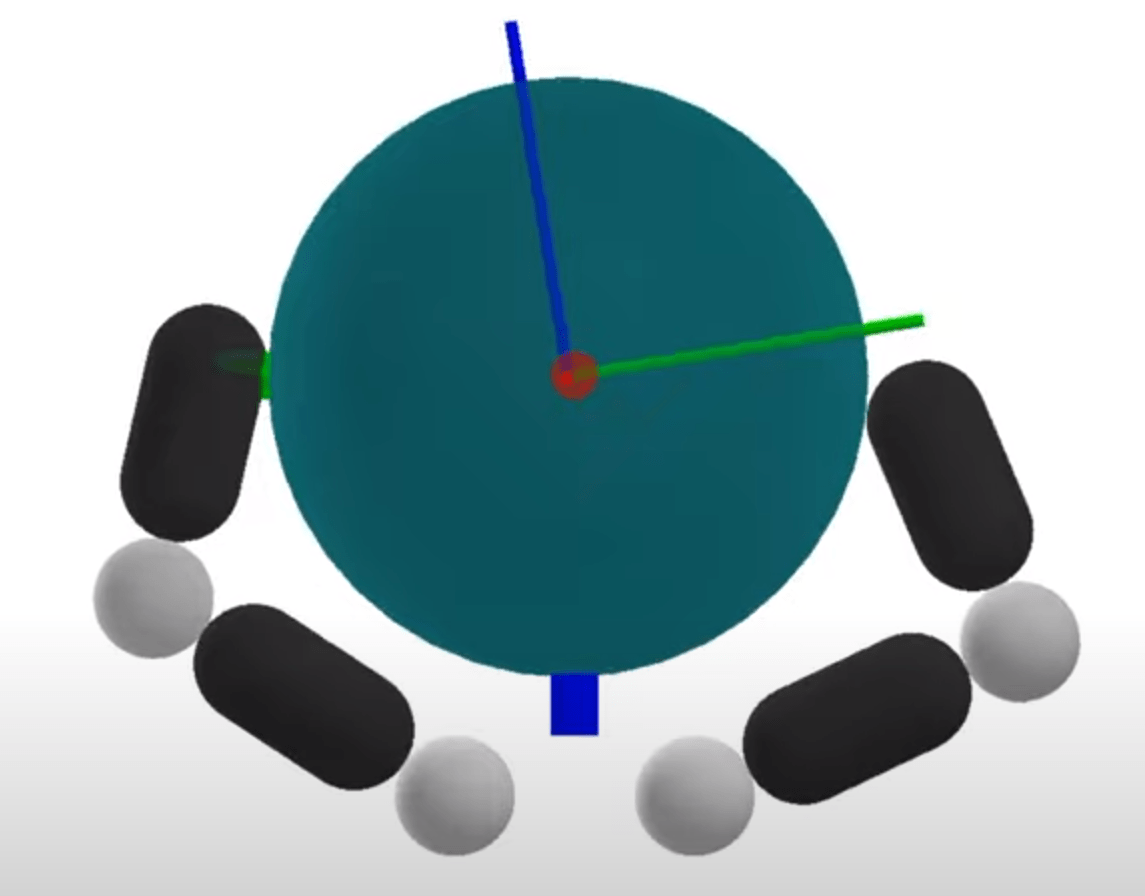

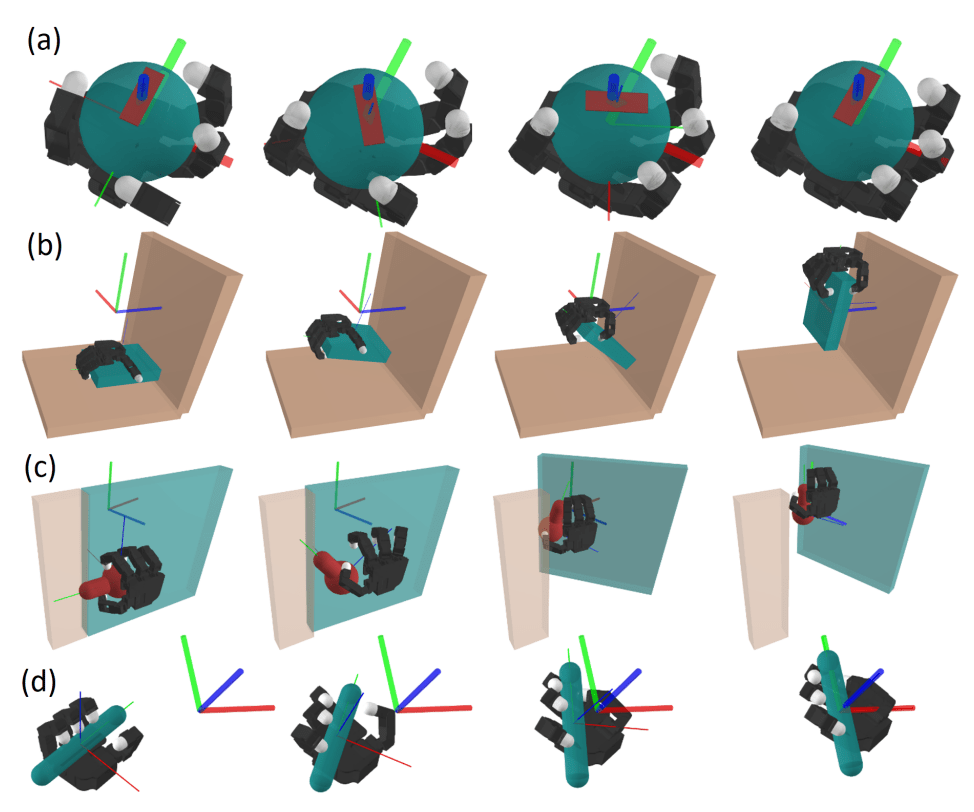

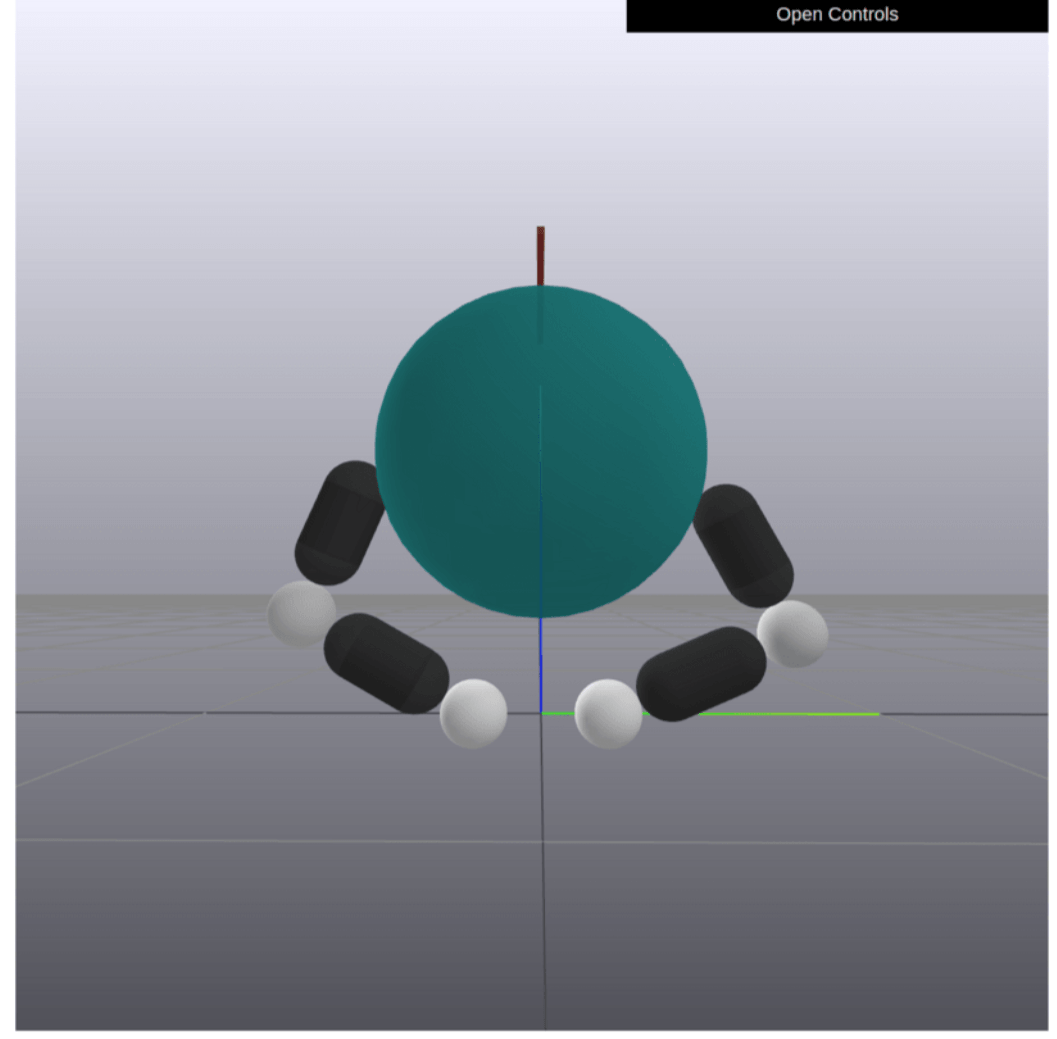

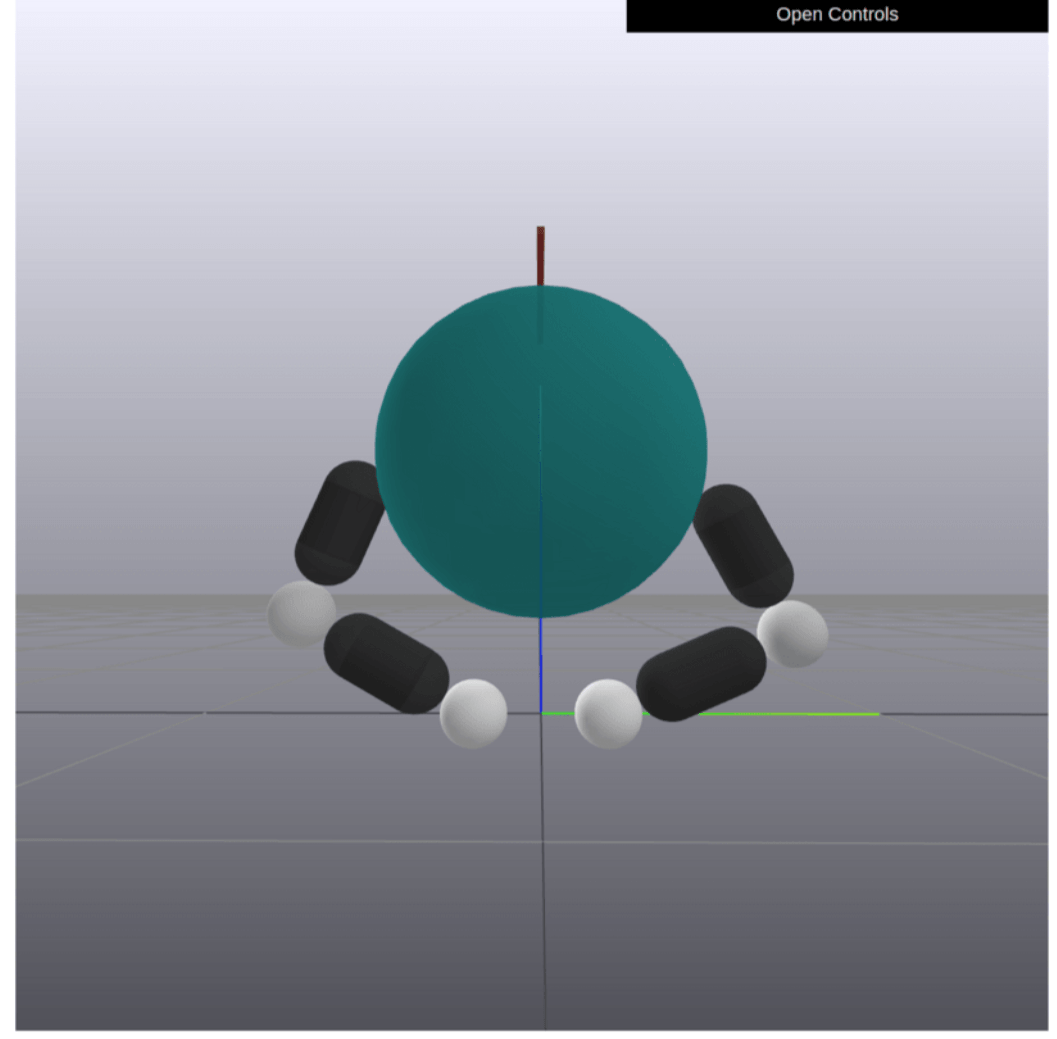

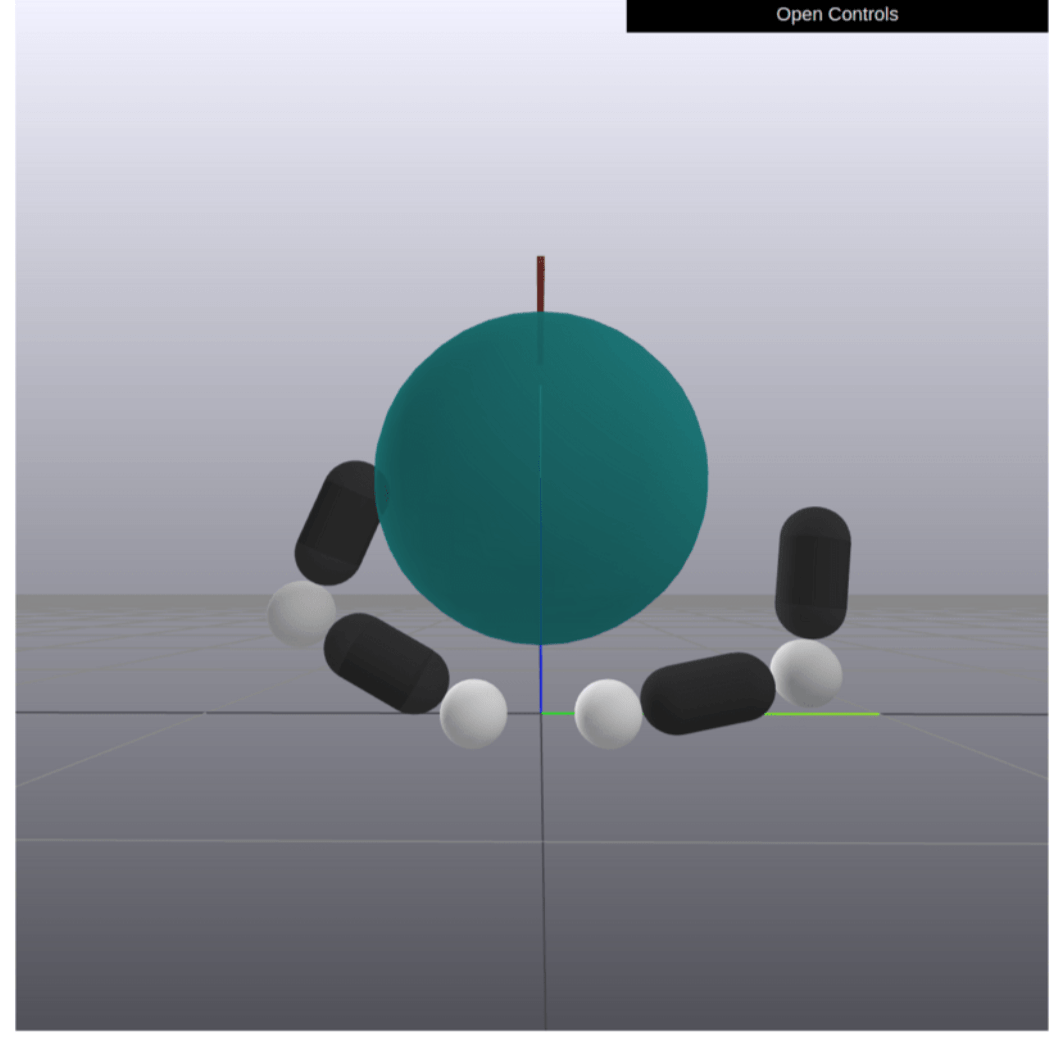

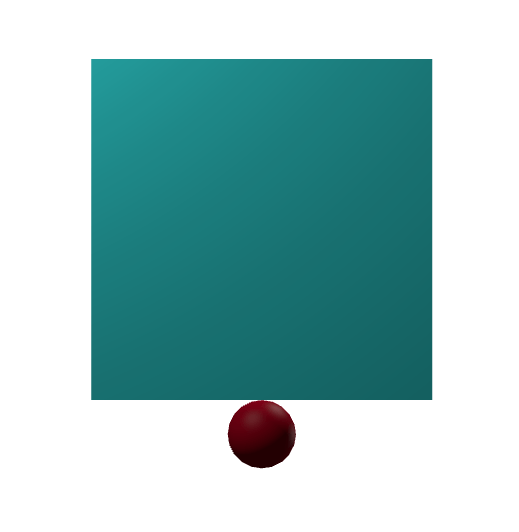

3D In-hand Reorientation

Dynamics Linearization

Contact Force Linearization

Contact Constraints

Input Limit

Hybrid Force-Velocity Control

Having Dual Linearization gives us control over forces.

Inverse Dynamics Performance

Zooming Out

What have we done here?

1. Highly effective local approximations to contact dynamics.

2. Contact-rich is a fake difficulty.

3. If a fancy method cannot beat a simple strategy (e.g. MJPC), the formulation is likely a bit off.

What is hard about manipulation?

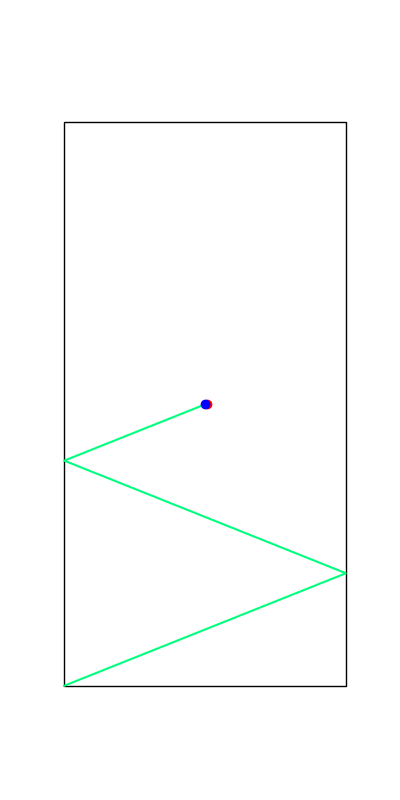

Can achieve local movements along the motion set.

But what if we want to move in the opposite direction?

Contact constraints force us to make a seemingly non-local decision in response to different goals / disturbances.

What is hard about manipulation?

This explains why we need drastically non-local behavior in order to "stabilize" contact sometimes.

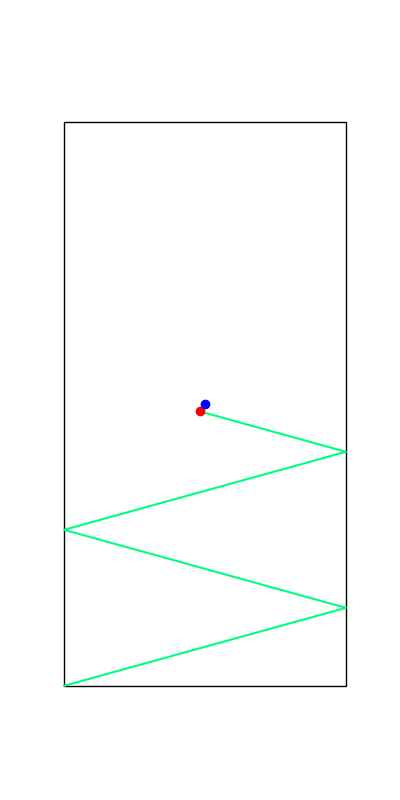

UltimateLocalControl++

I did it!!

Trivial Disturbance

Oh no..

What is hard about manipulation?

In slightly more well-conditioned problems, greedy can go far even with contact constraints.

What is hard about manipulation?

Attempting to achieve a 180 degree rotation goes out of bounds!

Joint limit constraints again introduce some discrete-looking behavior

Fundamental Limitations with Local Search

How do we push in this direction?

How do we rotate further in presence of joint limits?

Non-local movements are required to solve these problems

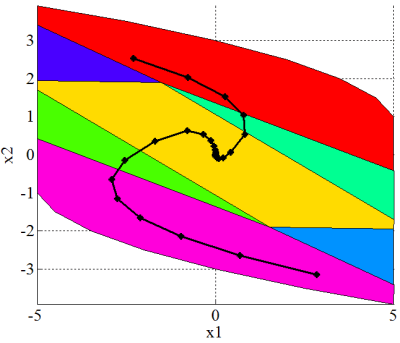

Discrete-Level Decisions from Constraints

We already know even trivial constraints lead to discreteness.

[MATLAB Multi-Parametric Toolbox]

https://github.com/dfki-ric-underactuated-lab/torque_limited_simple_pendulum

Torque-Limited Pendulum

Explicit MPC

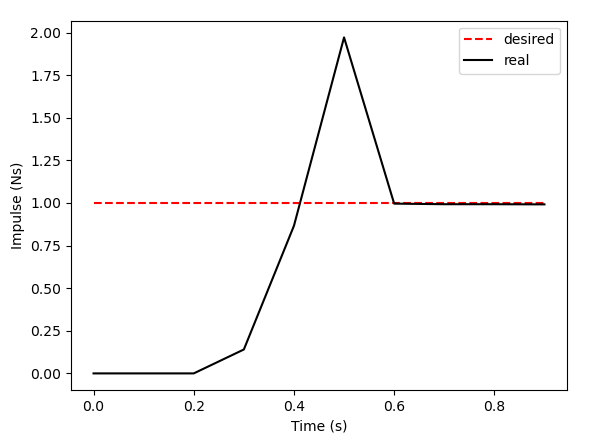

Limitations of Smoothing

Apply negative impulse to stand up.

Apply positive impulse to bounce on the wall.

Smooth all you want, can't hide the fact that these are discrete.

The Landscape of Contact-Rich Manipulation

Bemporad, "Explicit Model Predictive Control", 2013

But not all constraints create discrete behavior.

Contact-rich manipulation is not easy, but it is not much harder than contact-poor manipulation

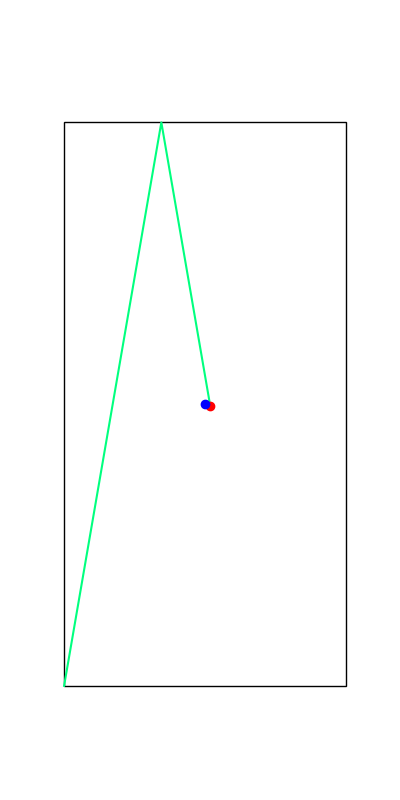

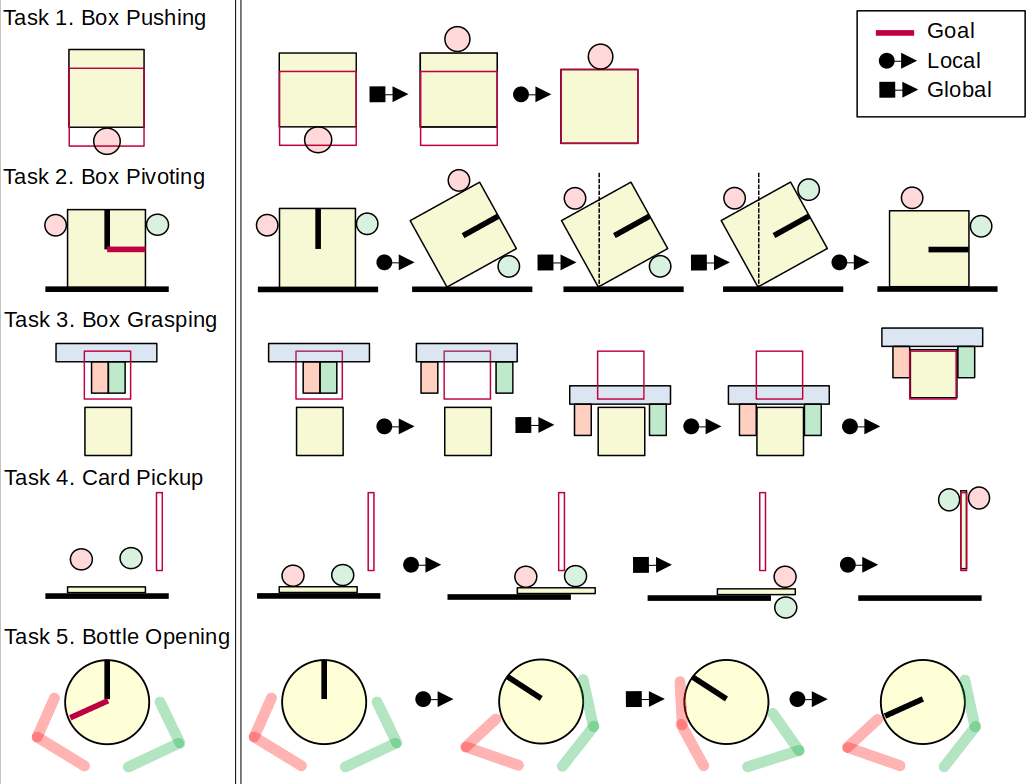

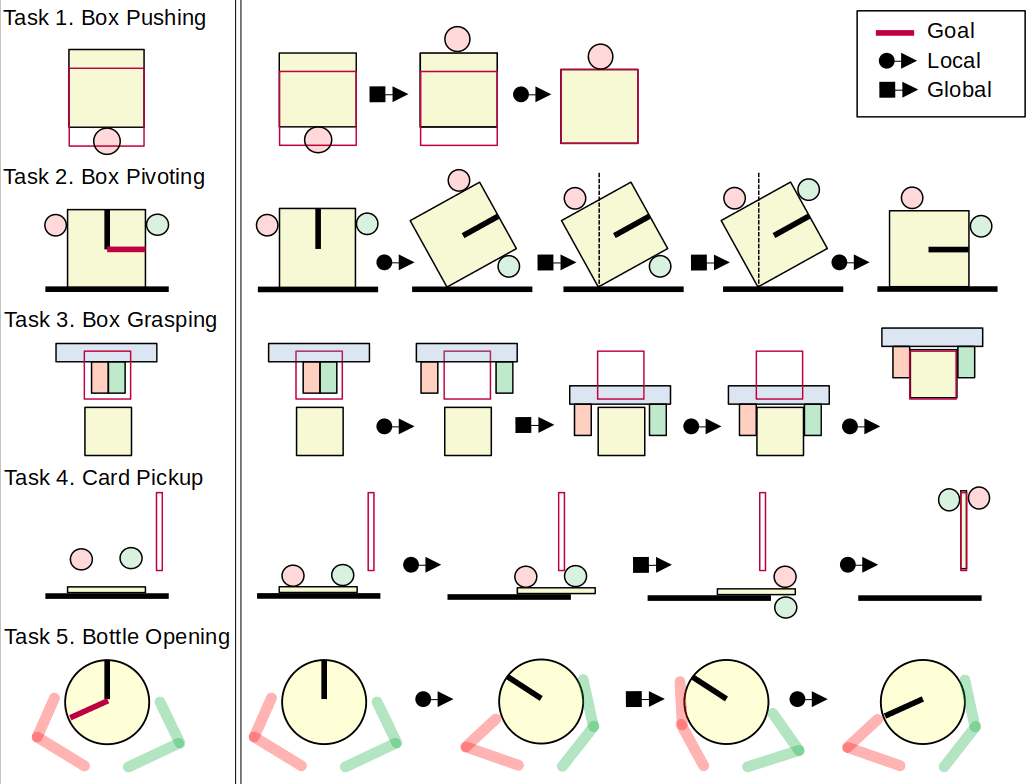

Motivating the Hybrid Action Space

Local Control

(Inverse Dynamics)

Actuator Placement if feasible

What if we gave the robot a decision to move to a different configuration?

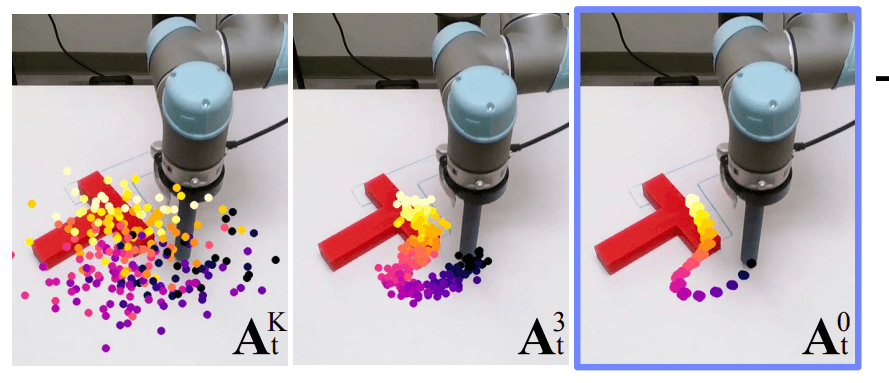

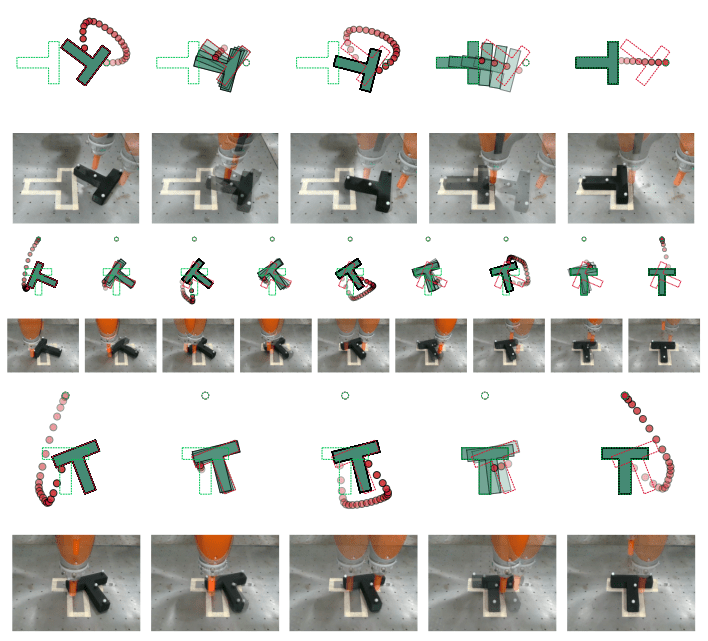

T-Pusher Problem

[GCMMAPT 2024]

[CXFCDBTS 2024]

Motion Sets help decide where we should make contact

Some lessons

The Sim2Real Gap is real and it's brutal.

Do we....

1. Domain randomize?

2. Improve our models?

3. Fix our hardware to be more friendly?

4. Design strategies that will transfer better?

The Fundamental Difficulty of Manipulation

How do we know we need to open the gripper before we grasp the object?

We need to have knowledge that grasping the box is beneficial

The Fundamental Difficulty of Manipulation

D. Povinelli, "Folk Physics for Apes: The Chimpanzee's Theory of How the World Works"

Collection of contact-poor, yet significantly challenging problems in manipulation

Pure online forward search struggles quite a lot

Takeaways.

1. Contact-Rich Manipulation really should not be harder than Contact-Poor manipulation.

2. Contact-Poor Manipulation is still hard.

3. Discrete logical-looking decisions induced by contact / embodiment constraints make manipulation difficult.

Group Meeting

By Terry Suh
