Randomized Smoothing for Policy / Trajectory Optimization

Terry

Motivation

Classically

The tools we use for robotics / optimal control rely heavily on analytical gradients.

- LQR Derivation

- Trajectory Optimization (DIRCOL, IPOPT, SNOPT, etc.)

- Stability Analysis (Lyapunov)

Recent Trends

Zero-order methods are on the rise in the RL community.

- CEM / MPPI in Model-based RL

- Policy Gradient Theorem / TRPO / PPO

The (Inevitable) Rebound

Resurgence of analytic gradients.

- Policy Gradient on LQR (Fazel)

- Differentiable Simulation

- "Differentiable RL"


As a "roboticist"....

I've been using analytical gradients forever! Why should I listen to the RL people and use zero-order methods?

(In fact, coming from a dynamics / nonlinear control background, it took me a long time to understand their probabilistic language....)

Analytic Gradients vs. Zero-order Methods

"Rule of thumb" intuitions.

If we have the actual gradient, why don't we use it? It must be useful somewhere. - Pang, Hongkai, Twan, Emo Todorov, Justin Carpentier....

Convergence Rate / Sample Efficiency

- As a rule of thumb, we might expect better convergence properties using analytic gradients.

- Zero-order methods require samples to estimate the gradient, and thus require more computation (parallelization removes some of this limitation).

- Often, zero-order methods suffer from high-variance gradient estimates (which, again, affects convergence properties).

Nonconvexity

- Zero-order optimization can potentially address non-convexity

- Nonsmooth, but not combinatorial, optimization (contact mechanics)

- True combinatorial optimization

Problem Specification

- Analytical gradients require the problem to be "differentiable", which is sometimes a strong requirement.

- There may be cases in optimal control where it's much easier to specify some non-differentiable cost.

- Reward supervision (1 if success, 0 if failure)

Discontinuity of the Optimal Control Problem

Discontinuity doesn't just arise in contact mechanics.

The ability to use discontinuities: quite powerful!

Wide vs. Sharp Minima. Finite Differences.


Analytic Policy Gradient for Deterministic Systems

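Setting: Deterministic dynamics $x_{t+1} = f(x_t, u_t)$ and deterministic policies $u_t = \pi_\theta(x_t)$. Define the value function:

$$V(x_0; \theta) = \sum_{t=0}^{T-1} c(x_t, u_t)$$

Policy Search Objective:

$$\min_\theta \; \mathbb{E}_{x_0 \sim \rho}\big[V(x_0; \theta)\big]$$

where $\rho$ is some distribution over initial states of the problem.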

Some remarks on this problem:

- The standard proof of the policy gradient theorem does not carry over directly to the deterministic setting, but extensions exist.

- For the LQR problem with linear dynamics and linear policies, analytic gradient descent converges to the optimal policy (Fazel), starting from a stabilizing policy.

Gradient Sampling Solution to Policy Optimization

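Given access to the following gradients of the dynamics $f$, the policy $\pi_\theta$, and the per-step cost $c$:

$$\frac{\partial f}{\partial x}, \quad \frac{\partial f}{\partial u}, \quad \frac{\partial \pi_\theta}{\partial x}, \quad \frac{\partial \pi_\theta}{\partial \theta}, \quad \frac{\partial c}{\partial x}, \quad \frac{\partial c}{\partial u}$$

one can use the chain rule to obtain the analytic gradient of the value function $V(x_0; \theta) = \sum_{t=0}^{T-1} c(x_t, u_t)$, which can be computed efficiently using autodiff on the rollout:

$$\frac{dV}{d\theta} = \sum_{t=0}^{T-1} \left( \frac{\partial c}{\partial x_t} \frac{dx_t}{d\theta} + \frac{\partial c}{\partial u_t} \frac{du_t}{d\theta} \right), \quad \frac{du_t}{d\theta} = \frac{\partial \pi_\theta}{\partial x_t} \frac{dx_t}{d\theta} + \frac{\partial \pi_\theta}{\partial \theta}, \quad \frac{dx_{t+1}}{d\theta} = \frac{\partial f}{\partial x_t} \frac{dx_t}{d\theta} + \frac{\partial f}{\partial u_t} \frac{du_t}{d\theta}, \quad \frac{dx_0}{d\theta} = 0$$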

Conceivably, this can be used to update the parameters of the policy.

Policy Gradient Sampling Algorithm

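Initialize some parameter estimate $\theta$.

While not converged:

1. Sample initial states $x_0^1, \dots, x_0^N$ from the initial-state distribution.

2. Compute the analytical gradient $\nabla_\theta V(x_0^i; \theta)$ of the value function from each sampled state.

3. Average the sampled gradients to obtain the gradient of the expected performance:

$$\nabla_\theta \, \mathbb{E}_{x_0}\big[V(x_0; \theta)\big] \approx \frac{1}{N} \sum_{i=1}^{N} \nabla_\theta V(x_0^i; \theta)$$

4. Update the parameters using gradient descent / Gauss-Newton.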
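The algorithm above can be sketched on a hypothetical scalar problem (not from the slides): linear dynamics $x_{t+1} = a x_t + b u_t$, a linear policy $u_t = -k x_t$ with $\theta = k$, quadratic cost, and $x_0 \sim \mathrm{Uniform}(-1, 1)$. The chain rule is propagated forward by hand, which is what forward-mode autodiff would compute on the rollout; all constants and function names are illustrative assumptions.

```python
import random

# Hypothetical scalar instance (not from the slides): x_{t+1} = A*x_t + B*u_t,
# linear policy u_t = -k*x_t (theta = k), cost sum_t Q*x_t^2 + R*u_t^2 over T steps.
A, B, Q, R, T = 1.1, 0.5, 1.0, 0.1, 20


def value_and_grad(x0, k):
    """Roll out the closed loop from x0; return V(x0; k) and dV/dk.

    The sensitivity dx_t/dk is propagated forward with the chain rule, which is
    what forward-mode autodiff would compute on the same rollout.
    """
    x, dx = x0, 0.0  # dx_0/dk = 0: the initial state does not depend on k
    value, grad = 0.0, 0.0
    for _ in range(T):
        u = -k * x
        du = -x - k * dx  # du/dk = d(pi)/dk + d(pi)/dx * dx/dk
        value += Q * x * x + R * u * u
        grad += 2 * Q * x * dx + 2 * R * u * du
        x, dx = A * x + B * u, A * dx + B * du  # dx_{t+1}/dk = f_x*dx/dk + f_u*du/dk
    return value, grad


def policy_gradient_sampling(k=0.5, iters=200, n_samples=32, lr=0.1, seed=0):
    """Sample x0 ~ Uniform(-1, 1), average the analytic gradients, take a descent step."""
    rng = random.Random(seed)
    for _ in range(iters):
        x0s = [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]
        avg_grad = sum(value_and_grad(x0, k)[1] for x0 in x0s) / n_samples
        k -= lr * avg_grad
    return k
```

In this smooth toy problem, averaging sampled analytic gradients behaves as intended: starting from $k = 0.5$, the gain settles at a stabilizing value (roughly 1.7 for these constants) with a much lower cost.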

Delta Strikes Again: Discontinuous Value Functions

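Recall the following theorem: for discontinuous functions, the gradient of the expectation is not, in general, the expectation of the gradient:

$$\nabla_\theta \, \mathbb{E}_{w}\big[f(\theta + w)\big] \neq \mathbb{E}_{w}\big[\nabla_\theta f(\theta + w)\big]$$

(Differentiating a jump produces a Dirac delta, which sampled pointwise gradients never see.)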

We've previously looked at this in the context of contact dynamics, but the policy search suffers from similar problems.

But unlike contact dynamics (non-smooth but mostly continuous), the value function may truly suffer from many discontinuities.
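A small numerical check of this statement, using a unit step $f(x) = \mathbb{1}[x > 0]$ as a stand-in for a discontinuous value function and Gaussian noise $w \sim \mathcal{N}(0, \sigma^2)$ (both assumptions for illustration). The sampled pointwise gradients are zero almost everywhere, so their average is exactly 0, while the smoothed objective $\mathbb{E}[f(x+w)] = \Phi(x/\sigma)$ has gradient equal to the Gaussian density at $x$; a zero-order estimate built only from function values recovers it.

```python
import math
import random


def heaviside(x):
    """Discontinuous stand-in for a value function: jumps from 0 to 1 at x = 0."""
    return 1.0 if x > 0 else 0.0


def heaviside_grad(x):
    """Pointwise derivative: 0 wherever it exists (everywhere except x = 0)."""
    return 0.0


def gaussian_pdf(x, sigma):
    return math.exp(-0.5 * (x / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))


def compare(x=0.3, sigma=0.5, n=20000, seed=0):
    rng = random.Random(seed)
    ws = [rng.gauss(0.0, sigma) for _ in range(n)]
    # E[grad f(x + w)]: the average of sampled pointwise gradients.
    avg_of_grads = sum(heaviside_grad(x + w) for w in ws) / n
    # grad E[f(x + w)]: E[f(x + w)] = Phi(x / sigma), whose derivative is the Gaussian pdf at x.
    grad_of_avg = gaussian_pdf(x, sigma)
    # Zero-order estimate of grad E[f(x + w)] that only uses function values.
    zero_order = sum((heaviside(x + w) - heaviside(x)) * w for w in ws) / (n * sigma ** 2)
    return avg_of_grads, grad_of_avg, zero_order
```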

A meaningful question to ask: for which class of specifications / problems do we have discontinuous value functions?

- Could it appear for smooth systems in the presence of constraints?

- Viscosity solutions to the HJB equation: usually nonsmooth, but not quite discontinuous

- Torque-limited pendulum (courtesy of Jack)

- Non-smoothness / Bifurcation in Dynamics

- Discontinuities in Cost

Policy Gradient Sampling for Stochastic Systems

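Simple extension by taking an expected value over stochastic dynamics $x_{t+1} = f(x_t, u_t, w_t)$ and stochastic policies $u_t = \pi_\theta(x_t, \epsilon_t)$:

$$\min_\theta \; \mathbb{E}_{x_0,\, w_{0:T-1},\, \epsilon_{0:T-1}}\big[V(x_0, w_{0:T-1}, \epsilon_{0:T-1}; \theta)\big]$$

Note that the expectation now includes:

- Randomness of the dynamics

- Randomness of the policy

- Randomness of the initial condition.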

Policy Gradient Sampling Algorithm (Stochastic Systems)

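Initialize some parameter estimate $\theta$.

While not converged:

1. Sample initial states together with realizations of the dynamics (plant) noise and the policy noise.

2. Compute the analytical gradient of the value function from each sampled state, with its noise realization held fixed.

3. Average the sampled gradients to obtain the gradient of the expected performance:

$$\nabla_\theta \, \mathbb{E}\big[V\big] \approx \frac{1}{N} \sum_{i=1}^{N} \nabla_\theta V^{(i)}(\theta)$$

where $V^{(i)}$ is the value function of the $i$-th sampled initial state and noise realization.

4. Update the parameters using gradient descent / Gauss-Newton.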

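A trivial extension of the analytic gradient can be made by sampling over the noise in the stochastic system: once the noise realizations are fixed in advance, each sampled value function is deterministic, so the analytic gradient from the deterministic case applies to each sample.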
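A minimal sketch of the stochastic variant on a hypothetical scalar system with additive dynamics noise (policy noise could be handled the same way; all constants and names are assumptions). Each (initial state, noise sequence) pair is drawn once, after which the rollout is deterministic in $k$ and is differentiated exactly as in the deterministic case. Reusing the same seed reproduces the same samples, so the averaged analytic gradient matches a finite difference of the averaged value.

```python
import random

# Hypothetical scalar instance (not from the slides): x_{t+1} = A*x_t + B*u_t + w_t,
# linear policy u_t = -k*x_t, cost sum_t Q*x_t^2 + R*u_t^2. Noise is additive.
A, B, Q, R, T = 1.1, 0.5, 1.0, 0.1, 20


def value_and_grad(x0, noise, k):
    """With the noise sequence fixed in advance, the rollout is deterministic in k."""
    x, dx = x0, 0.0
    value, grad = 0.0, 0.0
    for w in noise:
        u = -k * x
        du = -x - k * dx
        value += Q * x * x + R * u * u
        grad += 2 * Q * x * dx + 2 * R * u * du
        # Additive noise shifts the state but does not depend on k, so it drops out of dx.
        x, dx = A * x + B * u + w, A * dx + B * du
    return value, grad


def sampled_objective(k, n_samples=64, sigma_w=0.1, seed=0):
    """Average value and analytic gradient over sampled (x0, noise sequence) pairs."""
    rng = random.Random(seed)
    total_v, total_g = 0.0, 0.0
    for _ in range(n_samples):
        x0 = rng.uniform(-1.0, 1.0)
        noise = [rng.gauss(0.0, sigma_w) for _ in range(T)]  # sampled in advance
        v, g = value_and_grad(x0, noise, k)
        total_v += v
        total_g += g
    return total_v / n_samples, total_g / n_samples
```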

Comparison with Zero-order Policy Gradient

I claim that this is not a particularly useful improvement over zero-order policy gradients.

- It still suffers from high-variance estimates of the policy gradient.

- (Put another way,) the variance of the policy gradient comes from how the noise at time 0 affects the outcome at time T, and that dependence does not disappear.

Randomized Smoothing of the Value Function

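Consider solving the following problem for a deterministic initial condition $x_0$ and deterministic dynamics, where $V(x_0; \theta)$ is the value function of the policy with parameters $\theta$:

$$\min_\theta \; V(x_0; \theta)$$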

This setting covers the following problems:

- Open-loop policy optimization (i.e. trajectory optimization). The policy is simply parameterized as a sequence of open-loop inputs.

- Closed-loop policy optimization from fixed initial state. (Typical policy optimization deals with expected value of this over multiple initial conditions)

Why might this problem be hard to tackle with typical gradients?

- Non-convexity of the optimization landscape (value function w.r.t. policy parameters).

- Non-smoothness that arises in many typical RL problems.

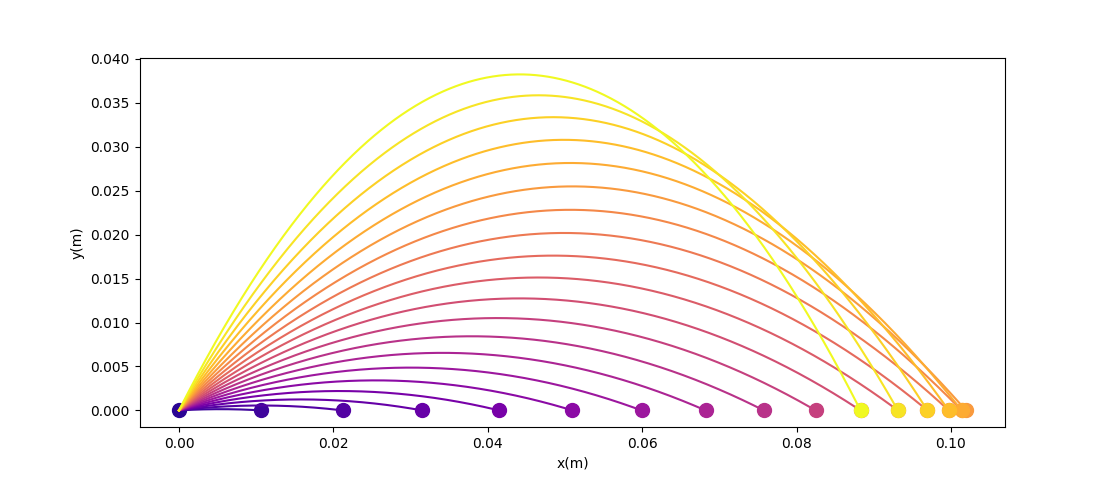

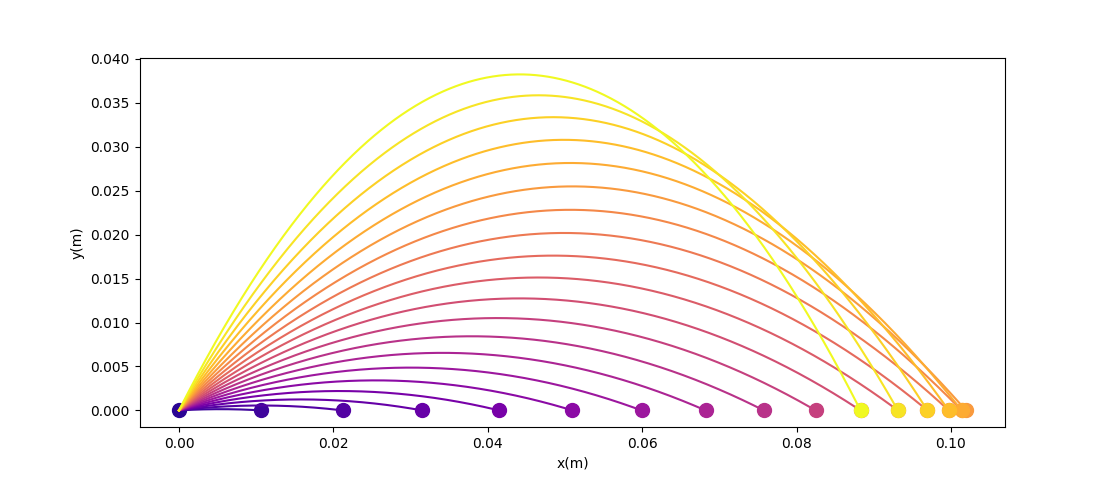

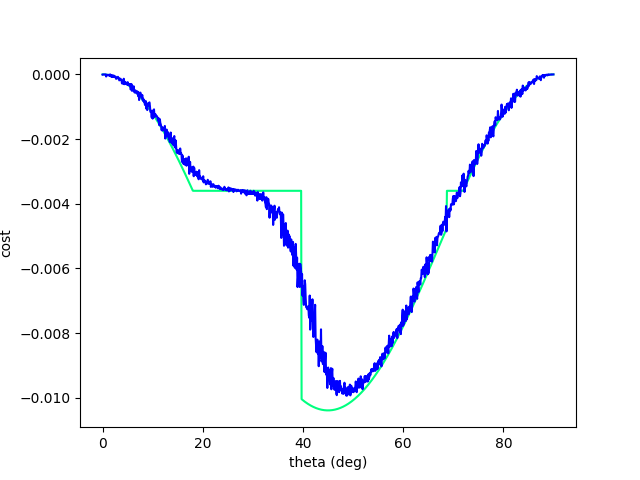

Toy Problem: Throwing Ball against the Wall

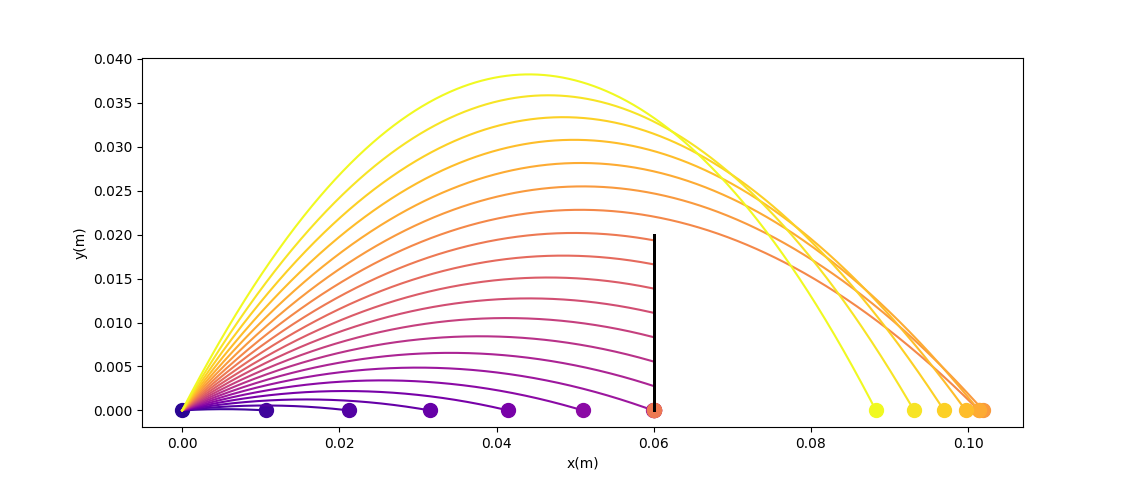

Back to high-school physics: suppose we throw a ball (Ballistic motion) and want to maximize the distance thrown using gradient descent.

Quiz: what is the optimal angle for maximizing the distance thrown?


45 degrees!

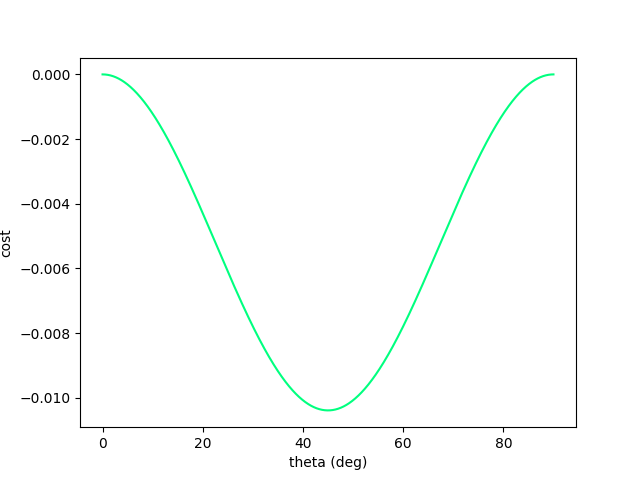

If we plot the objective as a function of the angle, it is a nice gradient-dominant function, and gradient ascent converges to the maximum at 45 degrees.

(Interestingly, this is non-convex. Typical example of one of Jack's PL inequality functions).
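A quick sketch of the no-wall case, assuming launch from ground level at 10 m/s with $g = 9.81$ (illustrative values): the range is $v^2 \sin(2\theta)/g$, and plain gradient ascent on the angle converges to 45 degrees.

```python
import math

G, V0 = 9.81, 10.0  # gravity [m/s^2] and launch speed [m/s]; illustrative values


def distance(theta):
    """Horizontal range of a projectile launched from the ground at angle theta (radians)."""
    return V0 ** 2 * math.sin(2 * theta) / G


def distance_grad(theta):
    """Analytic derivative of the range with respect to the launch angle."""
    return 2 * V0 ** 2 * math.cos(2 * theta) / G


def gradient_ascent(theta=math.radians(20), lr=0.01, iters=200):
    for _ in range(iters):
        theta += lr * distance_grad(theta)
    return theta
```

`math.degrees(gradient_ascent())` comes out at 45 degrees up to floating-point error, and starting above 45 degrees converges to the same point.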


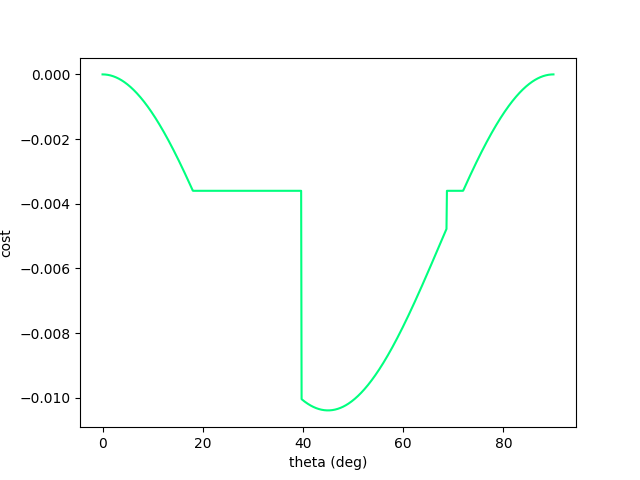

Now we no longer have such nice structure....gradient descent fails.

There is fundamentally no local information to improve once we've hit the wall!

Even though the physical gradients are very well defined, we can no longer numerically obtain the minimum of the function.

Now let's add a wall to make things more interesting. (Assume inelastic collision with the wall - once it hits, falls straight down)
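A sketch of the wall version, with assumed numbers not taken from the slides: 10 m/s launch speed, a hypothetical 3 m tall wall 5 m away, and the inelastic collision modeled as dropping straight down at the wall. Between the angle where the ball first reaches the wall and the angle where it just clears it, the distance is constant, so the finite-difference gradient is exactly zero and gradient ascent stalls on that plateau.

```python
import math

# Illustrative parameters (assumptions, not from the slides).
G, V0 = 9.81, 10.0          # gravity [m/s^2], launch speed [m/s]
WALL_X, WALL_H = 5.0, 3.0   # hypothetical wall distance and height [m]


def distance_with_wall(theta):
    """Landing distance for launch angle theta (radians), launched from the ground.

    If the ball reaches x = WALL_X below the top of the wall, the collision is
    inelastic and the ball drops straight down at the wall.
    """
    free_range = V0 ** 2 * math.sin(2 * theta) / G
    if free_range <= WALL_X:
        return free_range  # lands before the wall
    # Height of the ball when it passes x = WALL_X.
    y_at_wall = WALL_X * math.tan(theta) - G * WALL_X ** 2 / (2 * V0 ** 2 * math.cos(theta) ** 2)
    if y_at_wall <= WALL_H:
        return WALL_X      # hits the wall and falls straight down
    return free_range      # clears the wall


def finite_difference_grad(f, theta, h=1e-6):
    return (f(theta + h) - f(theta - h)) / (2 * h)


def naive_gradient_ascent(theta, lr=0.01, iters=200):
    """Plain gradient ascent using the (locally exact) finite-difference gradient."""
    for _ in range(iters):
        theta += lr * finite_difference_grad(distance_with_wall, theta)
    return theta
```

Starting at 10 degrees, the first step lands on the plateau (the ball hits the wall), the gradient there is zero, and the iterate never moves again, even though steeper angles that clear the wall throw almost twice as far.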

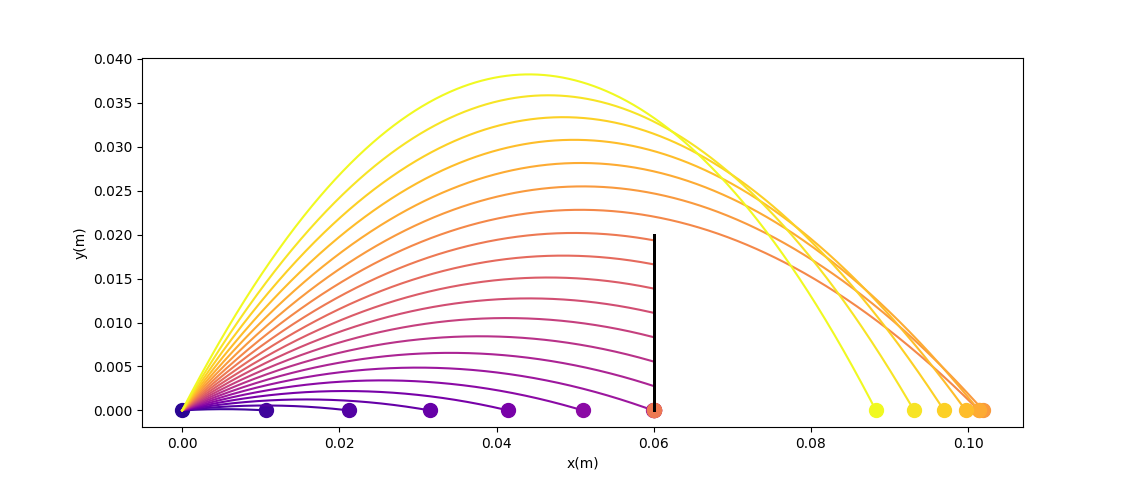

Randomized Smoothing to the Rescue.

To resolve this, consider a stochastic objective by adding noise $w \sim \mathcal{N}(0, \sigma^2)$ to the decision variable (the launch angle $\theta$):

$$\max_\theta \; \mathbb{E}_{w}\big[d(\theta + w)\big]$$

where $d(\theta)$ is the distance thrown. Now we recover gradient dominance, and we know where to go when we've hit the wall!

Note: there might exist an inflection point. But is there zero probability of landing there during gradient descent iterations?

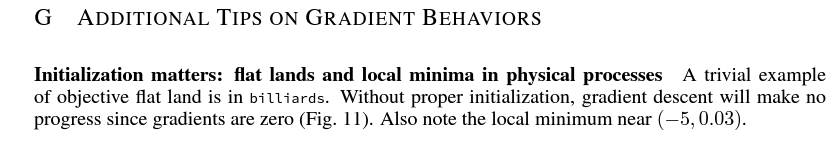
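Under the same kind of assumed wall setup (10 m/s launch, hypothetical 3 m wall at 5 m), the gradient of the smoothed objective can be estimated from function values alone via the Gaussian-smoothing identity $\nabla_\theta \mathbb{E}[d(\theta + w)] = \mathbb{E}[(d(\theta + w) - d(\theta))\, w] / \sigma^2$. This is a sketch, not necessarily the estimator used in the slides: at 45 degrees the exact gradient is zero, but the smoothed estimate is positive, pointing toward steeper angles that clear the wall.

```python
import math
import random

# Illustrative (assumed) setup: launch speed, gravity, wall geometry.
G, V0 = 9.81, 10.0
WALL_X, WALL_H = 5.0, 3.0


def distance_with_wall(theta):
    free_range = V0 ** 2 * math.sin(2 * theta) / G
    if free_range <= WALL_X:
        return free_range  # lands before the wall
    y_at_wall = WALL_X * math.tan(theta) - G * WALL_X ** 2 / (2 * V0 ** 2 * math.cos(theta) ** 2)
    return WALL_X if y_at_wall <= WALL_H else free_range  # blocked by the wall, or clears it


def smoothed_grad_estimate(f, theta, sigma, n=2000, seed=0):
    """Zero-order estimate of d/dtheta E[f(theta + w)], w ~ N(0, sigma^2).

    Uses E[(f(theta + w) - f(theta)) * w] / sigma^2; subtracting f(theta) leaves
    the expectation unchanged (E[w] = 0) but reduces variance.
    """
    rng = random.Random(seed)
    f0 = f(theta)
    total = 0.0
    for _ in range(n):
        w = rng.gauss(0.0, sigma)
        total += (f(theta + w) - f0) * w
    return total / (n * sigma ** 2)
```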

Similar Examples: Lessons from Differentiable Sim.

Excerpt from DiffTaichi, one of the major differentiable simulators.

It is rather surprising that we can tackle this problem with a randomized solution, without:

- manual tuning of initial guesses

- tree / graph search.

(Or rather, it was surprising to me at first that gradients can't tackle this problem: the problem seems pretty easy, and a search direction seems to exist.)

Some Connections

When are randomized policies better than deterministic policies?

The fact that you can obtain better performance with randomization in the presence of discontinuities is not new.

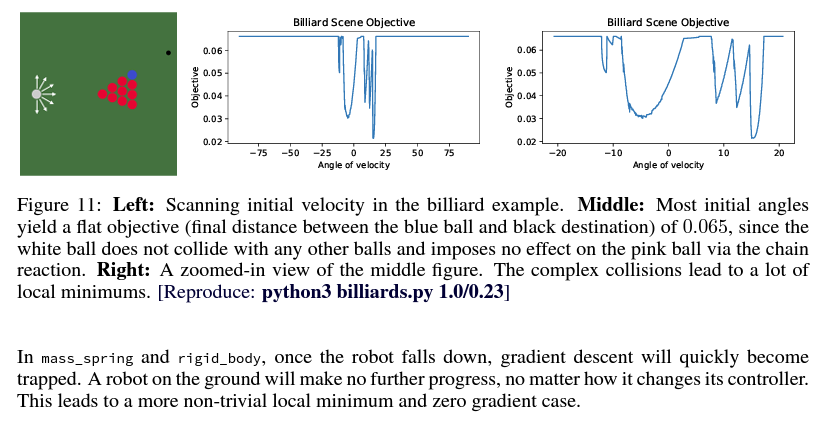

Binary Hypothesis Testing / Statistical Decision Theory (Neyman-Pearson)

(Figure labels: Deterministic Rules; Pareto Frontier.)

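By randomizing, you can achieve better performance under the Neyman-Pearson criterion (maximize the detection probability subject to a bound $\alpha$ on the false-alarm probability):

$$\max_{\delta} \; P_D(\delta) \quad \text{s.t.} \quad P_{FA}(\delta) \le \alpha$$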
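A small exact example of this effect, with made-up distributions: an observation $X \in \{0, 1, 2\}$ under two hypotheses and a false-alarm budget $\alpha = 0.3$. Enumerating every deterministic rejection region gives a best detection probability of 0.6, while a rule that always rejects on $X = 2$ and rejects on $X = 1$ with probability $\gamma$ meets the budget with equality and reaches 0.7.

```python
from fractions import Fraction as F
from itertools import combinations

# Hypothetical discrete observation X in {0, 1, 2} under hypotheses H0 and H1.
P0 = [F(5, 10), F(3, 10), F(2, 10)]  # P(X = x | H0)
P1 = [F(1, 10), F(3, 10), F(6, 10)]  # P(X = x | H1)
ALPHA = F(3, 10)                     # false-alarm budget


def rates(reject_prob):
    """(P_FA, P_D) of a rule that rejects H0 with probability reject_prob[x] on X = x."""
    p_fa = sum(p * r for p, r in zip(P0, reject_prob))
    p_d = sum(p * r for p, r in zip(P1, reject_prob))
    return p_fa, p_d


def best_deterministic():
    """Enumerate all deterministic rejection regions that satisfy the budget."""
    best = F(0)
    for size in range(len(P0) + 1):
        for region in combinations(range(len(P0)), size):
            p_fa, p_d = rates([1 if x in region else 0 for x in range(len(P0))])
            if p_fa <= ALPHA:
                best = max(best, p_d)
    return best


def randomized_rule():
    # Always reject on X = 2 (largest likelihood ratio); on X = 1 reject with
    # probability gamma, chosen so the false-alarm constraint holds with equality.
    gamma = (ALPHA - P0[2]) / P0[1]
    return rates([0, gamma, 1])
```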

Danger of Gradient Strikes Again.

So we know that the stochastic objective is better for a more global search.

The problem is that we no longer have a good way to use the gradient when the objective function is discontinuous: averaging sampled gradients does not give the gradient of the stochastic objective.

Randomized Smoothing Policy Optimization

Note: In "Policy Optimization", we deal with optimization of closed-loop policy parameters.

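Original Problem

$$\min_\theta \; V(x_0; \theta)$$

Surrogate Problem

$$\min_\theta \; \mathbb{E}_{w \sim \mathcal{N}(0, \sigma^2 I)}\big[V(x_0; \theta + w)\big]$$

Here $V(x_0; \theta)$ is the cost of the closed-loop policy with parameters $\theta$ from the initial state $x_0$, and $w$ perturbs the parameters.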

We think the surrogate problem has a nicer landscape compared to the original problem, w.r.t. two criteria:

- "Gradient dominance" of the landscape.

- Flatness / discontinuity of the gradients.

which suggests an algorithm to use the surrogate problem to better solve the original problem.

Zero-Order Policy Update

How do we solve the surrogate problem? Start with zero-order optimization.

Surrogate Problem

1. Sample the perturbations of the policy parameters.

2. Compute a direction of improvement w.r.t. policy parameters.

3. Update policy parameters with the computed direction.

4. Decrease the variance of the injected noise as iterations converge.

Zero-Order Gradient Estimation (SPSA)
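The four steps can be sketched on an assumed ball-and-wall objective (10 m/s launch, hypothetical 3 m wall at 5 m). Note the substitution: the direction in step 2 comes from a Gaussian-smoothing zero-order estimator, a close relative of SPSA but not SPSA itself (SPSA uses symmetric Rademacher perturbations). The step size scaled by $\sigma^2$, the geometric noise decay, and keeping the best iterate are all illustrative choices.

```python
import math
import random

# Illustrative (assumed) wall setup: launch speed, gravity, wall geometry.
G, V0 = 9.81, 10.0
WALL_X, WALL_H = 5.0, 3.0


def distance_with_wall(theta):
    free_range = V0 ** 2 * math.sin(2 * theta) / G
    if free_range <= WALL_X:
        return free_range  # lands before the wall
    y_at_wall = WALL_X * math.tan(theta) - G * WALL_X ** 2 / (2 * V0 ** 2 * math.cos(theta) ** 2)
    return WALL_X if y_at_wall <= WALL_H else free_range  # blocked by the wall, or clears it


def smoothed_ascent(f, theta, sigma, iters=60, n=500, lr=0.5, decay=0.95, seed=0):
    """Zero-order ascent on E[f(theta + w)] with a shrinking noise scale sigma."""
    rng = random.Random(seed)
    best_theta, best_val = theta, f(theta)
    for _ in range(iters):
        f0 = f(theta)
        # 1-2. Sample perturbations and form a Gaussian-smoothing gradient estimate.
        grad = 0.0
        for _ in range(n):
            w = rng.gauss(0.0, sigma)
            grad += (f(theta + w) - f0) * w
        grad /= n * sigma ** 2
        # 3. Update; scaling the step by sigma^2 keeps each move on the order of sigma.
        theta += lr * sigma ** 2 * grad
        # 4. Shrink the injected noise as iterations proceed.
        sigma *= decay
        # Keep the best iterate seen (an extra safeguard, not one of the listed steps).
        val = f(theta)
        if val > best_val:
            best_theta, best_val = theta, val
    return best_theta, best_val
```

Starting from 45 degrees, where plain gradient ascent is stuck against the wall, the iterates move past the angle that clears the wall and the best distance found is close to the wall-free optimum.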

Randomized Smoothing Trajectory Optimization

Note: In "Trajectory Optimization", we deal with optimization of open-loop input sequences starting from a single initial point.

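Original Problem (Single-shooting formulation)

$$\min_{u_{0:T-1}} \; \sum_{t=0}^{T-1} c(x_t, u_t), \qquad x_{t+1} = f(x_t, u_t), \; x_0 \text{ fixed}$$

Surrogate Problem

$$\min_{u_{0:T-1}} \; \mathbb{E}_{w_{0:T-1}}\Big[\sum_{t=0}^{T-1} c(x_t, u_t + w_t)\Big], \qquad x_{t+1} = f(x_t, u_t + w_t), \; x_0 \text{ fixed}$$

where the states are obtained by rolling out the dynamics and $w_t$ is noise injected into the input at step $t$.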

Note: Comparison with Previous Work

expectation over entire trajectory of noises

expectation over noise in a single timestep.

Question: how should we inject noise?

- Introducing noise of the same variance at every timestep leads to extremely high-variance estimates.

- The least variance is obtained when we take a deterministic policy up until the last step and sample only the last action. However, such a solution does not smooth out the objective much.

- Adding noise at the beginning of the trajectory leads to large variance. However, it is most effective in exploring different possibilities.

- Fundamental tension between variance and exploration when optimizing for sequential decisions in open loop.
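A numerical illustration of this tension on a hypothetical unstable scalar system $x_{t+1} = a x_t + u_t$ with $a = 1.2$, zero nominal inputs, and terminal cost $x_T^2$ (all assumptions). Injecting the same noise at $t = 0$ instead of $t = T-1$ multiplies its effect on $x_T$ by $a^{T-1}$, which inflates the variance of the cost; the same amplification is what lets early noise reach more distant outcomes.

```python
import random
import statistics

# Hypothetical open-loop rollout of an unstable scalar system x_{t+1} = A*x_t + u_t,
# with terminal cost x_T^2. Nominal inputs are zero; only the injected noise varies.
A, T, X0, SIGMA = 1.2, 10, 1.0, 0.1


def terminal_cost(inject_at, w):
    """Roll out with noise w added to the input at the single timestep `inject_at`."""
    x = X0
    for t in range(T):
        u = w if t == inject_at else 0.0
        x = A * x + u
    return x * x


def cost_variance(inject_at, n=5000, seed=0):
    rng = random.Random(seed)
    costs = [terminal_cost(inject_at, rng.gauss(0.0, SIGMA)) for _ in range(n)]
    return statistics.variance(costs)
```

With these constants, noise at the first step is amplified by $1.2^9 \approx 5.2$ before reaching $x_T$, so the cost variance is more than an order of magnitude larger than when the same noise is injected at the last step.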

Variance Reduction vs. Exploration

Injecting noise right at the end of the trajectory will lead to a small variance among the distribution of value functions.

However, the capability to explore different solutions is limited to a single timestep.

Injecting noise right at the front of the trajectory will lead to high variance among the distribution of value functions.

However, the method now has a lot of capability to explore. (Think of the ball throwing example)

What is the optimal way to trade off exploration vs. variance of the expected value function?

Perhaps we can reason about this in terms of increasing the width of the noise distribution for the latter part of the trajectories.

Example:

Randomized Smoothing Trajectory Optimization

We would like to use this for a more global exploration of contact modes.

Consider the following toy problem of pushing a box.

(Figure labels: Goal configuration; Initial configuration.)

What we know about the problem:

- Without any smoothing whatsoever, the gradient is zero, so we are unable to find good directions of improvement.

- If we just smooth the dynamics and reason about the "bundled dynamics", cannot
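A one-dimensional caricature of the first point, not the box-pushing setup in the figure: a pusher starting at 0 moves by $u$ and drags a box at 1 along only if it reaches it, and the cost is the squared distance of the box to a goal at 2 (all assumptions). While the pusher stops short of the box, the exact gradient is zero; a Gaussian-smoothing zero-order estimate of the smoothed objective's gradient is negative, i.e. it says to push further.

```python
import random

# Hypothetical 1D pushing toy (assumed, not the slides' box problem): the pusher starts
# at 0 and moves by u; the box at BOX0 only moves if the pusher reaches it.
BOX0, GOAL = 1.0, 2.0


def cost(u):
    pusher = u
    box = max(BOX0, pusher)  # contact only when the pusher reaches the box
    return (box - GOAL) ** 2


def finite_difference_grad(u, h=1e-6):
    return (cost(u + h) - cost(u - h)) / (2 * h)


def smoothed_grad(u, sigma=0.5, n=5000, seed=0):
    """Zero-order estimate of d/du E[cost(u + w)], w ~ N(0, sigma^2)."""
    rng = random.Random(seed)
    c0 = cost(u)
    total = 0.0
    for _ in range(n):
        w = rng.gauss(0.0, sigma)
        total += (cost(u + w) - c0) * w
    return total / (n * sigma ** 2)
```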

Directions:

We have some intuition for how randomized smoothing is going to make things better in policy / trajectory optimization, through simple toy examples.

Our intuition suggests a class of algorithms that can be useful for trajopt / policy search.

Current State of the Work:

Max, Kaiqing, Jack

For which class of dynamical systems can we actually guarantee gradient dominance / quantify suboptimality?

Pang, Mark, Yunzhu

Empirically apply this algorithm, with some hyperparameters, to more "realistic" problems involving contact, and show that it does better than the true gradients.

But by the time you rigorously state the assumptions needed to say something about the guarantees, the dynamics are no longer relevant to real problems of interest.

But we somehow extrapolated our intuition optimistically to say it will work (i.e., achieve uniformly better performance than, say, gradient descent). I'm not sure that empirical results on a few benchmarks say much about the benefits of the method uniformly across problems of interest.

????

Policy Gradients

By Terry Suh
