Searching for

Optimal Per-Coordinate Step-sizes with

Frederik Kunstner, Victor S. Portella, Nick Harvey, and Mark Schmidt

Multidimensional Backtracking

Adaptive algorithms in optimizaton

"Adaptive" step-sizes for each parameter

One formal definition from Online Learning (AdaGrad)

Hypergradient

Adam, RMSProp, RProp

Aprox. 2nd Order Methods

Designed for adversarial and non-smooth optimization

Classical line-search is better in simpler problems

But what does adaptive mean?

Definition of an Optimal Preconditioner

Adaptivity for

smooth

strongly convex

problems

Multidimensional Backtracking

High level Idea

In each iteration

If

If

makes

enough progress

Update \(\mathbf{x}\):

Else

Update \(\mathbf{P}\)

Classical Line Search

Backtracking line search

Armijo condition

Within a factor of 2

on

smooth functions

Diagonal Preconditioner Search

Armijo condition

Generalized

Set of Candidate

Preconditioners

"Too Big"

Volume removed is exponentially small with dimension

Diagonal Preconditioner Search

Armijo condition

Generalized

"Too Big"

Key idea: w.r.t. \(\mathbf{P}\) yields a

Hypergradient

separating hyperplane

Set of Candidate

Preconditioners

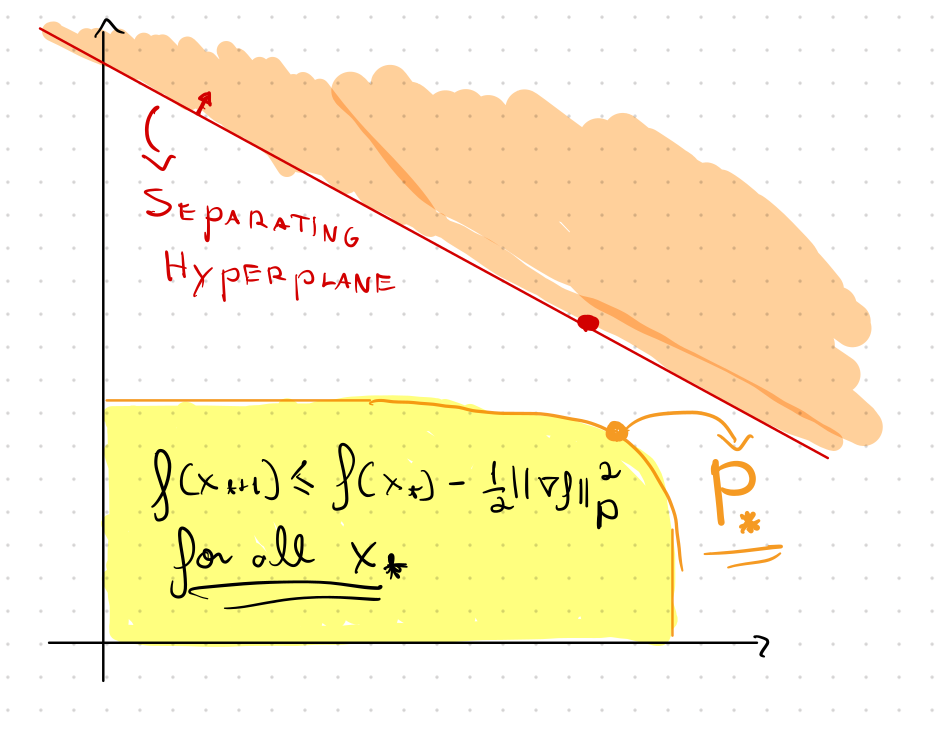

Cutting Planes Methods and Performance

Design efficient cutting plane methods that guarantee

Cutting Planes Methods and Performance

Design efficient cutting plane methods that guarantee

Old Slides to the Right

Definition of an Optimal Preconditioner

Preconditioned Condition Number

Definition of adaptivity for problems

smooth

strongly convex

Preconditioned GD

Condition Number

Diagonal Preconditioner Search

Armijo condition

Generalized

Set of Candidate

Preconditioners

Gradient of

Use of Hypergradients with formal guarantees

Also Fail the Armijo Condition

Almost no overhead by exploiting symmetry

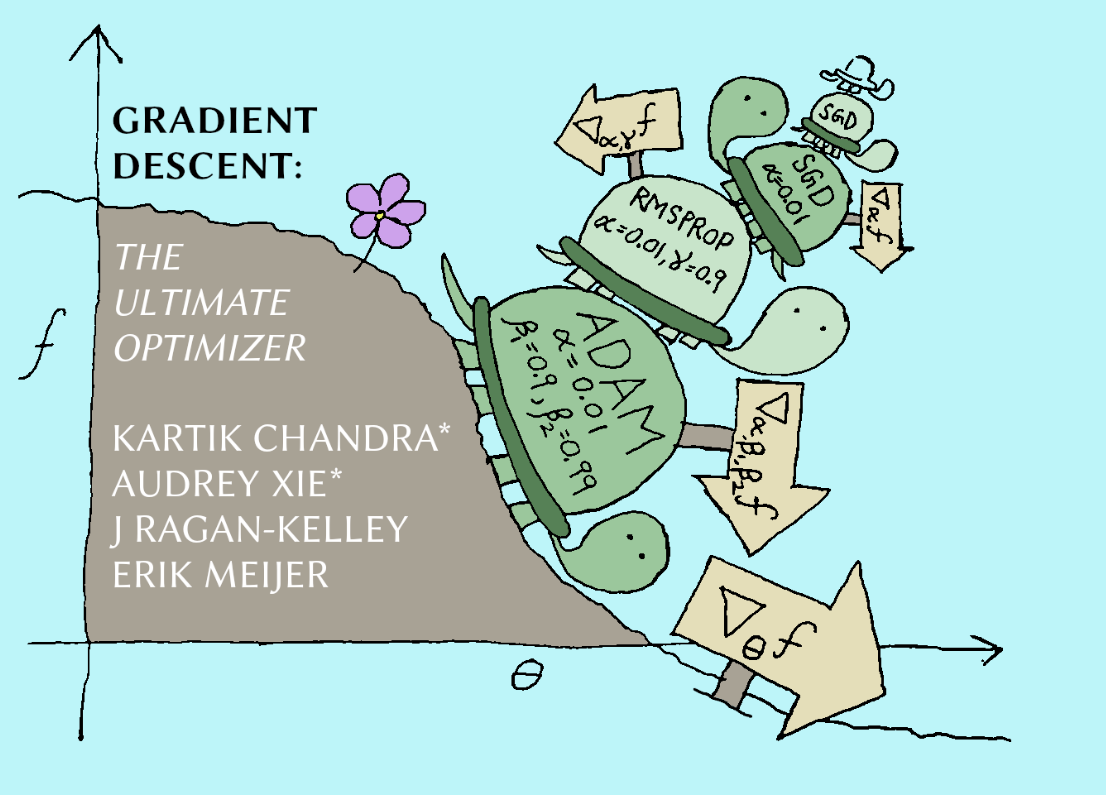

Adaptive First-Order Methods

Finding Good Step-Sizes on the Fly

What if we don't know \(L\)?

Line-search!

"Halve your step-size if too big"

\(\mu\)-strongly convex

\(f\) is

\(L\)-smooth

and

"Easy to optimize"

Gradient Descent

"Adaptive" Methods

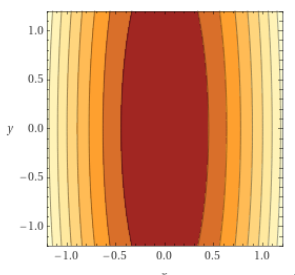

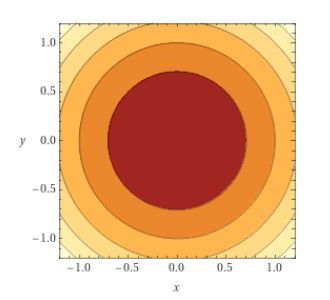

We often can do better if we use a (diagonal) matrix preconditioner

We often can do better if we use a (diagonal)

(Quasi-)Newton Methods

Hypergradient Methods

Hyperparameter tuning as an opt problem

Unstable and no theory/guarantees

Online Learning

Formally adapts to adversarial and changing inputs

Super-linear convergence close to opt

What is a good \(P\)?

May need 2nd-order information.

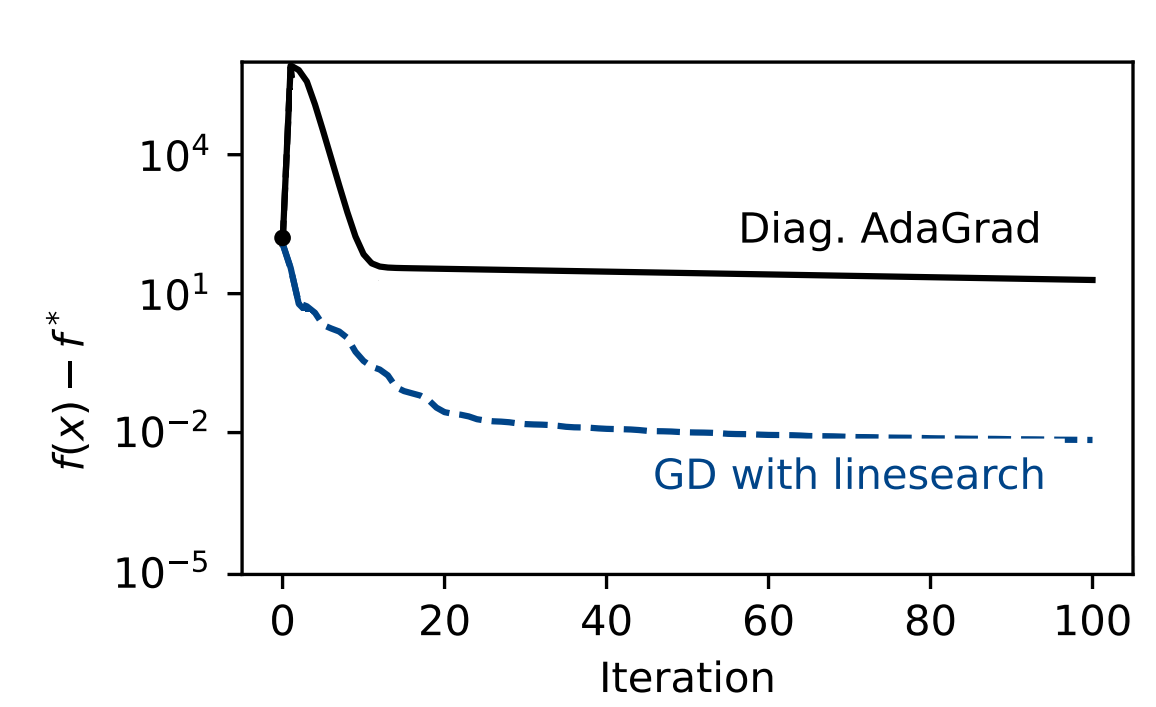

Too conservative in this case (e.g., AdaGrad)

"Fixes" (e.g., Adam) have few guarantees

State of Affairs

"AdaGrad inspired an incredible number of clones, most of them with similar, worse, or no regret guarantees.(...) Nowadays, [adaptive methods] seems to denote any kind of coordinate-wise learning rates that does not guarantee anything in particular."

Orabona, F. (2019). A modern introduction to online learning.

adaptive methods

only guarantee (globally)

In Smooth

and Strongly Convex optimization,

Should be better if there is a good Preconditioner \(P\)

Can we get a line-search analog for diagonal preconditioners?

State of Affairs

"AdaGrad inspired an incredible number of clones, most of them with similar, worse, or no regret guarantees.(...) Nowadays, [adaptive methods] seems to denote any kind of coordinate-wise learning rates that does not guarantee anything in particular."

Orabona, F. (2019). A modern introduction to online learning.

Online Learning

Smooth Optimization

1 step-size

\(d\) step-sizes

(diagonal preconditioner )

Backtracking Line-search

Diagonal AdaGrad

Multidimensional Backtracking

Scalar AdaGrad

(and others)

(and others)

Preconditioner Search

Optimal (Diagonal) Preconditioner

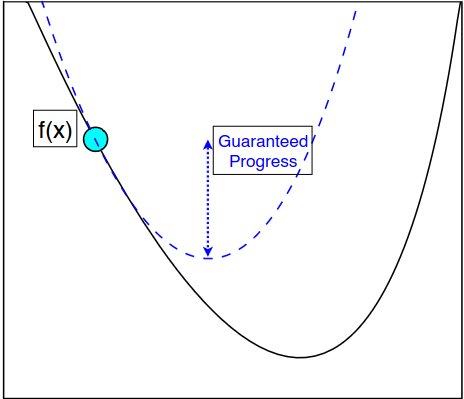

Optimal step-size: biggest that guarantees progress

Optimal preconditioner: biggest (??) that guarantees progress

\(L\)-smooth

\(\mu\)-strongly convex

\(f\) is

and

Optimal Diagonal Preconditioner

\(\kappa_* \leq \kappa\), hopefully \(\kappa_* \ll \kappa\)

Over diagonal matrices

minimizes \(\kappa_*\) such that

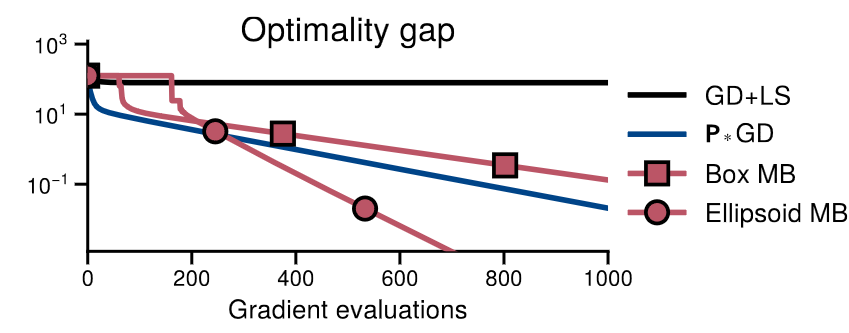

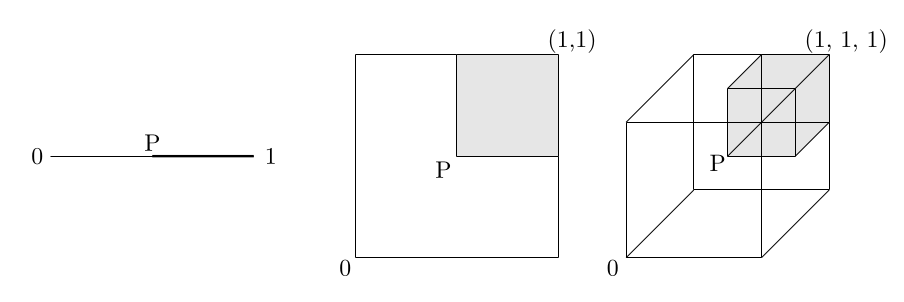

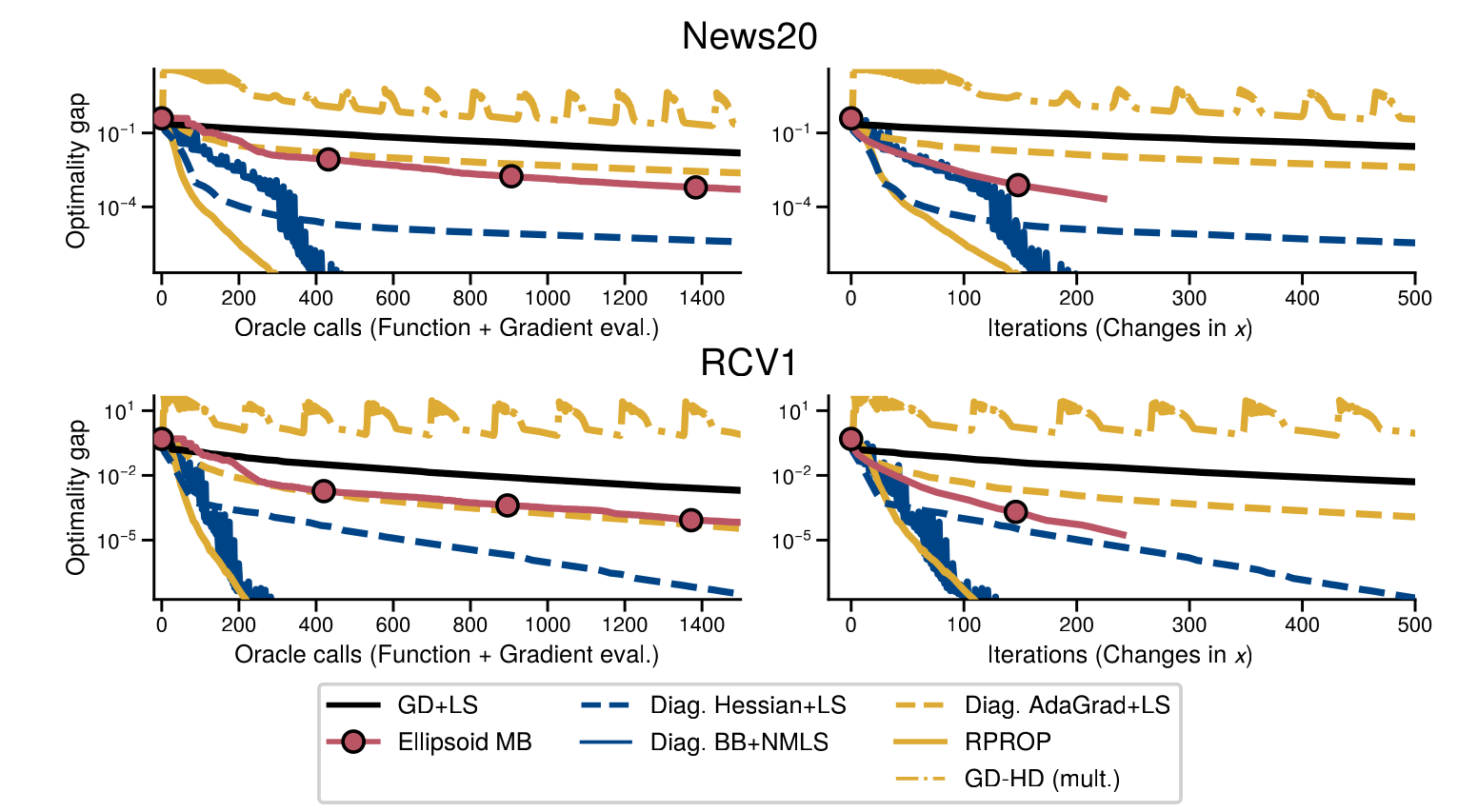

From Line-search to Preconditioner Search

Line-search

Worth it if \(\sqrt{2d} \kappa_* \ll 2 \kappa\)

Multidimensional Backtracking

(our algorithm)

# backtracks \(\lesssim\)

# backtracks \(\leq\)

Multidimensional Backtracking

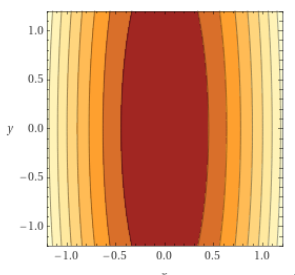

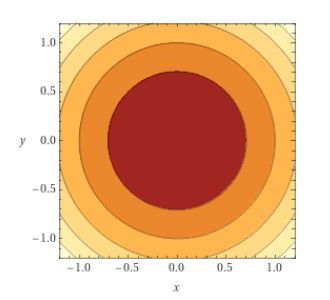

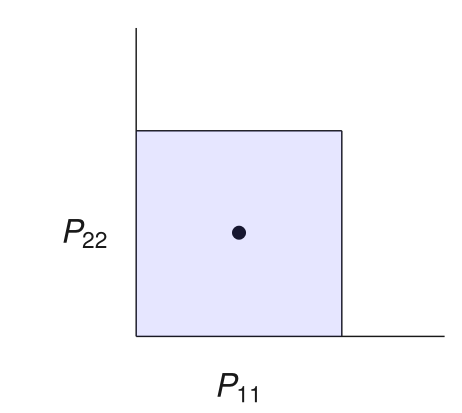

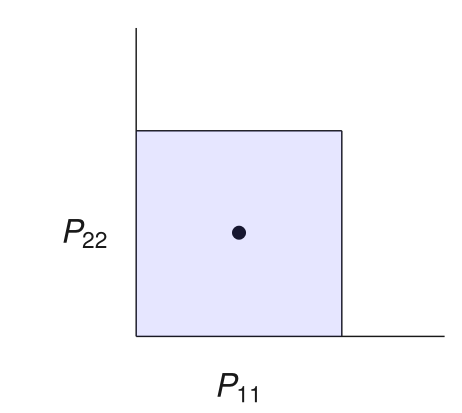

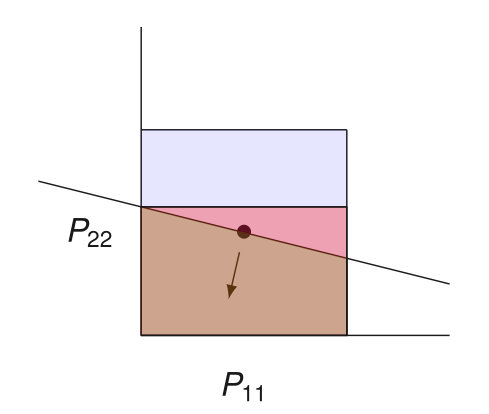

Why Naive Search does not Work

Line-search: test if step-size \(\alpha_{max}/2\) makes enough progress:

Armijo condition

If this fails, cut out everything bigger than \(\alpha_{\max}/2\)

Preconditioner search:

Test if preconditioner \(P\) makes enough progress:

Candidate preconditioners: diagonals in a box/ellipsoid

If this fails, cut out everything bigger than \(P\)

Why Naive Search does not Work

Line-search:

"Progress if \(f\) were

\(\frac{2}{\alpha_{\max}}\)-smooth"

If this fails, cut out everything bigger than \(\alpha_{\max}/2\)

Test if step-size \(\alpha_{\max}/2\) makes enough progress:

Candidate step-sizes: interval \([0, \alpha_{\max}]\)

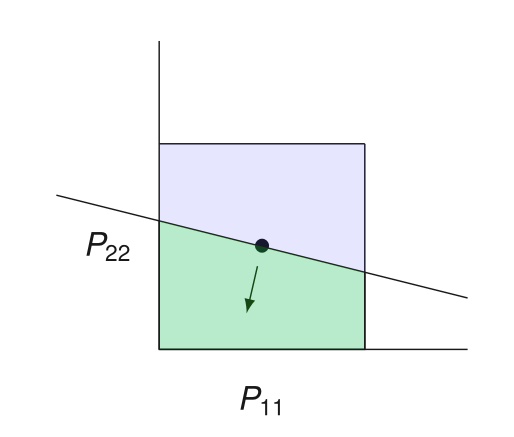

Why Naive Search does not Work

Preconditioner search:

Test if preconditioner \(P\) makes enough progress:

Candidate preconditioners: diagonals in a box/ellipsoid

If this fails, cut out everything bigger than \(P\)

"Progress if \(f\) were

\(P\)-smooth"

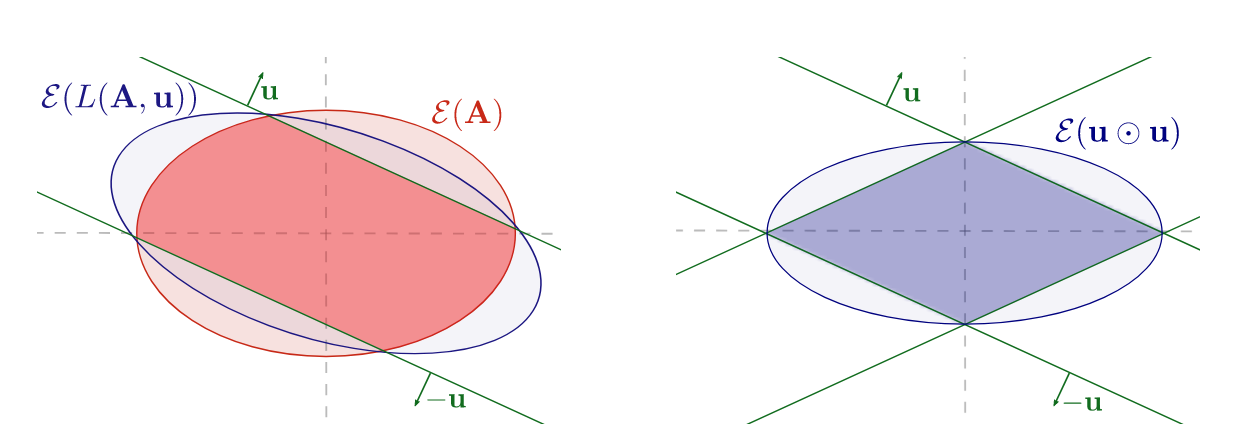

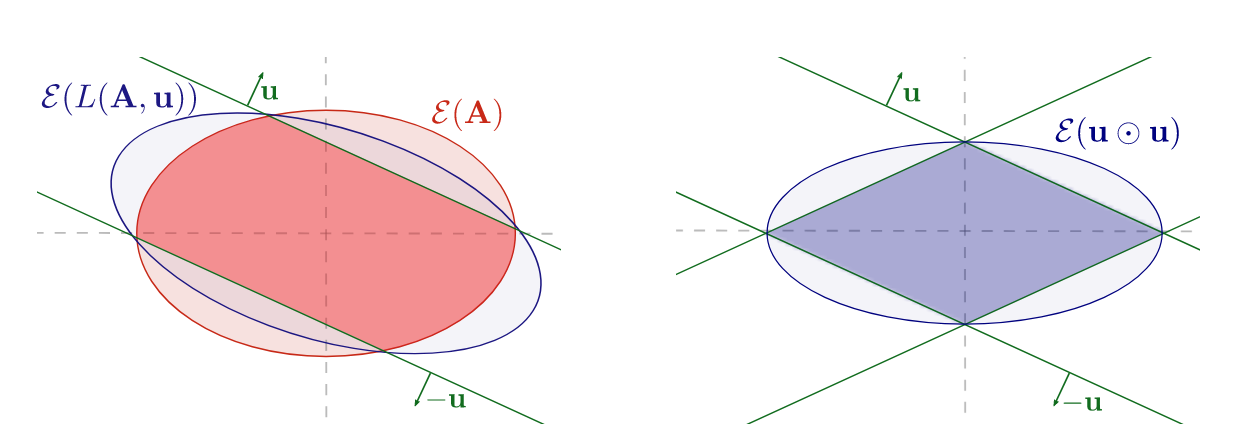

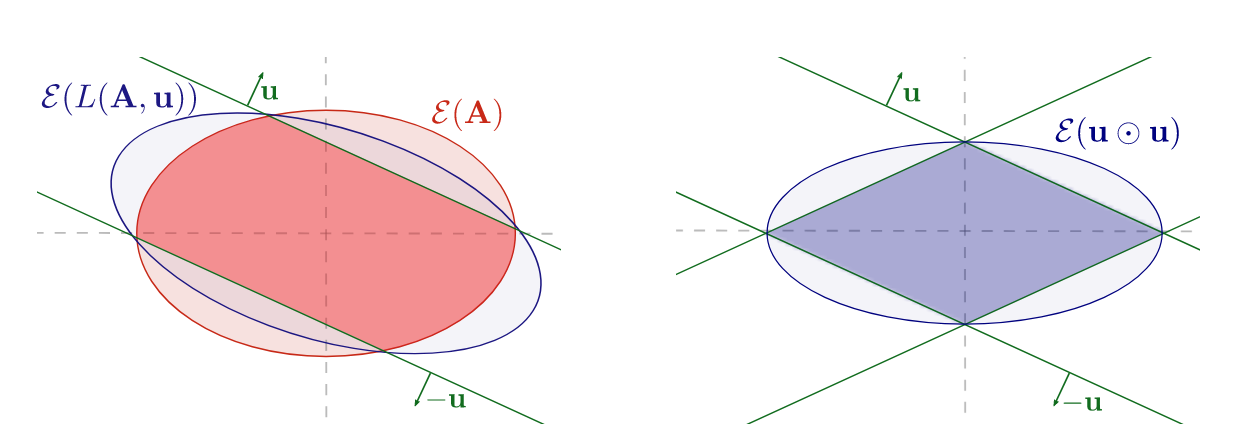

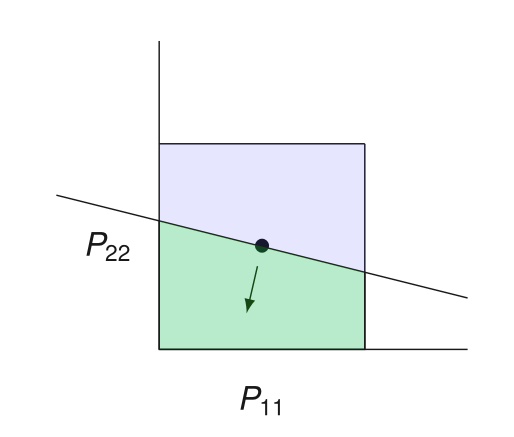

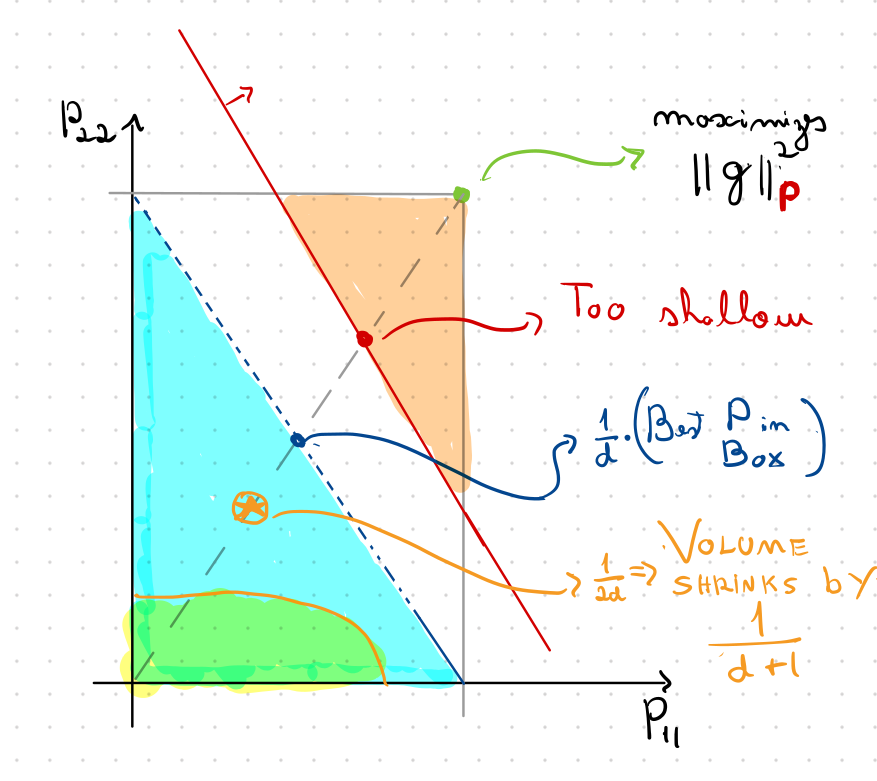

Convexity to the Rescue

\(P\) does not yield sufficient progress

Which preconditioners can be thrown out?

\(P \) yields sufficient progress \(\iff\) \(h(P) \leq 0\)

Convexity \(\implies\)

induces a separating hyperplane!

"Hypergradient"

Main technical Idea

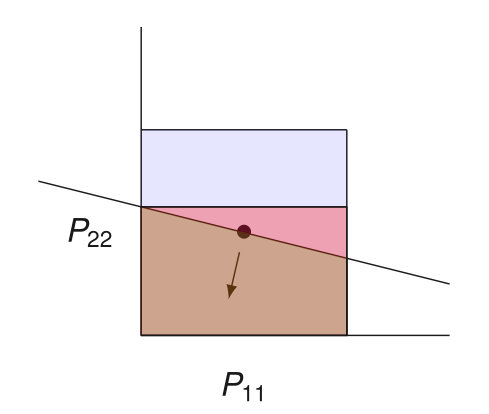

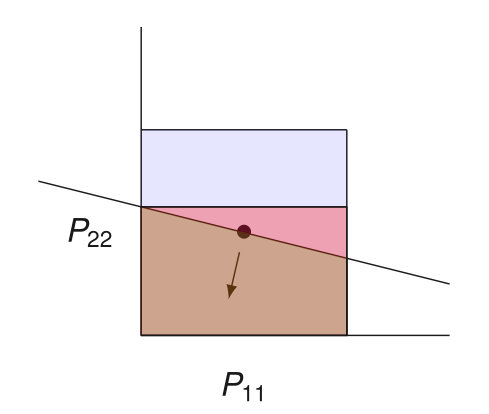

Boxes vs Ellipsoids

Box case: query point needs to be too close to the origin

Volume decrease \(\implies\) query points close to the origin

Good convergence rate \(\implies\) query point close to the boundary

Ellipsoid method might be better.

\(\Omega(d^3)\) time per iteration

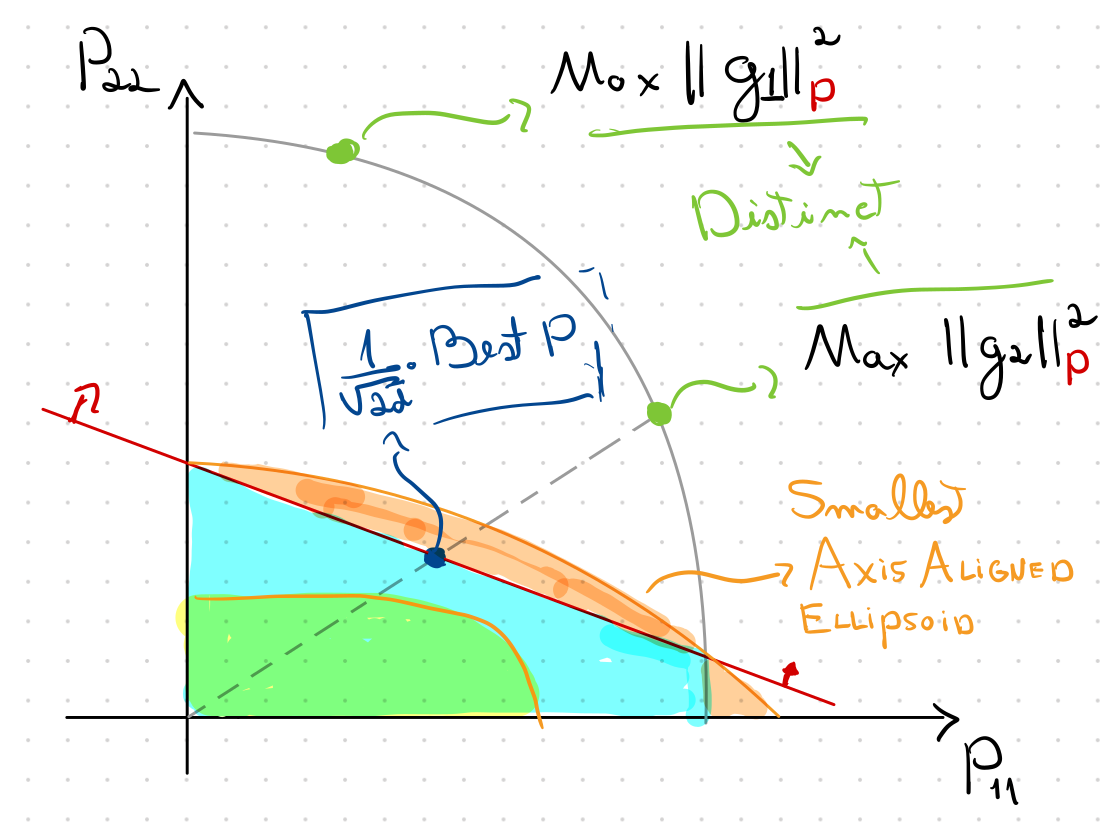

Ellipsoid Method with Symmetry

We want to use the Ellipsoid method as our cutting plane method

\(\Omega(d^3)\) time per iteration

We can exploit symmetry!

\(O(d)\) time per iteration

Constant volume decrease on each CUT

Query point \(1/\sqrt{2d}\) away from boundary

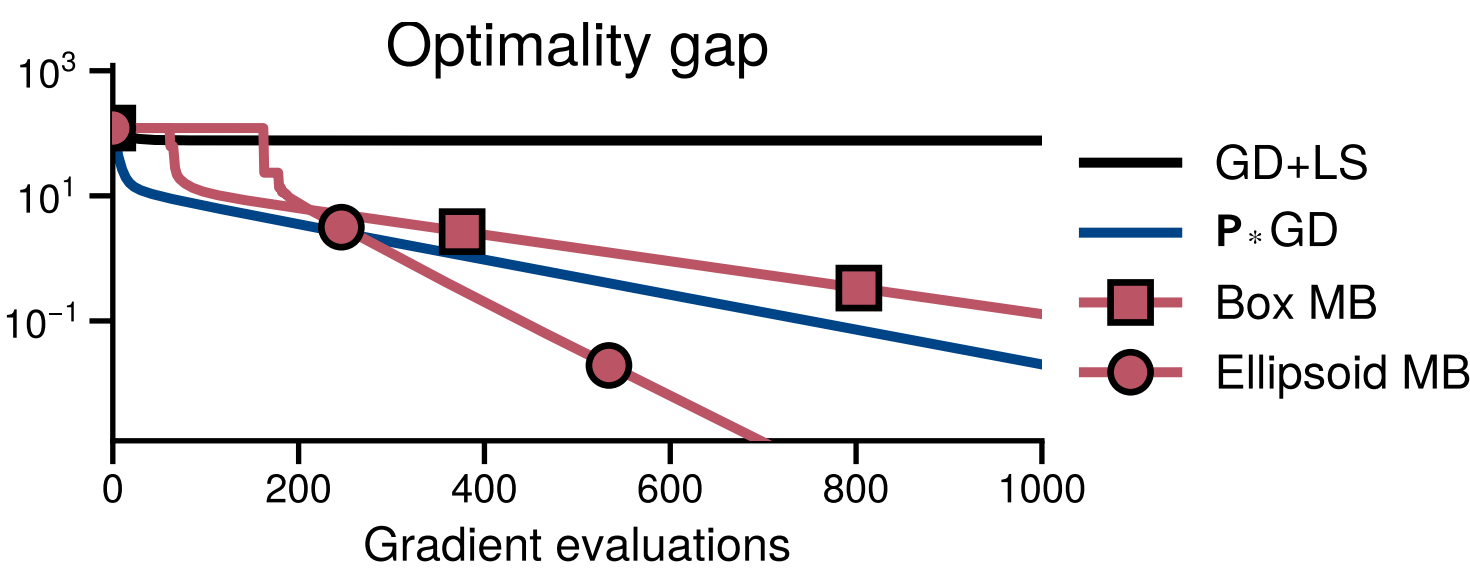

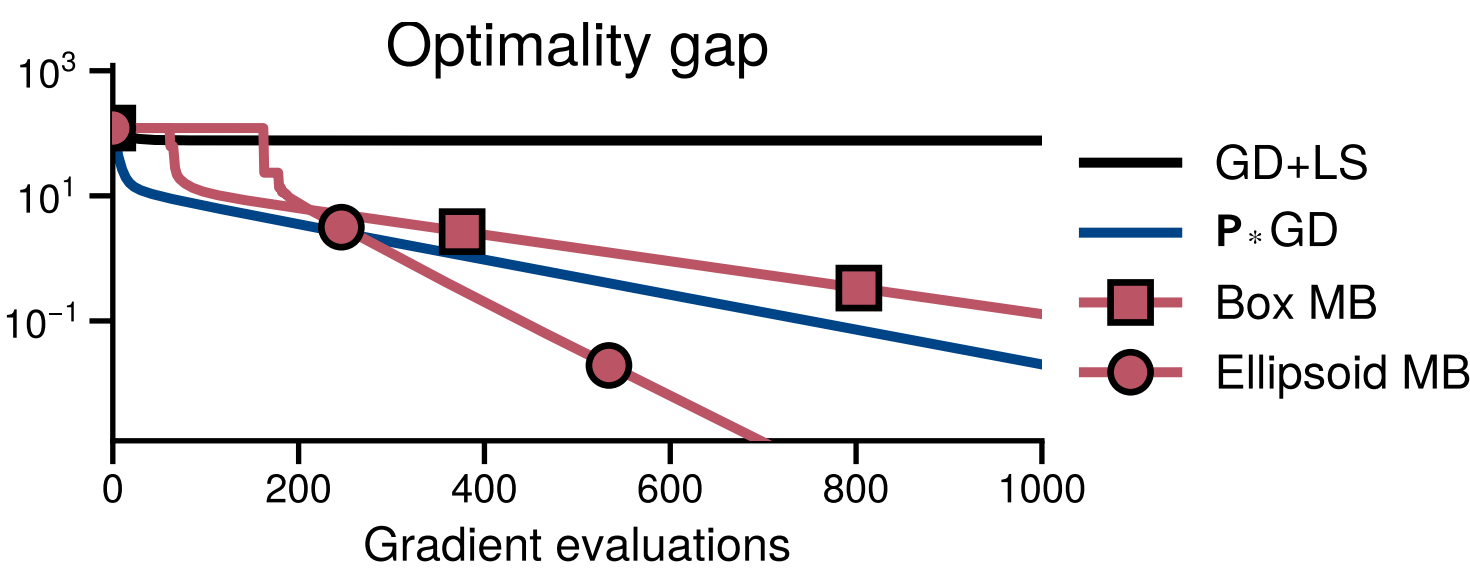

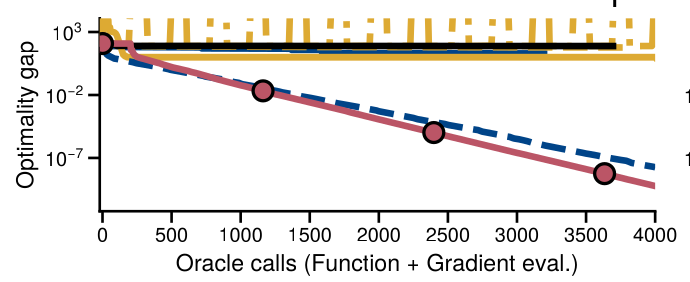

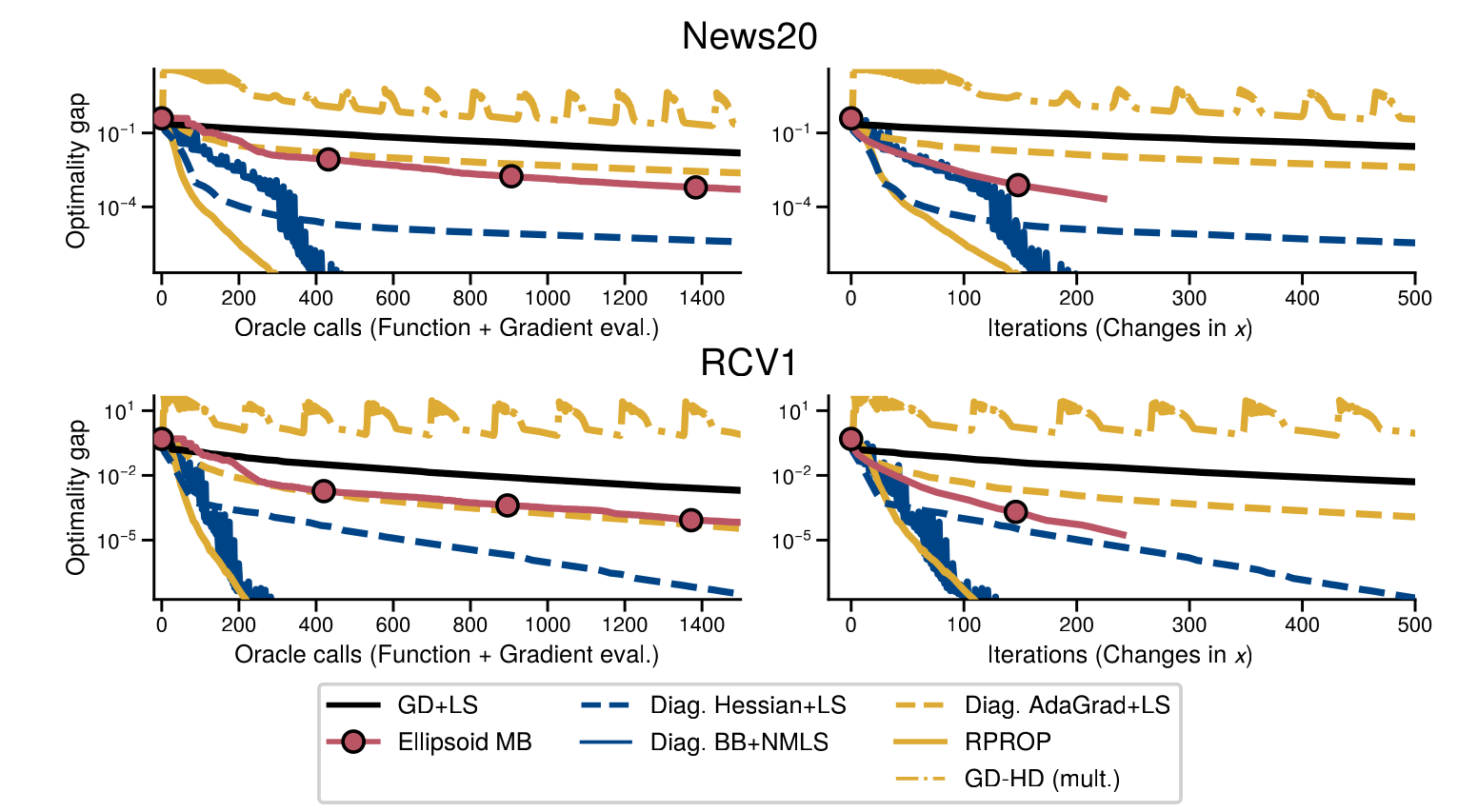

Experiments

Experiments

Conclusions

Theoretically principled adaptive optimization method for smooth strongly convex optimization

A theoretically-informed use of "hypergradients"

ML Optimization meets Cutting Plane methods

Thanks!

arxiv.org/abs/2306.02527

Additional Slides

Box as Feasible Sets

How Deep to Query?

Ellipsoid Method to the Rescue

Convexity to the Rescue

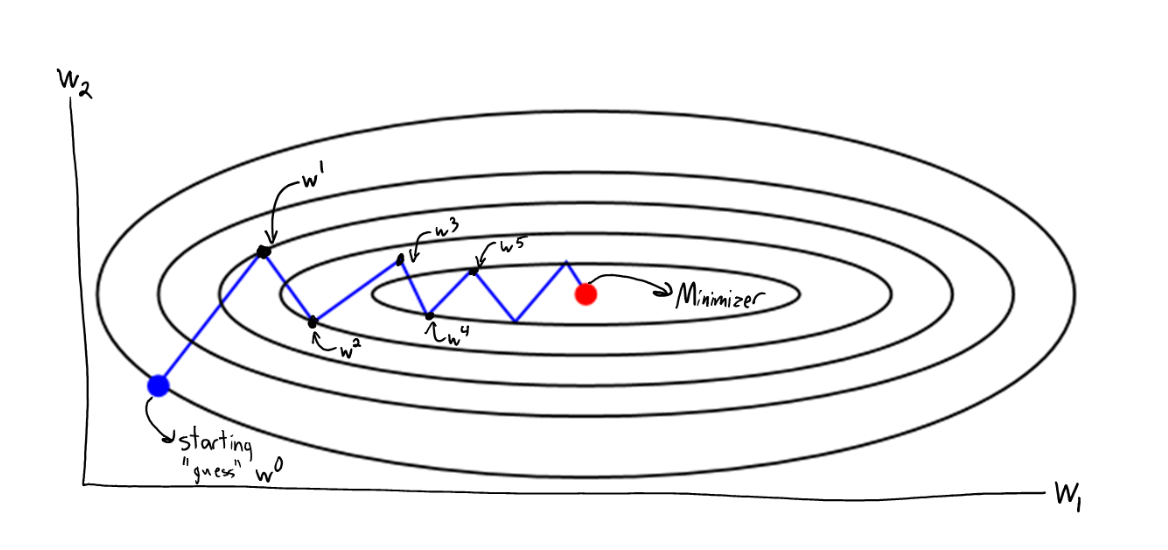

Gradient Descent and Line Search

Why first-order optimization?

Training/Fitting a ML model is often cast a (uncontrained) optimization problem

Usually in ML, models tend to be BIG

\(d\) is BIG

Running time and space \(O(d)\) is usually the most we can afford

First-order (i.e., gradient based) methods fit the bill

(stochastic even more so)

Usually \(O(d)\) time and space per iteration

Convex Optimization Setting

\(f\) is convex

Not the case with Neural Networks

Still quite useful in theory and practice

More conditions on \(f\) for rates of convergence

\(L\)-smooth

\(\mu\)-strongly convex

Gradient Descent

Which step-size \(\alpha\) should we pick?

Condition number

\(\kappa\) Big \(\implies\) hard function

What Step-Size to Pick?

If we know \(L\), picking \(1/L\) always works

and is worst-case optimal

What if we do not know \(L\)?

Locally flat \(\implies\) we can pick bigger step-sizes

If \(f\) is \(L\) smooth, we have

"Descent Lemma"

Idea: Pick \(\eta\) big and see if the "descent condition" holds

(Locally \(1/\eta\)-smooth)

Beyond Line-Search?

Converges in 1 step

\(P\)

\(O(d)\) space and time \(\implies\) \(P\) diagonal (or sparse)

Can we find a good \(P\) automatically?

"Adapt to \(f\)"

Preconditioer \(P\)

"Adaptive" Optimization Methods

Adaptive and Parameter-Free Methods

Preconditioner at round \(t\)

AdaGrad from Online Learning

or

Better guarantees if functions are easy

while preserving optimal worst-case guarantees in Online Learning

Attains linear rate in classical convex opt (proved later)

But... Online Learning is too adversarial, AdaGrad is "conservative"

In OL, functions change every iteration adversarially

"Fixing" AdaGrad

But... Online Learning is too adversarial, AdaGrad is "conservative"

"Fixes": Adam, RMSProp, and other workarounds

"AdaGrad inspired an incredible number of clones, most of them with similar, worse, or no regret guarantees.(...) Nowadays, [adaptive] seems to denote any kind of coordinate-wise learning rates that does not guarantee anything in particular."

Francesco Orabona in "A Modern Introduction to Online Learning", Sec. 4.3

Hypergradient Methods

Idea: look at step-size/preconditioner choice as an optimization problem

Gradient descent on the hyperparameters

How to pick the step-size of this? Well...

Little/ No theory

Unpredictable

... and popular?!

Second-order Methods

Newton's method

is usually a great preconditioner

Superlinear convergence

...when \(\lVert x_t - x_*\rVert\) small

Newton may diverge otherwise

Using step-size with Newton and QN method ensures convergence away from \(x_*\)

Worse than GD

\(\nabla^2 f(x)\) is usually expensive to compute

should also help

Quasi-Newton Methods, e.g. BFGS

State of Affairs

(Quasi-)Newton: needs Hessian, can be slower than GD

Hypergradient methods: purely heuristic, unstable

Online Learning Algorithms: Good but pessimistic theory

at least for smooth optimization it seems pessimistic...

Online Learning

Smooth Optimization

1 step-size

\(d\) step-sizes

(diagonal preconditioner )

Backtracking Line-search

Diagonal AdaGrad

Coordinate-wise

Coin Betting

(non-smooth opt?)

Multidimensional Backtracking

Scalar AdaGrad

Coin-Betting

What does it mean for a method to be adaptive?

Preconditioner Search - NeurIPS 5 min

By Victor Sanches Portella

Preconditioner Search - NeurIPS 5 min

- 355