Yofre H. Garcia

Saúl Diaz-Infante Velasco

Jesús Adolfo Minjárez Sosa

sauldiazinfante@gmail.com

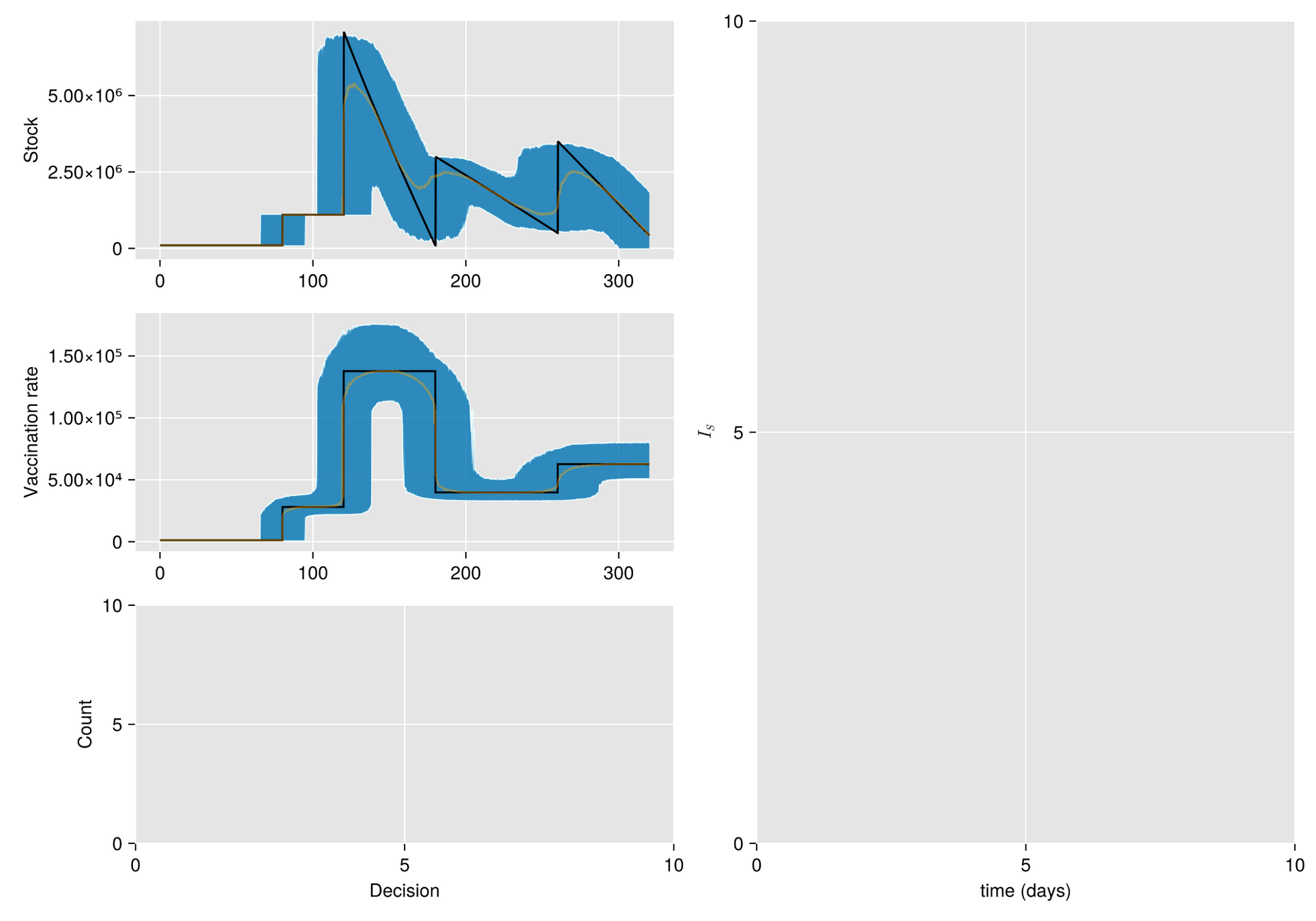

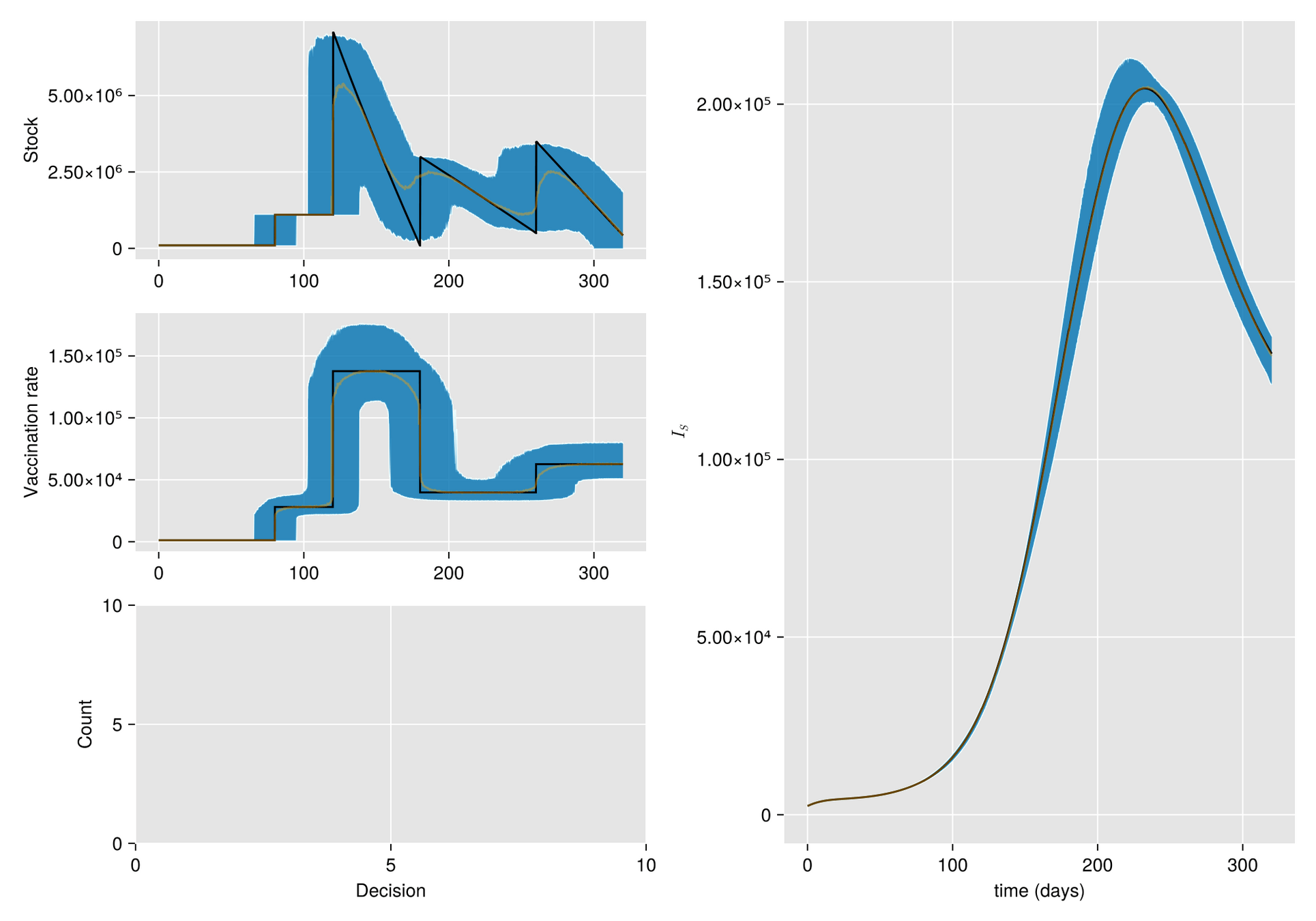

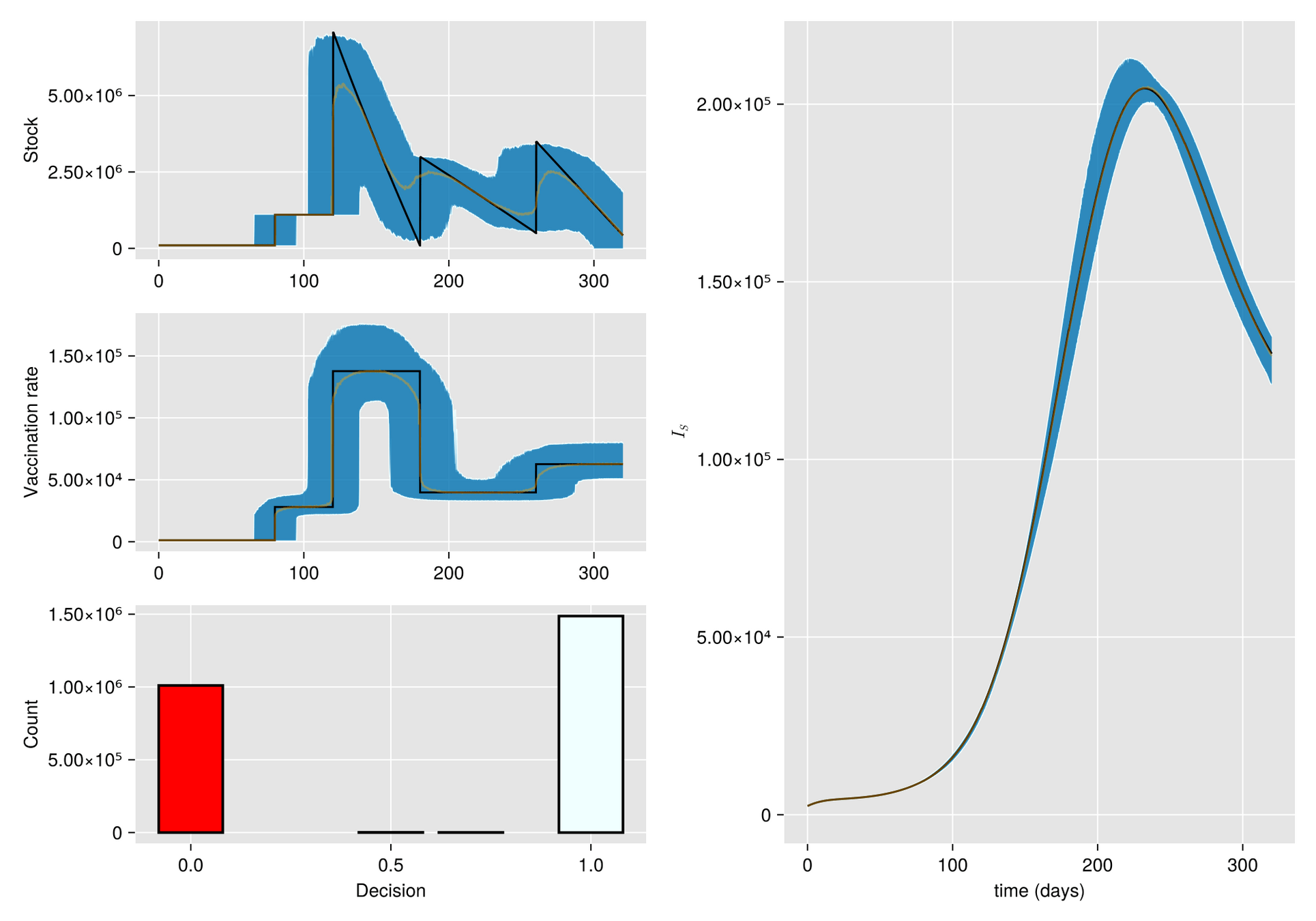

Argument. When there is a shortage of vaccines, sometimes the best response is to refrain from vaccination, at least for a while.

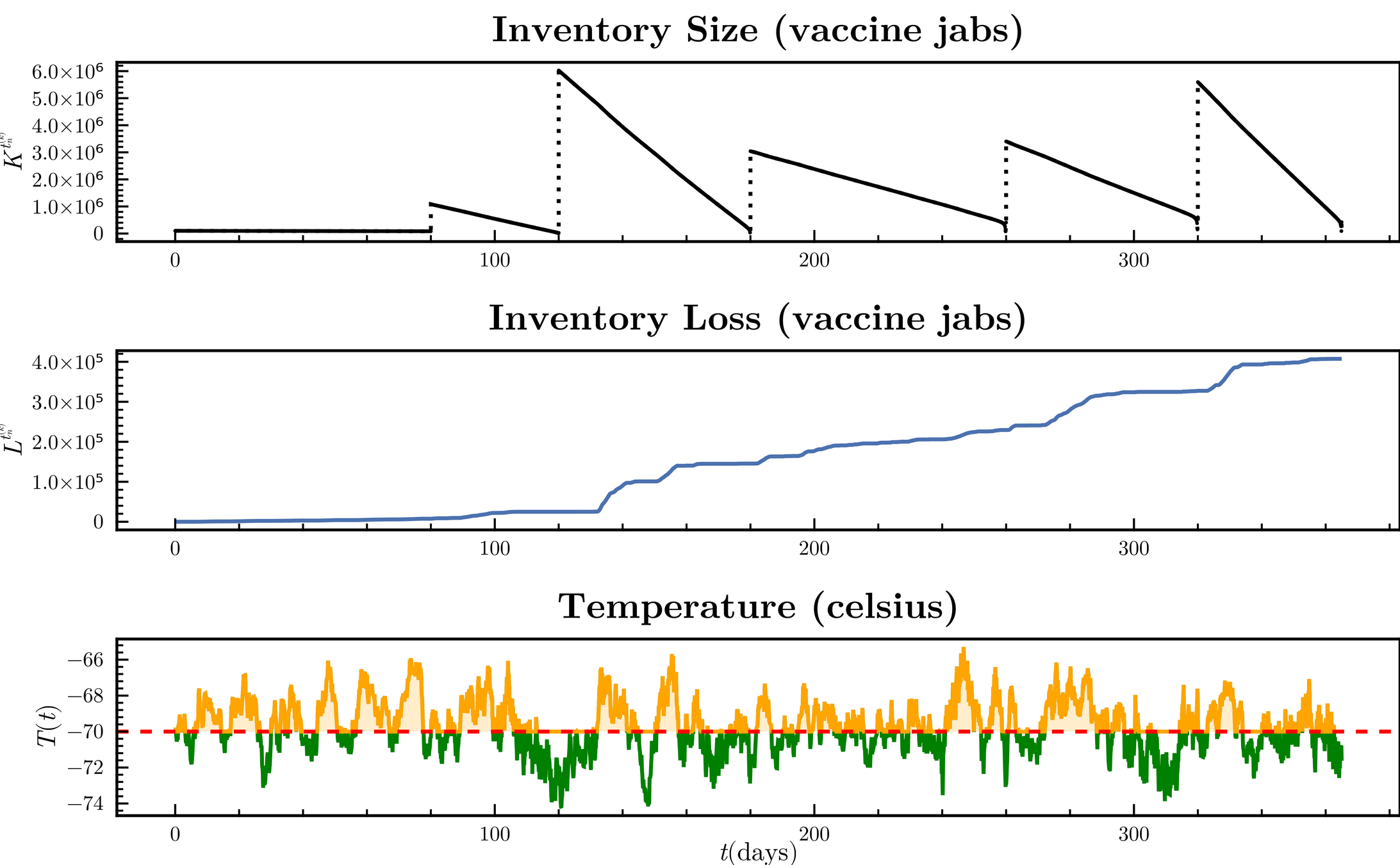

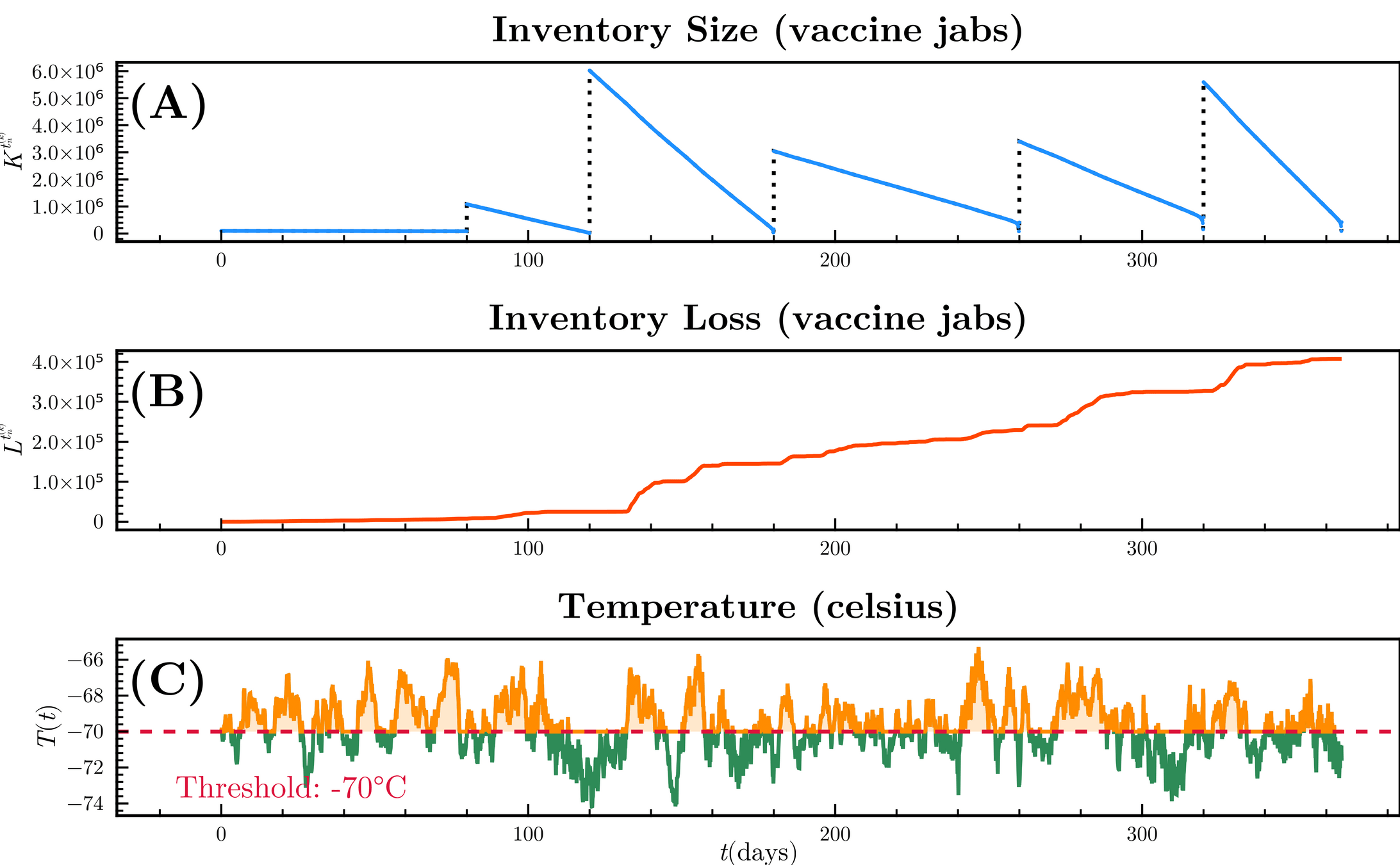

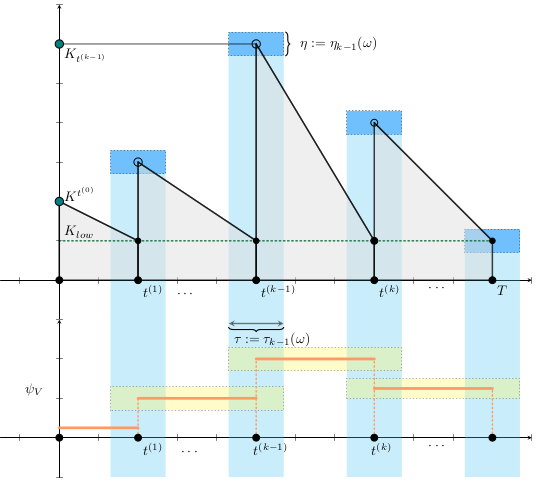

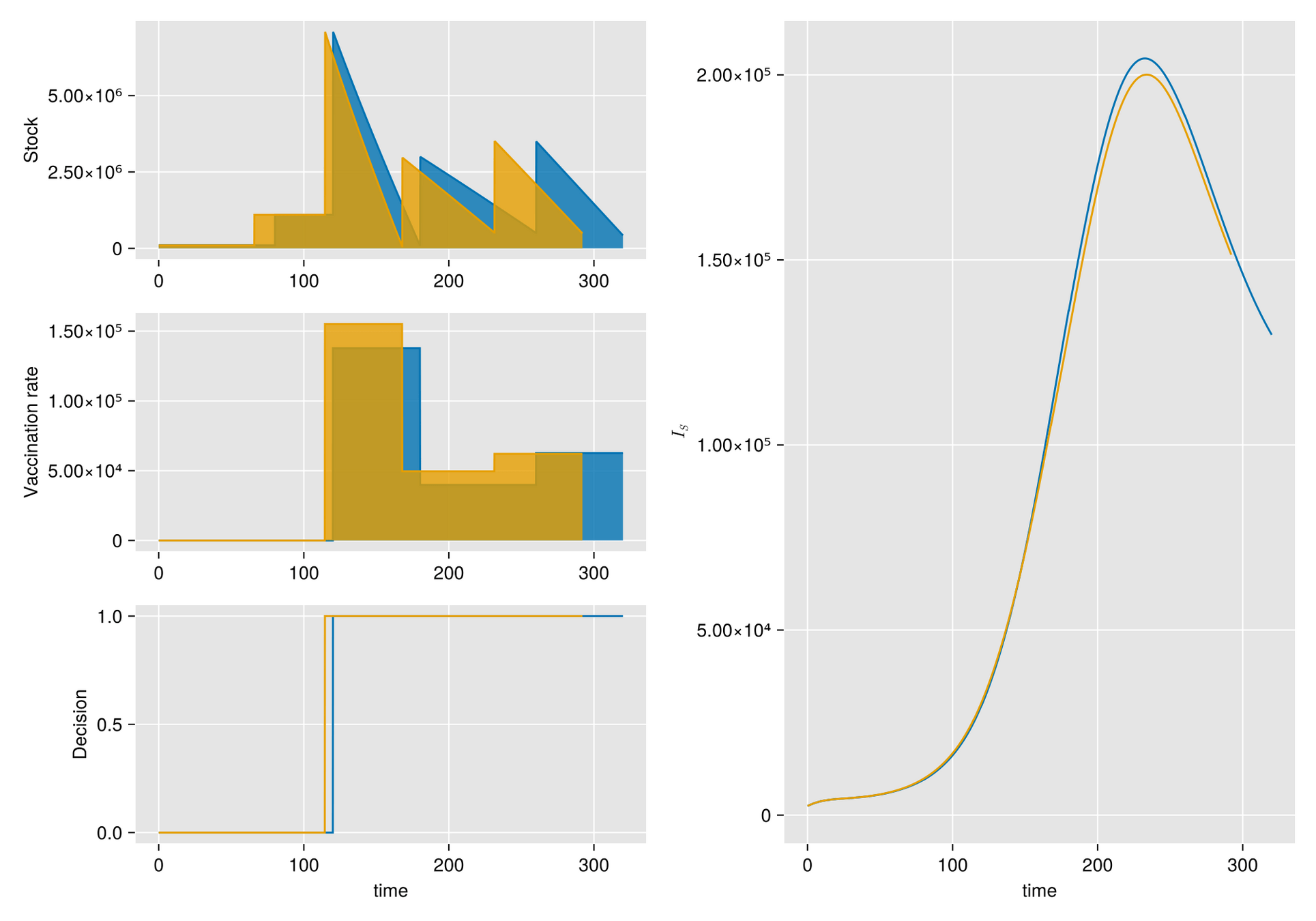

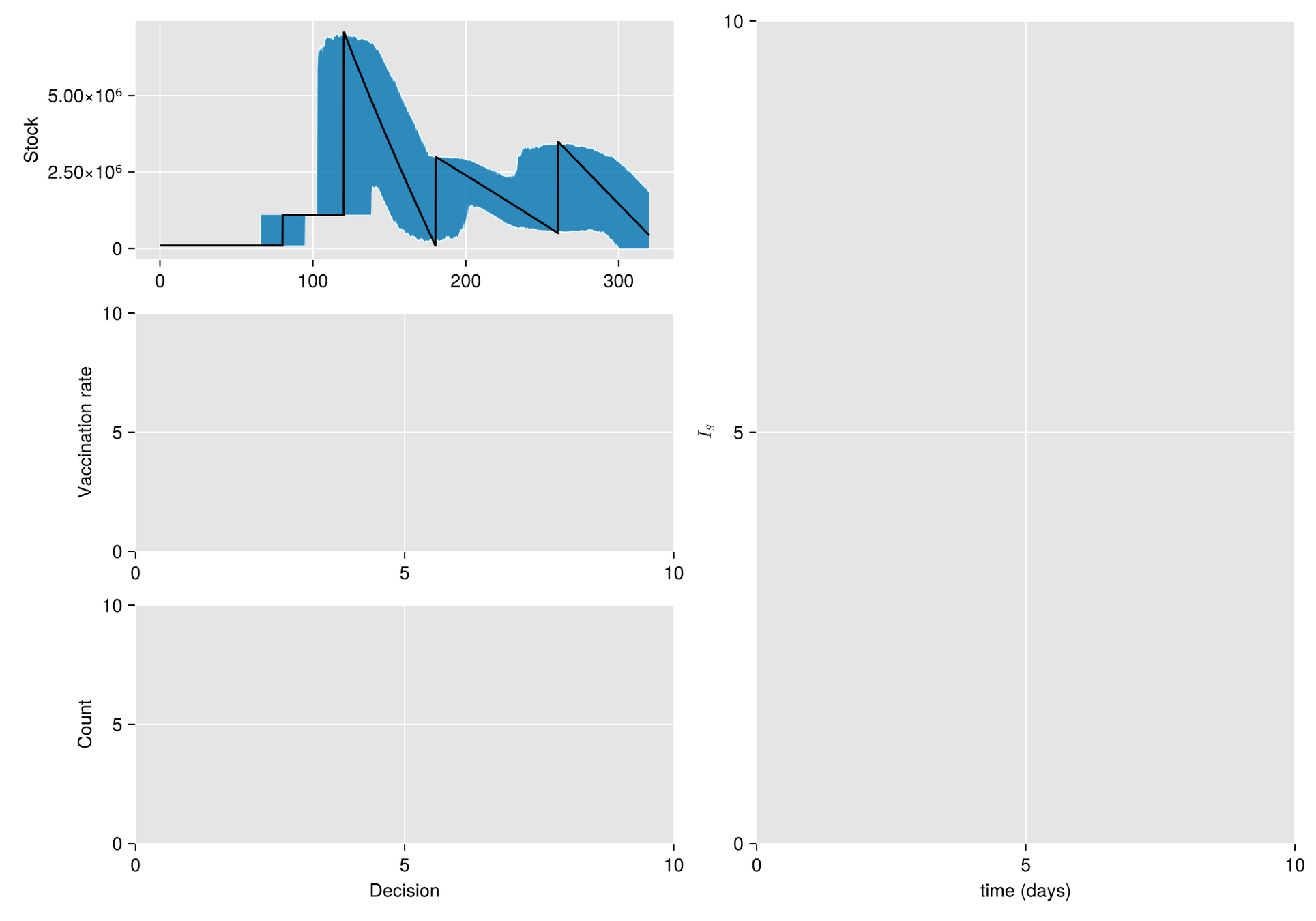

Hypothesis. Under these conditions, inventory management suffers significant random fluctuations

Objective. Optimize the management of vaccine inventory and its effect on a vaccination campaign

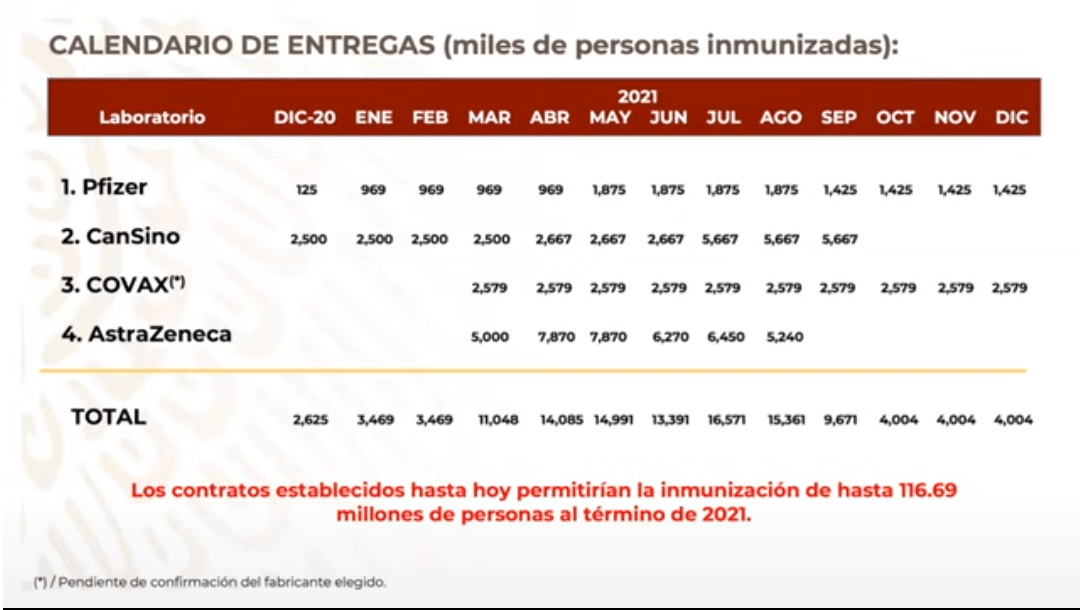

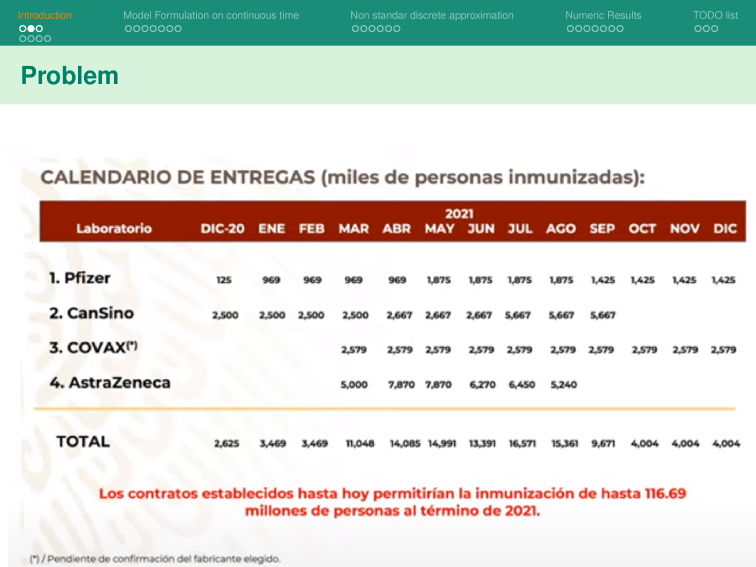

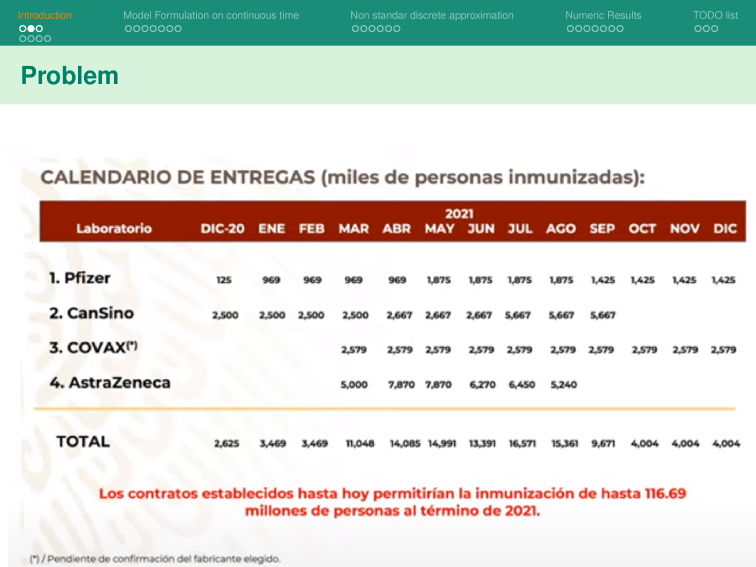

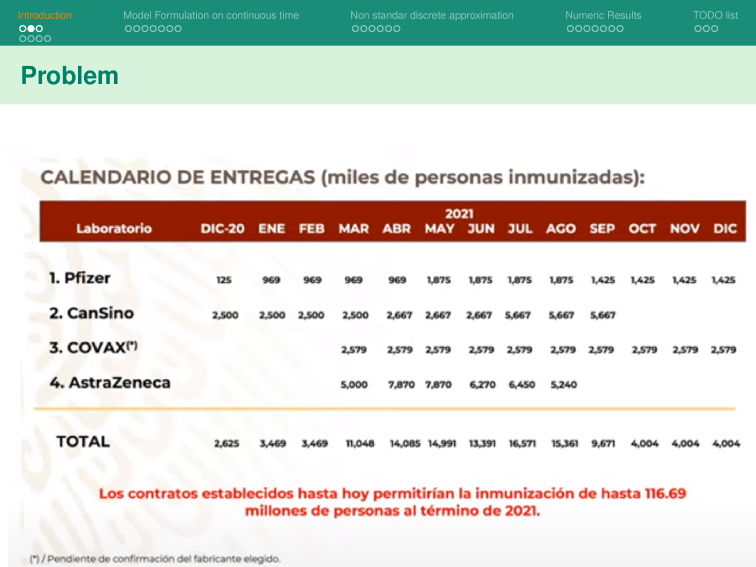

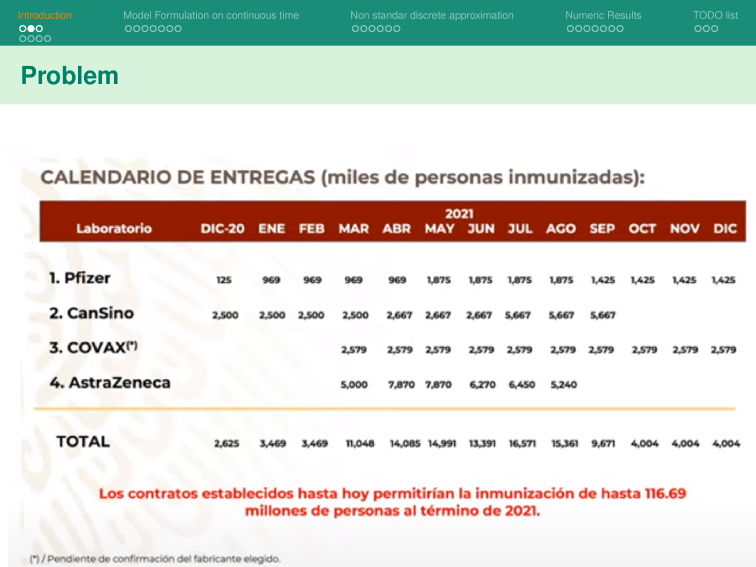

On October 13 2020, the Mexican government announced a vaccine delivery plan from Pfizer-BioNTech and other companies as part of the COVID-19 vaccination campaign.

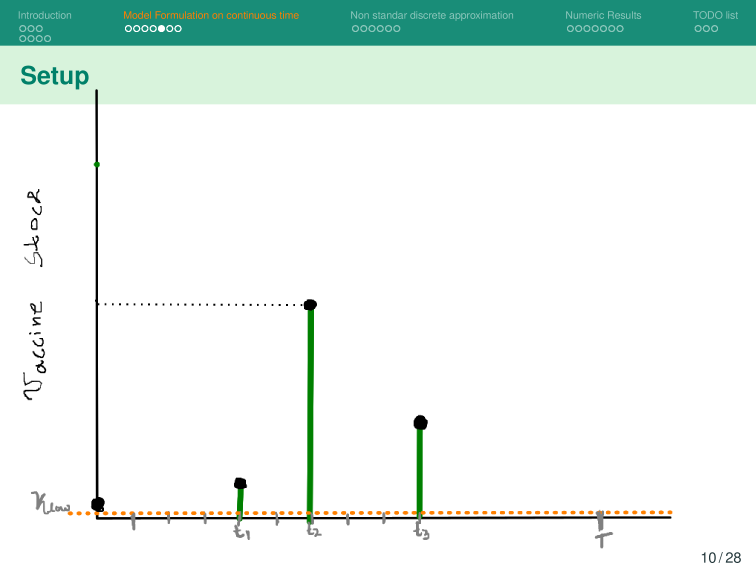

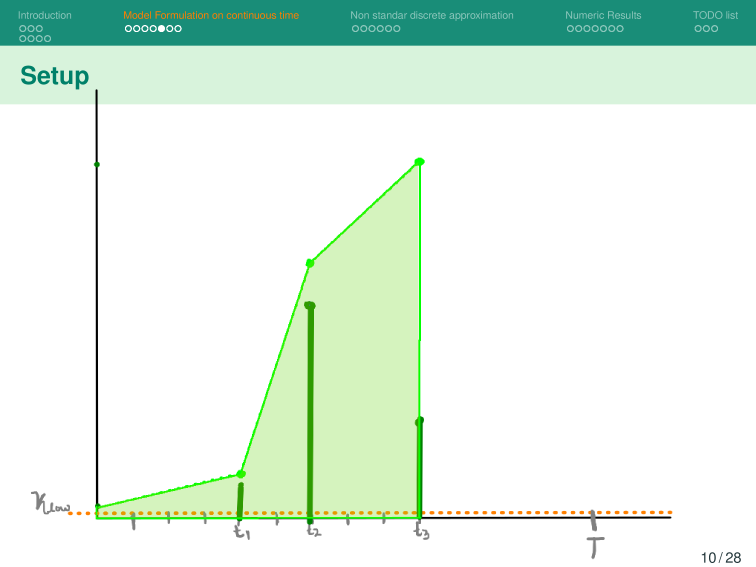

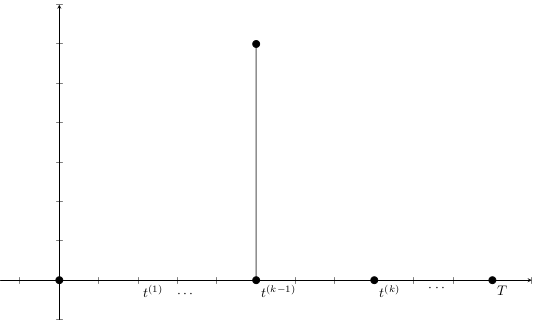

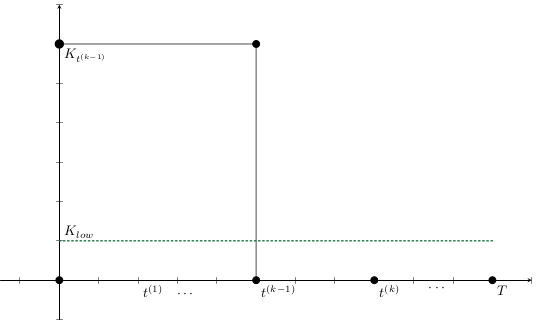

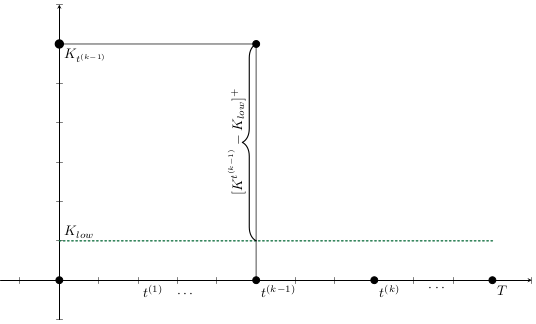

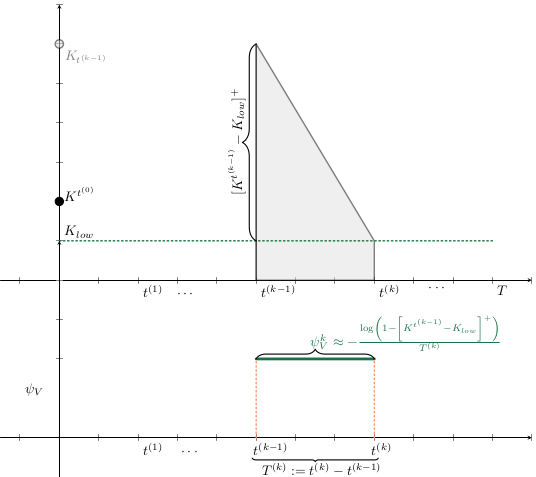

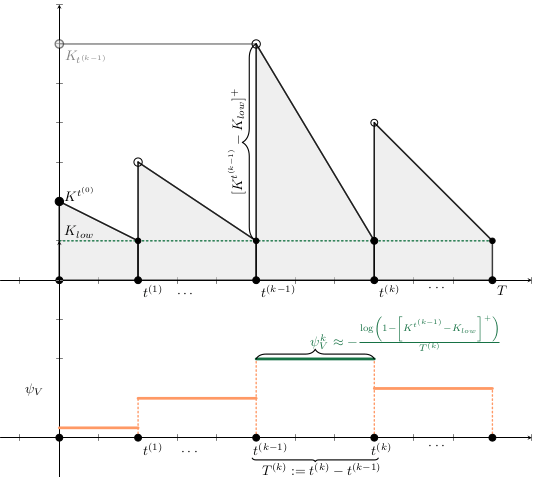

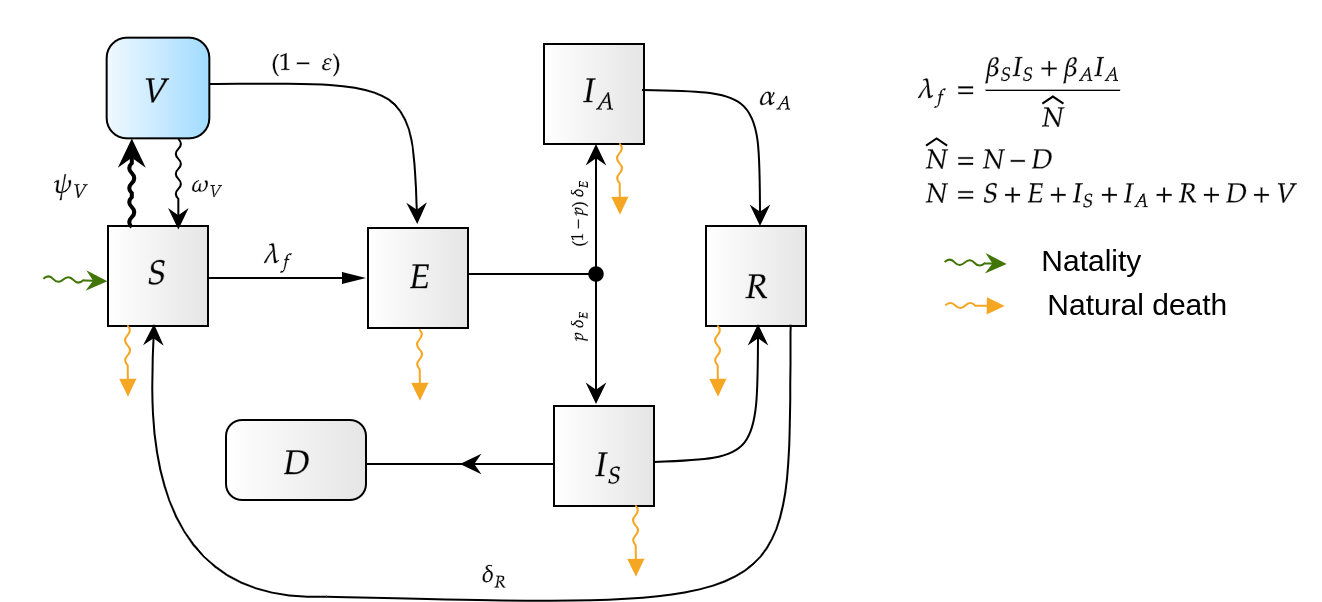

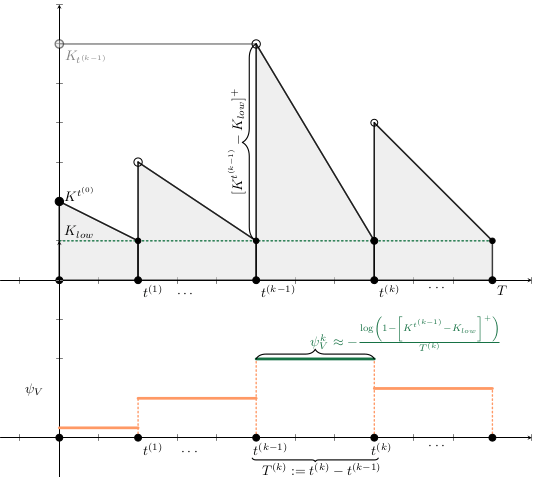

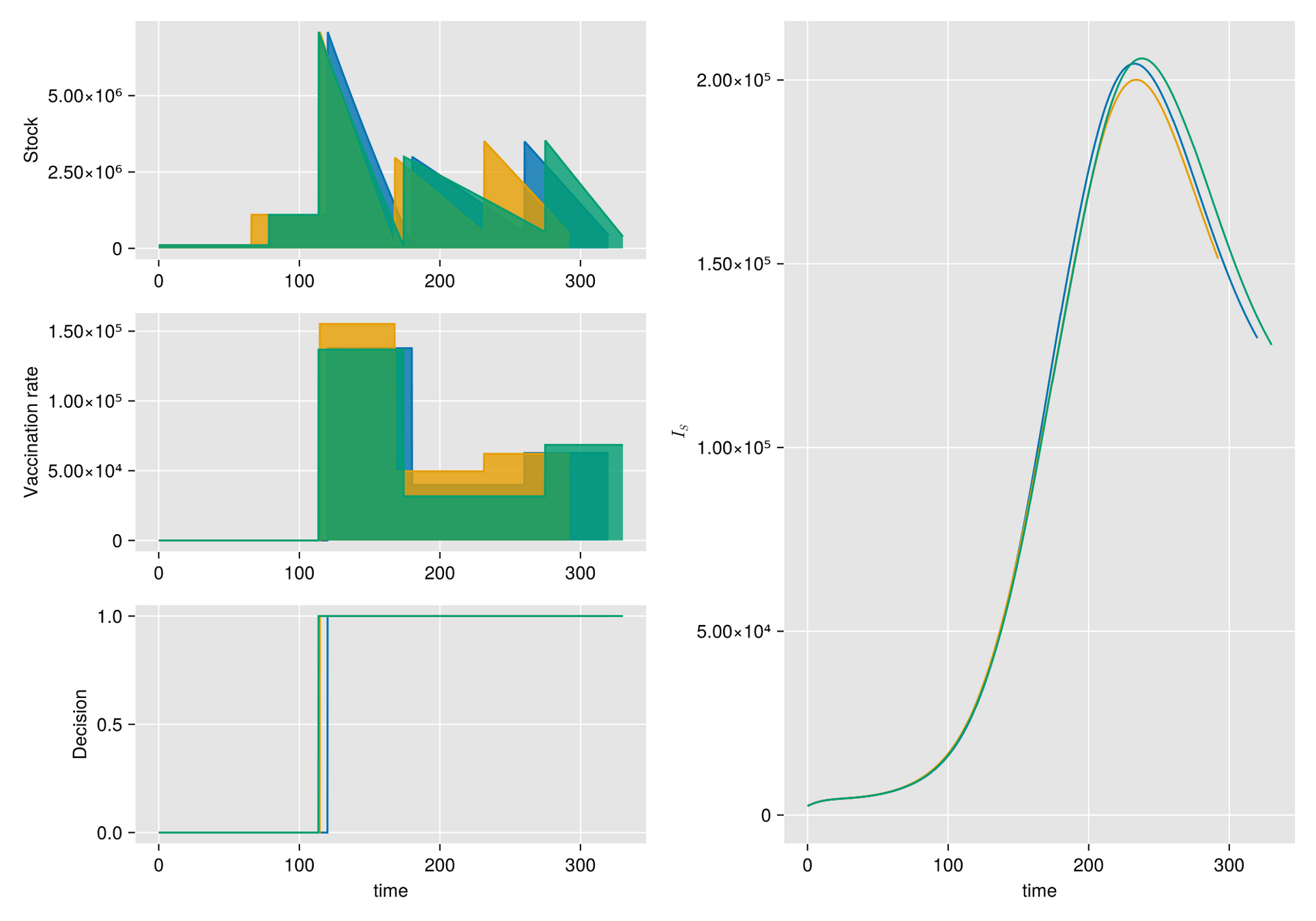

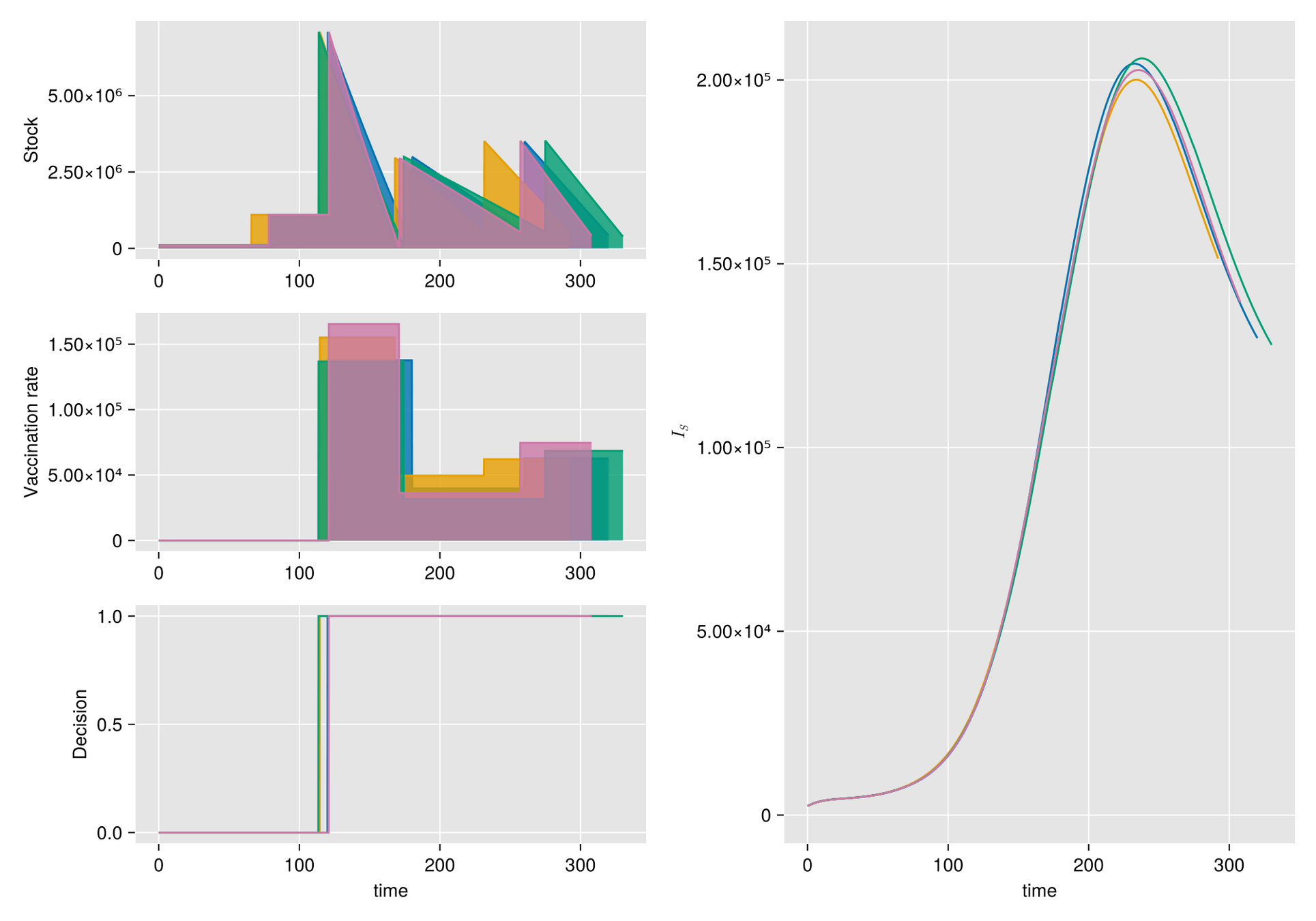

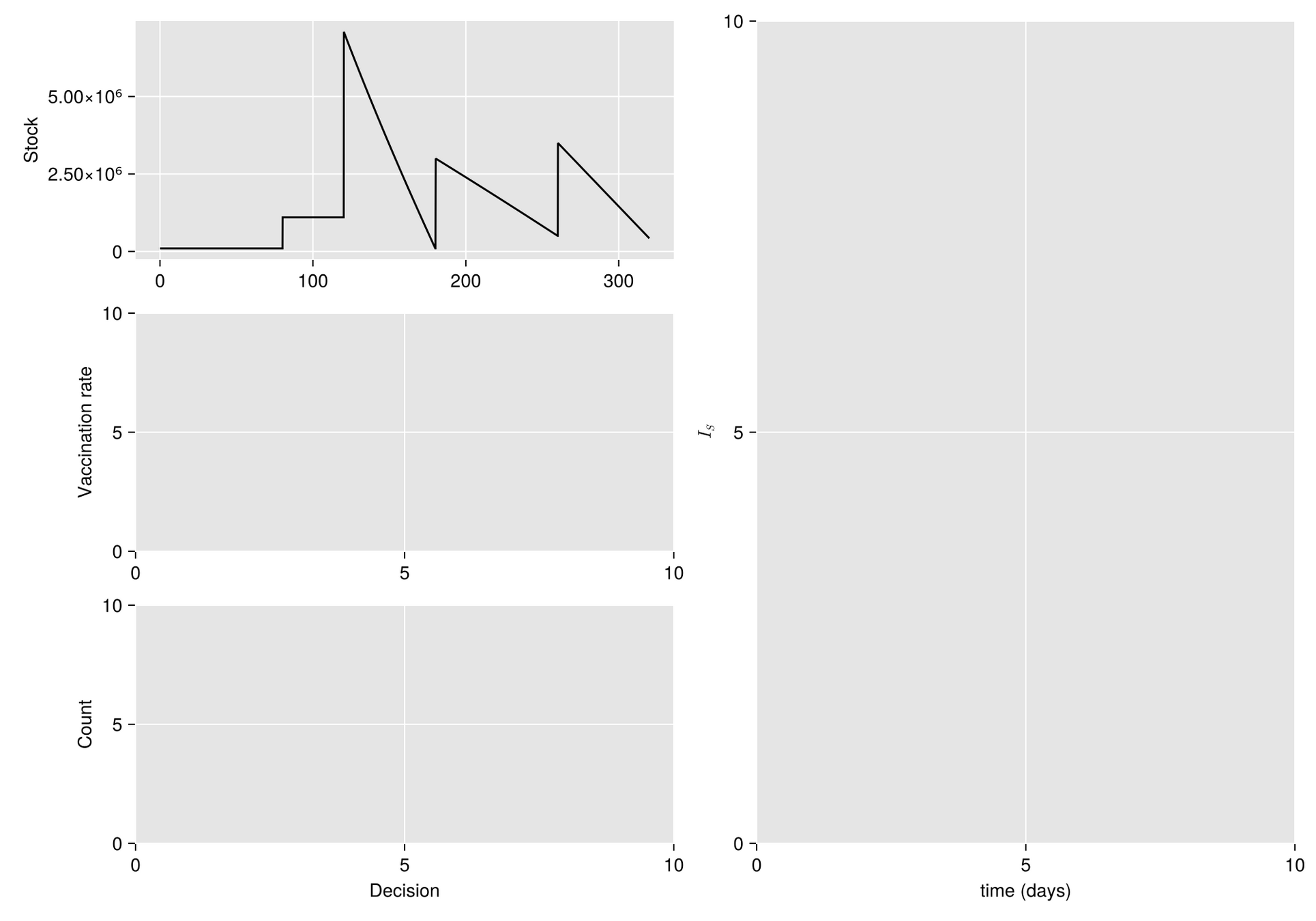

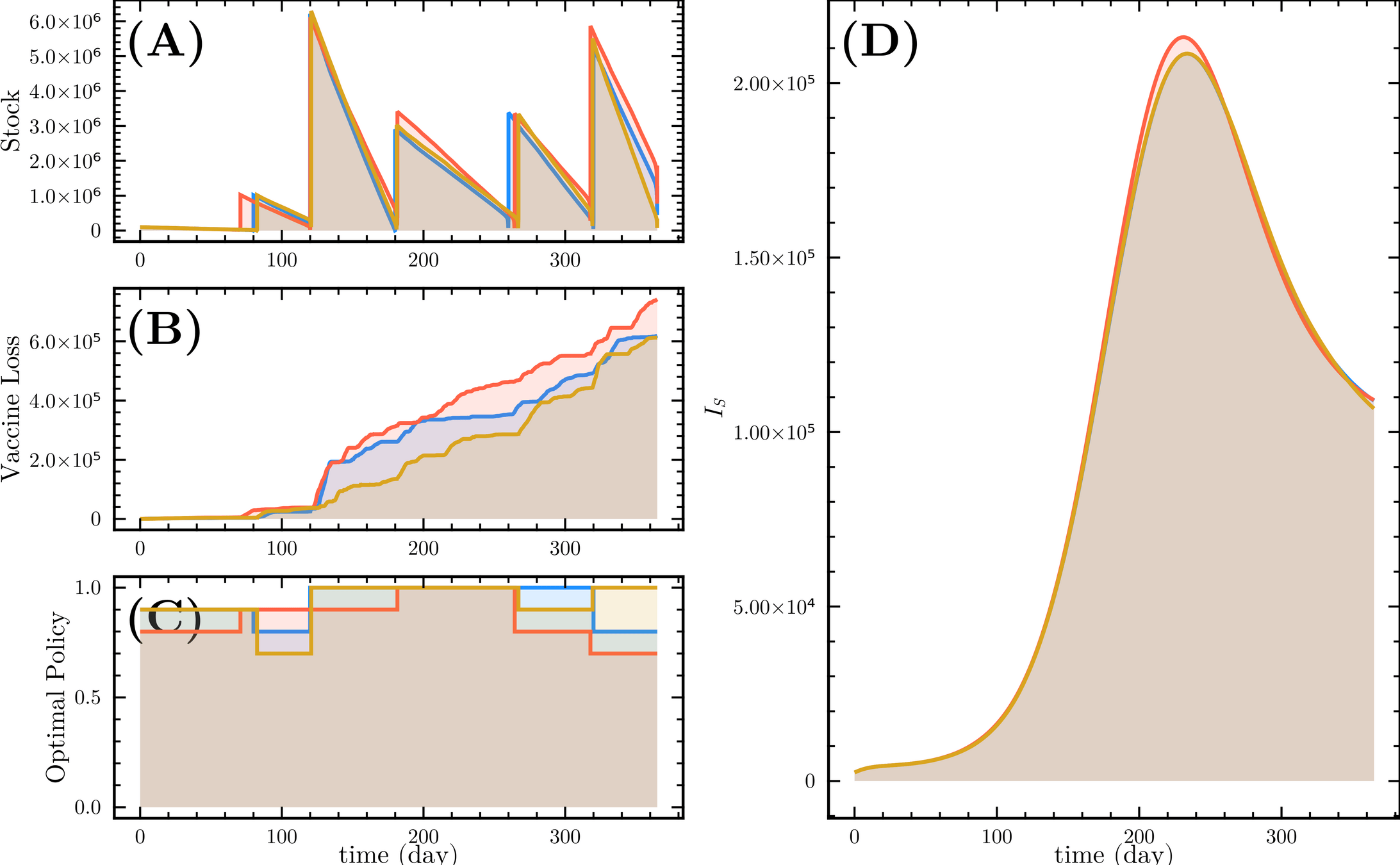

Methods. Given a vaccine shipping schedule, we describe stock management with a backup protocol and quantify the random fluctuations due to a program under high uncertainty.

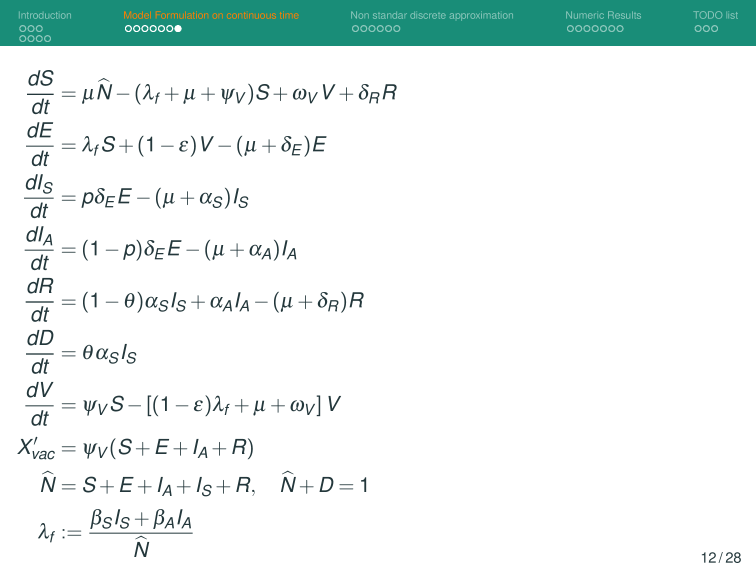

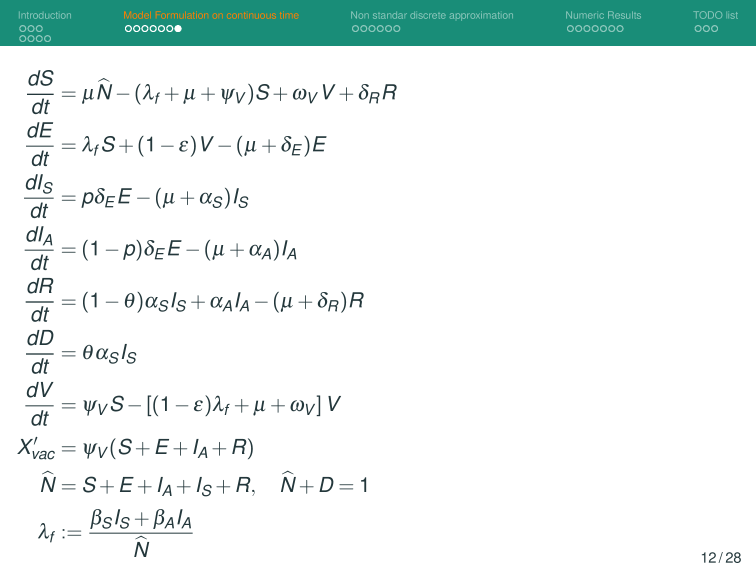

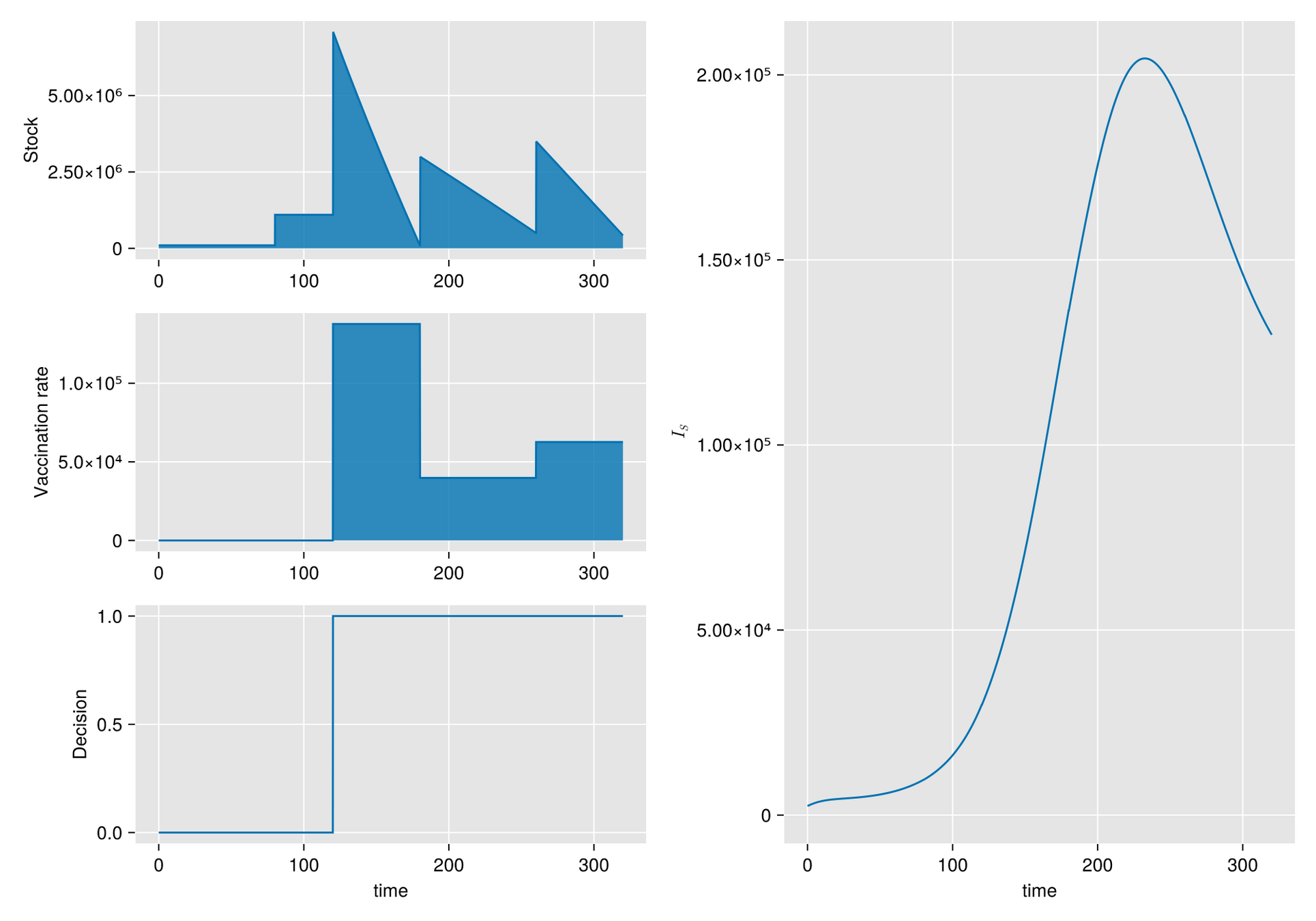

Then, we incorporate this dynamic into a system of ODE that describes the disease and evaluate its response.

Nonlinear control: HJB and DP

Given

Goal:

Desing

to follow

s. t. optimize cost

Agent

Nonlinear control: HJB and DP

Bellman optimality principle

Control Problem

s.t.

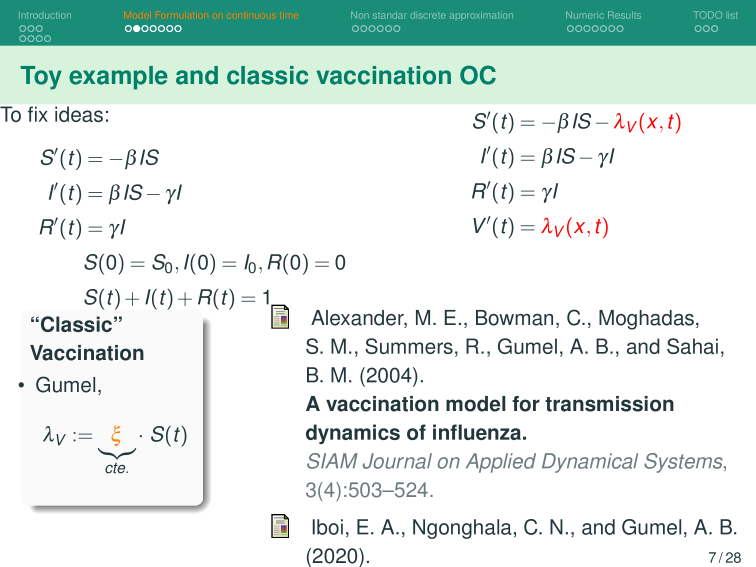

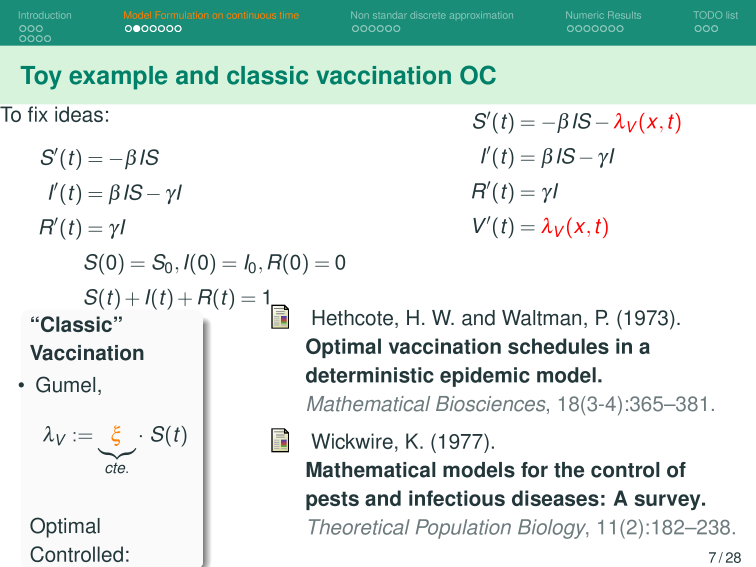

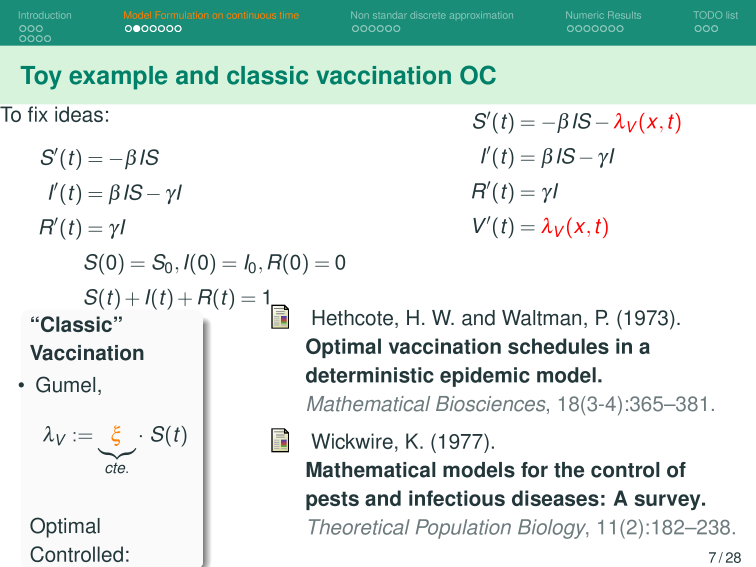

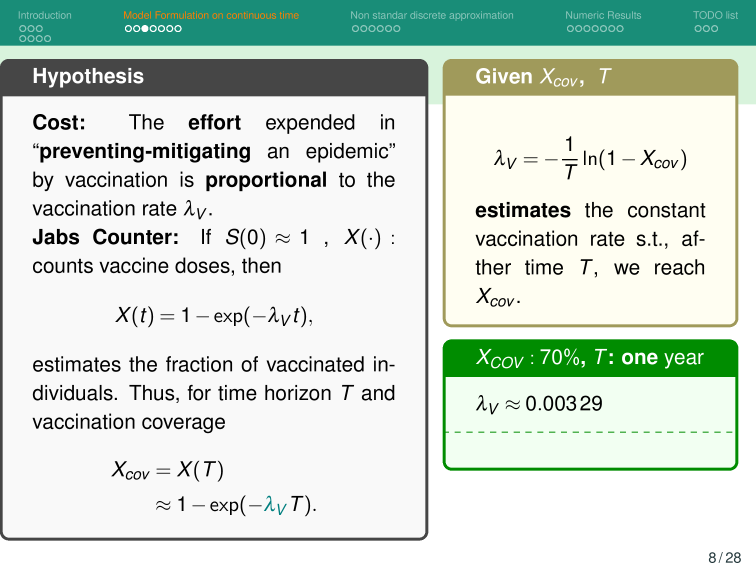

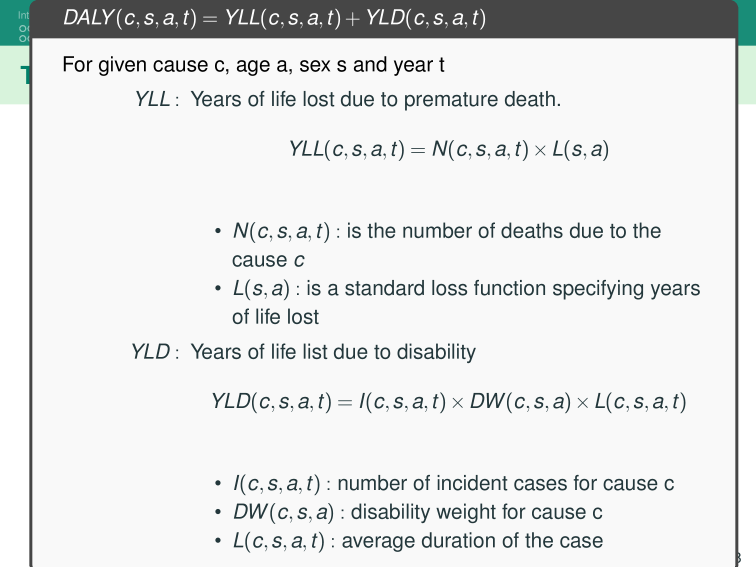

The effort invested in preventing or mitigating an epidemic through vaccination is proportional to the vaccination rate

Let us assume at the beginning of the outbreak:

Then we estimate the number of vaccines with

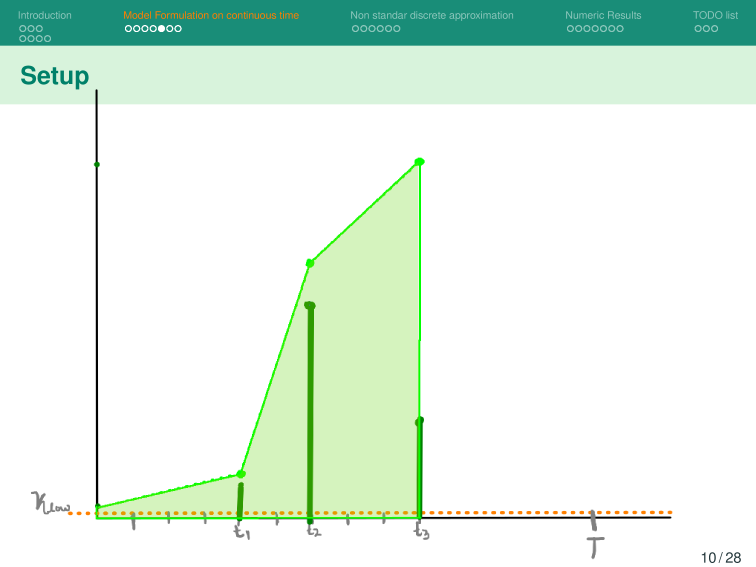

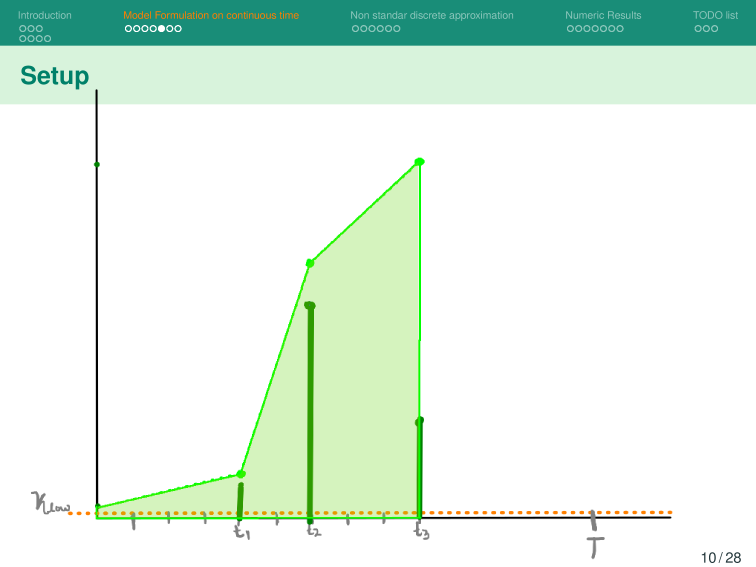

Then, for a vaccination campaign, let:

Then we estimate the number of vaccines with

Then, for a vaccination campaign, let:

Estimated population of Hermosillo, Sonora in 2024 is 930,000.

So to vaccinate 70% of this population in one year:

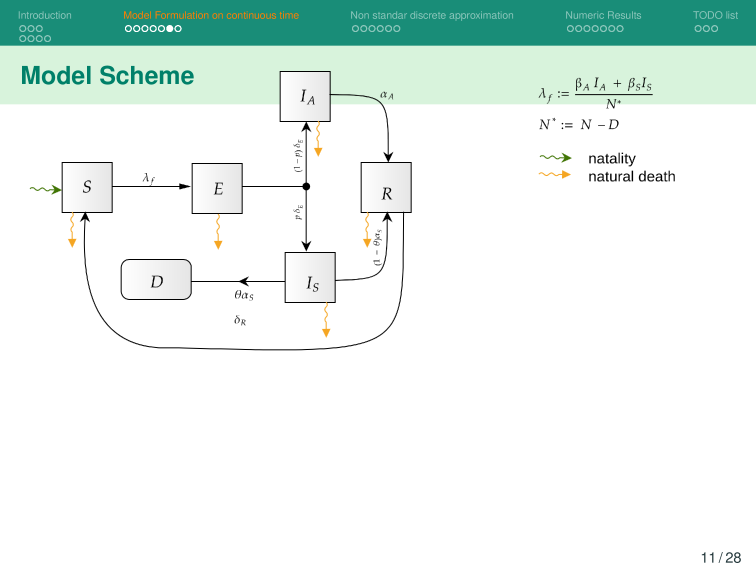

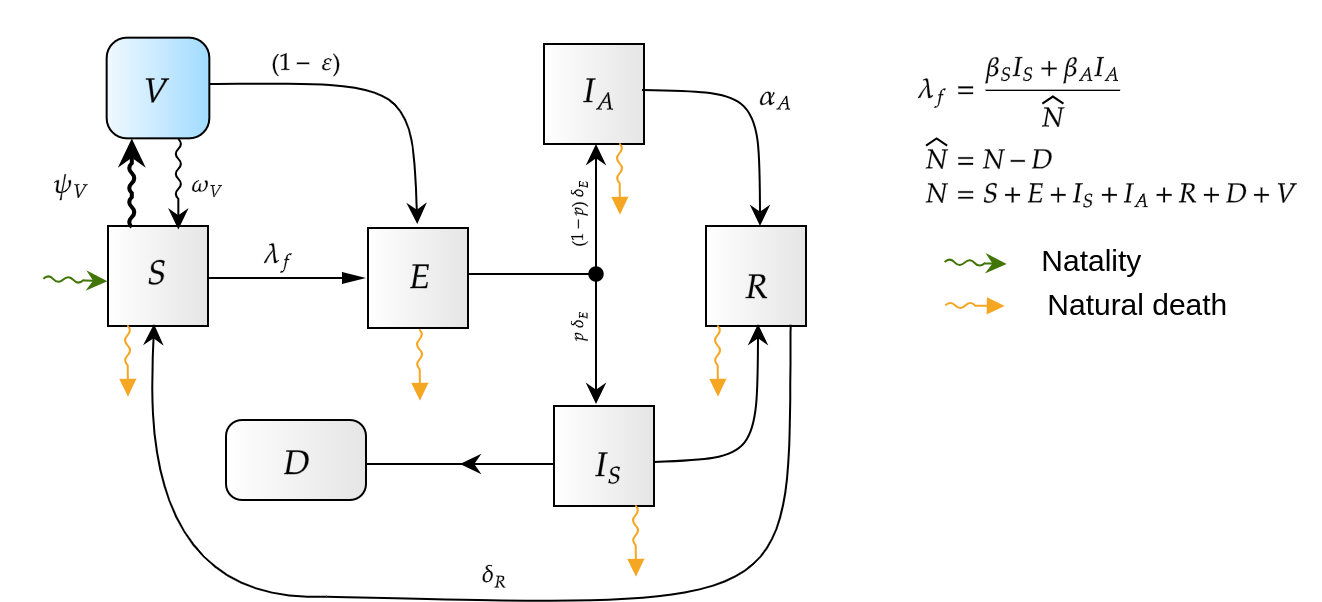

Base Model

Stock degradation due to Temperature

Agent

action

state

reward

HJB (Dynamic Programming)- Curse of dimensionality

HJB(Neuro-Dynamic Programming)

Abstract dynamic programming.

Athena Scientific, Belmont, MA, 2013. viii+248 pp.

ISBN:978-1-886529-42-7

Rollout, policy iteration, and distributed reinforcement learning.

Revised and updated second printing

Athena Sci. Optim. Comput. Ser.

Athena Scientific, Belmont, MA, [2020], ©2020. xiii+483 pp.

ISBN:978-1-886529-07-6

Reinforcement learning and optimal control

Athena Sci. Optim. Comput. Ser.

Athena Scientific, Belmont, MA, 2019, xiv+373 pp.

ISBN: 978-1-886529-39-7

Powell, Warren B.

Reinforcement Learning and Stochastic Optimization: A Unified Framework for Sequential Decisions. United Kingdom: Wiley, 2022.

https://github.com/SaulDiazInfante/rl_vac.jl

https://slides.com/sauldiazinfantevelasco/mexsiam-2025-c12521/fullscreen

GRACIAS!!,

Preguntas?

MexSIAM-2025

By Saul Diaz Infante Velasco

MexSIAM-2025

Explore the fascinating world of dynamic programming and reinforcement learning through the insights of Dimitri P. Bertsekas. Discover techniques like HJB and rollout methods that can enhance your understanding and application in optimization!

- 80