Efficient and Optimal Fixed-Time Regret with Two Experts

Laura Greenstreet, Nick Harvey, Victor Sanches Portella

The Two-Experts' Problem

Prediction with Expert Advice

Player

Adversary

\(n\) Experts

Probabilities: 0.5, 0.1, 0.3, 0.1

Costs: 1, 0, 0.5, 0.3

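Player's loss on round \(t\): \(\langle p_t, \ell_t \rangle\)

\(\mathrm{Regret}(T) = \sum_{t=1}^{T} \langle p_t, \ell_t \rangle - \min_{i} \sum_{t=1}^{T} \ell_t(i)\) (Player's Loss minus Loss of Best Expert)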

Knows \(T\) (fixed-time)

Known and New Results

Multiplicative Weights Update method: regret \(\leq \sqrt{(T/2) \ln n}\)

Optimal for \(n,T \to \infty\) !
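As a rough illustration of the Multiplicative Weights Update method mentioned here, the sketch below runs it on a sequence of cost vectors in \([0,1]^n\) and returns the regret. The function name `mwu_regret` and the step size \(\eta = \sqrt{8 \ln n / T}\) are assumptions made for this example (a standard fixed-time tuning, not taken from the slides); with this tuning the regret is known to be at most \(\sqrt{(T/2) \ln n}\).

```python
import math


def mwu_regret(costs, n):
    """Run Multiplicative Weights on `costs` (a list of length-n cost vectors
    with entries in [0, 1]) and return the player's regret."""
    T = len(costs)
    eta = math.sqrt(8.0 * math.log(n) / T)  # assumed fixed-time step size
    cum = [0.0] * n                          # cumulative loss of each expert
    player_loss = 0.0
    for c in costs:
        m = min(cum)                         # shift exponents for numerical stability
        w = [math.exp(-eta * (L - m)) for L in cum]
        Z = sum(w)
        # Expected cost of the player under the probabilities w / Z.
        player_loss += sum(wi / Z * ci for wi, ci in zip(w, c))
        cum = [L + ci for L, ci in zip(cum, c)]
    return player_loss - min(cum)
```

Note that the step size uses \(T\), so this is a fixed-time player; each round costs \(O(n)\) time.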

If \(n\) is fixed, we can do better

Worst-case regret for 2 experts: \(\sqrt{T/(2\pi)} + O(1)\)

Cover's Algorithm [Cover '67]: \(O(T)\) time per round, Dynamic Programming, \(\{0,1\}\) costs

Our Algorithm: \(O(1)\) time per round, Stochastic Calculus, \([0,1]\) costs

Technique:

Discretize a solution to a stochastic calculus problem

[HLPR - FOCS '20]

How to exploit the knowledge of \(T\)?

We need to analyze the discretization error!

Online Learning

🤝

Stochastic Calculus

Our Results

Result:

An Efficient and Optimal Algorithm in Fixed-Time with Two Experts

\(O(1)\) time per round

was \(O(T)\) before

Holds for general costs!

Technique:

Discretize a solution to a stochastic calculus problem

[HLPR '20]

How to exploit the knowledge of \(T\)?

Non-zero discretization error!

Insight:

Cover's algorithm has connections to stochastic calculus!

This connection seems to extend to more experts and other problems in online learning in general!

Gaps and Cover's Algorithm

Simplifying Assumptions

We will look only at \(\{0,1\}\) costs

Cost vectors: (1, 0), (0, 1), (0, 0), (1, 1)

Equal costs do not affect the regret

Cover's algorithm relies on these assumptions by construction

Our algorithm and analysis extend to fractional costs

Gap between experts

Thought experiment: how much probability mass to put on each expert?

Cumulative Loss on round \(t\)

Cumulative losses (2, 2) or (42, 42): \(\frac{1}{2}\) in both cases seems reasonable!

Cumulative losses 42 (Worst Expert) and 20 (Best Expert): Gap = |42 - 20| = 22

Takeaway: the player's decision may depend only on the gap between the experts' losses (and maybe on \(t\))

Cover's Dynamic Program

Player strategy based on gaps:

Choice doesn't depend on the specific past costs

on the Worst expert

on the Best expert

We can compute \(V^*\) backwards in time via DP!

Max regret to be suffered at time \(t\) with gap \(g\)

\(O(T^2)\) time to compute \(V^*\)

At round \(t\) with gap \(g\)

Max. regret for a game with \(T\) rounds

Computing the optimal strategy \(p^*\) from \(V^*\) is easy!

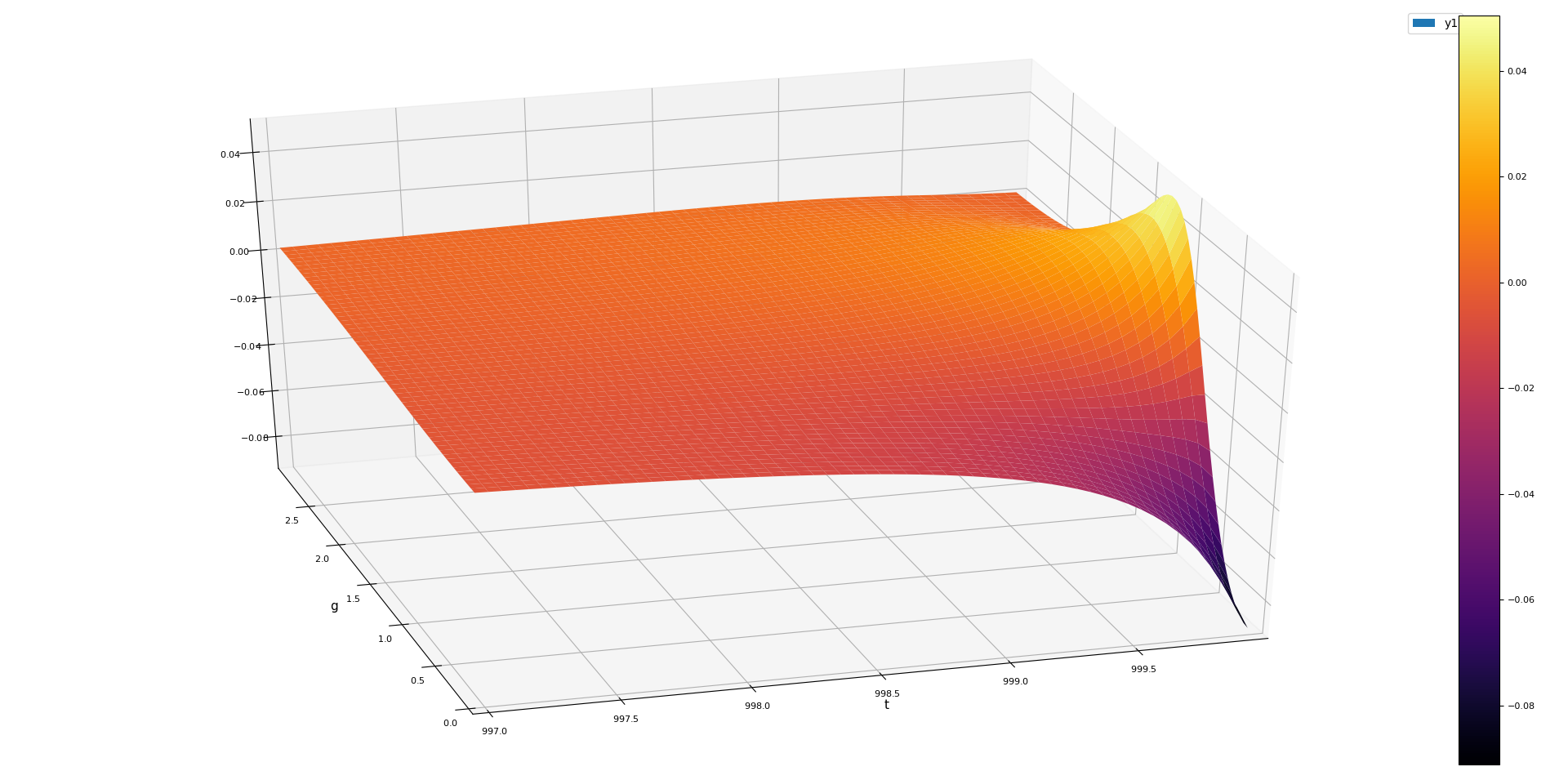

Cover's DP Table

(w/ player playing optimally)

Cover's Dynamic Program

Player strategy based on gaps:

Choice doesn't depend on the specific past costs

on the Lagging expert

on the Leading expert

We can compute \(V^*\) backwards in time via DP!

Getting an optimal player \(p^*\) from \(V^*\) is easy!

Max regret-to-be-suffered at round \(t\) with gap \(g\)

\(O(T^2)\) time to compute the table — \(O(T)\) amortized time per round

At round \(t\) with gap \(g\)

Optimal regret for 2 experts
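The backwards computation of \(V^*\) can be sketched as follows. This is a minimal illustration, assuming the recurrence \(V^*[t,g] = \frac{1}{2}(V^*[t+1,g-1] + V^*[t+1,g+1])\) for \(g > 0\), \(V^*[t,0] = \frac{1}{2} + V^*[t+1,1]\), and \(V^*[T,g] = 0\), with unequal \(\{0,1\}\) costs. The names `cover_table` and `cover_player` are hypothetical.

```python
def cover_table(T):
    """Return V with V[t][g] = max regret-to-be-suffered after round t
    when the gap is g, under optimal play (assumed recurrence above)."""
    # Column T + 1 stays 0: such a gap can never close before the game ends.
    V = [[0.0] * (T + 2) for _ in range(T + 1)]  # row T is all zeros
    for t in range(T - 1, -1, -1):
        V[t][0] = 0.5 + V[t + 1][1]
        for g in range(1, T + 1):
            V[t][g] = 0.5 * (V[t + 1][g + 1] + V[t + 1][g - 1])
    return V


def cover_player(V, t, g):
    """Probability put on the lagging expert at round t (1-indexed),
    when the gap before the round is g."""
    if g == 0:
        return 0.5
    return 0.5 * (V[t][g - 1] - V[t][g + 1])
```

Filling the table takes \(O(T^2)\) time in total; once it is available, each call to `cover_player` is a constant-time lookup, which matches the \(O(T)\) amortized cost per round.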

Connection to Random Walks

Theorem

Player \(p^*\) is also connected to RWs

For \(g_t\) following a Random Walk

Central Limit Theorem

Not clear if the approximation error affects the regret

The DP is defined only for integer costs!

Lagging expert finishes leading

[Cover '67]

# of 0s of a Random Walk of length \(T\)

Let's design an algorithm that is efficient and works for all costs

Bonus: Connections of Cover's algorithm with stochastic calculus
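To make the random-walk connection concrete, here is a small sketch that computes half the expected number of zeros of a reflected simple random walk exactly. It assumes the identity \(V^*[0,0] = \frac{1}{2}\,\mathbb{E}[\#\{t < T : g_t = 0\}]\) for a walk started at \(g_0 = 0\); the slide's exact statement is not reproduced here, and the name `half_expected_zeros` is hypothetical.

```python
import math


def half_expected_zeros(T):
    """0.5 * E[number of t in {0, ..., T-1} with g_t = 0], where g_t is a
    reflected simple random walk started at g_0 = 0."""
    dist = [0.0] * (T + 2)   # dist[g] = P(g_t = g)
    dist[0] = 1.0
    total = 0.0
    for _ in range(T):
        total += dist[0]
        new = [0.0] * (T + 2)
        new[1] += dist[0]    # from gap 0 the walk always moves to gap 1
        for g in range(1, T + 1):
            new[g + 1] += 0.5 * dist[g]
            new[g - 1] += 0.5 * dist[g]
        dist = new
    return 0.5 * total


# For large T this should be close to sqrt(T / (2 * pi)).
approx = math.sqrt(1000 / (2 * math.pi))
```

Comparing `half_expected_zeros(1000)` with `approx` gives a feel for how the Central Limit Theorem approximation behaves.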

Continuous Regret

A Probabilistic View of Regret Bounds

Formula for the regret based on the gaps

Discrete stochastic integral

Moving to continuous time:

Random walk \(\longrightarrow\) Brownian Motion

\(g_0, \dotsc, g_t\) are a realization of a random walk

Useful Perspective:

Deterministic bound = Bound with probability 1
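The gap-based view of the regret can be checked numerically. The sketch below assumes the formula reads \(\mathrm{Regret}(T) = \sum_{t=1}^{T} p(t, g_{t-1})\,(g_t - g_{t-1})\) for a player with \(p(t, 0) = \frac{1}{2}\), where \(p(t, g)\) is the probability on the lagging expert. It compares that sum with the regret computed directly from the costs; both function names are hypothetical.

```python
def regret_direct(costs, player):
    """Regret computed from the definition, for two experts and {0,1} costs.
    `player(t, g)` is the probability put on the lagging expert."""
    L = [0, 0]
    player_loss = 0.0
    for t, (c0, c1) in enumerate(costs, start=1):
        g = abs(L[0] - L[1])
        lag = 0 if L[0] > L[1] else 1     # lagging = larger cumulative loss
        p = player(t, g)
        q = [0.0, 0.0]
        q[lag], q[1 - lag] = p, 1.0 - p
        player_loss += q[0] * c0 + q[1] * c1
        L[0] += c0
        L[1] += c1
    return player_loss - min(L)


def regret_from_gaps(costs, player):
    """Same regret as a discrete stochastic integral against the gaps."""
    L = [0, 0]
    total = 0.0
    for t, (c0, c1) in enumerate(costs, start=1):
        g_prev = abs(L[0] - L[1])
        L[0] += c0
        L[1] += c1
        total += player(t, g_prev) * (abs(L[0] - L[1]) - g_prev)
    return total
```

The two functions agree for any \(\{0,1\}\) cost sequence as long as `player(t, 0)` returns `0.5`; with a different value at gap zero, the tie-breaking choice in `regret_direct` would matter.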

Reflected Brownian motion (gaps)

Conditions on the continuous player \(p\)

Continuous on \([0,T) \times \mathbb{R}\)

\(p(t, 0) = \frac{1}{2}\) for all \(t \geq 0\)

Stochastic Integrals and Itô's Formula

How to work with stochastic integrals?

Itô's Formula:

\(\overset{*}{\Delta} R(t, g) = 0\) everywhere

ContRegret \( = R(T, |B_T|) - R(0,0)\)

Goal:

Find a "potential function" \(R\) such that

(1) \(\partial_g R\) is a valid continuous player

(2) \(R\) satisfies the Backwards Heat Equation

Different from classic FTC!

Backwards Heat Equation
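For reference, the statement used on this slide can be written out as below. This is a sketch under stated assumptions: \(R\) is taken to be smooth enough (once differentiable in \(t\), twice in \(g\)), \(\overset{*}{\Delta}\) denotes the backwards heat operator, and the continuous regret of a player \(p\) is taken to be \(\int_0^T p(t, |B_t|)\,\mathrm{d}|B_t|\).

```latex
\[
\overset{*}{\Delta} R \;:=\; \partial_t R + \tfrac{1}{2}\,\partial_{gg} R
\]
\[
R(T, |B_T|) - R(0, 0)
  \;=\; \int_0^T \partial_g R(t, |B_t|)\,\mathrm{d}|B_t|
  \;+\; \int_0^T \overset{*}{\Delta} R(t, |B_t|)\,\mathrm{d}t
\]
```

The second integral is the term with no counterpart in the classic Fundamental Theorem of Calculus. When \(\overset{*}{\Delta} R = 0\) everywhere it vanishes, and the continuous regret of the player \(\partial_g R\) equals \(R(T, |B_T|) - R(0,0)\).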

How to find a good \(R\)?

Suffices to find a player \(p\) satisfying the BHE

\(\approx\) Cover's solution!

Also a solution to an ODE

Then setting \(R\) to be a suitable antiderivative of \(p\) in \(g\) preserves BHE and gives \(\partial_g R = p\)

[C-BL 06]

A Solution Inspired by Cover's Algorithm

From Cover's algorithm, we have

We can find \(R(t,g)\) such that

\(\overset{*}{\Delta} R = 0\)

\(\partial_g R = Q\)

Potential \(R\) satisfying BHE?

Player \(Q\) satisfies the BHE!

By Itô's Formula:

(BHE)
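The claim that the player \(Q\) satisfies the BHE can be sanity-checked with finite differences. The closed form used below, \(Q(t, g) = \Pr[\mathcal{N}(0, T - t) > g] = \frac{1}{2}\operatorname{erfc}\big(g / \sqrt{2(T - t)}\big)\), is an assumption for this sketch (the formula itself is not reproduced on the slide), and `bhe_residual` is a hypothetical helper.

```python
import math


def Q(t, g, T):
    """Assumed continuous player: P(N(0, T - t) > g), valid for t < T."""
    return 0.5 * math.erfc(g / math.sqrt(2.0 * (T - t)))


def bhe_residual(t, g, T, h=1e-3):
    """Central-difference estimate of dQ/dt + 0.5 * d^2Q/dg^2 at (t, g)."""
    d_t = (Q(t + h, g, T) - Q(t - h, g, T)) / (2.0 * h)
    d_gg = (Q(t, g + h, T) - 2.0 * Q(t, g, T) + Q(t, g - h, T)) / (h * h)
    return d_t + 0.5 * d_gg
```

A residual close to zero at a few interior points, for example `bhe_residual(3.0, 1.5, 10.0)`, is consistent with \(Q\) solving the backwards heat equation. Evaluating `Q` takes constant time, which is where the \(O(1)\) per-round cost comes from.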

Discretization

Discrete Itô's Formula

How to analyze a discrete algorithm coming from stochastic calculus?

Discrete Itô's Formula!

Discrete Derivatives

Surprisingly, we can analyze Cover's algorithm with discrete Itô's formula

Itô's Formula

Discrete Itô's Formula

Discrete Algorithms

\(V^*\) satisfies the "discrete" Backwards Heat Equation!

Not Efficient

Efficient

Discrete Itô \(\implies\)

Regret of \(p^* \leq V^*[0,0]\)

BHE = Optimal?

Hopefully, \(R\) satisfies the discrete BHE

Discretized player:

We show the total is \(\leq 1\)

Cover's strategy

Bounding the Discretization Error

In the work of Harvey et al., they had

In this fixed-time solution, we are not as lucky.

Negative discretization error!

We show the total discretization error is always \(\leq 1\)
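One plausible way to discretize the continuous solution is sketched below. Both ingredients are assumptions and not taken from the slides: the closed form \(R(t,g) = \frac{g}{2}\operatorname{erfc}\big(\frac{g}{\sqrt{2(T-t)}}\big) - \sqrt{\frac{T-t}{2\pi}}\, e^{-g^2/(2(T-t))}\) (whose \(g\)-derivative is \(\frac{1}{2}\operatorname{erfc}\big(g/\sqrt{2(T-t)}\big)\)), and the choice of a central discrete derivative in \(g\) as the player. The exact time indexing of the actual algorithm may differ.

```python
import math


def R(t, g, T):
    """Assumed potential function; its limit as t -> T is 0 for g >= 0."""
    tau = T - t
    if tau <= 0:
        return 0.0
    return (0.5 * g * math.erfc(g / math.sqrt(2.0 * tau))
            - math.sqrt(tau / (2.0 * math.pi)) * math.exp(-g * g / (2.0 * tau)))


def discretized_player(t, g, T):
    """Probability on the lagging expert at round t with integer gap g:
    1/2 at gap zero, otherwise a central discrete derivative of R in g."""
    if g == 0:
        return 0.5
    return 0.5 * (R(t, g + 1, T) - R(t, g - 1, T))
```

Each call is \(O(1)\) and needs no table. The price is that `R` only satisfies the continuous BHE, so the discrete analysis has to account for the discretization error.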

Our Results

An Efficient and Optimal Algorithm in Fixed-Time with Two Experts

Technique:

Solve an analogous continuous-time problem, and discretize it

[HLPR '20]

How to exploit the knowledge of \(T\)?

Discretization error needs to be analyzed carefully.

BHE seems to play a role in other problems in OL as well!

Solution based on Cover's alg

Or inverting time in an ODE!

We show \(\leq 1\)

\(V^*\) and \(p^*\) satisfy the discrete BHE!

Insight:

Cover's algorithm has connections to stochastic calculus!

Questions?

Known Results

Multiplicative Weights Update method: regret \(\leq \sqrt{(T/2) \ln n}\)

Optimal for \(n,T \to \infty\) !

If \(n\) is fixed, we can do better

\(n = 2\)

\(n = 3\)

\(n = 4\)

Player knows \(T\) !

Minmax regret in some cases:

What if \(T\) is not known?

Minmax regret

\(n = 2\)

[Harvey, Liaw, Perkins, Randhawa FOCS 2020]

They give an efficient algorithm!

Regret and Player in terms of the Gap

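Path-independent player: on round \(t\), with gap \(g_{t-1}\) on round \(t-1\), put \(p(t, g_{t-1})\) on the Lagging expert and \(1 - p(t, g_{t-1})\) on the Leading expert

Choice doesn't depend on the specific past costs

A formula for the regret: if \(p(t, 0) = \frac{1}{2}\) for all \(t\), then \(\mathrm{Regret}(T) = \sum_{t=1}^{T} p(t, g_{t-1}) (g_t - g_{t-1})\), where \(g_t\) is the gap on round \(t\)

A discrete analogue of a Riemann-Stieltjes integral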

A Dynamic Programming View

\(V_p[t,g]\): maximum regret-to-be-suffered on rounds \(t+1, \dotsc, T\) if the gap at round \(t\) is \(g\)

Path-independent player \(\implies\) \(V_p[t,g]\) depends only on \(\ell_{t+1}, \dotsc, \ell_T\) and \(g_t, \dotsc, g_{T}\)

\(V_p[t,g]\) is the maximum, over the costs on round \(t+1\), of the regret suffered on round \(t+1\) plus \(V_p[t+1, g_{t+1}]\)

We can compute \(V_p\) backwards in time!

\(V_p[0,0]\): maximum regret of \(p\)

We then choose \(p^*\) that minimizes \(V_p[0,0]\), so that \(V^*[0,0] = V_{p^*}[0,0]\)
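The backwards computation of \(V_p\) for an arbitrary path-independent player can be sketched as follows. The sketch assumes an adversary restricted to unequal \(\{0,1\}\) costs (so the gap moves by exactly one each round) and a player given as a function `player(t, g)` returning the probability on the lagging expert; the name `max_regret` is hypothetical.

```python
def max_regret(player, T):
    """V_p[0][0]: worst-case regret of a path-independent player over T rounds,
    against an adversary restricted to unequal {0,1} costs (assumption)."""
    V = [[0.0] * (T + 1) for _ in range(T + 1)]  # V[T][g] = 0
    for t in range(T - 1, -1, -1):
        for g in range(0, t + 1):                # gaps reachable after t rounds
            p = player(t + 1, g)
            if g == 0:
                # Tie: the adversary charges the expert carrying more mass.
                V[t][g] = max(p, 1.0 - p) + V[t + 1][1]
            else:
                # Cost on the lagging expert (+p), or on the leading one (-p).
                V[t][g] = max(p + V[t + 1][g + 1], -p + V[t + 1][g - 1])
    return V[0][0]


# Two simple players for comparison.
def follow_the_leader(t, g):
    return 0.5 if g == 0 else 0.0


def uniform(t, g):
    return 0.5
```

For instance, `max_regret(uniform, 3)` evaluates to `1.5` and `max_regret(follow_the_leader, 3)` to `1.0`; minimizing over players at each state recovers the optimal table \(V^*\).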

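A Dynamic Programming View

Optimal player

For \(g > 0\): \(p^*(t, g) = \frac{1}{2}\big(V^*[t, g-1] - V^*[t, g+1]\big)\)

For \(g = 0\): \(p^*(t, 0) = \frac{1}{2}\)

Optimal regret (\(V^* = V_{p^*}\))

For \(g > 0\): \(V^*[t, g] = \frac{1}{2}\big(V^*[t+1, g-1] + V^*[t+1, g+1]\big)\)

For \(g = 0\): \(V^*[t, 0] = \frac{1}{2} + V^*[t+1, 1]\)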

Discrete Derivatives

Bounding the Discretization Error

Main idea

\(R\) satisfies the continuous BHE

Approximation error of the derivatives

Lemma

Known and New Results

Multiplicative Weights Update method: regret \(\leq \sqrt{(T/2) \ln n}\)

Optimal for \(n,T \to \infty\) !

If \(n\) is fixed, we can do better

Worst-case regret for 2 experts

Player knows \(T\) (fixed-time) [Cover '67]: \(O(T)\) time per round, Dynamic Programming, \(\{0,1\}\) costs

Player doesn't know \(T\) (anytime) [Harvey, Liaw, Perkins, Randhawa FOCS 2020]: \(O(1)\) time per round, Stochastic Calculus, \([0,1]\) costs

Question: Is there an efficient algorithm for the fixed-time case?

Ideally an algorithm that works for general costs!

ALT 2022 - Two Experts

By Victor Sanches Portella
