Lower Bounds for Private Estimation of Gaussian Covariance Matrices under All Reasonable Parameter Regimes

Victor Sanches Portella

joint work with Nick Harvey (UBC)

July 2, 2025

ime.usp.br/~victorsp

Private Covariance Estimation

Unknown Covariance Matrix

\((\varepsilon, \delta)\)-differentially private \(\mathcal{M}\) to estimate \(\Sigma\)

on \(\mathbb{R}^d\)

Goal:

Required even without privacy

Required even for \(d = 1\)

[Karwa & Vadhan '18]

Is this tight?

\((\varepsilon, \delta)\)-DP \(\mathcal{M}\) with accuracy

samples

Known algorithmic results

with

(The output of \(\mathcal{M}(X)\) does not change much if we switch one \(x_i\))
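For reference, the informal statement above corresponds to the standard definition of \((\varepsilon, \delta)\)-differential privacy. The symbols \(X'\) (a dataset differing from \(X\) in a single sample) and \(S\) (a measurable set of outputs) are notation introduced here and do not appear on the slides:

\[
\Pr[\mathcal{M}(X) \in S] \;\le\; e^{\varepsilon} \, \Pr[\mathcal{M}(X') \in S] + \delta
\qquad \text{for all neighboring } X, X' \text{ and all measurable } S .
\]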

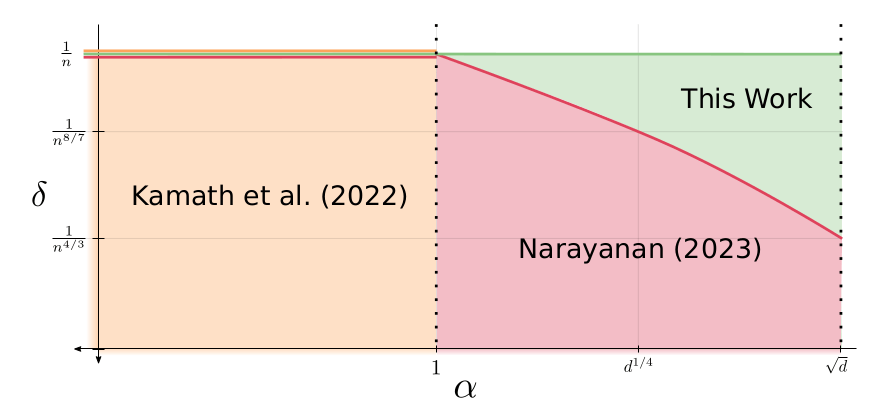

Our Results - New Lower Bounds

Theorem

For any \((\varepsilon, \delta)\)-DP algorithm \(\mathcal{M}\) such that

and

we have

Above 1/n, DP may not be meaningful

Previous

Lower Bounds

Accuracy \(\alpha^2\)

New Fingerprinting Lemma using

Stokes' Theorem

Follow up:

LBs for other problems

[Lyu & Talwar '25]

Lower Bounds via Fingerprinting

Measures correlation between a point \(z\) and \(\mathcal{M}(X)\)

Correlation statistic

If \(z \sim \mathcal{N}(0, \Sigma)\) indep. of \(X\)

small

If \(\mathcal{M}\) is accurate

large

Fingerprinting Lemma

Approx. equal by privacy

Approx. equal by privacy
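The gap between the "small" and "large" cases can be seen in a toy one-dimensional simulation. This is only a sketch under stated assumptions: the statistic `corr_stat`, defined as \((z^2 - \sigma^2)(\mathcal{M}(X) - \sigma^2)\), and the non-private estimator `empirical_variance` are hypothetical stand-ins chosen for illustration, not the statistic or mechanism from the talk.

```python
import random

def empirical_variance(xs):
    # Non-private estimate of sigma^2 for zero-mean data (illustrative stand-in for M).
    return sum(x * x for x in xs) / len(xs)

def corr_stat(z, estimate, sigma2):
    # Hypothetical 1-D correlation statistic: (z^2 - sigma^2) * (M(X) - sigma^2).
    return (z * z - sigma2) * (estimate - sigma2)

def simulate(n=50, sigma2=1.0, trials=2000, seed=0):
    rng = random.Random(seed)
    sd = sigma2 ** 0.5
    in_total = 0.0   # statistic summed over the points that M actually saw
    out_total = 0.0  # statistic for a fresh point z independent of X
    for _ in range(trials):
        xs = [rng.gauss(0.0, sd) for _ in range(n)]
        est = empirical_variance(xs)
        in_total += sum(corr_stat(x, est, sigma2) for x in xs)
        z = rng.gauss(0.0, sd)
        out_total += corr_stat(z, est, sigma2)
    return in_total / trials, out_total / trials

in_avg, out_avg = simulate()
print("in-sample sum (average over trials):", in_avg)
print("fresh point   (average over trials):", out_avg)
```

For this particular estimator the in-sample sum equals \(n(\mathcal{M}(X) - \sigma^2)^2\), so it is never negative and has expectation \(2\sigma^4\), while the fresh-point statistic has expectation zero. A private \(\mathcal{M}\) cannot let any single sample move the statistic far from the fresh-point case, which is the tension the fingerprinting argument exploits.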

Example:

Randomizing \(\Sigma\)

To get an FP lemma, we need to randomize \(\Sigma\)

How to choose the distribution of \(\Sigma\)?

Narayanan (2023) drops independence with a Bayesian argument

otherwise, there is \(\mathcal{M}\) that knows \(\Sigma\) and ignores \(X\)

Most FP lemmas take independent coordinates

Prove a 1-dimensional FP lemma and apply it to each coordinate

For covariance, this works only for high-accuracy \(\mathcal{M}\) (Kamath et al. 2022)

Our idea: Many FP Lemmas use Stein's identity (Integration by parts)

How to do something similar in high dimensions?
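As a reminder, Stein's identity in one dimension reads as follows. The function \(f\) (differentiable, with \(\mathbb{E}|f'(z)| < \infty\)) and the parameters \(\mu, \sigma^2\) are notation introduced here for illustration:

\[
z \sim \mathcal{N}(\mu, \sigma^2)
\quad \Longrightarrow \quad
\mathbb{E}\big[(z - \mu)\, f(z)\big] \;=\; \sigma^2 \, \mathbb{E}\big[f'(z)\big] .
\]

It follows from integration by parts against the Gaussian density, which is why it moves a derivative from the density onto \(f\).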

Score Statistic

Gaussian Score function

Score Attack Statistic

First step: Pick a different correlation statistic

"Usual" choice

[Cai et al. 2023]
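The formulas on this slide did not survive extraction, so the following is a minimal sketch under assumptions rather than the talk's exact definitions. It uses the standard score of a zero-mean Gaussian, \(\tfrac{1}{2}(\Sigma^{-1} x x^{\mathsf T} \Sigma^{-1} - \Sigma^{-1})\) (treating the entries of \(\Sigma\) as free variables), and assumes the score attack statistic has the form \(\langle \mathcal{M}(X) - \Sigma, \text{score}(z) \rangle\). The helper names `inv2`, `gaussian_score`, and `score_attack` are hypothetical, and the code is restricted to \(d = 2\) to stay dependency-free.

```python
def inv2(S):
    # Inverse of a 2x2 matrix given as nested lists.
    (a, b), (c, d) = S
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def gaussian_score(x, Sigma):
    # Score of N(0, Sigma) at x with respect to Sigma:
    #   0.5 * (Sigma^{-1} x x^T Sigma^{-1} - Sigma^{-1}).
    P = inv2(Sigma)
    u = [P[0][0] * x[0] + P[0][1] * x[1],
         P[1][0] * x[0] + P[1][1] * x[1]]  # u = Sigma^{-1} x
    return [[0.5 * (u[i] * u[j] - P[i][j]) for j in range(2)] for i in range(2)]

def score_attack(z, estimate, Sigma):
    # Assumed form of the statistic: trace inner product <M(X) - Sigma, score(z)>.
    S = gaussian_score(z, Sigma)
    return sum((estimate[i][j] - Sigma[i][j]) * S[i][j]
               for i in range(2) for j in range(2))

identity = [[1.0, 0.0], [0.0, 1.0]]
estimate = [[1.0, 0.0], [0.0, 0.5]]  # hypothetical output of M(X)
print(gaussian_score([1.0, 0.0], identity))
print(score_attack([1.0, 0.0], estimate, identity))
```

Because the score has mean zero under \(z \sim \mathcal{N}(0, \Sigma)\), the statistic has mean zero whenever \(z\) is independent of the estimate, which matches the "small" case of the fingerprinting argument.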

New Fingerprinting Lemma

\(\Sigma \sim\) Wishart leads to elegant analysis

Stein-Haff Identity

Want to "Move the derivative" with integration by parts

Stokes' Theorem

Divergence of \(\Sigma \mapsto \mathbb{E}[\mathcal{M}(X) \; | \; \Sigma]\)

Large if \(\mathbb{E}[\mathcal{M}(X) \; | \; \Sigma]\approx \Sigma\)

Main property:

\(\Sigma\) is random with density \(p\)
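The "move the derivative" step can be sketched generically as integration by parts against the density \(p\). Here \(g(\Sigma) = \mathbb{E}[\mathcal{M}(X) \mid \Sigma]\) is shorthand introduced for this sketch, and the boundary term from the divergence (Stokes') theorem is assumed to vanish. The precise statement used in the talk is not recoverable from the slides:

\[
\mathbb{E}_{\Sigma \sim p}\big[\operatorname{div} g(\Sigma)\big]
= \int \operatorname{div} g(\Sigma)\, p(\Sigma)\, d\Sigma
= -\int \big\langle g(\Sigma), \nabla p(\Sigma) \big\rangle \, d\Sigma
= -\,\mathbb{E}_{\Sigma \sim p}\big[\big\langle g(\Sigma), \nabla \log p(\Sigma) \big\rangle\big] .
\]

If \(g(\Sigma) \approx \Sigma\), the divergence on the left is large, which is the sense in which an accurate \(\mathcal{M}\) forces a large correlation.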

Summary

Our results

Score Attack Statistic

Tight lower bounds for private covariance estimation

over a broad parameter regime

Stein-Haff Identity

Thanks!

Technical Secret Sauce:

New Fingerprinting Lemma

Randomizing \(\Sigma\)

To get an FP lemma, we need to randomize \(\Sigma\)

\(\Sigma\) with "small variance" \(\implies\)

FP Lemma only for high-accuracy \(\mathcal{M}\)

\(\Sigma\) with "large variance" \(\implies\)

hard to upper bound \(\mathbb{E}[|\mathcal{C}(z, \mathcal{M}(X))|]\) for independent \(z\)

If \(\mathcal{M}\) is accurate

large

Fingerprinting Lemma

is accurate and ignores \(X\)

otherwise, there is \(\mathcal{M}\) that knows \(\Sigma\) and ignores \(X\)

FP Lemma

Upper Bound

COLT 2025 Talk
